Video action detection method based on central point trajectory prediction

A motion detection and trajectory prediction technology, applied to neural learning methods, instruments, biological neural network models, and related fields, offering strong scalability and portability, good robustness, and high efficiency.


Embodiment Construction

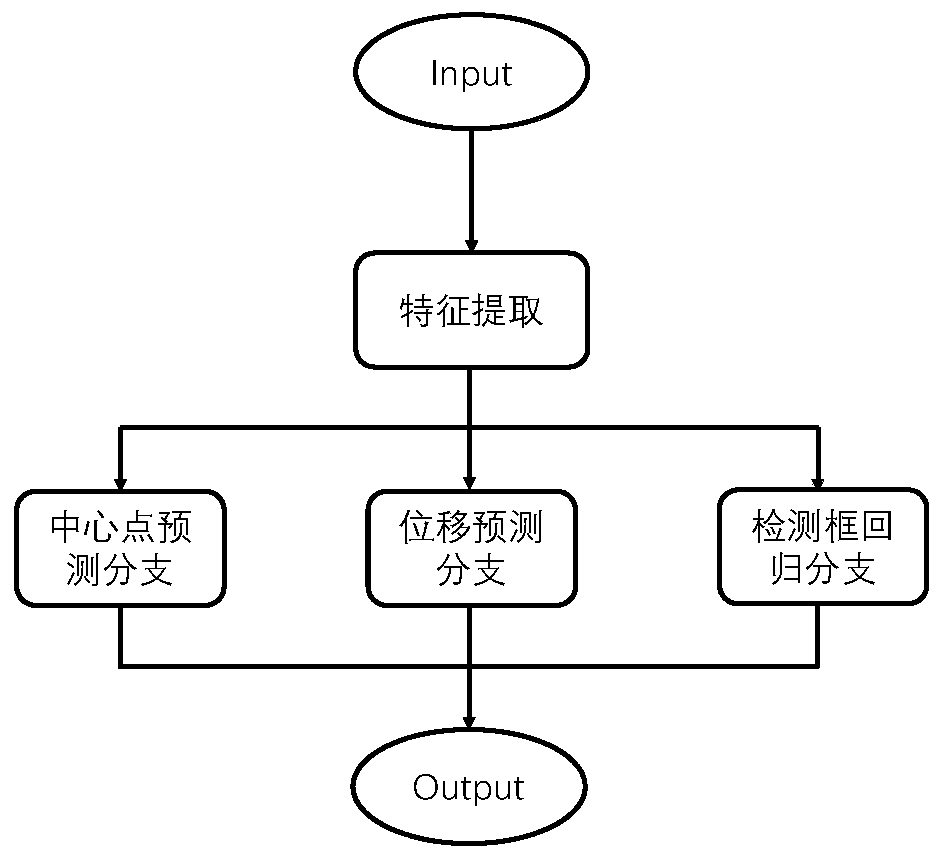

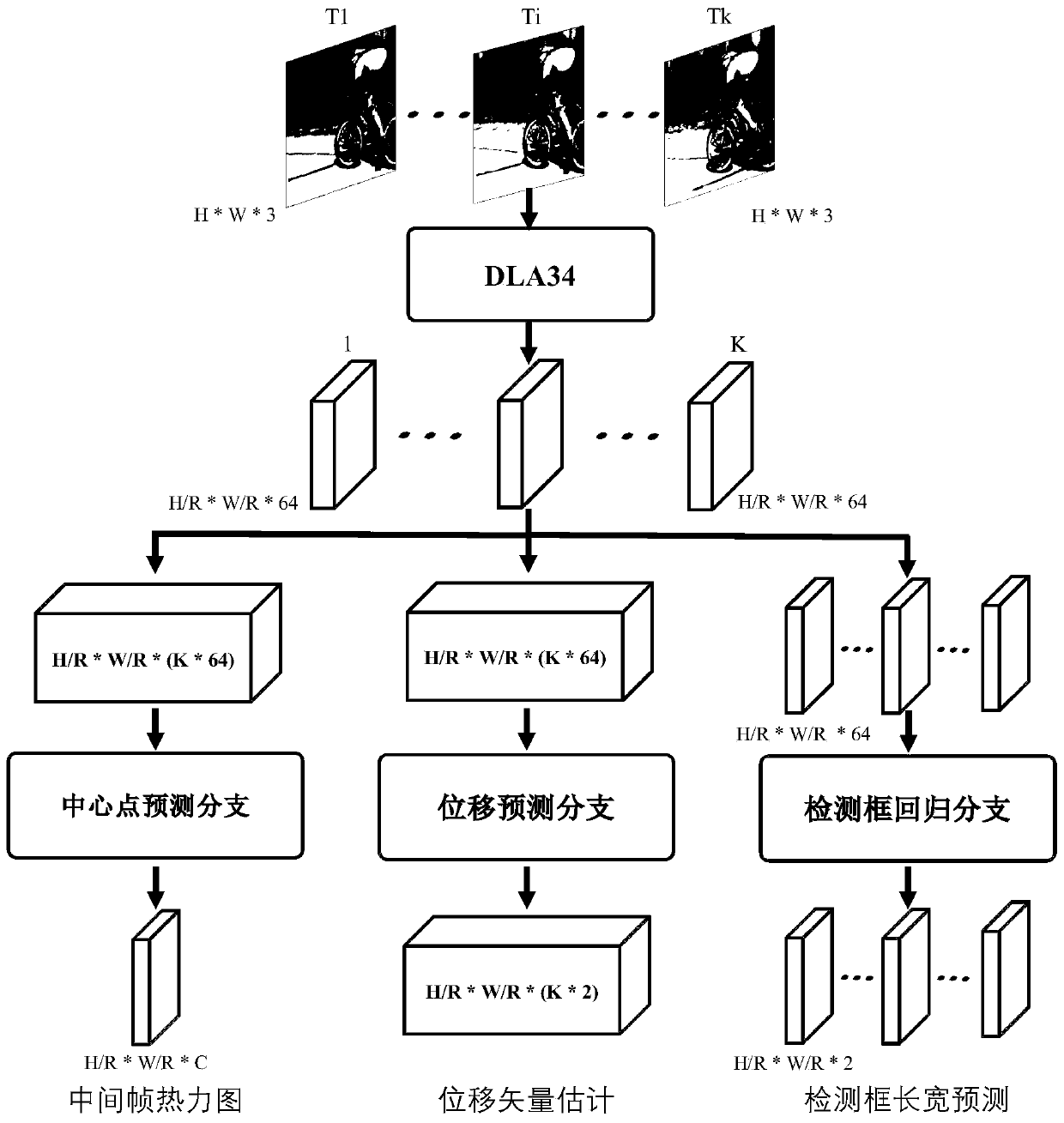

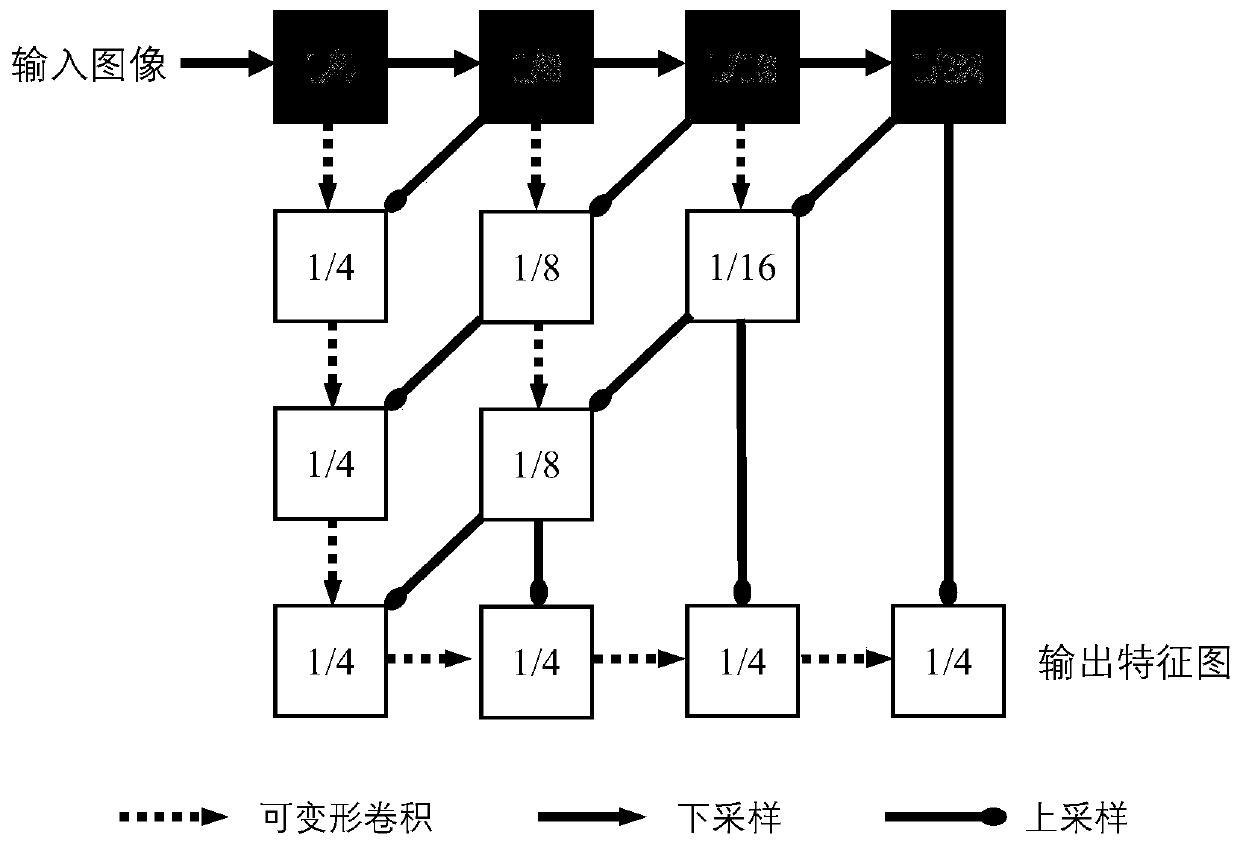

[0033] Inspired by recent anchor-free object detectors such as CornerNet, CenterNet, and FCOS, this invention re-examines the modeling of spatio-temporal action detection from a different perspective. Intuitively, motion is a natural phenomenon in video and describes human behavior more fundamentally, so spatio-temporal action detection can be simplified to the detection of motion trajectories. Based on this analysis, the present invention proposes a new motion-modeling approach that performs spatio-temporal action detection by treating each action instance as the movement trajectory of the center point of the actor (the person performing the action). Specifically, each action sequence is represented by the action's center point in the middle frame together with the motion vectors of the action center points in the other frames relative to that point. To determine the spatial position of the action instance, the present invention directly regresses the size of the action...
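The center-plus-motion-vector representation described in [0033] can be illustrated with a minimal geometric sketch. It assumes axis-aligned boxes given as (x1, y1, x2, y2), a tubelet of K frames whose "middle frame" is index K // 2, and a per-frame (width, height) as the regressed size, since the source text is truncated before it specifies what "size" means. The names `TrajectoryEncoding`, `encode_tubelet`, and `decode_tubelet` are hypothetical and do not come from the patent; the sketch covers only the encoding geometry, not the network that would predict these quantities.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2), axis-aligned, one box per frame.
Box = Tuple[float, float, float, float]


@dataclass
class TrajectoryEncoding:
    """Hypothetical container for the center-point trajectory representation."""
    center: Tuple[float, float]          # action center in the middle frame
    motions: List[Tuple[float, float]]   # each frame's center minus the middle-frame center
    sizes: List[Tuple[float, float]]     # assumed per-frame (width, height)


def encode_tubelet(boxes: List[Box]) -> TrajectoryEncoding:
    """Encode K per-frame boxes as a middle-frame center, motion vectors, and sizes."""
    if not boxes:
        raise ValueError("a tubelet must contain at least one box")
    centers = [((x1 + x2) / 2, (y1 + y2) / 2) for x1, y1, x2, y2 in boxes]
    sizes = [(x2 - x1, y2 - y1) for x1, y1, x2, y2 in boxes]
    # Assumption: for even K this picks the later of the two middle frames.
    mid = len(boxes) // 2
    cx, cy = centers[mid]
    # Motion vectors are relative to the middle-frame center, so motions[mid] is (0, 0).
    motions = [(x - cx, y - cy) for x, y in centers]
    return TrajectoryEncoding((cx, cy), motions, sizes)


def decode_tubelet(enc: TrajectoryEncoding) -> List[Box]:
    """Recover per-frame boxes from the center, motion vectors, and sizes."""
    cx, cy = enc.center
    boxes: List[Box] = []
    for (dx, dy), (w, h) in zip(enc.motions, enc.sizes):
        # Move the middle-frame center by this frame's motion vector, then expand by the size.
        px, py = cx + dx, cy + dy
        boxes.append((px - w / 2, py - h / 2, px + w / 2, py + h / 2))
    return boxes


if __name__ == "__main__":
    tube = [(10, 20, 30, 60), (12, 22, 32, 62), (14, 24, 34, 64)]
    enc = encode_tubelet(tube)
    print(enc.center)          # (22.0, 42.0)
    print(enc.motions)         # [(-2.0, -2.0), (0.0, 0.0), (2.0, 2.0)]
    print(decode_tubelet(enc) == [tuple(float(v) for v in b) for b in tube])  # True
```

Storing every frame's center as an offset from a single middle-frame anchor means a detector only has to localize one point per action instance and can then regress the remaining frames as displacements, which is the anchor-free idea the paragraph borrows from CenterNet-style detectors.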

