Behavior recognition method based on space-time attention enhancement feature fusion network

A feature fusion and attention technology, applied in character and pattern recognition, biological neural network models, instruments, etc. It addresses problems such as ineffective use of the information in different branches and feature overfitting, with the effect of reducing feature overfitting and improving classification ability.


Embodiment Construction

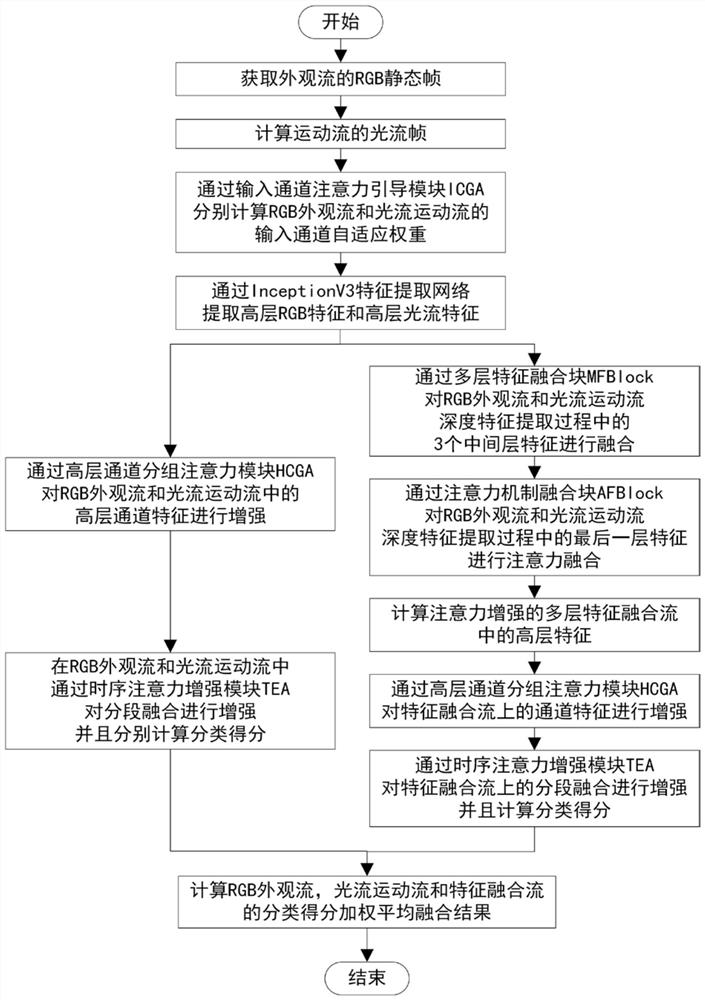

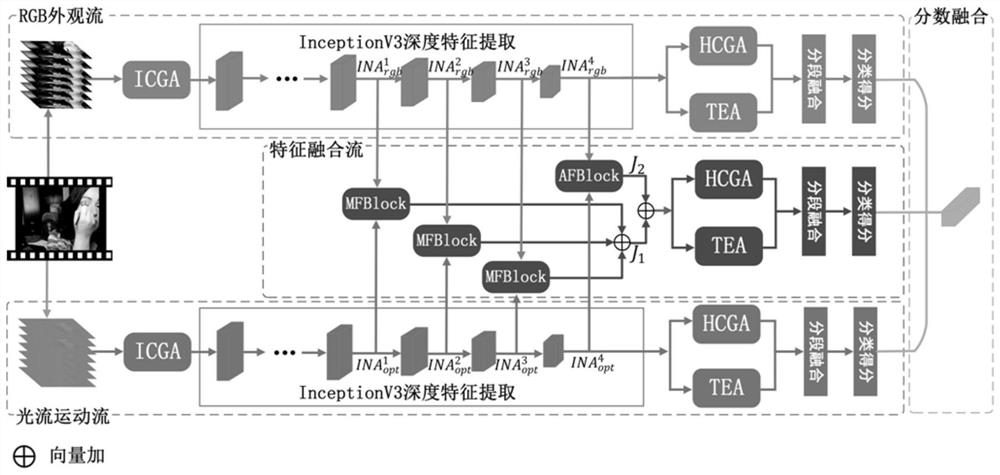

[0031] Figure 2 shows the algorithm model of the present invention. The algorithm takes RGB frames and optical flow frames as input and makes a joint decision through three branches: an RGB appearance stream, an optical flow motion stream, and an attention-enhanced multi-layer feature fusion stream. The feature fusion stream fuses the RGB appearance features and the optical flow motion features through the multi-layer feature fusion block MFBlock and the attention fusion block AFBlock. In addition, several attention modules are added to the three branch networks for network guidance and feature enhancement: the input channel attention guidance module ICGA, the high-level channel group attention module HCGA, and the temporal attention enhancement module TEA. Finally, the classification scores produced by the three streams are combined by weighted fusion.
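The final step above, weighted fusion of the classification scores from the three streams, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the patented implementation: the function names `softmax` and `fuse_stream_scores`, the choice to normalize each stream's logits with softmax before weighting, and the example weights are all hypothetical, since the text does not give the fusion weights or the exact normalization.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one stream's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_stream_scores(
    stream_logits: Sequence[Sequence[float]],
    weights: Sequence[float],
) -> List[float]:
    """Weighted late fusion of per-stream class scores.

    Assumption (hypothetical): each stream's logits are turned into
    probabilities with softmax, then averaged with normalized weights,
    so the fused scores also sum to 1.
    """
    if len(stream_logits) != len(weights):
        raise ValueError("need exactly one weight per stream")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    num_classes = len(stream_logits[0])
    fused = [0.0] * num_classes
    for logits, w in zip(stream_logits, weights):
        if len(logits) != num_classes:
            raise ValueError("all streams must score the same classes")
        for c, p in enumerate(softmax(logits)):
            fused[c] += (w / total_weight) * p
    return fused


# Illustrative logits for the RGB appearance, optical flow motion,
# and feature fusion streams (made-up numbers, three classes).
rgb = [2.0, 0.5, 0.1]
flow = [0.2, 3.0, 0.1]
fusion = [0.1, 2.5, 0.3]

scores = fuse_stream_scores([rgb, flow, fusion], weights=[1.0, 1.0, 1.0])
predicted_class = scores.index(max(scores))
print(predicted_class)
```

Normalizing the weights keeps the fused output a probability distribution regardless of the weight scale; in practice the per-stream weights would be tuned on validation data.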

[0032] In order to better illustrate the present invention, the following takes the public behavior dat...

