195 results for "Stream network" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

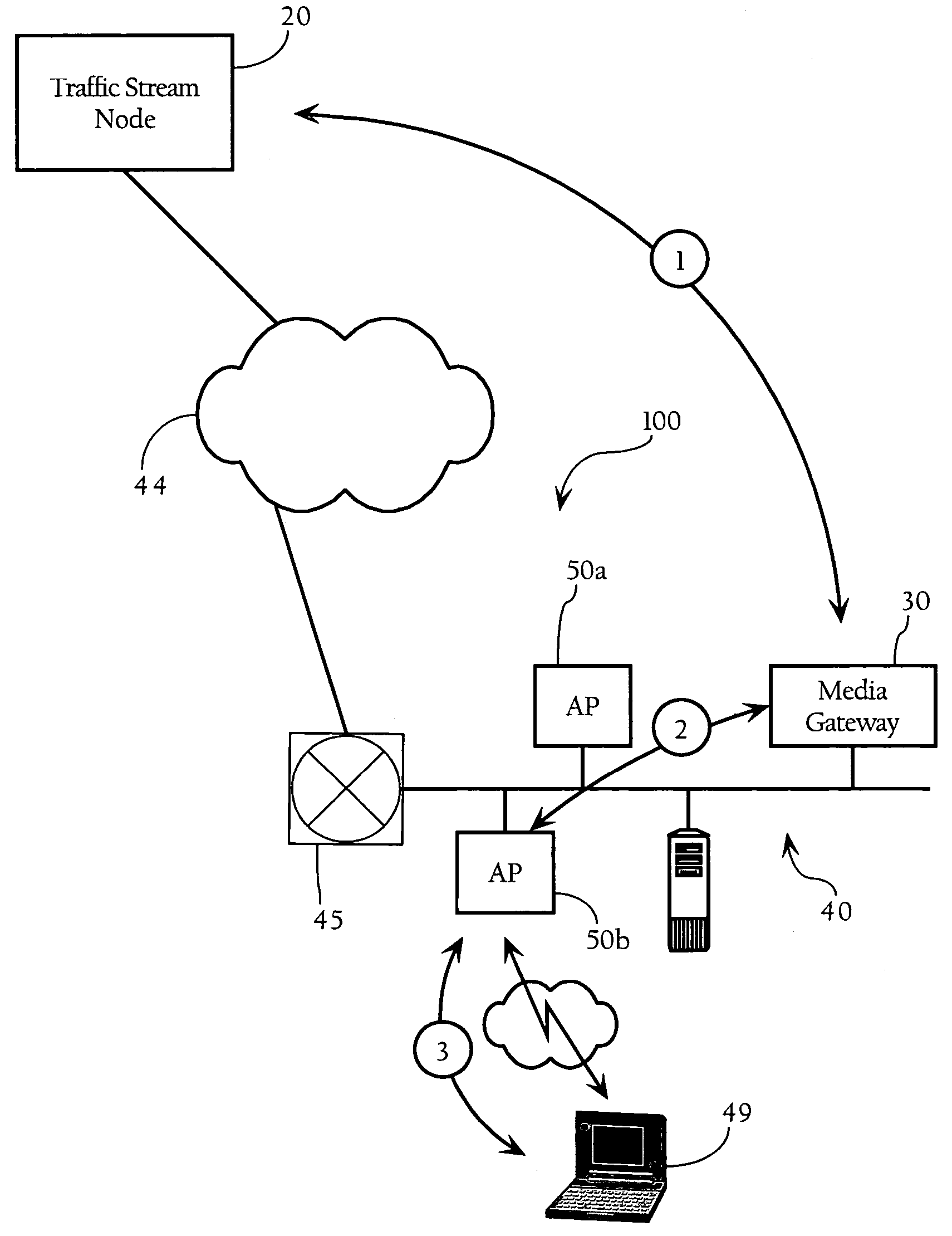

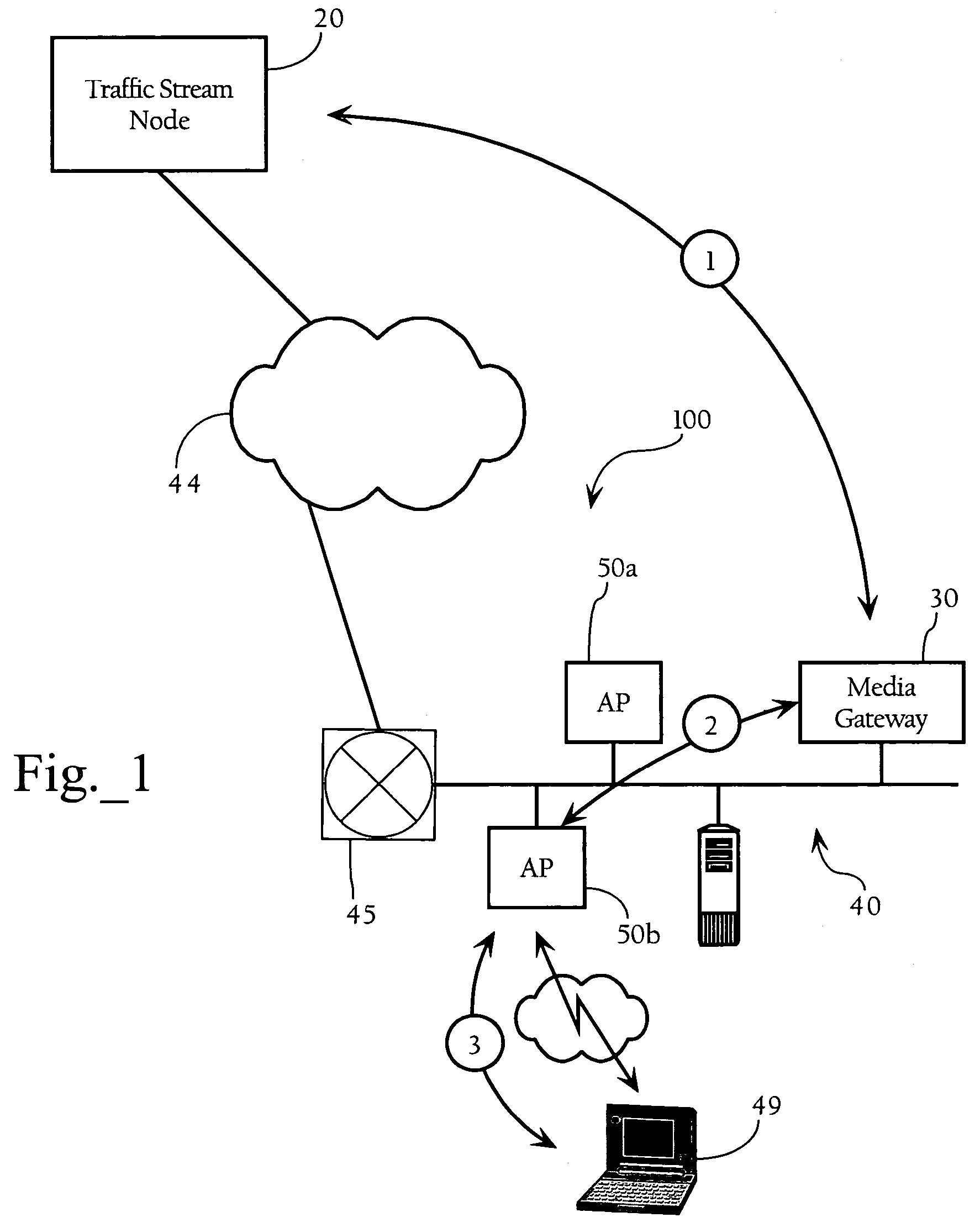

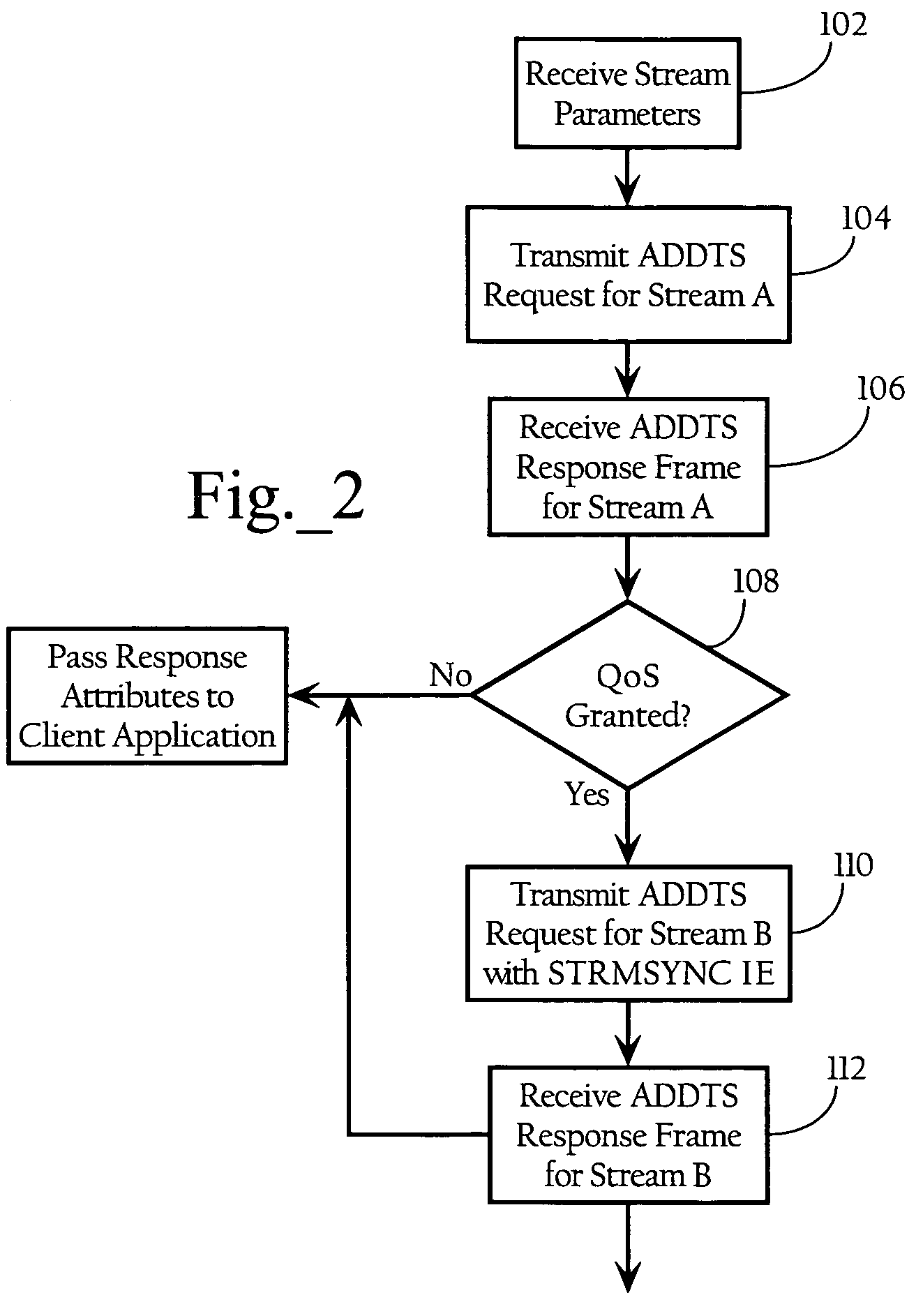

Method and system for media synchronization in QoS-enabled wireless networks

Active | US7486658B2 | Improve performance, Good synchronization, Synchronisation arrangement, Error prevention, Data synchronization, Wireless mesh network

Owner:CISCO TECH INC

Methods for interactive visualization of spreading activation using time tubes and disk trees

Inactive | US6151595A | Data processing applications, Digital data information retrieval, Spreading activation, Continuous measurement

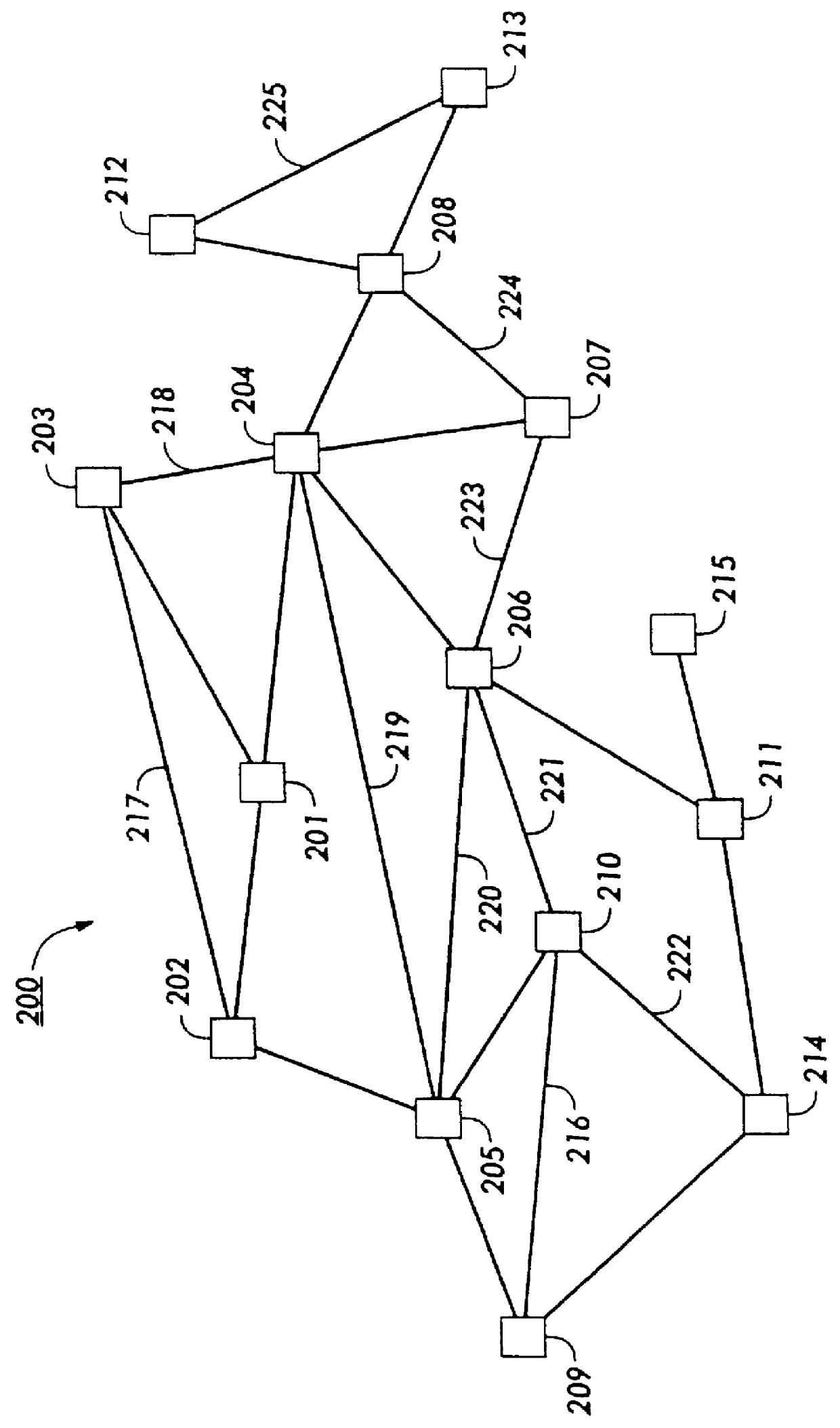

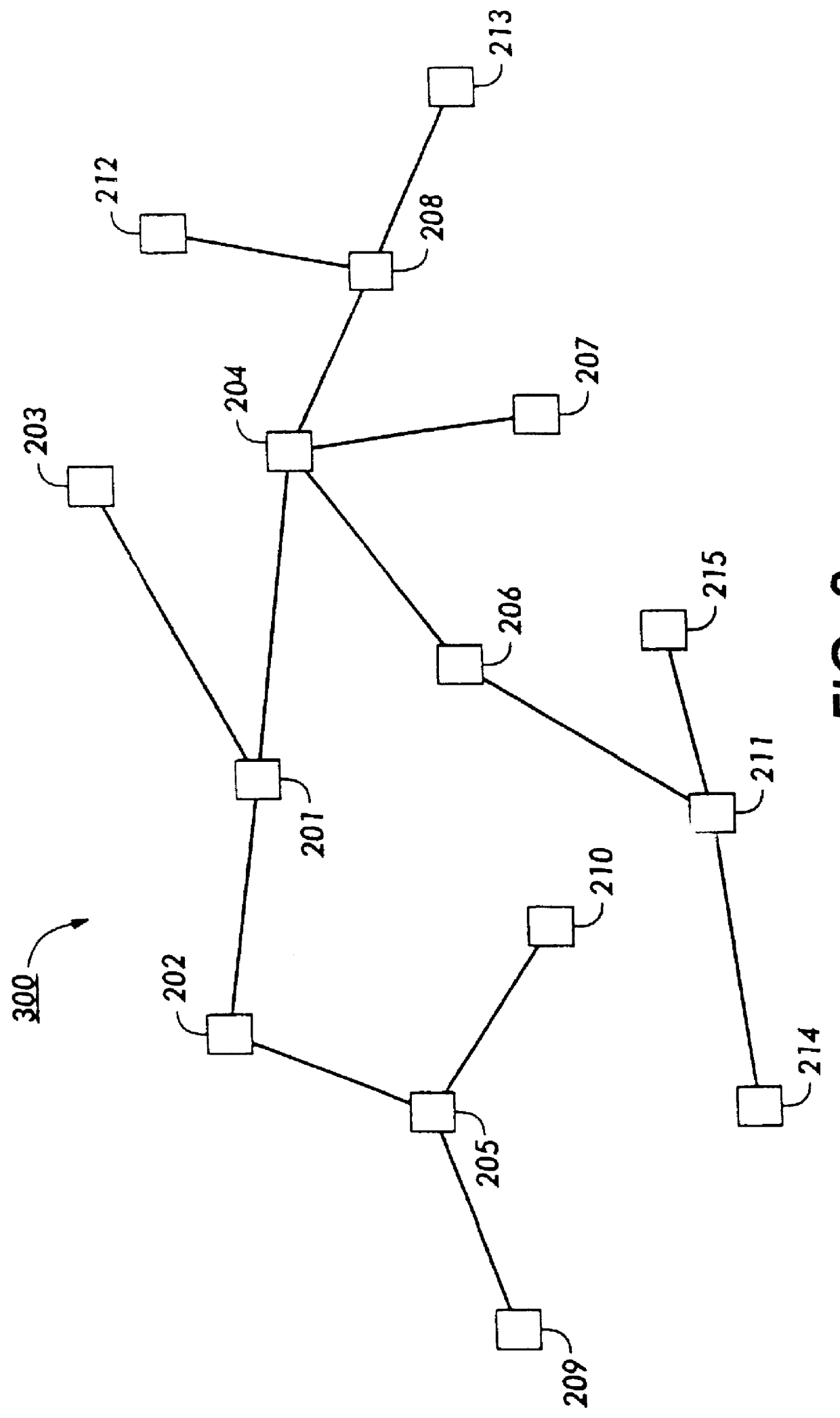

Methods for displaying results of a spreading activation algorithm and for defining an activation input vector for the spreading activation algorithm are disclosed. A planar disk tree is used to represent the generalized graph structure being modeled in a spreading activation algorithm. Activation bars on some or all nodes of the planar disk tree, drawn in the dimension perpendicular to the disk tree, encode the final activation level resulting at the end of N iterations of the spreading activation algorithm. The number of nodes for which activation bars are displayed may be a predetermined number, a predetermined fraction of all nodes, or determined by a predetermined activation level threshold. The final activation levels resulting from activation spread through more than one flow network corresponding to the same generalized graph are displayed as color-encoded segments on the activation bars. Content, usage, topology, or recommendation flow networks may be used for spreading activation. The difference between spreading activation through different flow networks corresponding to the same generalized graph may be displayed by subtracting the resulting activation patterns of each network and displaying the difference. The spreading activation input vector is determined by continually measuring the dwell time that the user's cursor spends on a displayed node. Activation vectors at various intermediate steps of the N-step spreading activation algorithm are color-encoded onto nodes of disk trees within time tubes. The activation input vector and the activation vectors resulting from all N steps are displayed in a time tube having N+1 planar disk trees. Alternatively, only a periodic subset of the N activation vectors is displayed, or only the planar disk trees representing large changes in activation levels or phase shifts are displayed, while those representing smaller changes are omitted.

Owner:XEROX CORP
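
The Xerox abstract does not give the update rule, so the following is a minimal Python sketch that assumes a common linear formulation, a(t+1) = c + alpha * M * a(t). Here c is the input vector (in the patent, derived from cursor dwell time), M spreads each node's activation across its outgoing edges in proportion to edge weight, and `alpha` is a decay factor. The function names and parameters are hypothetical. The sketch returns the N+1 activation vectors a time tube would show and selects the nodes that get activation bars by count, fraction, or threshold.

```python
import math
from typing import Dict, List, Optional

Graph = Dict[str, Dict[str, float]]  # node -> {neighbor: edge weight}


def spread_activation(graph: Graph, inputs: Dict[str, float],
                      steps: int, alpha: float = 0.5) -> List[Dict[str, float]]:
    """Hypothetical linear spreading activation: a(t+1) = c + alpha * M a(t).

    Returns the input vector plus one activation vector per step, i.e. the
    N + 1 slices that a time tube would display."""
    nodes = set(graph) | set(inputs) | {v for nbrs in graph.values() for v in nbrs}
    act = {n: inputs.get(n, 0.0) for n in nodes}
    history = [dict(act)]
    for _ in range(steps):
        nxt = {n: inputs.get(n, 0.0) for n in nodes}  # re-inject the input vector c
        for src, nbrs in graph.items():
            total = sum(nbrs.values())
            if total == 0:
                continue
            for dst, weight in nbrs.items():
                # each node passes a decayed, weight-proportional share downstream
                nxt[dst] += alpha * act[src] * weight / total
        act = nxt
        history.append(dict(act))
    return history


def nodes_to_display(act: Dict[str, float], count: Optional[int] = None,
                     fraction: Optional[float] = None,
                     threshold: Optional[float] = None) -> List[str]:
    """Pick nodes that get activation bars: top count, top fraction, or above a threshold."""
    ranked = sorted(act, key=act.get, reverse=True)  # highest activation first
    if count is None and fraction is not None:
        count = math.ceil(fraction * len(ranked))
    if threshold is not None:
        ranked = [n for n in ranked if act[n] >= threshold]
    return ranked if count is None else ranked[:count]
```

With the graph {"a": {"b": 1, "c": 1}, "b": {"c": 1}}, input {"a": 1.0}, and two steps, the final vector is a = 1.0, b = 0.25, c = 0.375. Subtracting the final vectors obtained from two different flow networks gives the kind of difference pattern the abstract describes.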

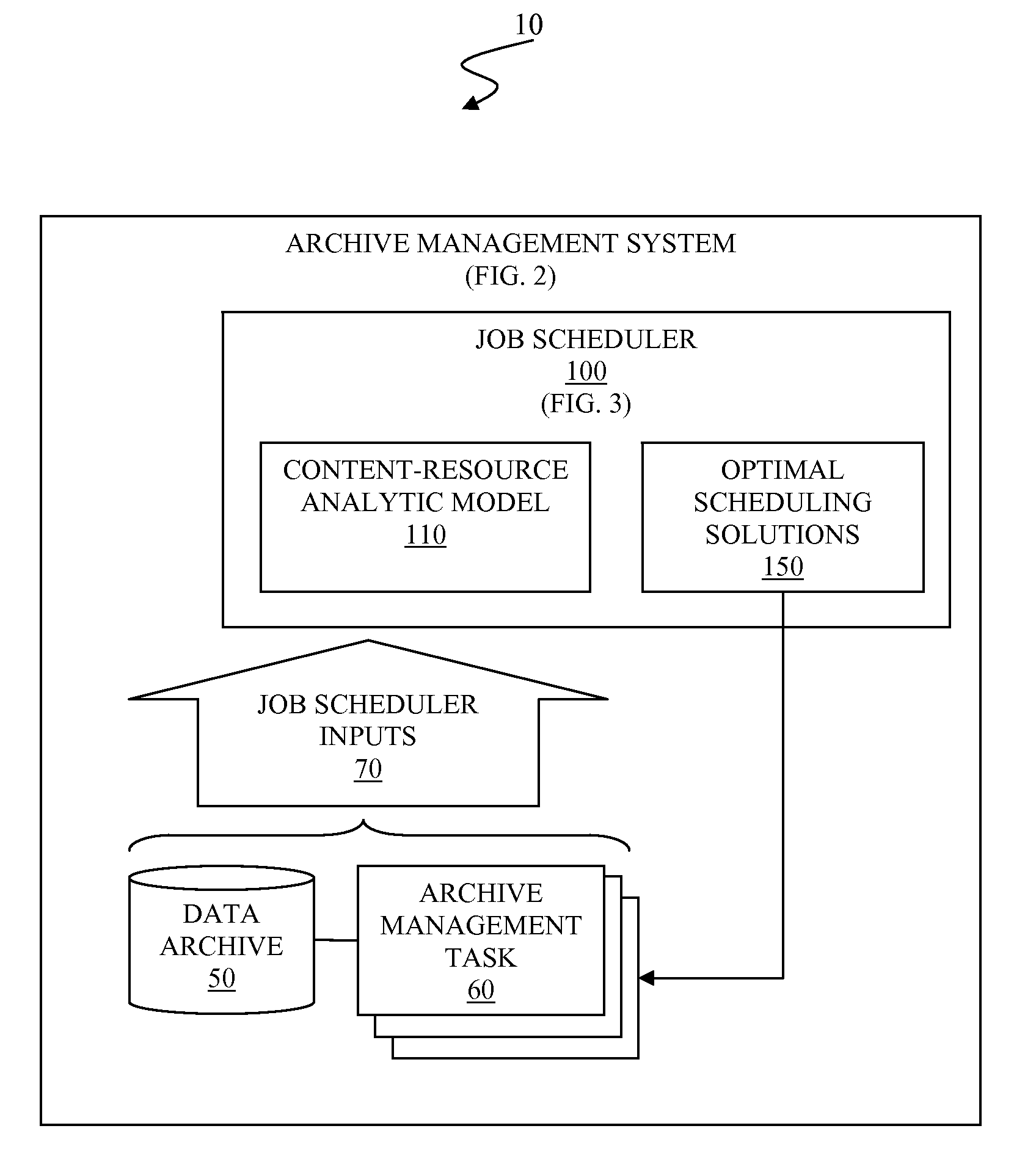

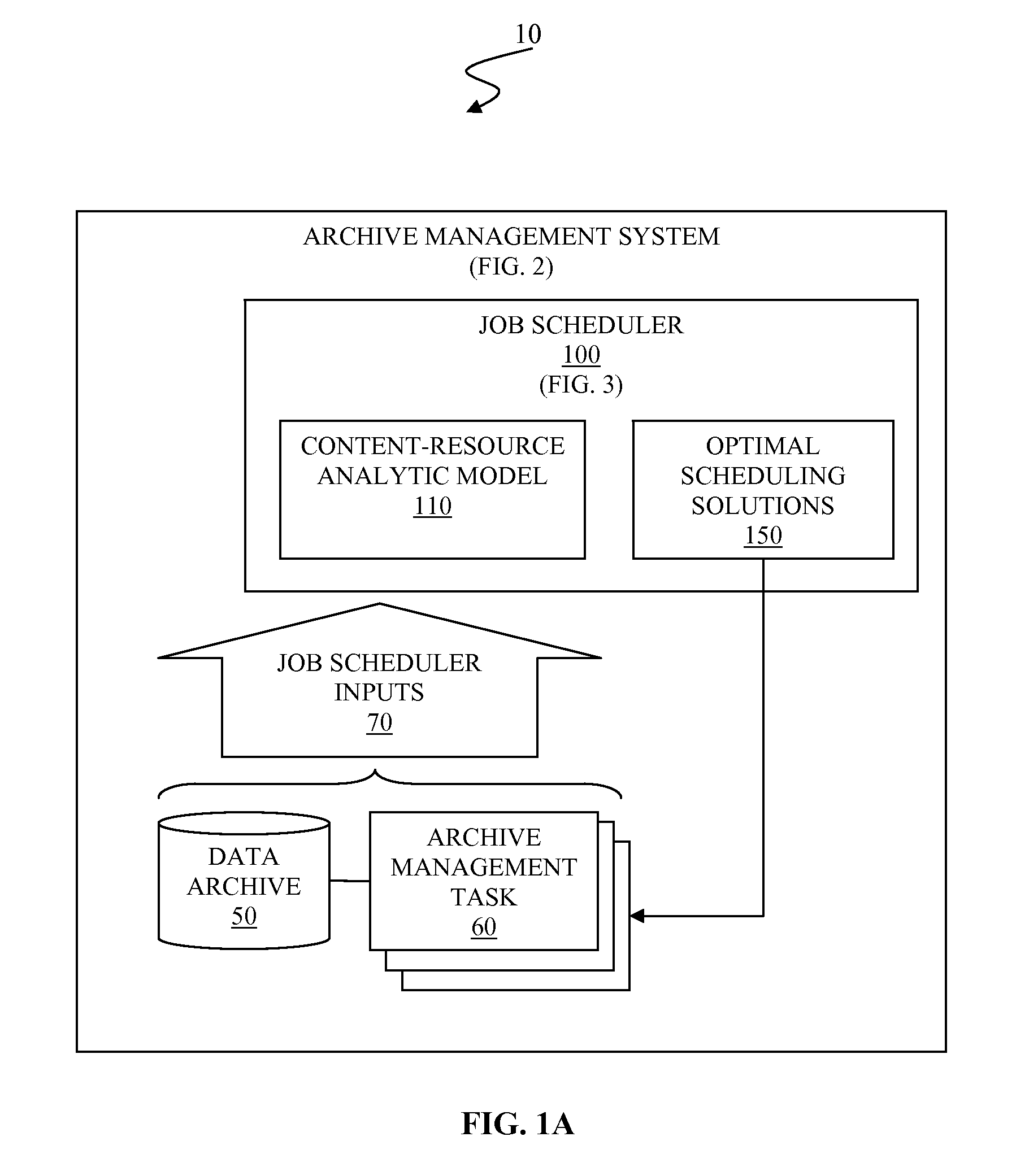

Continuous optimization of archive management scheduling by use of integrated content-resource analytic model

Inactive | US20110138391A1 | Easy to manage, Continuously optimizing, Error detection/correction, Multiprogramming arrangements, Analytic model, Heuristic

A system and associated method for continuously optimizing data archive management scheduling. A job scheduler receives, from an archive management system, inputs of task information, replica placement data, infrastructure topology data, and resource performance data. According to the received inputs, the job scheduler models a flow network that represents the data content, software programs, physical devices, and communication capacity of the archive management system as various levels of vertices. An optimal path in the modeled flow network is computed as an initial schedule, and the archive management system performs tasks according to that schedule. The operations of the scheduled tasks are monitored, and the job scheduler produces a new schedule based on feedback from the monitored operations and predefined heuristics.

Owner:KYNDRYL INC
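
The abstract says an "optimal path" is computed through a multi-level flow network but does not name the objective or the algorithm. Assuming the goal is maximum throughput from data content through programs and devices to a sink, a standard Edmonds-Karp maximum-flow computation is one way to turn such a model into an initial schedule. This is a swapped-in textbook technique, not the patented scheduler, and the vertex names and capacities used below are hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, Tuple

Capacity = Dict[str, Dict[str, float]]  # u -> {v: capacity of edge u->v}


def max_flow(cap: Capacity, source: str, sink: str) -> Tuple[float, Dict[Tuple[str, str], float]]:
    """Edmonds-Karp maximum flow (BFS augmenting paths).

    Assumes no pair of opposite edges u->v and v->u in `cap`."""
    residual: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for u, nbrs in cap.items():
        for v, c in nbrs.items():
            residual[u][v] += c
            residual[v][u] += 0.0  # make sure the reverse residual edge exists
    total = 0.0
    while True:
        # BFS for the shortest augmenting path in the residual graph
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in residual[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            break
        # bottleneck capacity along the path
        bottleneck, v = float("inf"), sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        # push flow along the path and update residual capacities
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        total += bottleneck
    flows = {(u, v): c - residual[u][v]
             for u, nbrs in cap.items() for v, c in nbrs.items() if c - residual[u][v] > 0}
    return total, flows
```

In a toy model with source S, data vertices d1 and d2 (capacities 5 and 3 from S), one program vertex p (4 from d1, 3 from d2), one device vertex dev (6 from p), and sink T (10 from dev), the maximum flow is 6 units, limited by the p-to-dev edge. Under this reading, rescheduling after monitoring would amount to updating the capacities and rerunning the computation.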

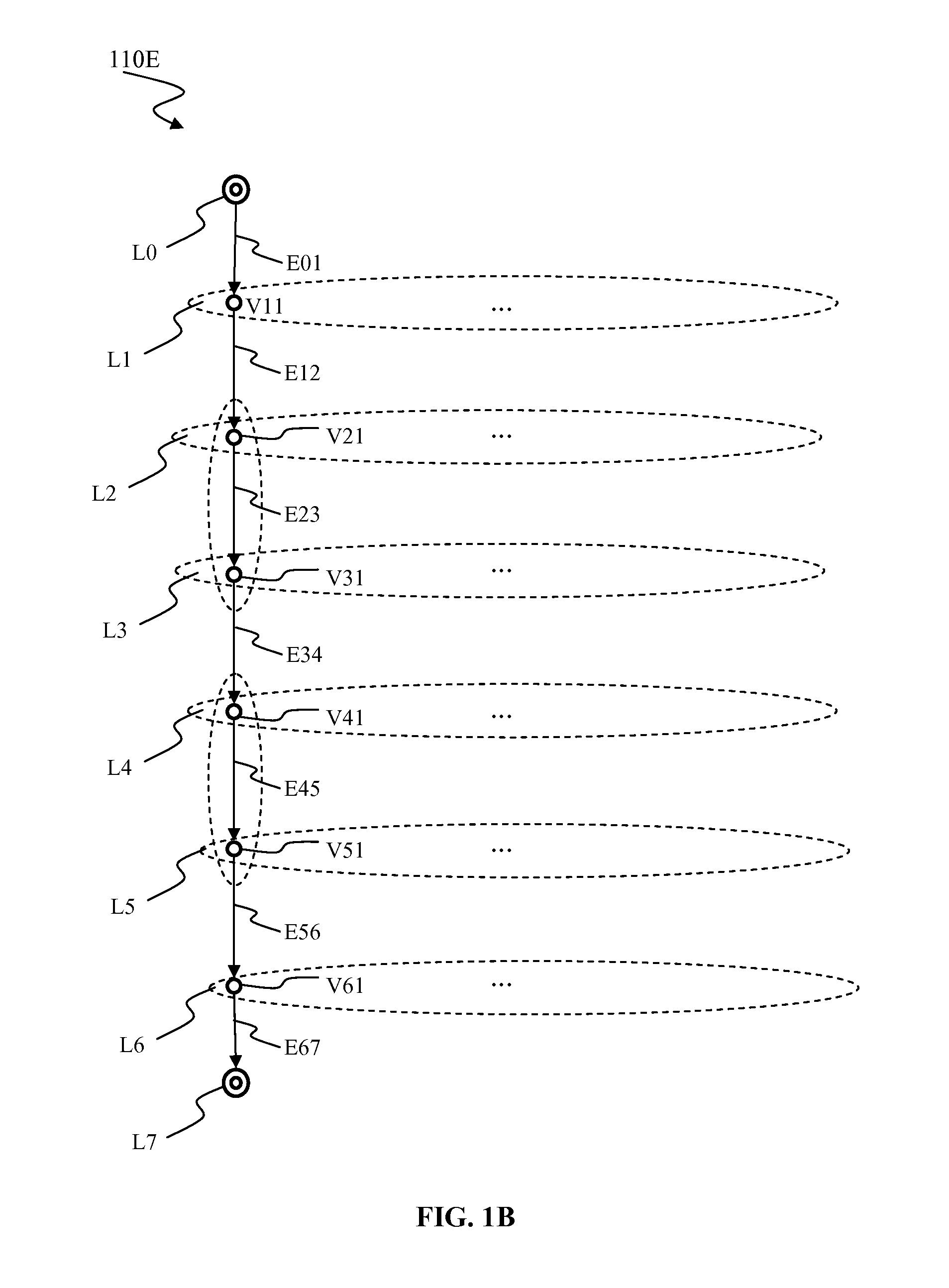

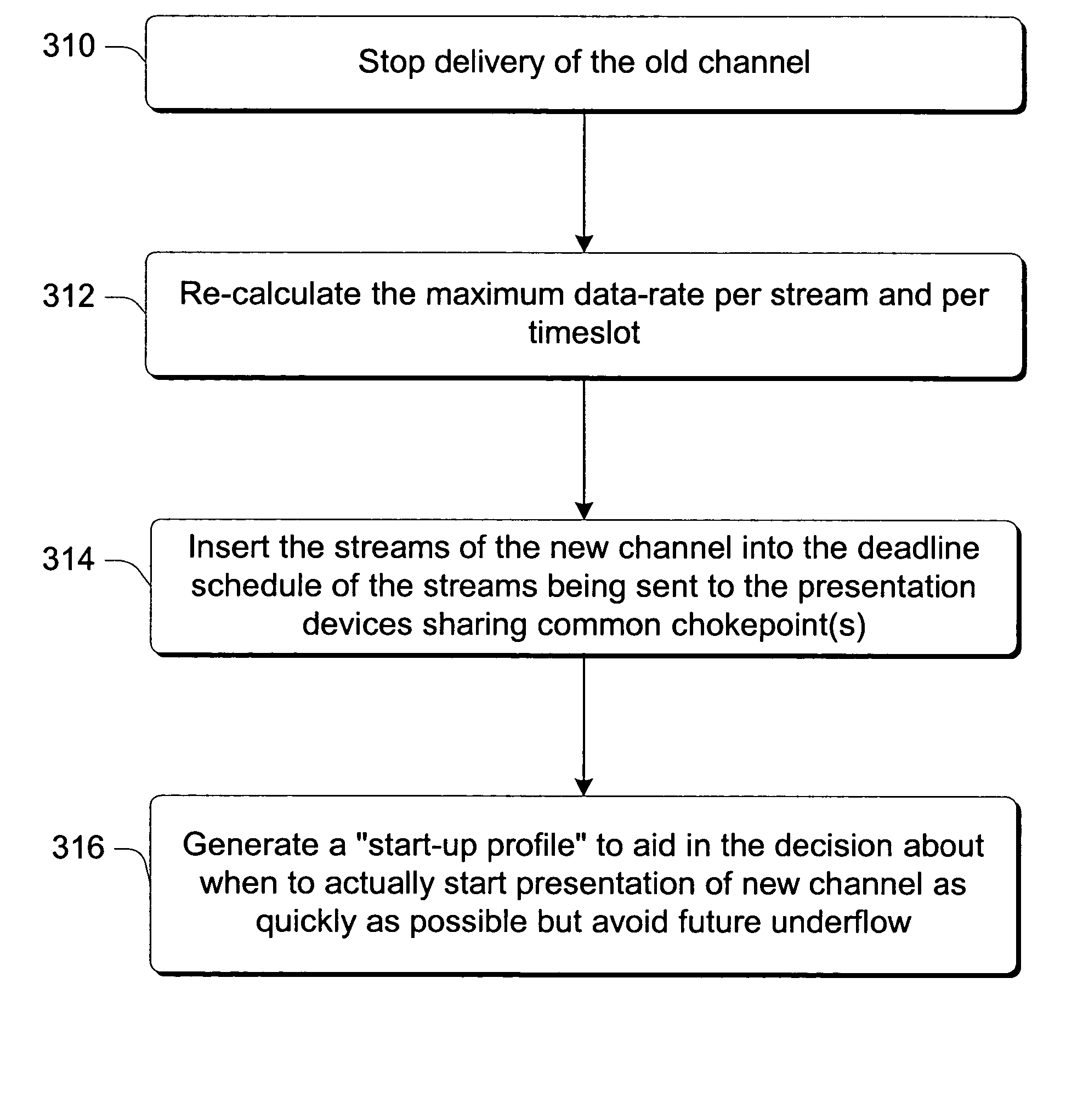

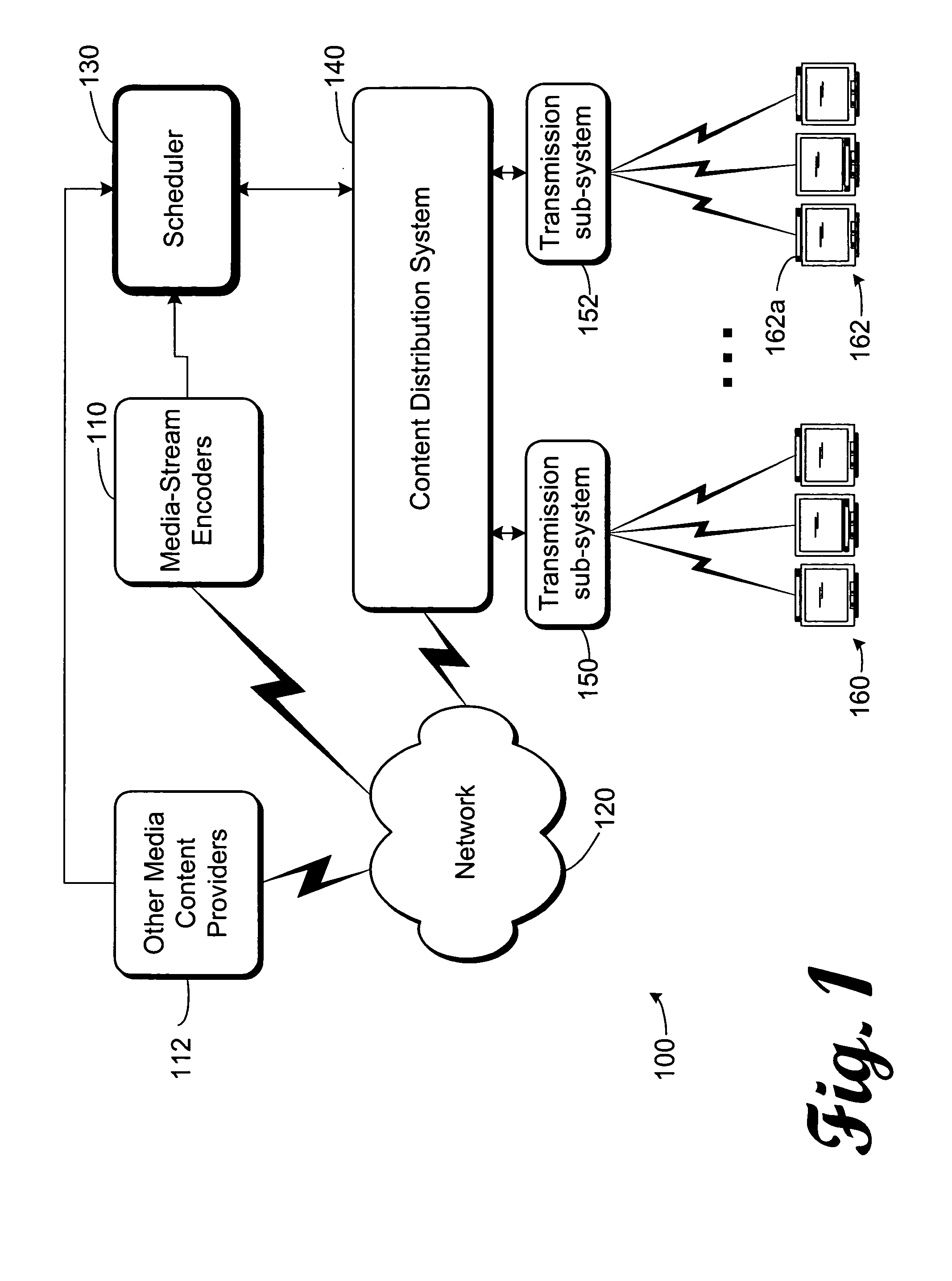

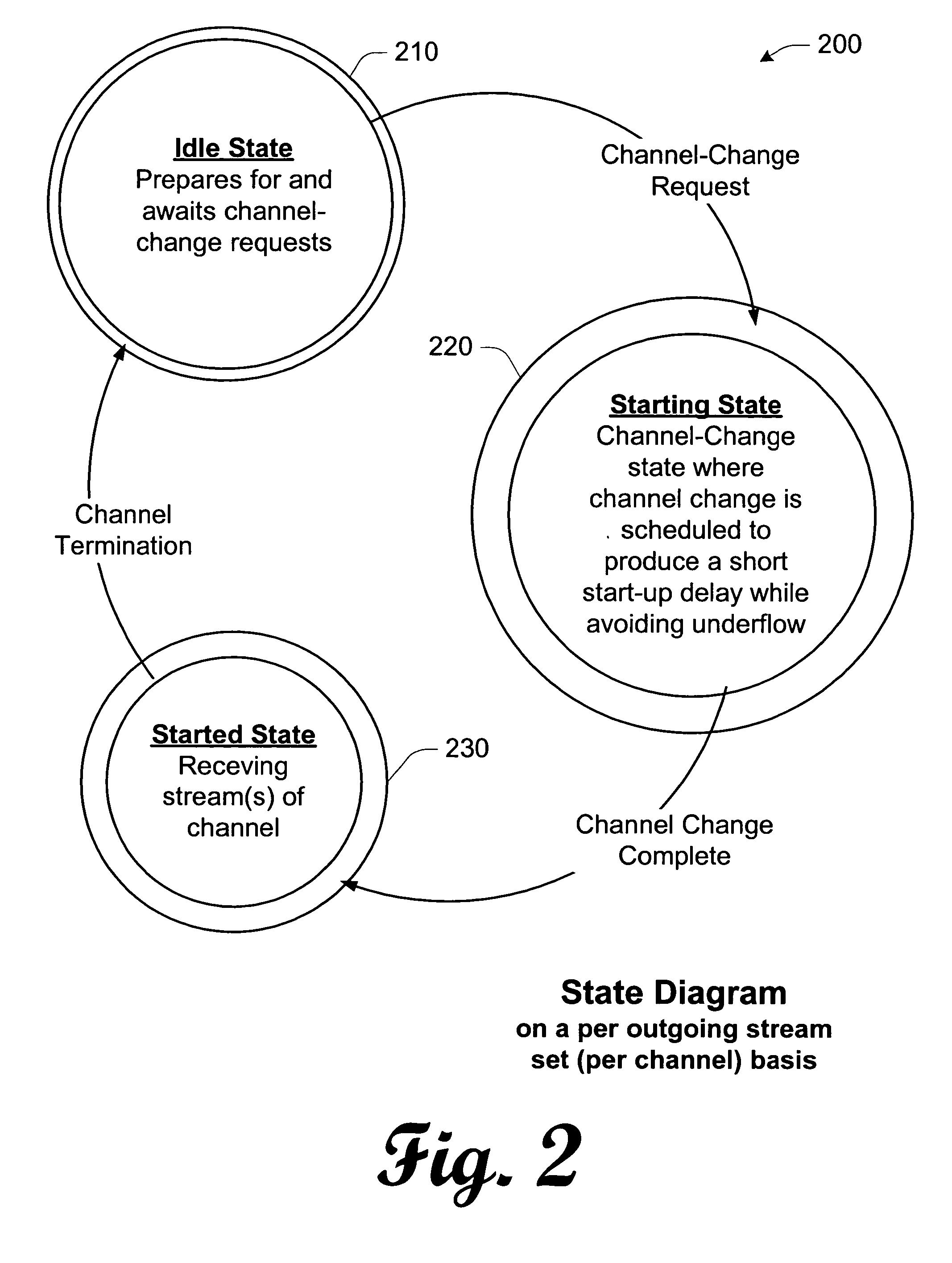

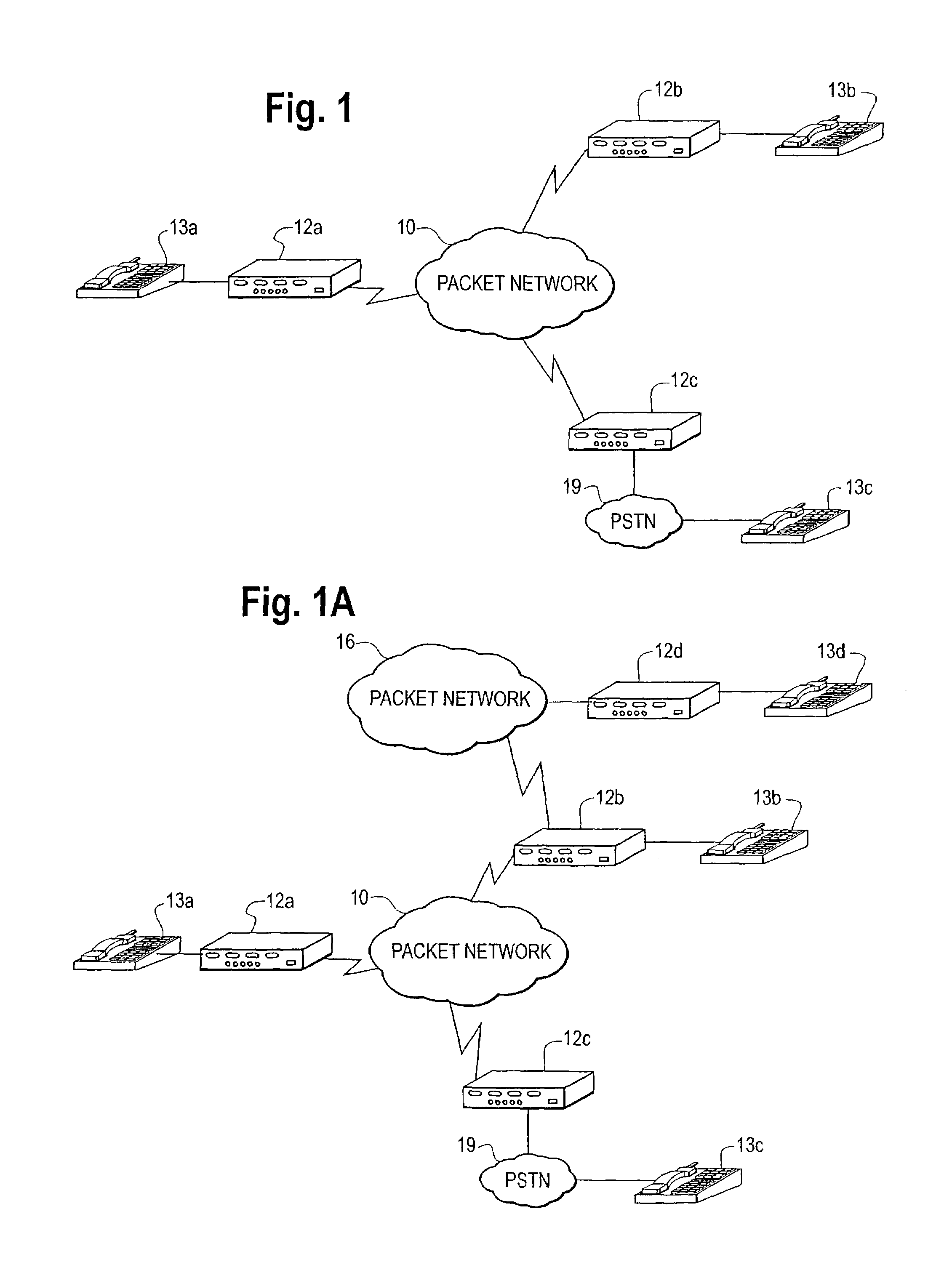

Media stream scheduling for hiccup-free fast-channel-change in the presence of network chokepoints

Inactive | US20050080904A1 | Facilitates fast start-up of a new media stream, Avoid disturbance, Television system details, Pulse modulation television signal transmission, Hiccups, New media

An implementation, as described herein, facilitates fast start-up of a new media stream while avoiding temporal interruption (i.e., “hiccups”) of the presentation of that new media stream. At least one implementation described herein coordinates the delivery of multiple simultaneous media streams on a media-stream network. This coordination accounts for traversing bandwidth-restricted chokepoints; quickly stopping delivery of one or more media streams in the set; quickly initiating delivery and presentation of one or more new media streams not previously in the set (i.e., a “channel change”); and producing clean playback of all of the streams in the set, despite their different timelines. This abstract itself is not intended to limit the scope of this patent. The scope of the present invention is pointed out in the appended claims.

Owner:MICROSOFT TECH LICENSING LLC

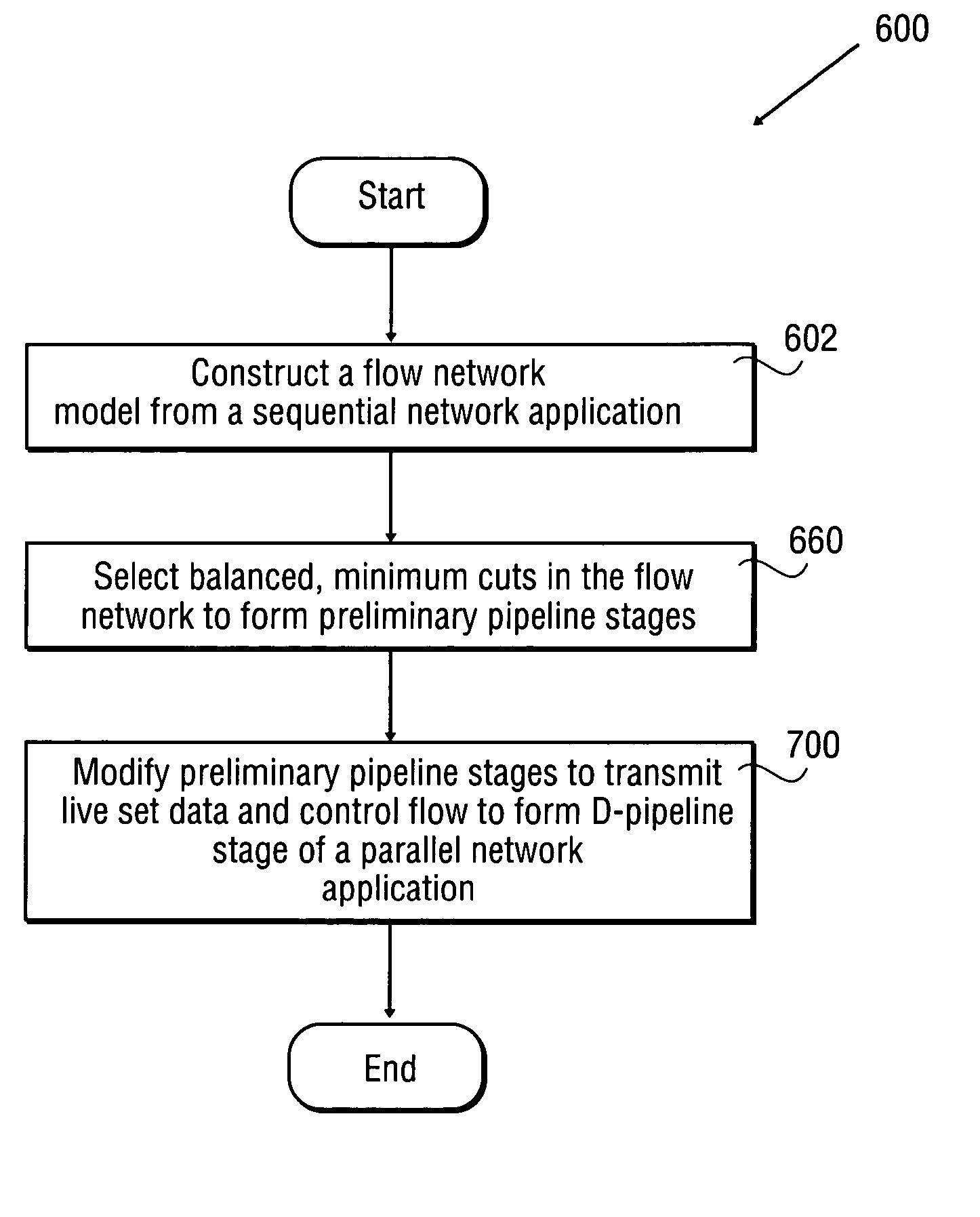

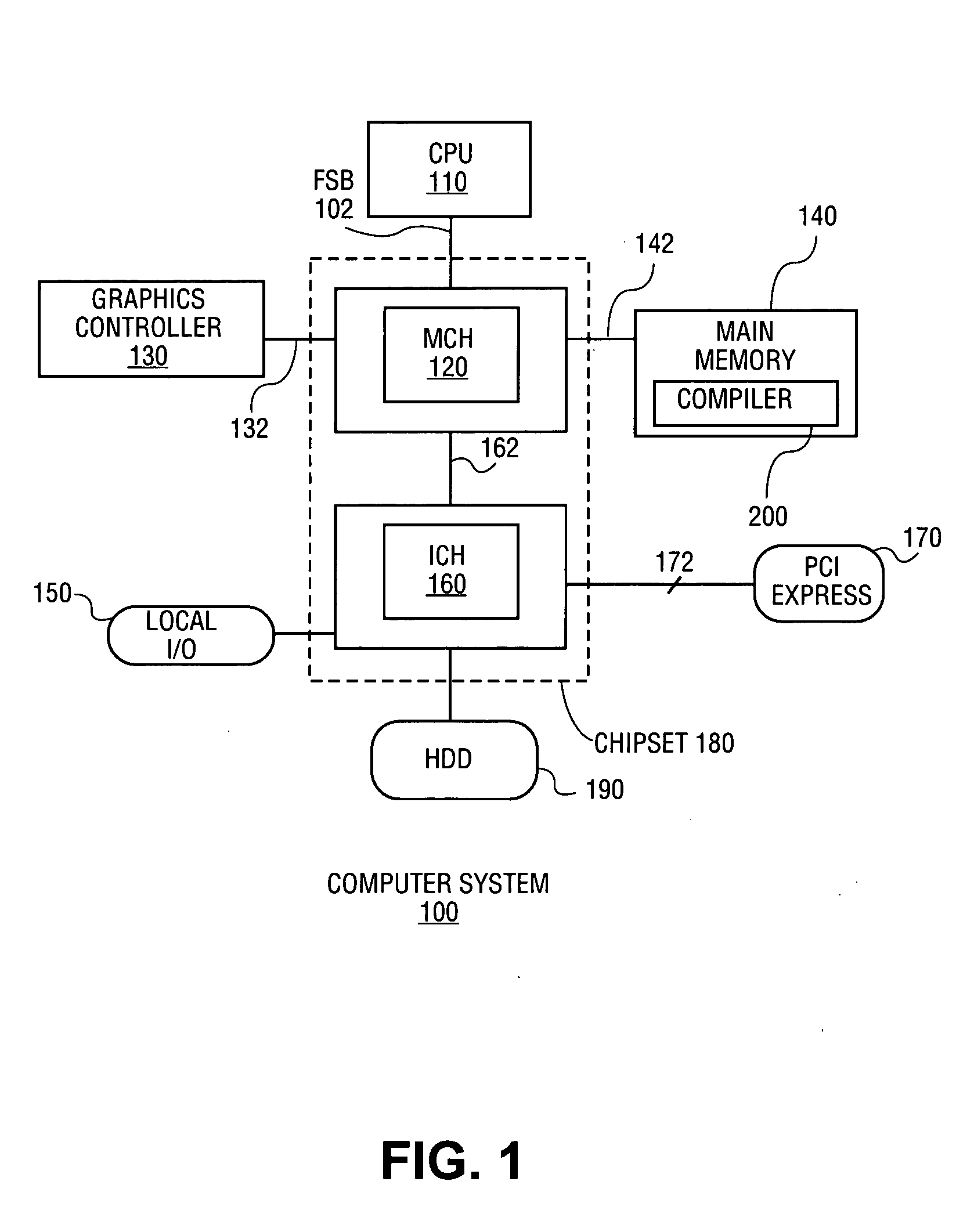

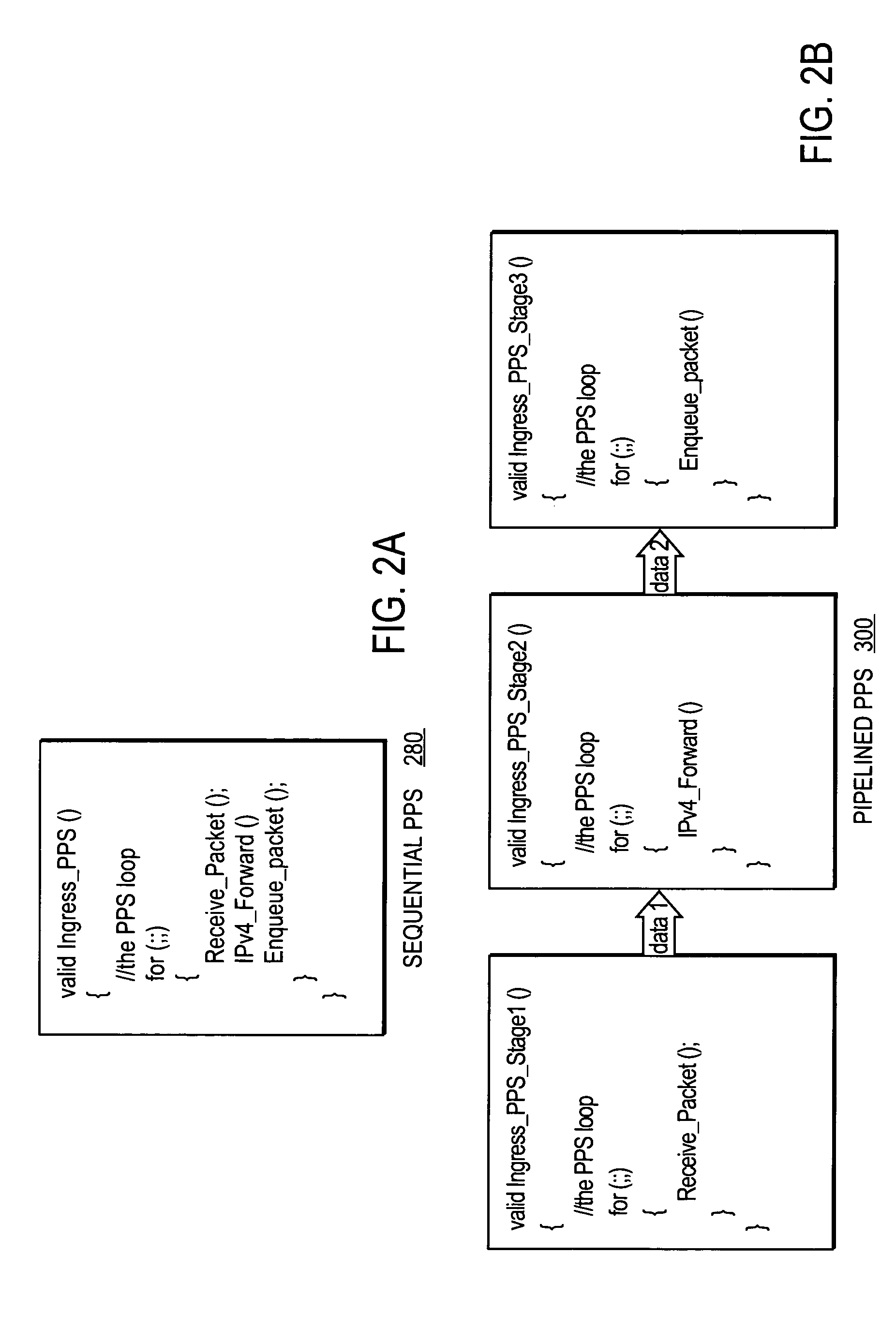

Apparatus and method for automatically parallelizing network applications through pipelining transformation

Inactive | US20050108696A1 | Software engineering, Digital computer details, Computational science, Parallel computing

In some embodiments, a method and apparatus for automatically parallelizing a sequential network application through pipeline transformation are described. In one embodiment, the method includes configuring a network processor into a D-stage processor pipeline. Once configured, a sequential network application program is transformed into D pipeline stages. Once transformed, the D pipeline stages are executed in parallel within the D-stage processor pipeline. In one embodiment, the sequential application program is transformed by modeling the sequential network program as a flow network model and selecting, from the flow network model, a plurality of preliminary pipeline stages. Other embodiments are described and claimed.

Owner:INTEL CORP

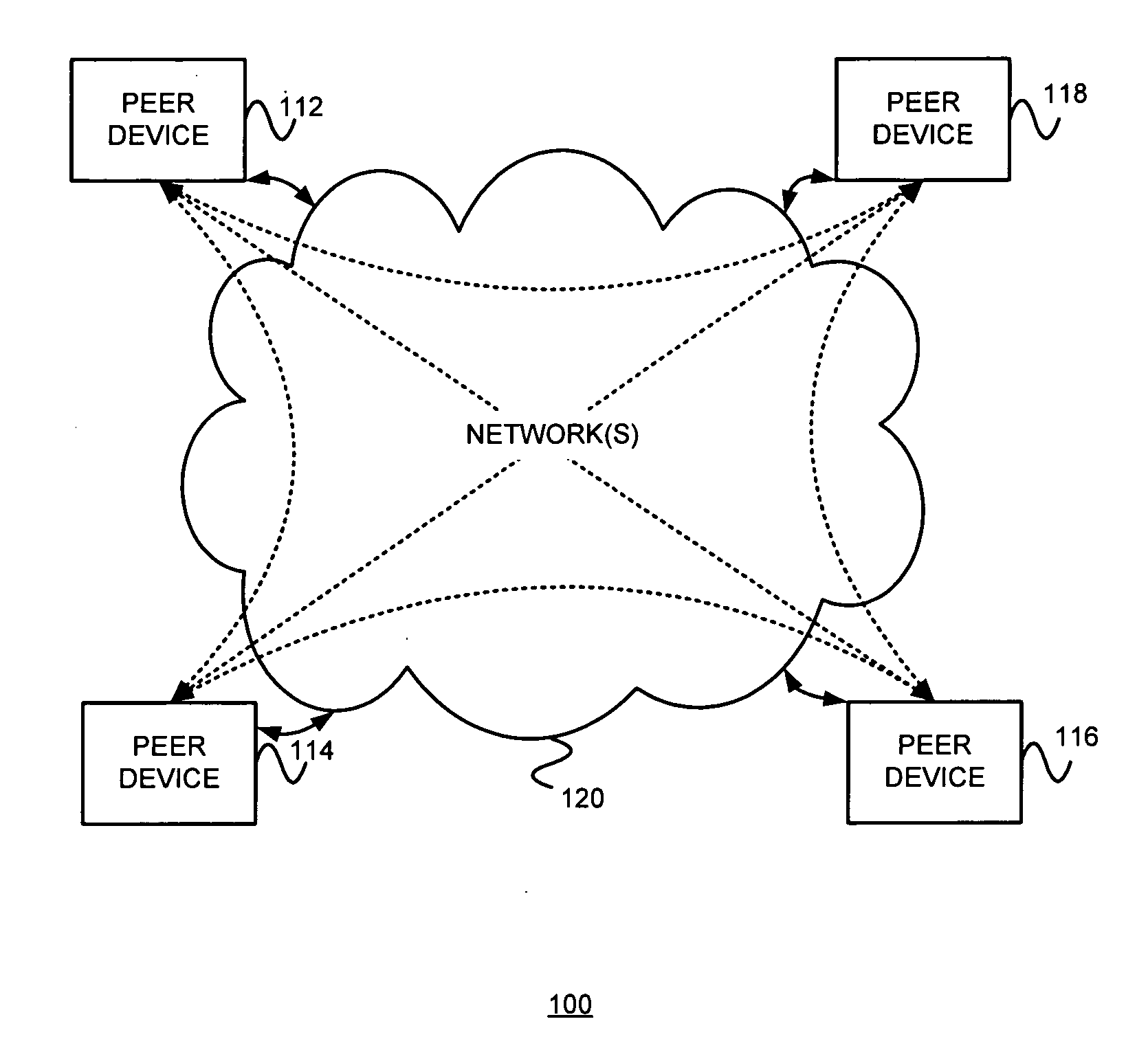

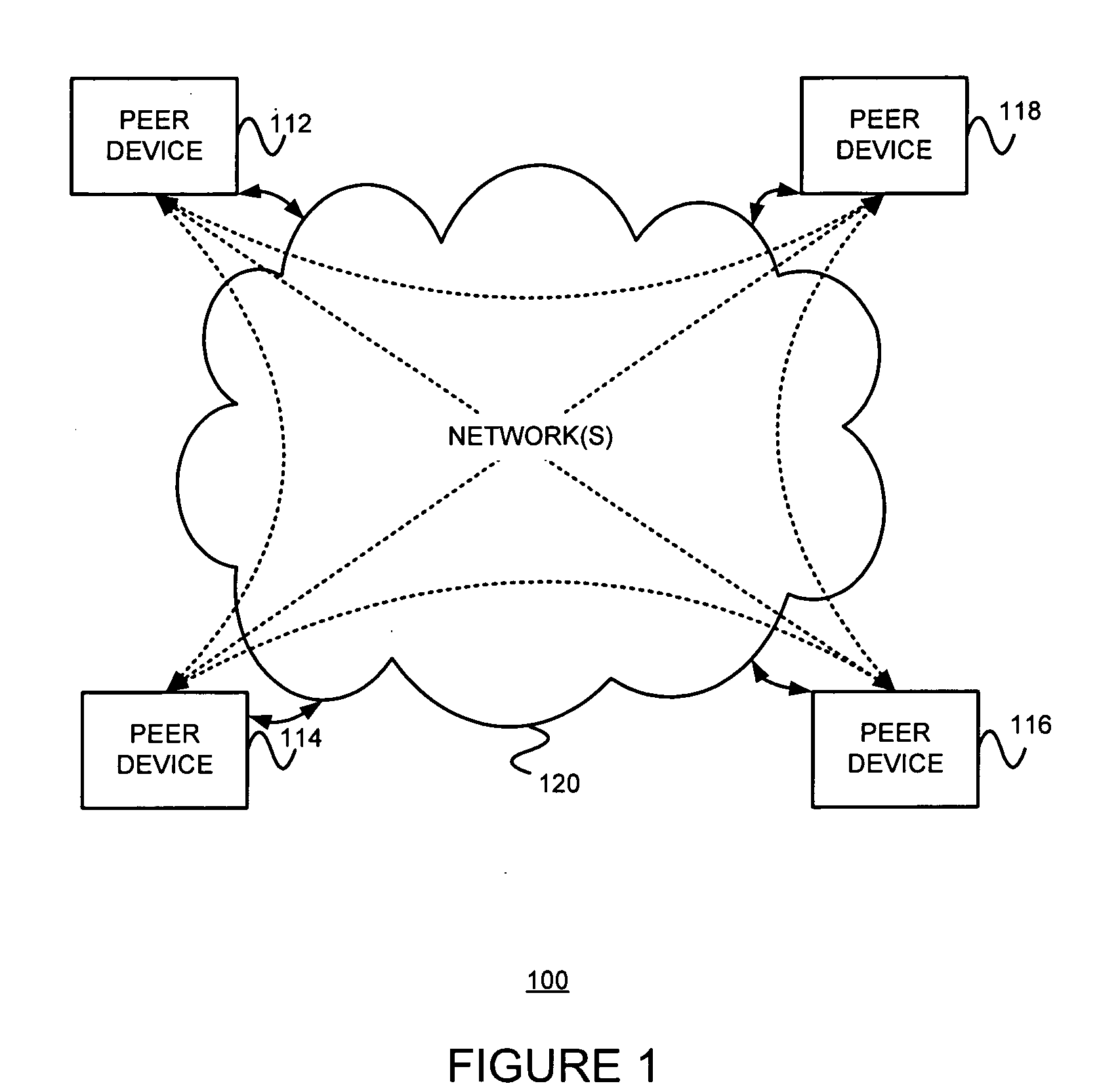

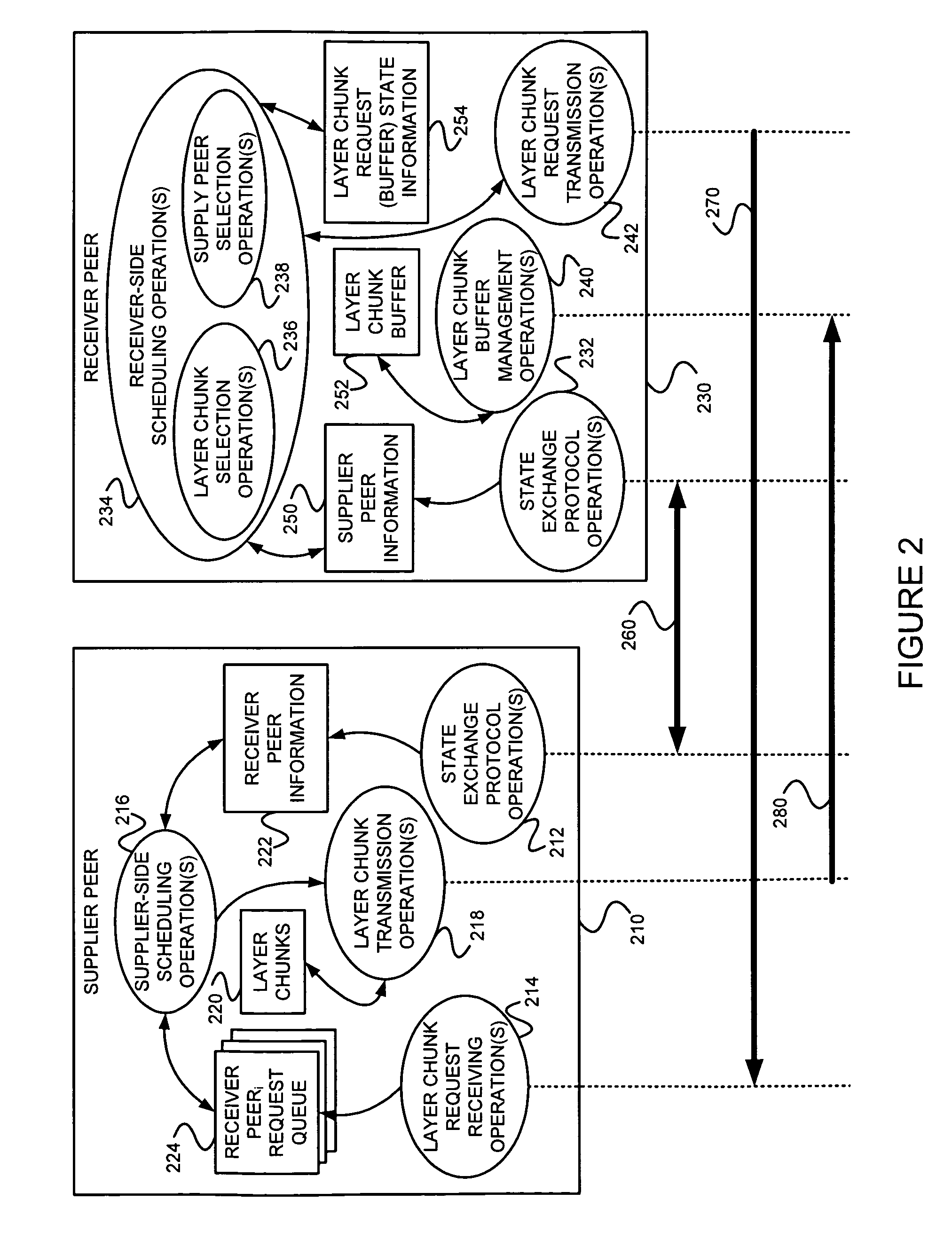

Using layered multi-stream video coding to provide incentives in p2p live streaming

Inactive | US20090037968A1 | Improve video quality, Low (but, Two-way working systems, Selective content distribution, Video encoding, Video quality

A distributed incentive mechanism is provided for peer-to-peer (P2P) streaming networks, such as mesh-pull P2P live streaming networks. Video (or audio) may be encoded into multiple substreams, for example with layered coding or multiple description coding. The system is heterogeneous, with peers having different uplink bandwidths. Peers that upload more data to a given peer receive more substreams from that peer and consequently better video quality. Unlike previous approaches, in which each peer receives the same video quality no matter how much bandwidth it contributes to the system, this mechanism provides differentiated video quality commensurate with each peer's contribution to other peers, thereby discouraging free-riders.

Owner:POLYTECHNIC INST OF NEW YORK
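
The abstract states the incentive only qualitatively: peers that upload more to a given peer receive more substreams from it. The sketch below shows one way to realize that rule. It assumes the uploading peer splits a fixed number of substream slots among its neighbors in proportion to the data each has sent it, using largest-remainder rounding; the function name and the allocation rule are hypothetical.

```python
from typing import Dict


def allocate_substreams(received: Dict[str, float], slots: int) -> Dict[str, int]:
    """Hypothetical tit-for-tat rule: split `slots` substream transmissions among
    neighbors in proportion to how much each neighbor has uploaded to us."""
    total = sum(received.values())
    if total <= 0:
        return {peer: 0 for peer in received}
    shares = {p: slots * r / total for p, r in received.items()}
    alloc = {p: int(s) for p, s in shares.items()}  # floor of each share
    leftover = slots - sum(alloc.values())
    # hand the remaining slots to the largest fractional remainders
    for p in sorted(shares, key=lambda p: shares[p] - alloc[p], reverse=True)[:leftover]:
        alloc[p] += 1
    return alloc
```

A neighbor that has uploaded nothing receives no substreams, which is the free-rider deterrent the abstract describes.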

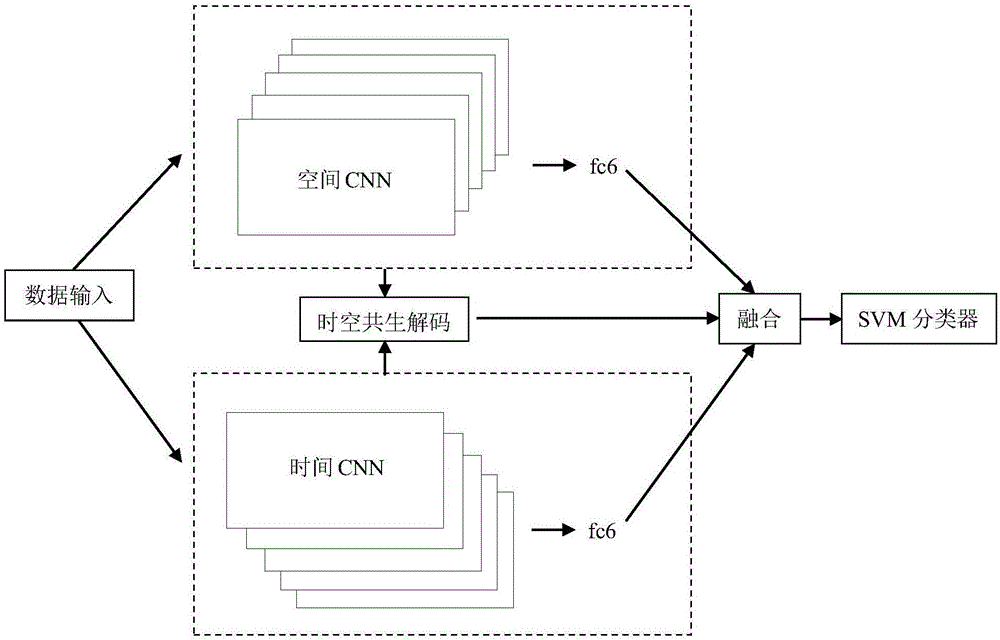

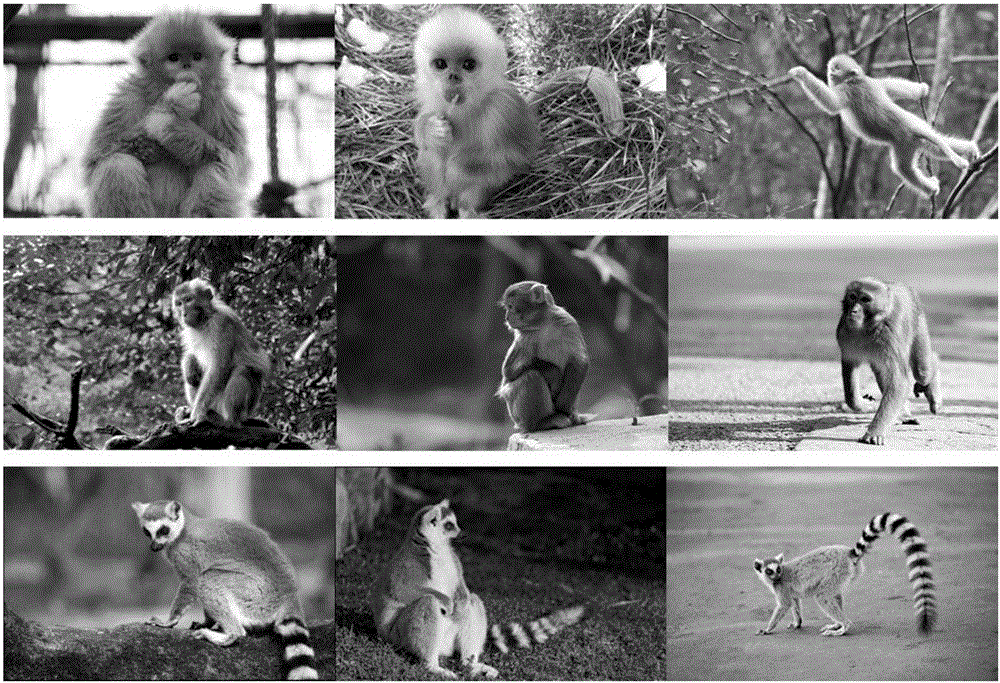

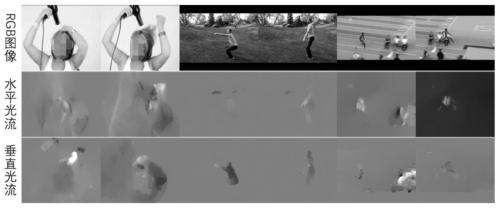

Video image classification method based on time-space co-occurrence double-flow network

Inactive | CN106469314A | Character and pattern recognition, Neural learning methods, Feature vector, Accuracy improvement

The invention provides a video image classification method based on a time-space co-occurrence double-flow network. The method mainly comprises data input, a time-space double-flow network, fusion, and an SVM classifier. The process is as follows: image and optical flow information are input; early fusion is performed by combining a temporal network and a spatial network; and the fused output is used as a feature vector, which is fed into the SVM classifier to obtain the final classification result. The invention adopts an early-fusion double-flow network that combines temporal and spatial information (time-space co-occurrence), uses a monkey video dataset, and draws more frames (that is, more spatial data) from each video, which significantly improves precision; the spatial and temporal information complement each other, and the precision reaches 65.8%. The co-occurrence method forms fewer separated clusters, these clusters are generally more compact, and the temporal information is better utilized.

Owner:SHENZHEN WEITESHI TECH

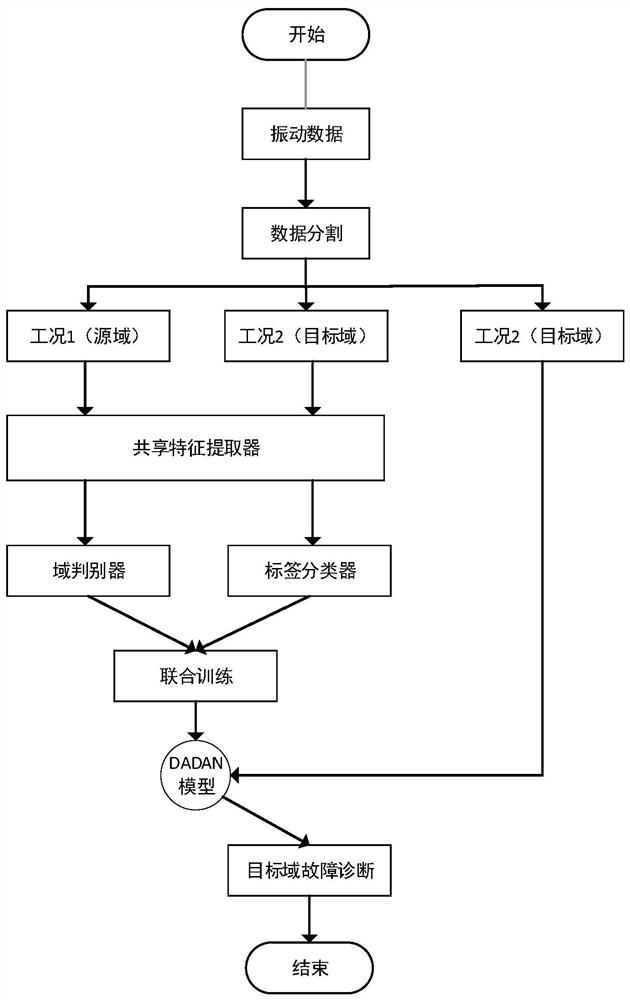

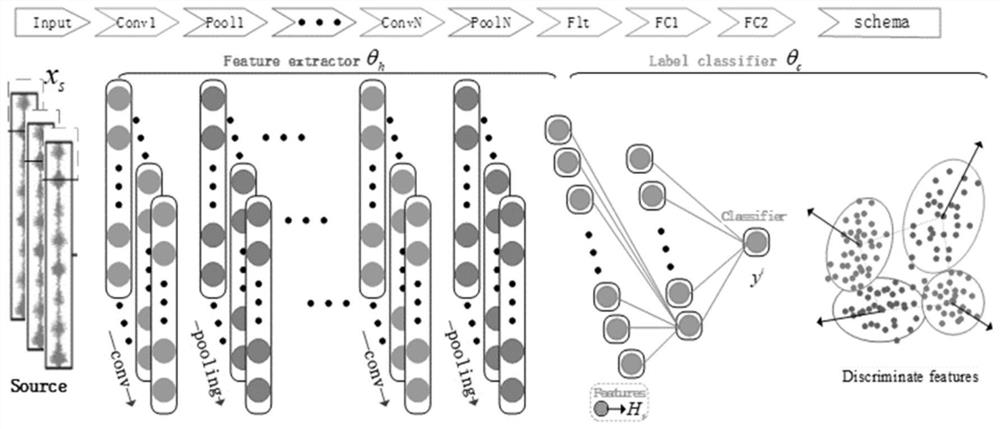

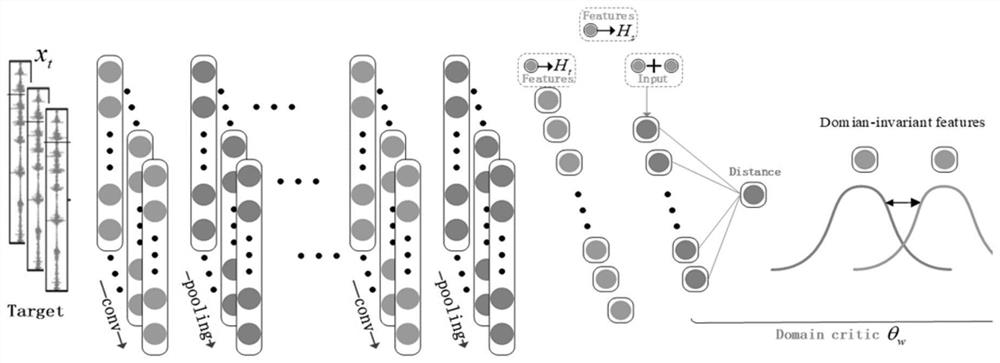

Intelligent fault diagnosis method based on deep adversarial domain self-adaption

Active | CN111898634A | Avoid dependence, Avoid instability, Character and pattern recognition, Neural architectures, Data set, Data information

The invention provides an intelligent fault diagnosis method based on deep adversarial domain adaptation. The method comprises: collecting vibration signals of a rotating machine under different working conditions with a sensor, and segmenting the dataset for each working condition with a moving time window; extracting discriminative features from the dataset; constructing a deep adversarial domain adaptation network that combines a feature extractor with a domain discriminator, and extracting domain-invariant features across the two working conditions; jointly training the two-stream network model with an adversarial training strategy until it converges; and using the trained category classifier to identify the bearing health state of a target-domain dataset that lacks fault labels. The method uses a working condition with abundant data to diagnose faults under a working condition with insufficient data, thereby transferring diagnostic knowledge. Because it is built on a deep learning network, it also removes the dependence on expert knowledge found in traditional diagnosis methods and provides an effective tool for reducing the cost of future intelligent fault diagnosis systems.

Owner:XI AN JIAOTONG UNIV

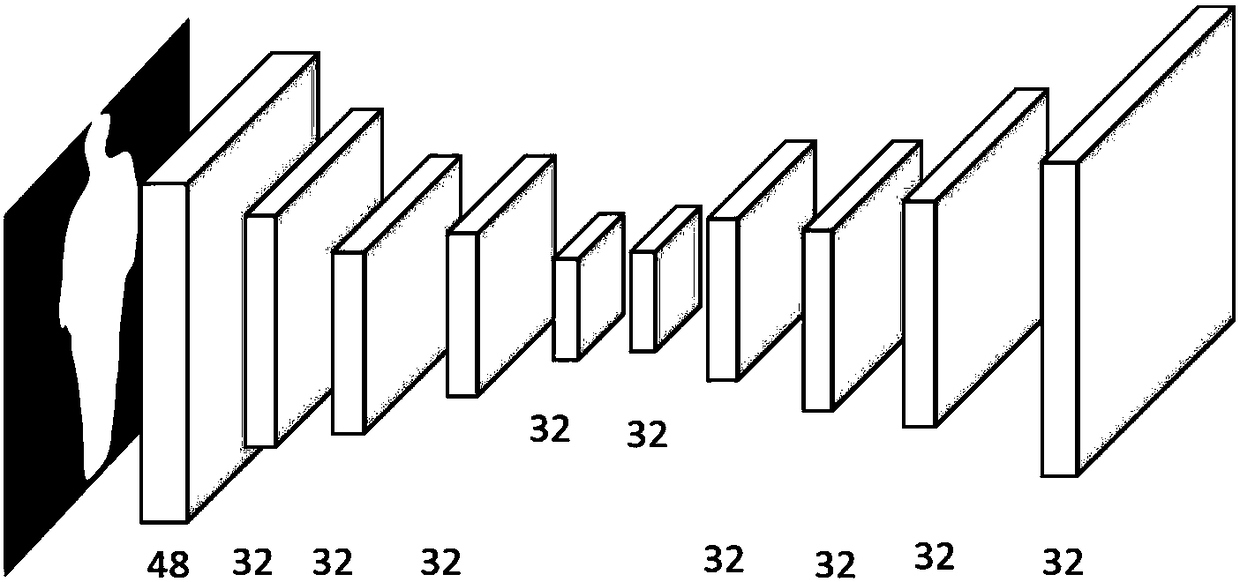

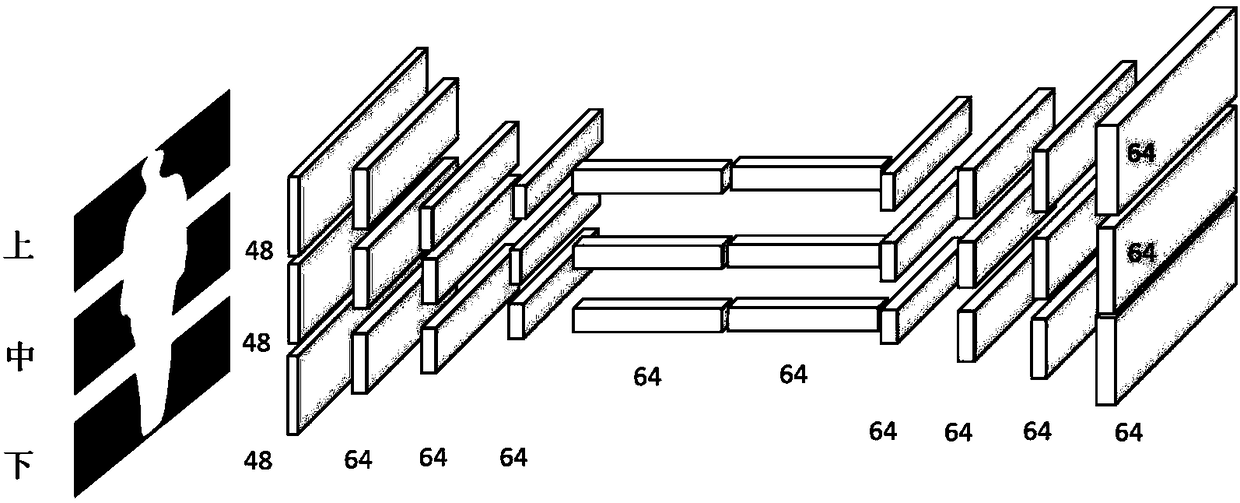

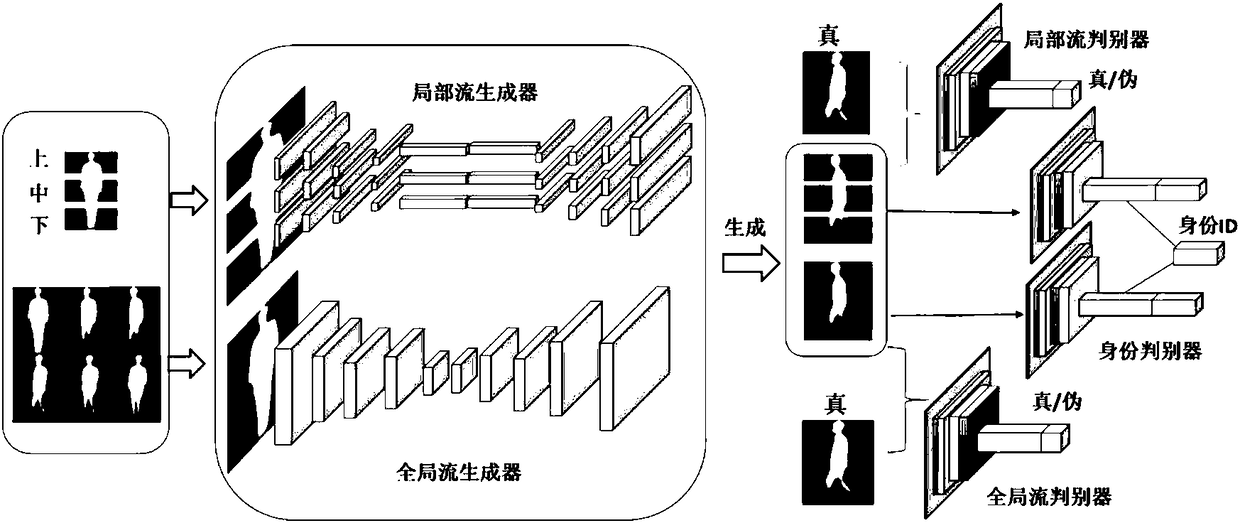

Cross-view gait identification device based on dual-flow generation confrontation network and training method

Active | CN108596026A | Improve recognition accuracy, Improve accuracy, Character and pattern recognition, Pattern recognition, Algorithm

The invention belongs to the field of computer vision and pattern recognition and specifically relates to a cross-view gait identification device based on a dual-flow generative adversarial network, and a training method for it. It aims to solve the problem of low cross-view gait identification accuracy. Specifically, a global-flow generative adversarial network model learns a global-flow gait energy image at a standard angle, and three local-flow generative adversarial network models learn local-flow gait energy images at standard angles. The global-flow model learns global gait features, and the local-flow networks added on top of it learn local gait features; adding pixel-level constraints to the generator of the dual-flow generative adversarial network restores gait details; and fusing the global and local gait features improves gait identification accuracy. The method is strongly robust to variations in the gait images and better solves the cross-view gait identification problem.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

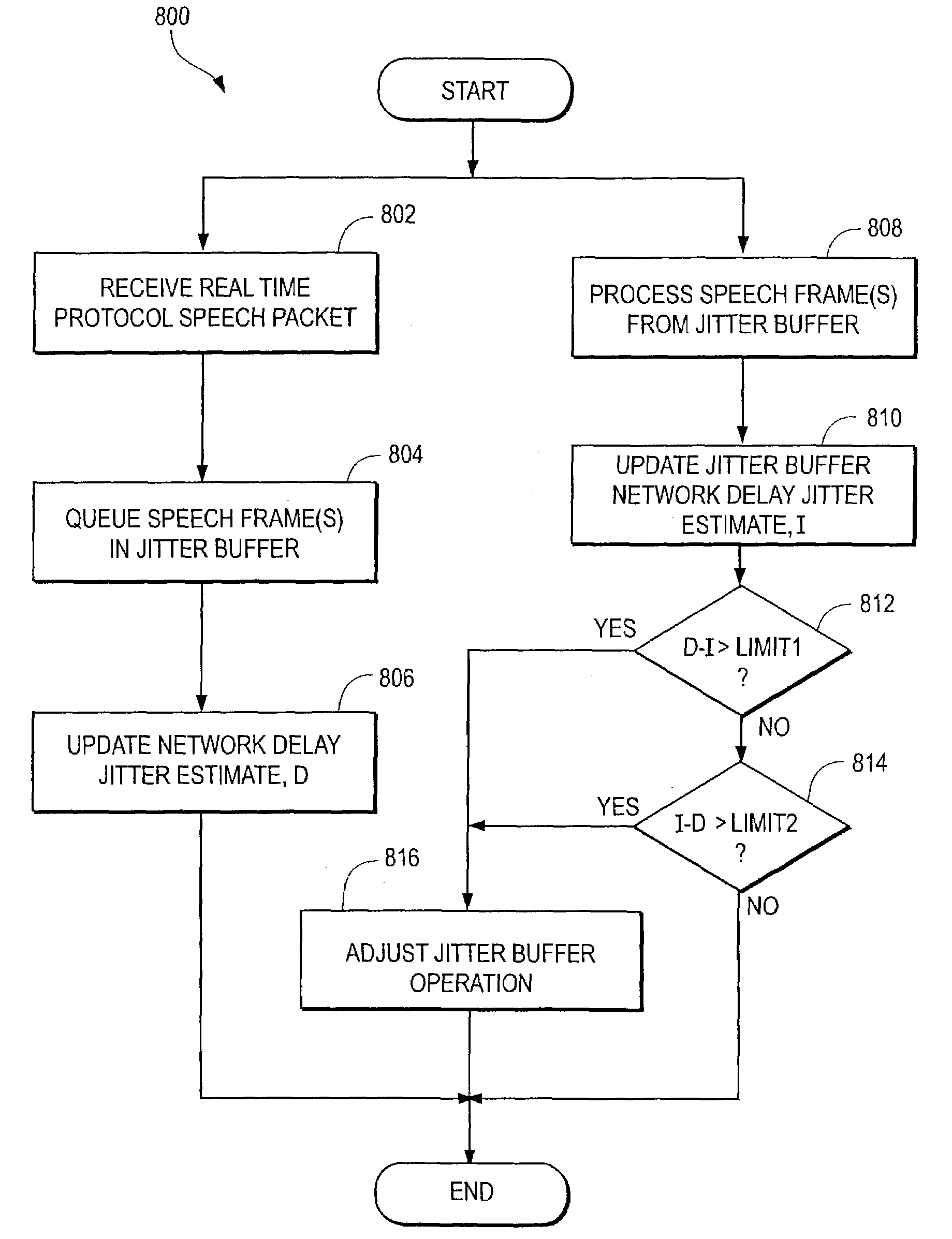

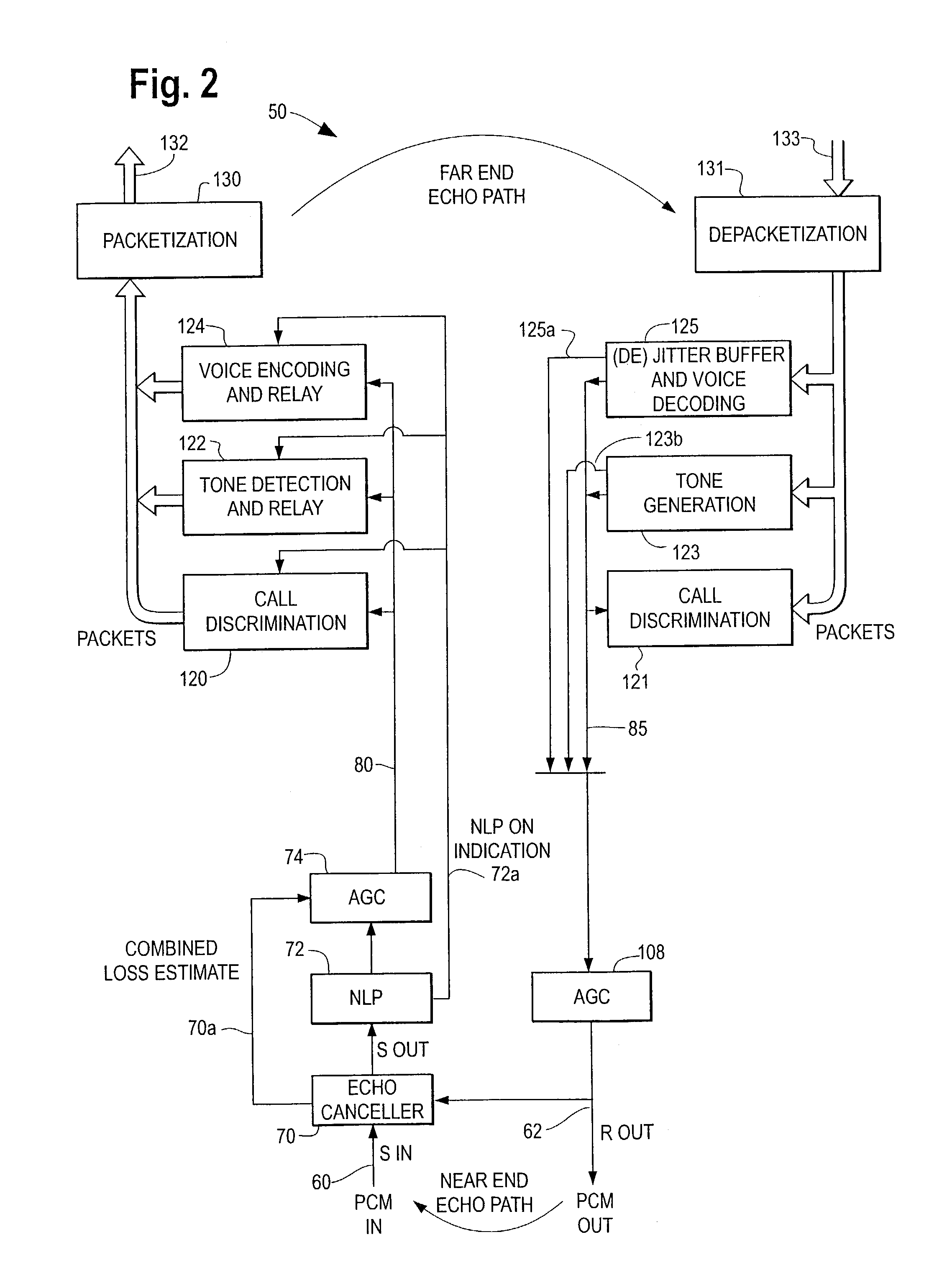

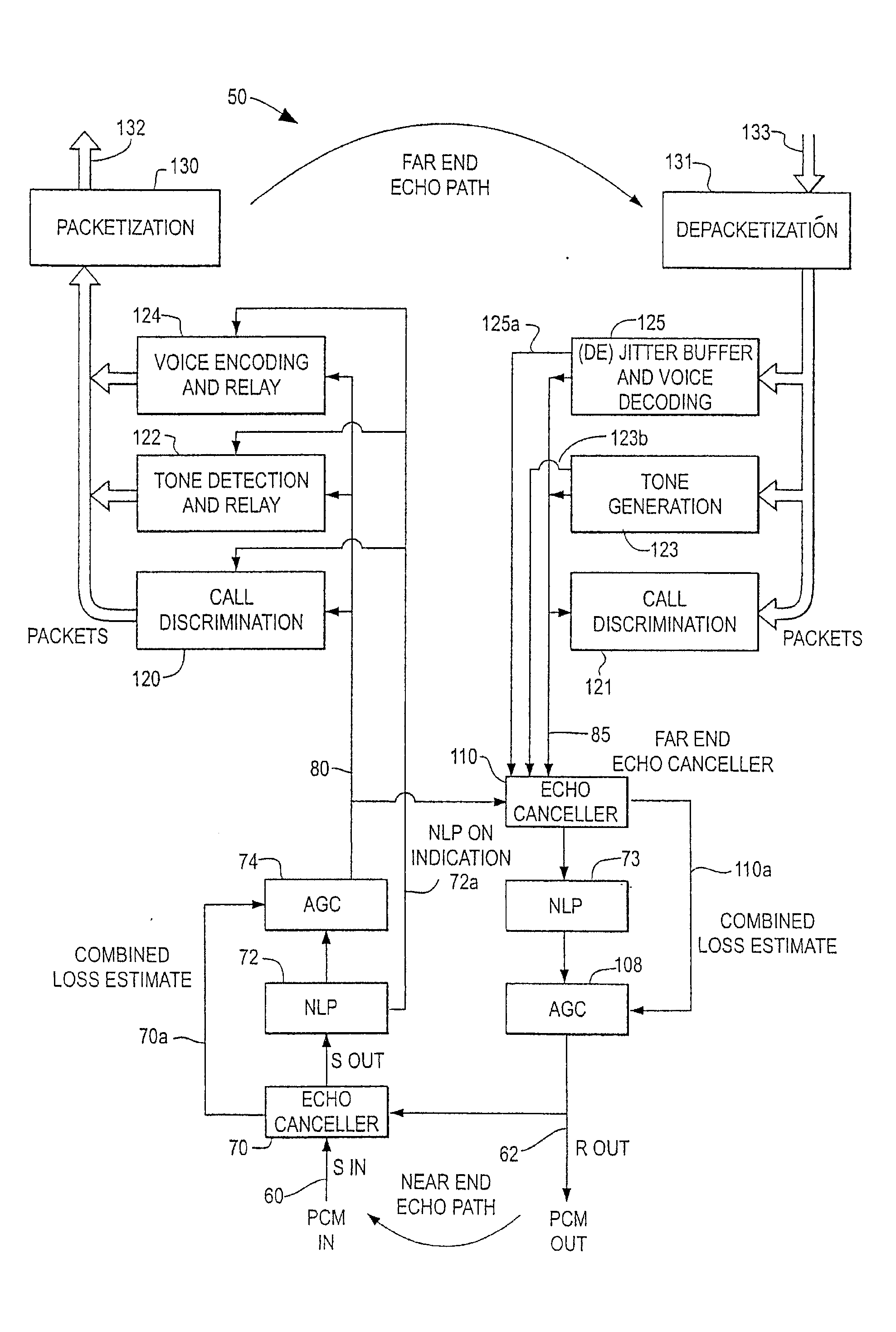

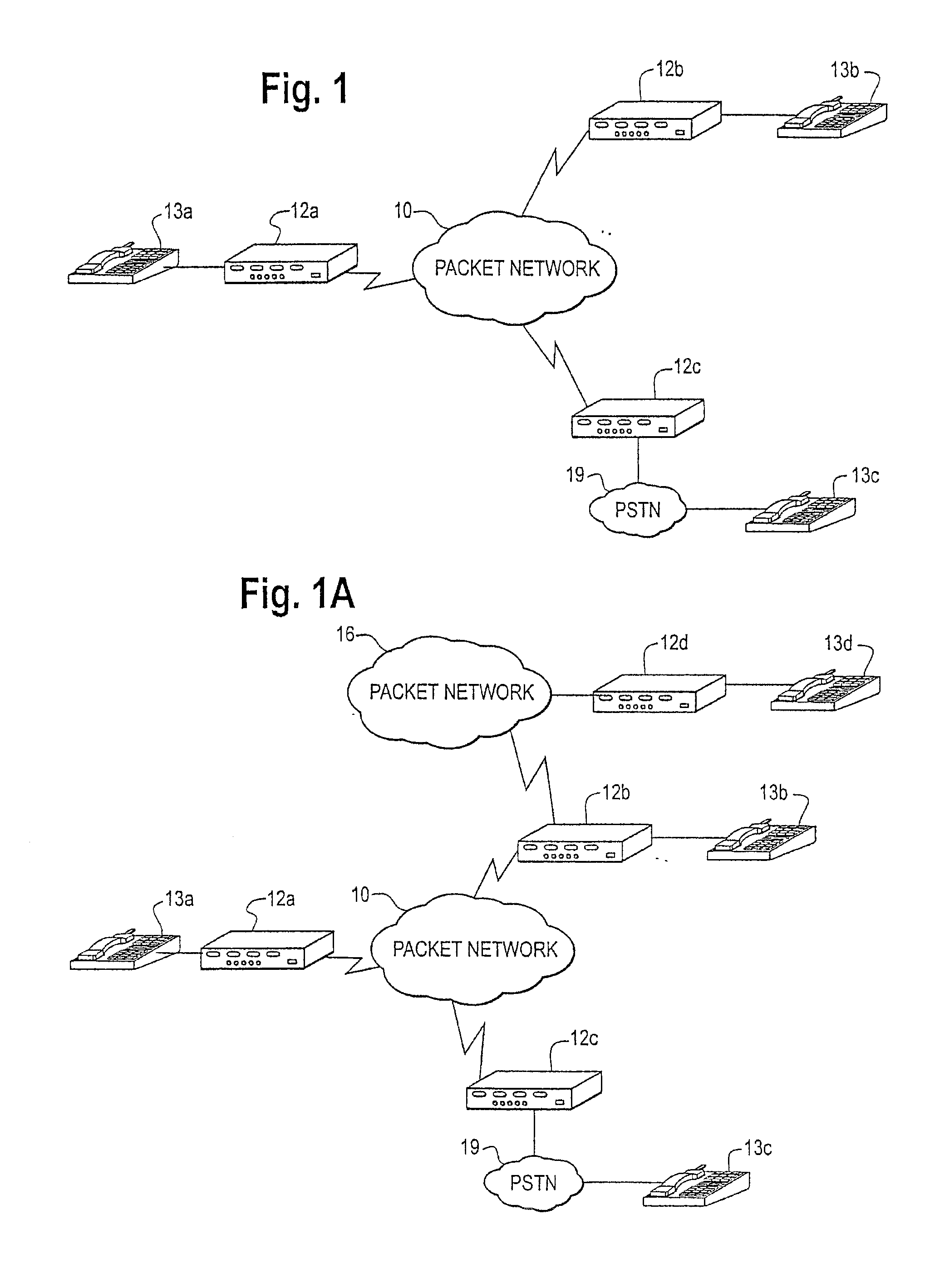

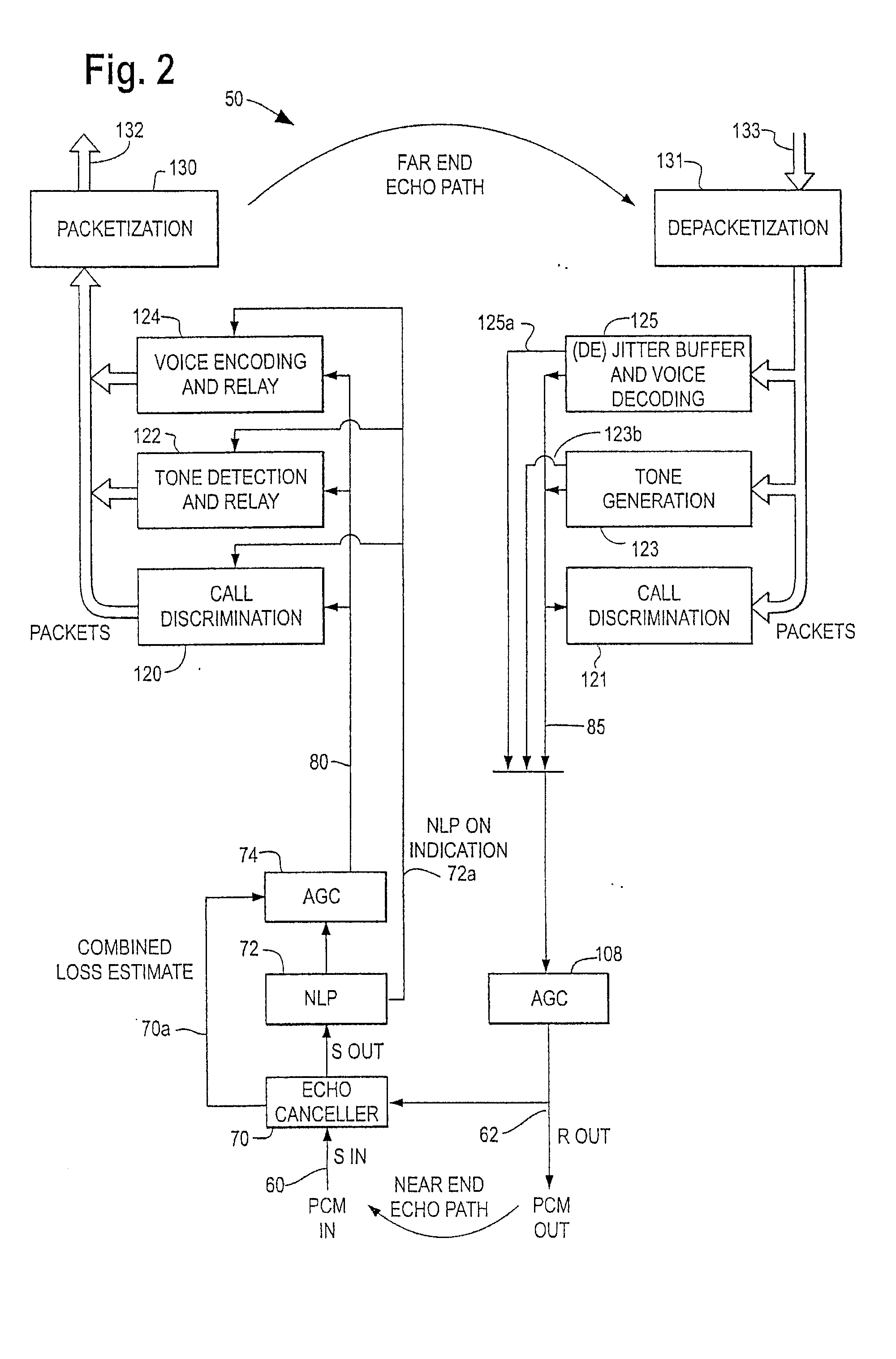

Using RTCP statistics for media system control

Active | US7525918B2 | Improve playback quality, Interconnection arrangements, Error prevention, Real time communication systems, Computer science

Methods for using communication network statistics in the operation of a real-time communication system are disclosed. Embodiments of the invention may provide improved playback of real-time media streams by incorporating network statistics that are typically calculated by some transport protocols into the algorithms used for playing back the media stream. An additional aspect of the present invention may include machine-readable storage having stored thereon a computer program having a plurality of code sections executable by a machine for causing the machine to perform the foregoing.

Owner:AVAGO TECH INT SALES PTE LTD
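
RTCP receiver reports carry an interarrival jitter estimate defined in RFC 3550: for consecutive packets i-1 and i, D = (R_i - R_(i-1)) - (S_i - S_(i-1)) and J = J + (|D| - J) / 16, where S is the RTP timestamp and R the arrival time, both in the same units. The sketch below computes that estimate. Because the abstract does not say how the statistics feed the playback algorithm, `playout_delay` (a base delay plus k times the jitter) is a hypothetical example rule, not the patented method.

```python
from typing import Iterable, Optional, Tuple


def interarrival_jitter(packets: Iterable[Tuple[float, float]]) -> float:
    """RFC 3550 interarrival jitter estimate.

    `packets` yields (rtp_timestamp, arrival_time) pairs in arrival order,
    both expressed in the same units (e.g. RTP clock ticks)."""
    jitter = 0.0
    prev: Optional[Tuple[float, float]] = None
    for ts, arrival in packets:
        if prev is not None:
            # difference in relative transit time between consecutive packets
            d = (arrival - prev[1]) - (ts - prev[0])
            jitter += (abs(d) - jitter) / 16.0
        prev = (ts, arrival)
    return jitter


def playout_delay(jitter: float, base: float = 0.0, k: float = 4.0) -> float:
    """Hypothetical rule: size the jitter buffer as a multiple of the reported jitter."""
    return base + k * jitter
```

Packets that arrive exactly as spaced as their timestamps give zero jitter. A single packet delayed by 16 ticks relative to its predecessor raises the estimate to 1 tick.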

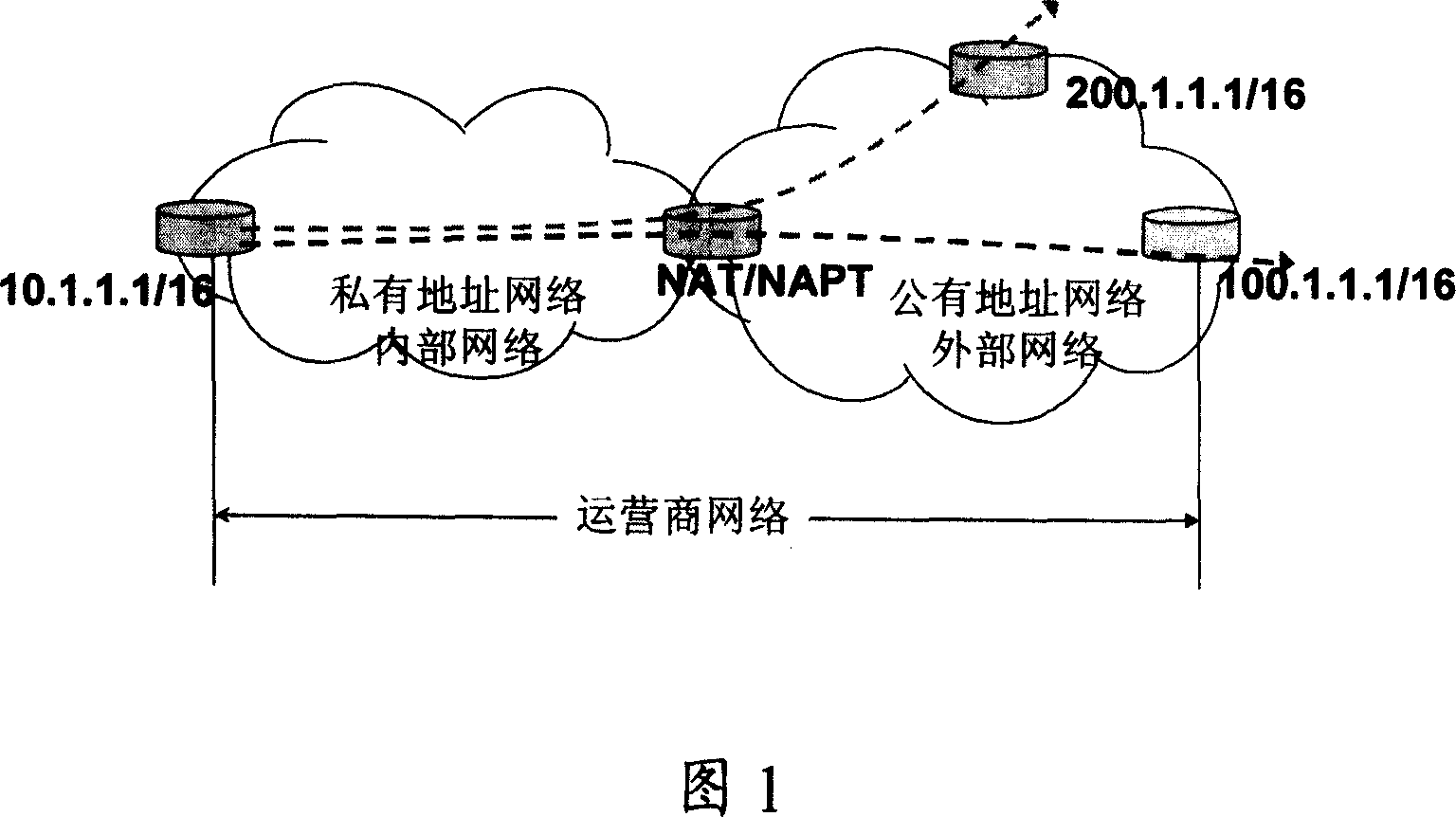

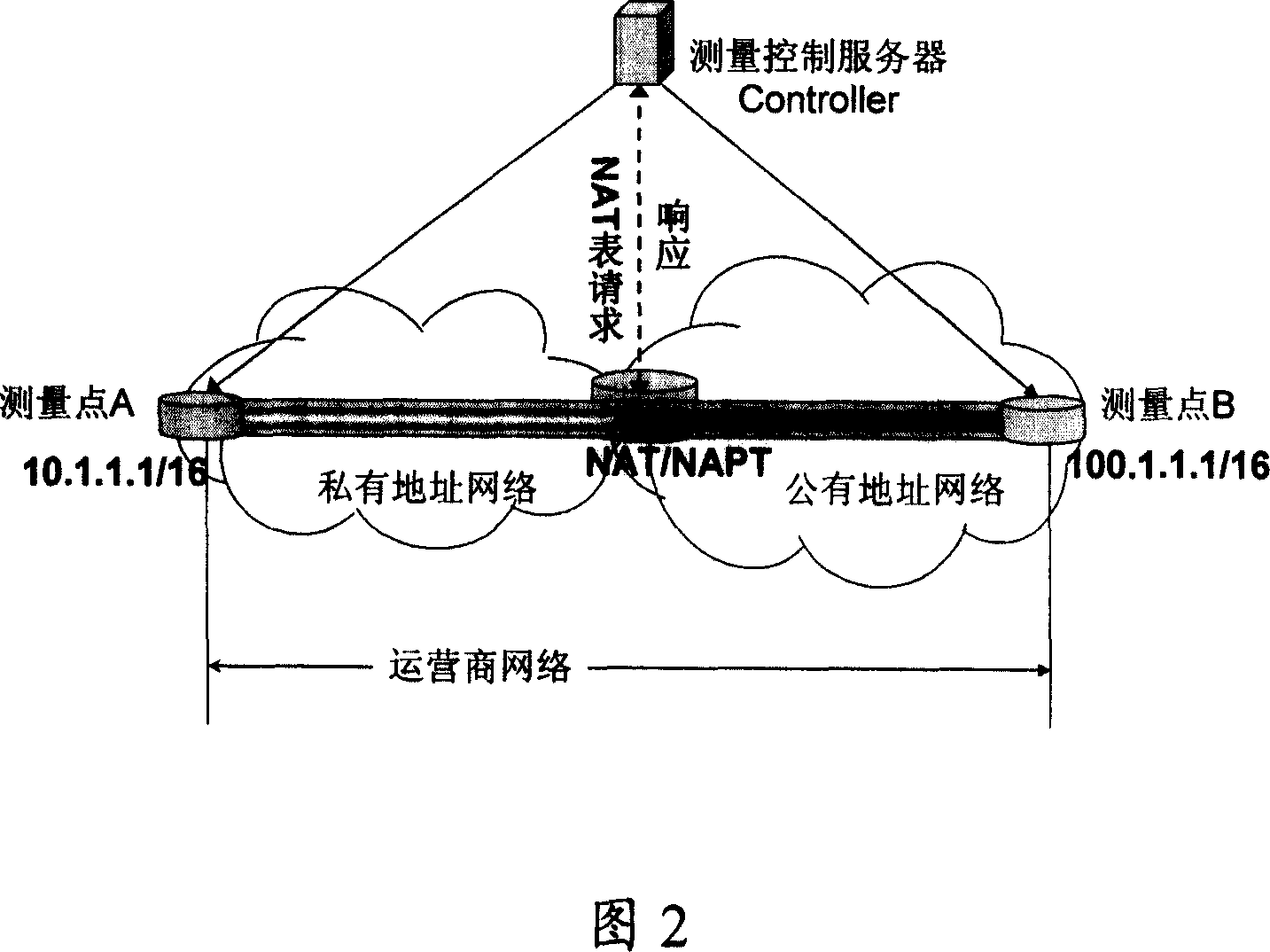

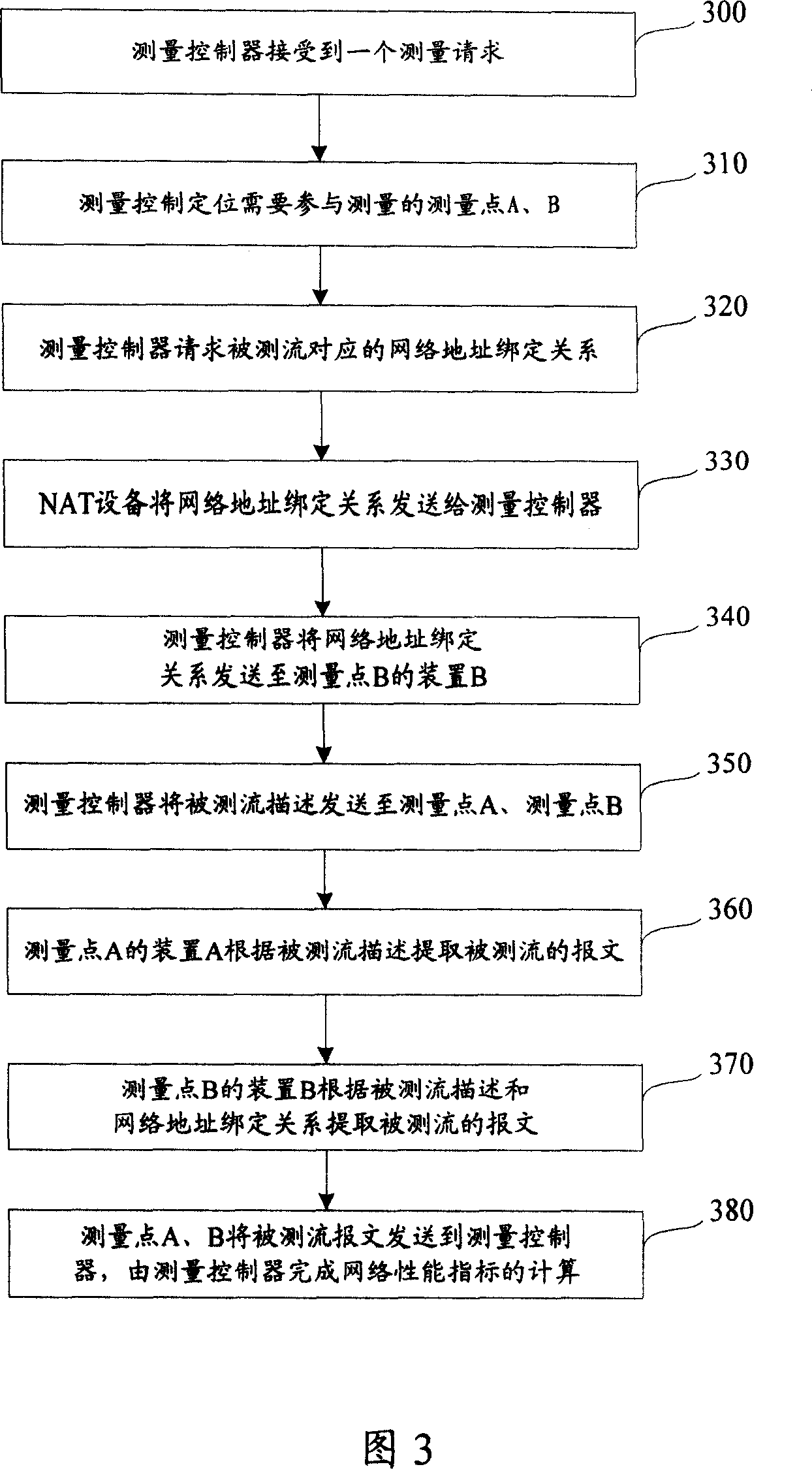

A network performance measurement method and system

Active | CN101056218A | Fully consider the impact, Radio transmission for post communication, Network connections, Measurement point, Network addressing

The invention discloses a network performance measurement method that solves a problem of existing technologies: because a network address translation device changes the network address, the same measured stream cannot be identified on both sides of the device, so the network performance of that stream cannot be measured. In this method, the network address binding relationship of the measured stream is obtained from the network address translation device, and the measured stream description and the binding relationship are configured on the measurement points. Each measurement point extracts the target packets of the measured stream, uses the binding relationship to generate consistent message identifiers for the same packet at different measurement points, and generates and submits message summary data according to the stream identifier and message identifier. The relevant network performance indicators are then calculated from the message summary data submitted by the measurement points. The invention also discloses a network system.

Owner:HUAWEI TECH CO LTD
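
The abstract does not specify which packet fields make the message identifier consistent on both sides of the translation device. The sketch below assumes three things: the identifier is a hash of the payload (which address translation leaves intact), the binding maps translated flow keys back to the original ones, and the measurement points' clocks are synchronized. All names are hypothetical. A collector joins the per-point summaries on (stream identifier, message identifier) to compute one-way delay and loss.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

FlowKey = Tuple[str, int, str, int]        # (src_ip, src_port, dst_ip, dst_port)
Observation = Tuple[FlowKey, bytes, float]  # (flow key as seen locally, payload, timestamp)


def packet_id(payload: bytes) -> str:
    """Hypothetical identifier built only from bytes NAT leaves unchanged (the payload).

    Identical payloads in one flow would collide; a real design needs more fields."""
    return hashlib.sha256(payload).hexdigest()[:16]


def summarize(observations: List[Observation],
              binding: Dict[FlowKey, FlowKey]) -> Dict[Tuple[FlowKey, str], float]:
    """Per-point summary keyed by (stream id, message id); `binding` maps a
    translated flow key back to its original key so both sides agree."""
    return {(binding.get(key, key), packet_id(payload)): ts
            for key, payload, ts in observations}


def delay_and_loss(upstream: Dict[Tuple[FlowKey, str], float],
                   downstream: Dict[Tuple[FlowKey, str], float]) -> Tuple[Optional[float], float]:
    """Mean one-way delay and loss ratio, assuming synchronized clocks."""
    matched = [k for k in upstream if k in downstream]
    delays = [downstream[k] - upstream[k] for k in matched]
    mean_delay = sum(delays) / len(delays) if delays else None
    loss = 1 - len(matched) / len(upstream) if upstream else 0.0
    return mean_delay, loss
```

Without the binding lookup, the upstream and downstream summaries would carry different flow keys for the same packets and nothing would match, which is the problem the abstract sets out to solve.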

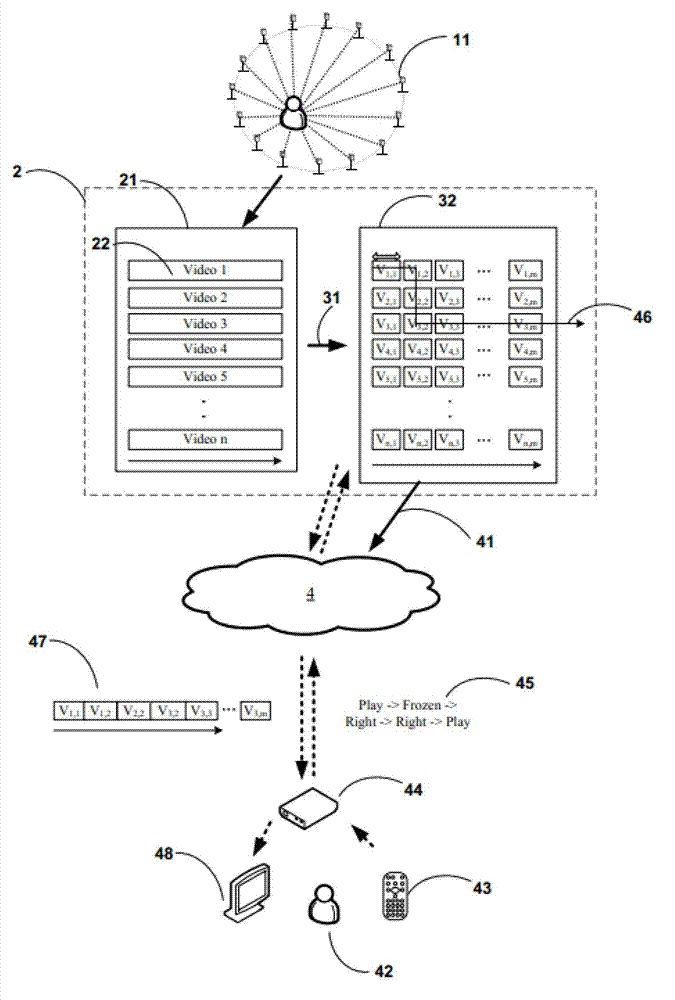

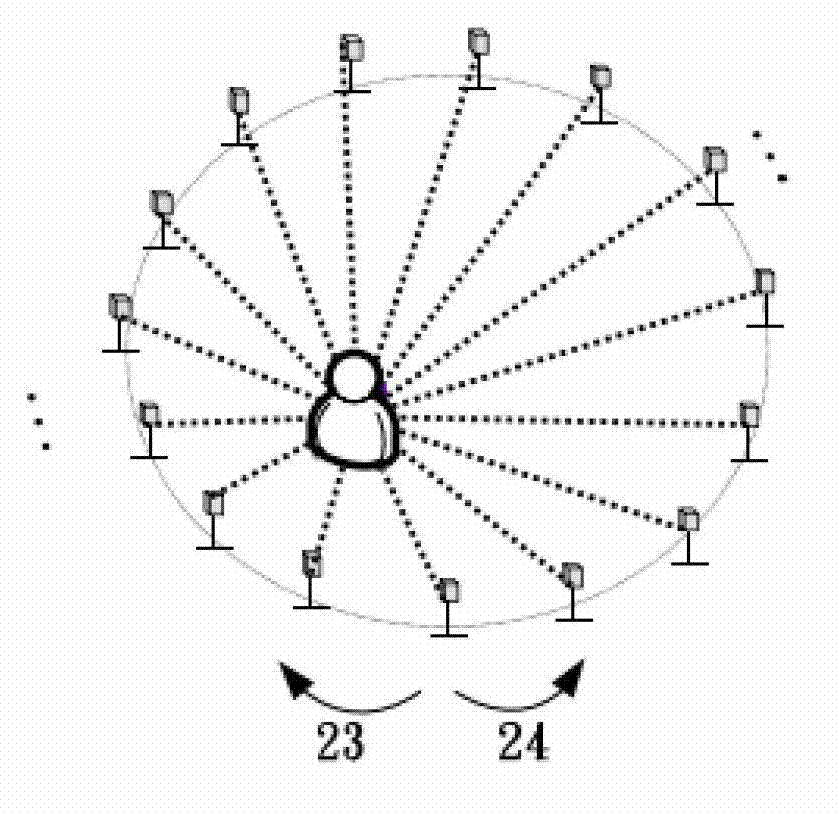

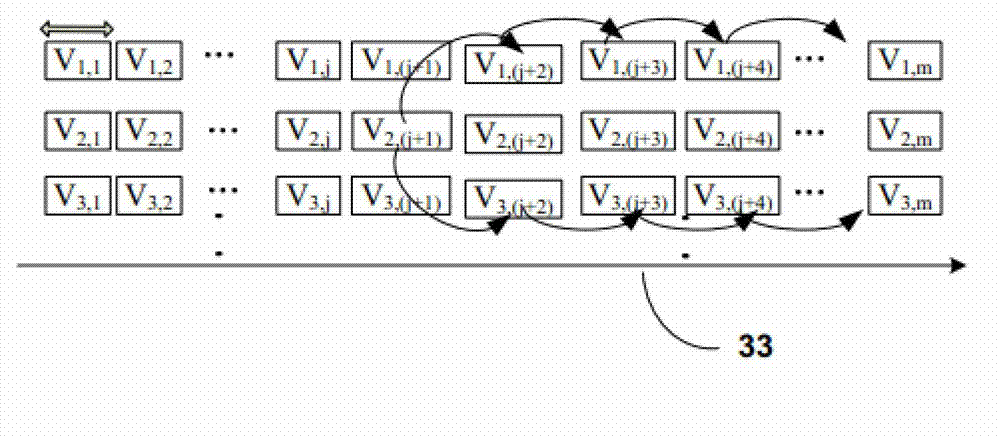

Video and audio streaming method of multi-angle interactive TV

Inactive | CN102833584A | Interaction reached, Diversified service, Selective content distribution, Video delivery, Cable television

A video and audio streaming method for multi-angle interactive TV transmits video and audio from different angles according to users' needs. Using an image cutting method, the method synchronizes a plurality of cameras that capture images from different angles, cuts each group of consecutive images into small videos, and then transmits the consecutive small videos of a specific angle to clients through streaming network transmission, according to each client's viewing and angle-switching needs. The clients receive the videos through a hardware player and select the viewing angle. The method provides interaction between the clients and the TV and lets users choose their viewing angle without additional network load, improving the quality and richness of the viewing experience.

Owner:CHUNGHWA TELECOM CO LTD

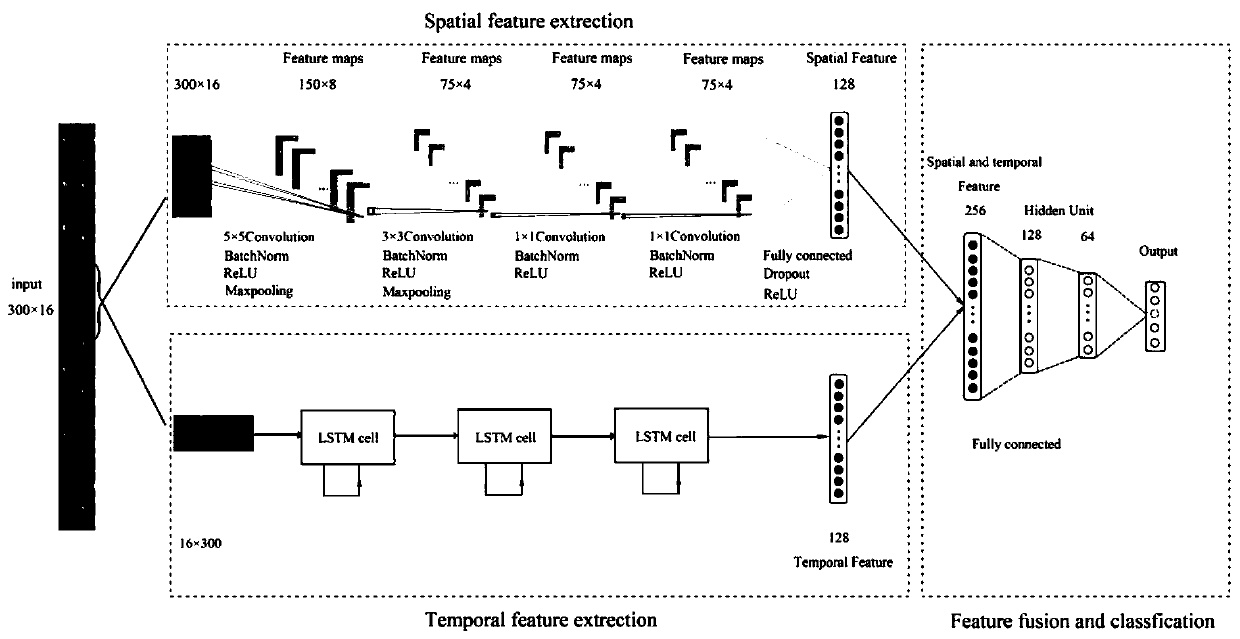

Electromyographic signal gesture recognition method based on double-flow network

Pending | CN110658915A | Improve plasticity, Easy to identify, Input/output for user-computer interaction, Neural architectures, Acquisition apparatus, Algorithm

The invention discloses an electromyographic signal gesture recognition method based on a double-flow network, comprising the following steps: 1) collecting electromyographic signals of various gestures from multiple subjects wearing a 16-channel acquisition device, where each gesture action lasts 12 seconds; extracting 10 seconds of steady-state data; preprocessing the data; and selecting a 300 ms time window so that each electromyogram frame is 300 × 16, thereby constructing a training set; 2) constructing a double-flow network model composed of three parts: a multi-layer CNN that extracts spatial features, a multi-layer LSTM that learns temporal features, and a feature merging layer that performs feature fusion; 3) training the double-flow network model by gradient descent with the Adam optimizer until convergence; and 4) performing gesture recognition on the arm sEMG with the trained double-flow network model.

Owner:ZHEJIANG UNIV OF TECH
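
Step 1 implies a sampling rate of about 1 kHz, since a 300 ms window yields 300 samples per channel. The sketch below shows the windowing under three assumptions that the abstract does not confirm: that sampling rate, the central 10 s of each 12 s recording as the steady-state segment, and non-overlapping windows. The function name is hypothetical.

```python
from typing import List, Sequence


def make_frames(recording: Sequence[Sequence[float]], fs: int = 1000,
                steady_s: float = 10.0, window_ms: int = 300,
                step_ms: int = 300) -> List[List[Sequence[float]]]:
    """Hypothetical preprocessing: cut one gesture recording (samples x channels)
    into window_ms frames taken from its steady-state segment."""
    total = len(recording)
    steady = int(steady_s * fs)
    start = (total - steady) // 2          # assumption: keep the central steady-state segment
    segment = recording[start:start + steady]
    win = fs * window_ms // 1000           # 300 samples at 1 kHz
    step = fs * step_ms // 1000            # step == win gives non-overlapping frames
    return [list(segment[i:i + win]) for i in range(0, len(segment) - win + 1, step)]
```

For a 12 s, 16-channel recording this yields 33 frames of 300 × 16 samples; a smaller `step_ms` would produce overlapping frames and a larger training set.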

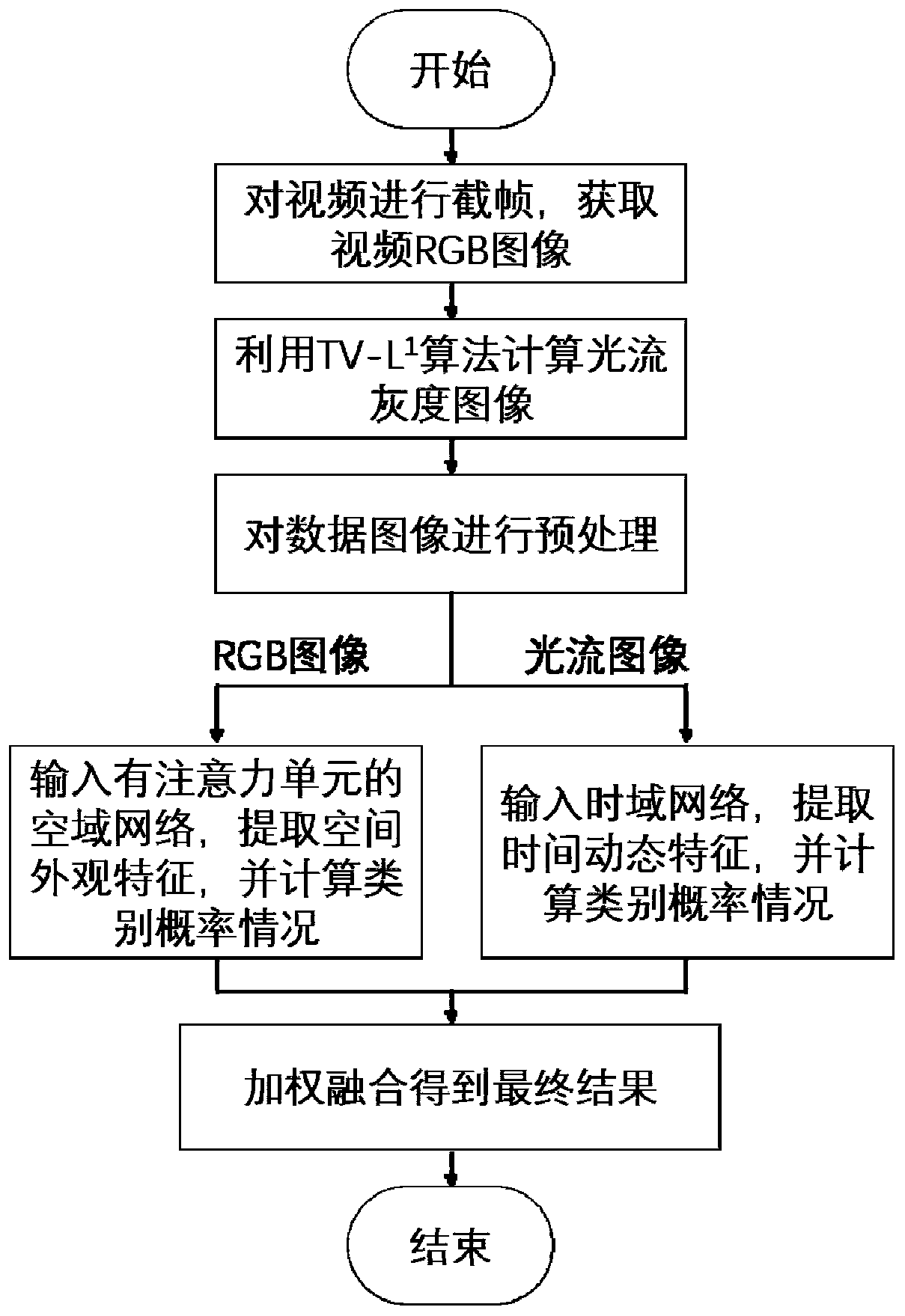

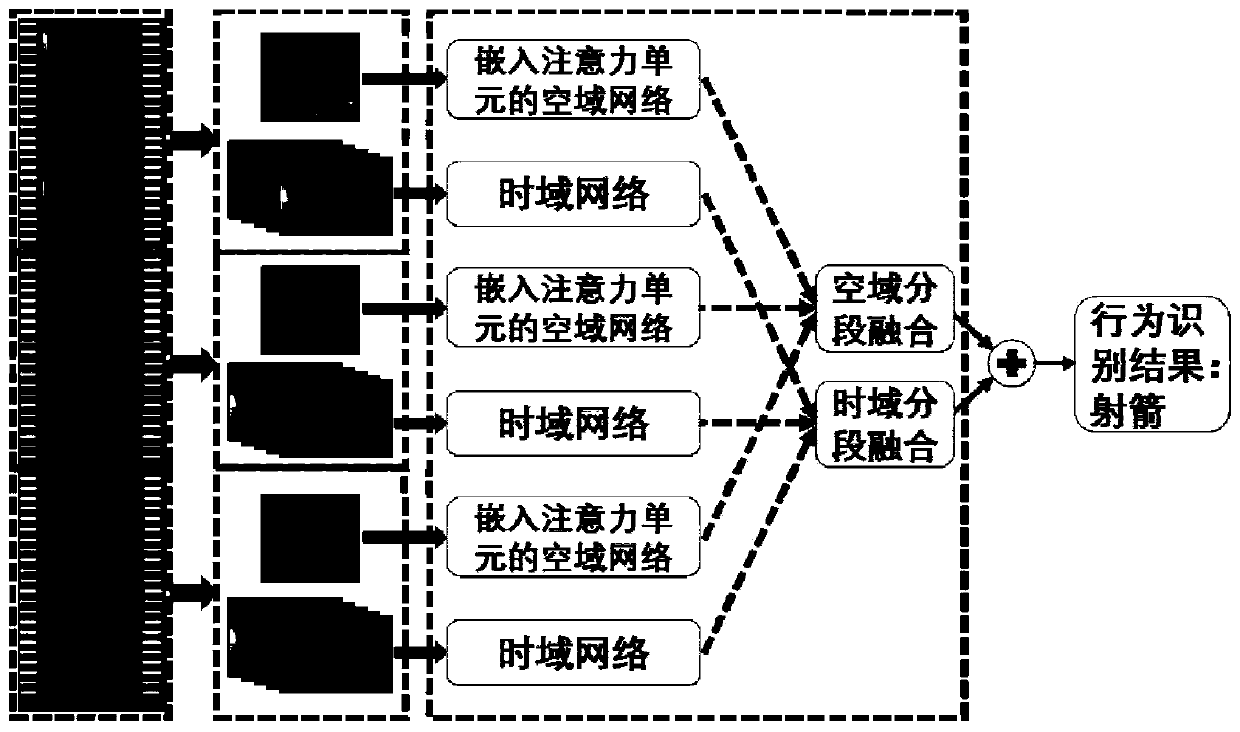

Behavior recognition method and system based on attention mechanism double-flow network

Inactive | CN111462183A | Take advantage of, Improve the accuracy of behavior recognition, Image enhancement, Image analysis, Time domain, RGB image

Owner:SHANDONG UNIV

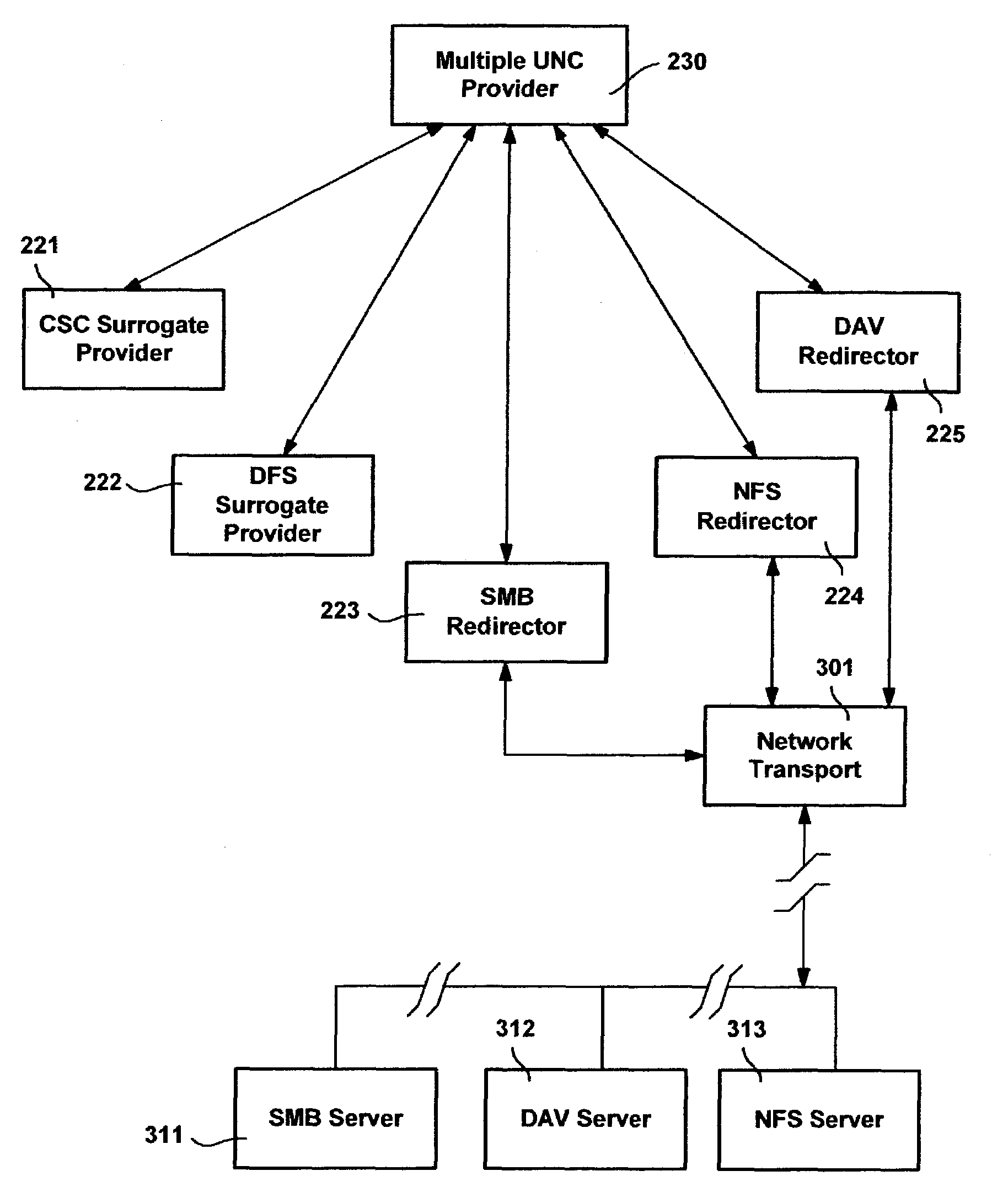

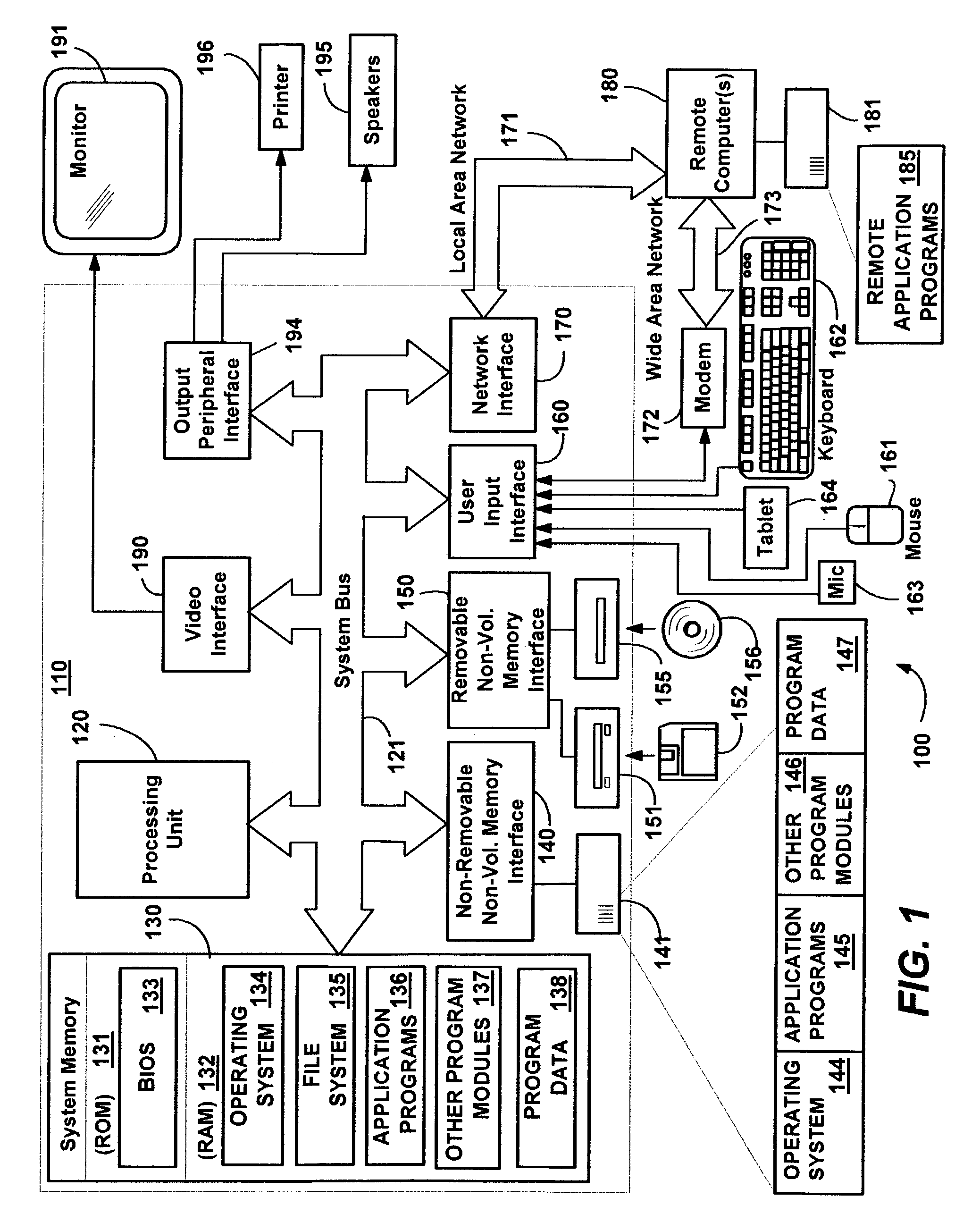

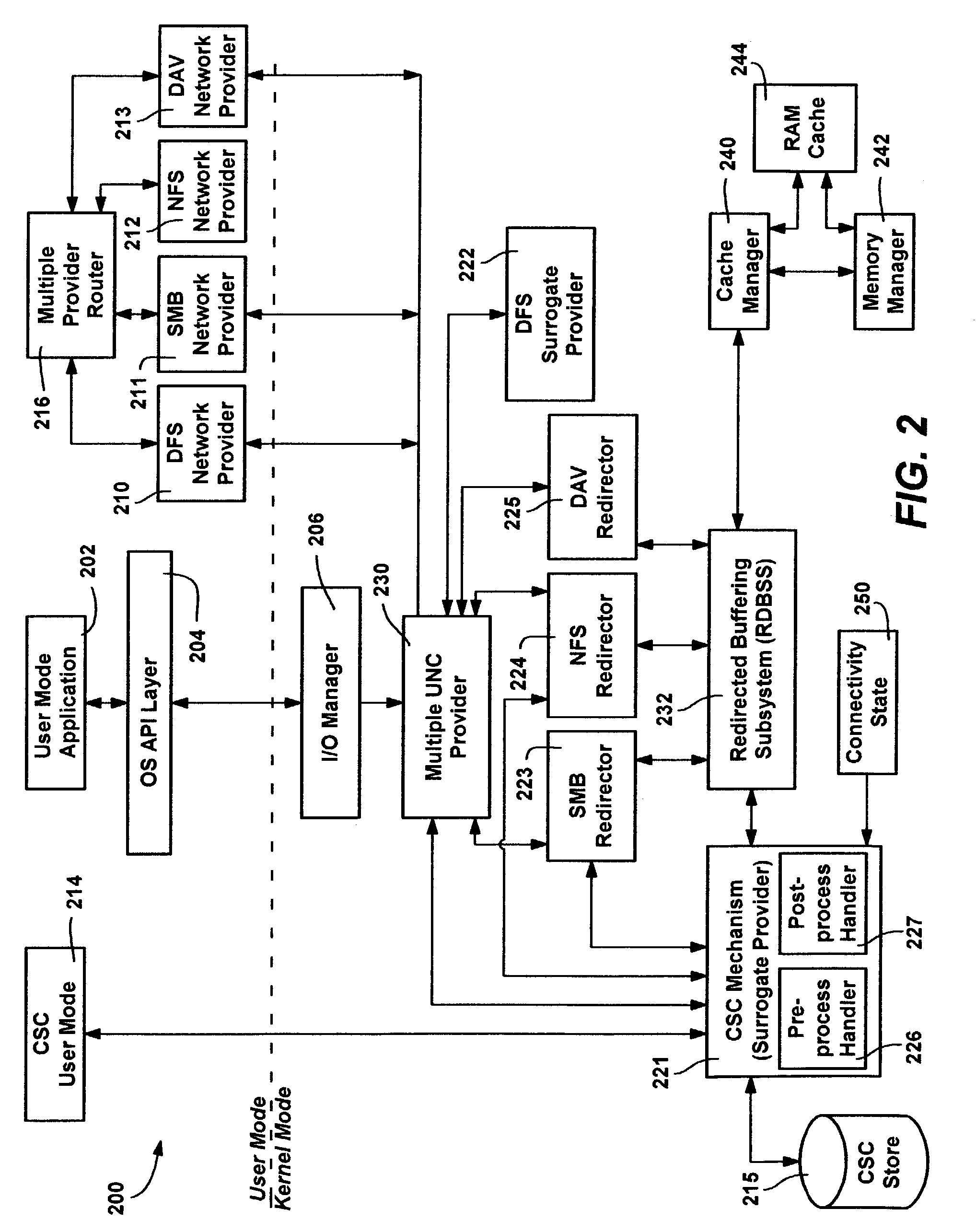

Protocol-independent client-side caching system and method

Inactive | US7349943B2 | Digital data information retrieval, Multiple digital computer combinations, Network redirector, Network booting

A system and method that automatically and transparently handle client-side caching of network file data, independent of any remote file handling protocol. A protocol-independent client-side caching mechanism is inserted as a service that handles file-related requests directed to a network, and attempts to satisfy the requests via a client-side caching persistent store. By way of pre-process and post-process calls on a file create request, the caching mechanism creates file-related data structures, and overwrites the information in those structures that a buffering service uses to call back to a network redirector, whereby the client-side caching mechanism inserts itself into the communication flow between the network redirector and the buffering service. Once in the flow of communication, network-directed file read and write requests may be transparently handled by the client-side caching mechanism when appropriate, yet the redirector may be instructed to communicate with the server when needed to satisfy the request.

Owner:MICROSOFT TECH LICENSING LLC

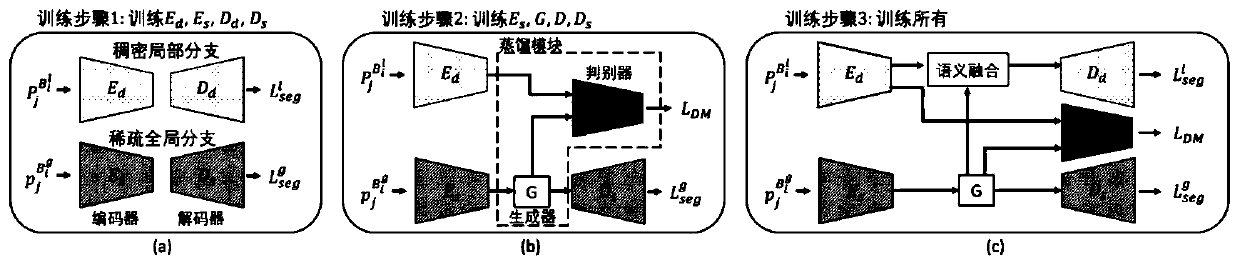

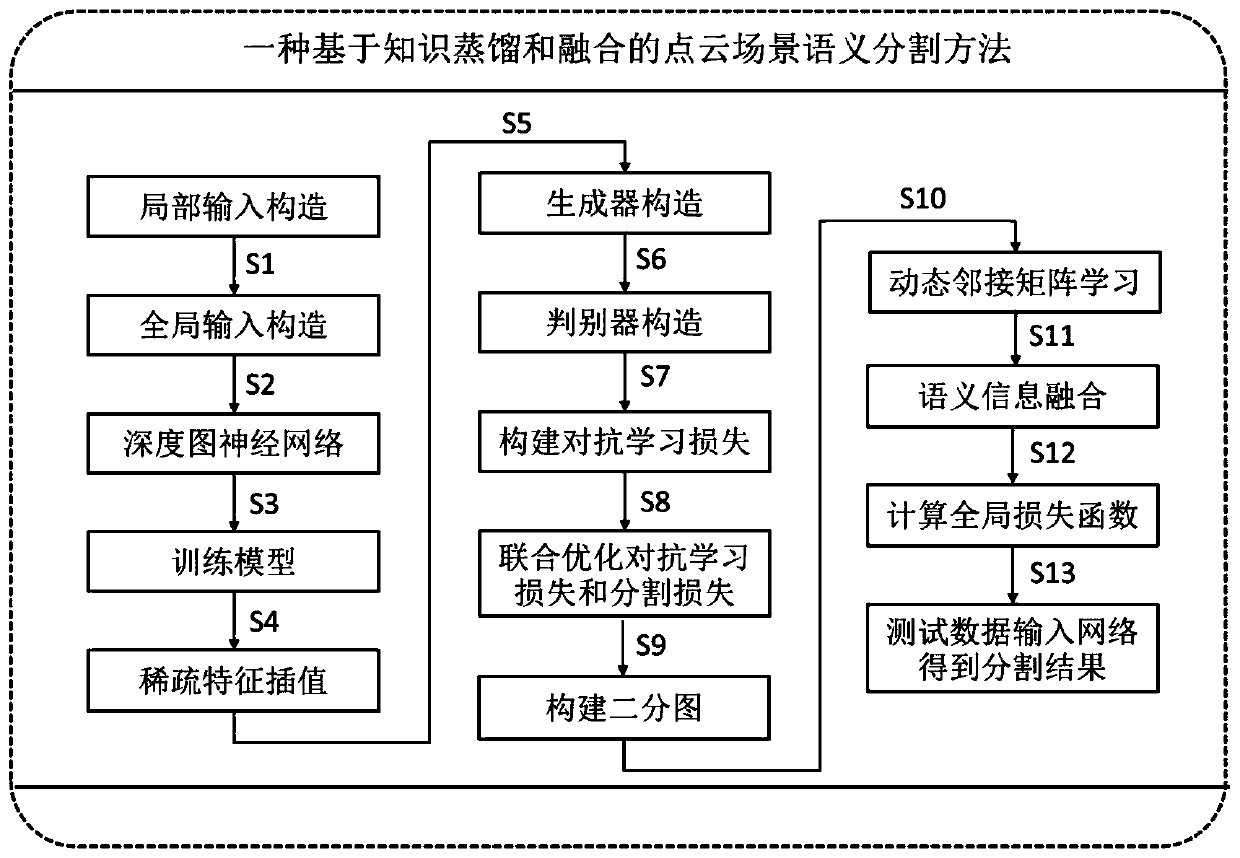

Point cloud scene segmentation method based on knowledge distillation and semantic fusion

Active | CN111462137A | Avoid increasing the amount of calculation, Heavy calculation, Image enhancement, Image analysis, Algorithm, Data mining

The invention provides a point cloud scene segmentation method based on knowledge distillation and semantic fusion. First, a double-flow network framework is constructed that comprises a dense local branch and a sparse global branch; the input of the dense local branch is the dense point cloud of a local region within the global scene, and the input of the sparse global branch is the sampled point cloud of the global scene. Second, a distillation module for irregular data is designed that performs knowledge distillation with a Euclidean distance loss and an adversarial learning loss, transferring local dense detail information to the sparse global branch. Finally, a dynamic graph context semantic information fusion module is designed to fuse the global features with the detail-enhanced local features. The method makes full, complementary use of the rich detail information of the local scene and the rich contextual semantic information of the global scene, avoids increasing the amount of computation, and can effectively improve point cloud segmentation results for large-scale indoor scenes.

Owner:ZHONGKE ARTIFICIAL INTELLIGENCE INNOVATION TECHNOLOGY RESEARCH INSTITUTE (QINGDAO) CO LTD (中科人工智能创新技术研究院(青岛)有限公司)

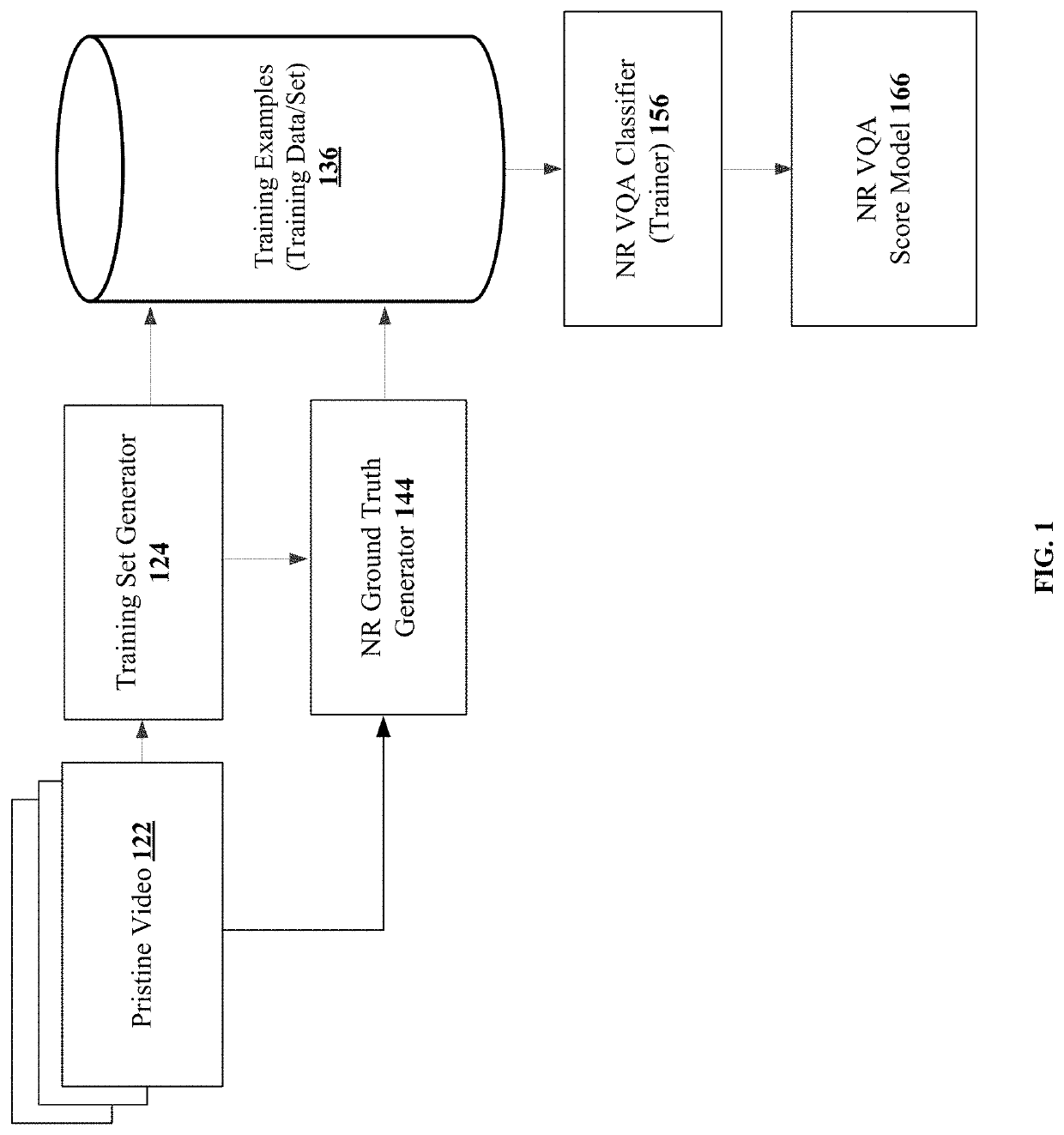

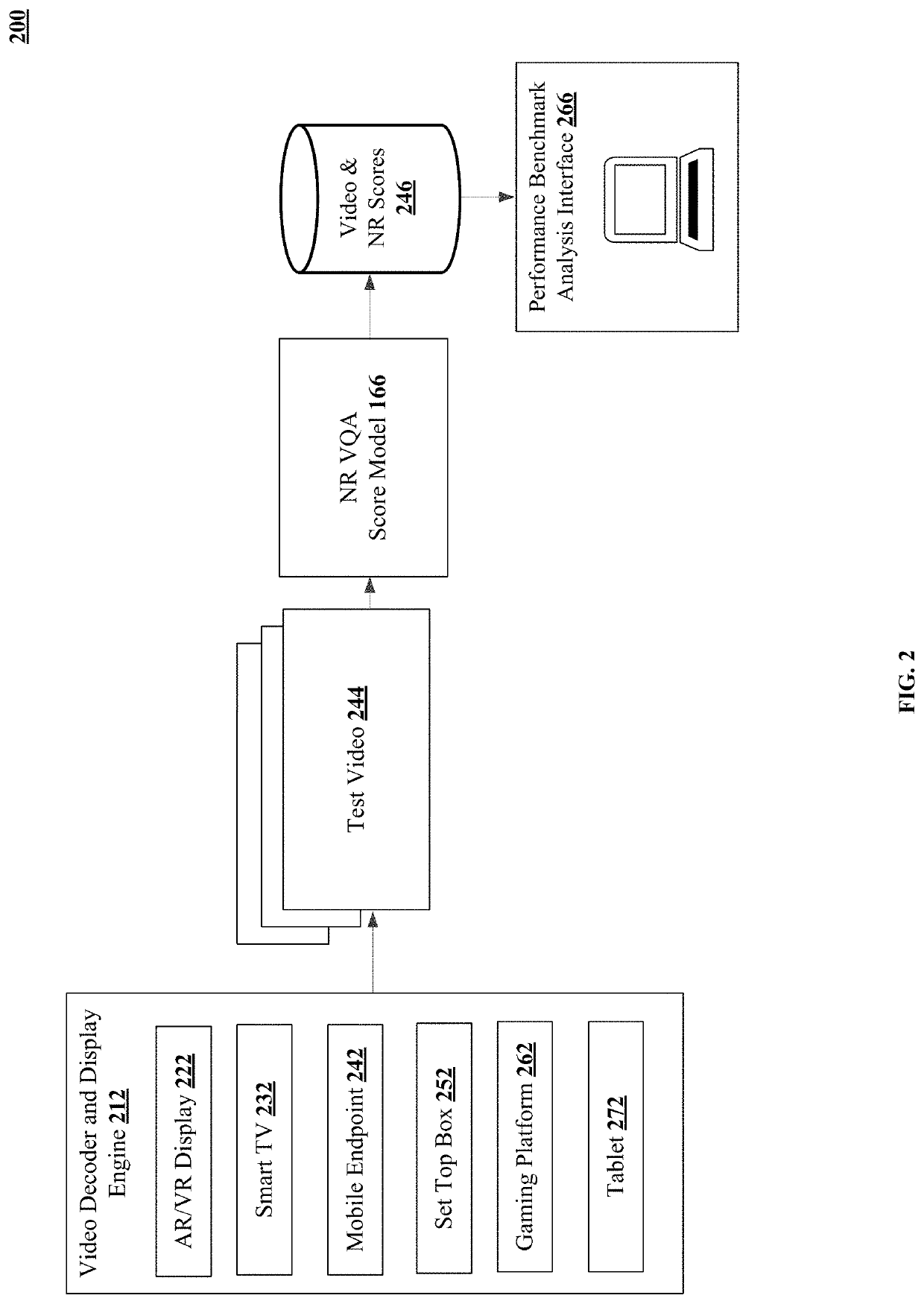

Training an encrypted video stream network scoring system with non-reference video scores

Active | US20200322694A1 | Accurate monitoring, Evaluate the impact of throttling video services, Selective content distribution, Balancing network, Customer relationship management

At least three uses of the technology disclosed are immediately recognized. First, a video stream classifier can be trained that has multiple uses. Second, a trained video stream classifier can be applied to monitor a live network. It can be extended by the network provider to customer relations management or to controlling video bandwidth. Third, a trained video stream classifier can be used to infer bit rate switching of codecs used by video sources and content providers. Bit rate switching and resulting video quality scores can be used to balance network loads and to balance quality of experience for users, across video sources. Balancing based on bit rate switching and resulting video quality scores also can be used when resolving network contention.

Owner:SPIRENT COMM

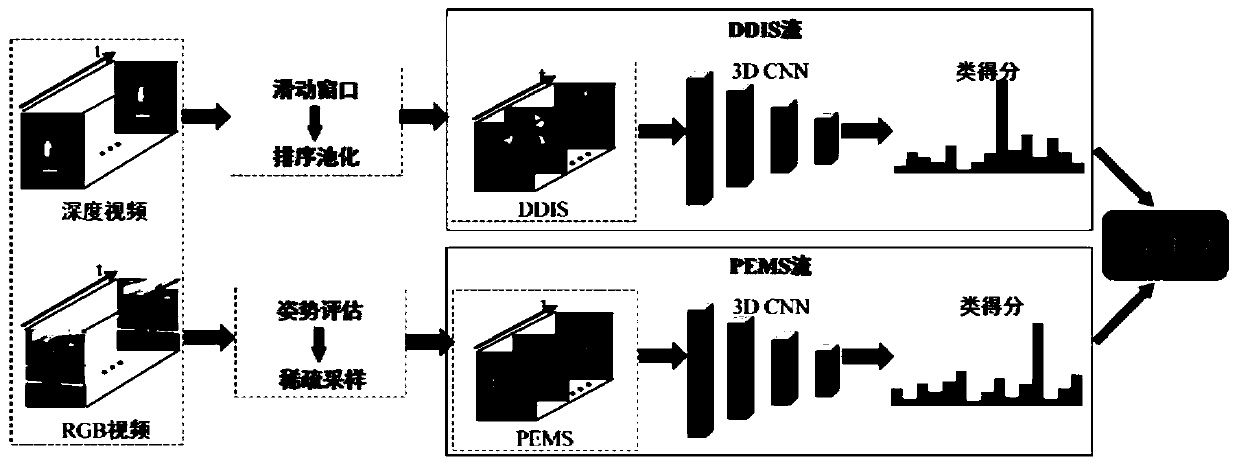

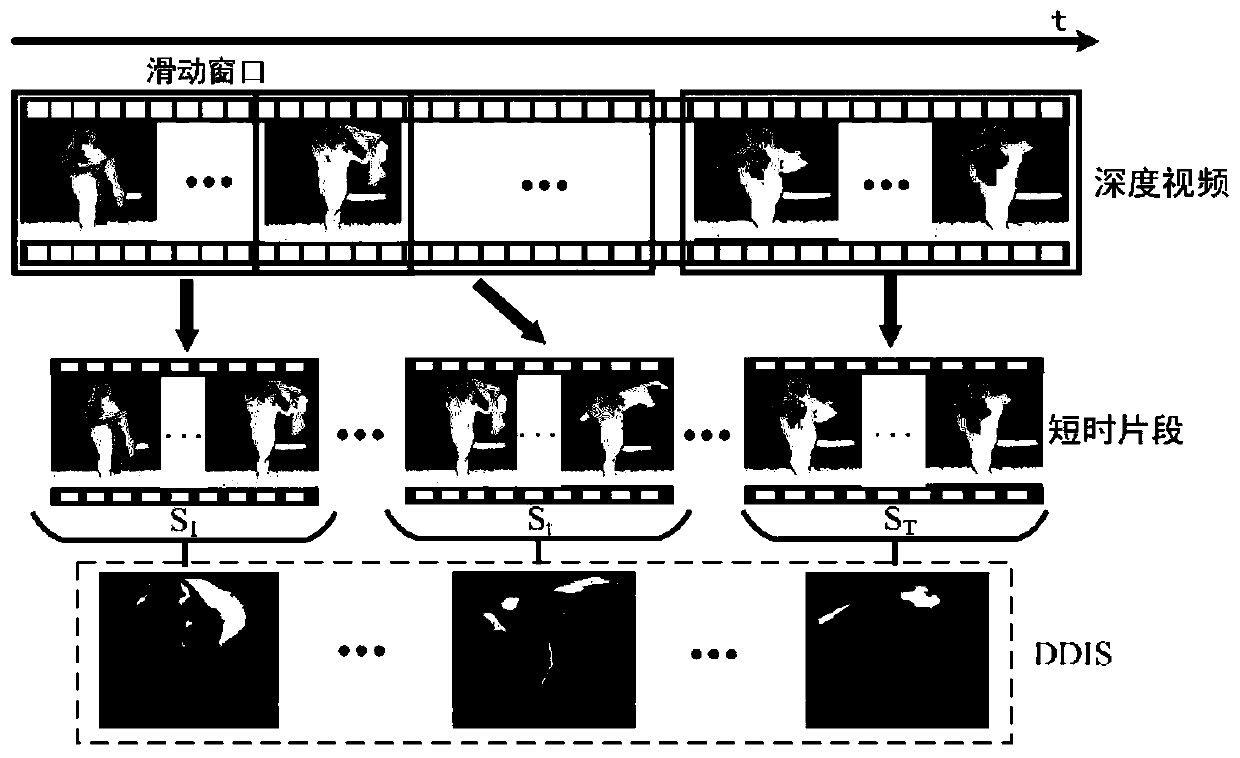

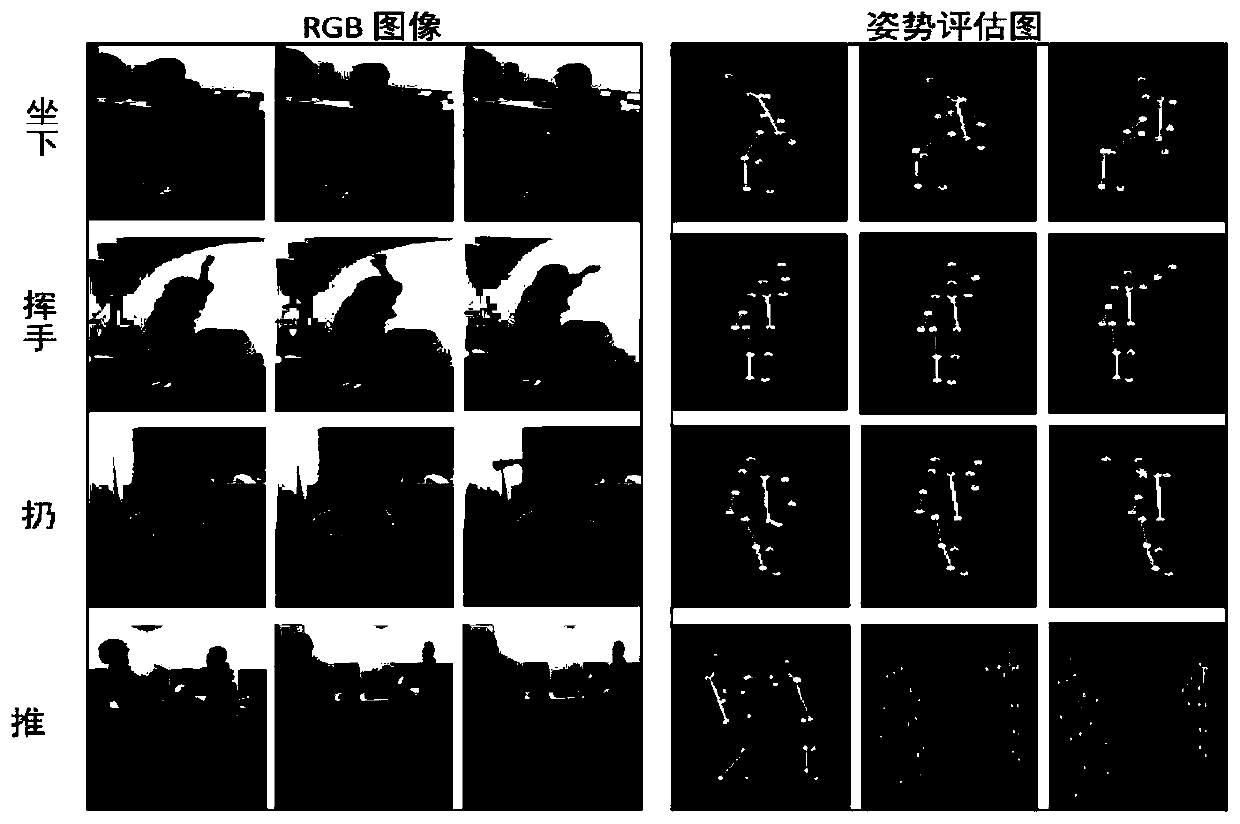

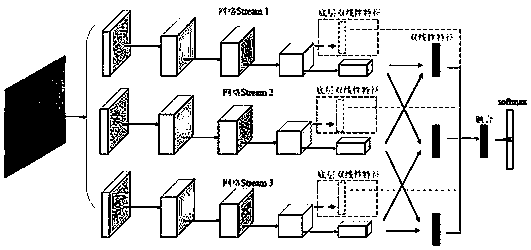

Video human body behavior recognition method and system based on multi-mode double-flow 3D network

Inactive | CN110705463A | Eliminate distractions, Clear capture, Character and pattern recognition, Neural architectures, Multi modality, Stream network

The invention discloses a video human body behavior recognition method and system based on a multi-mode double-flow 3D network. The method comprises: generating a depth dynamic image sequence (DDIS) from a depth video; generating a posture evaluation map sequence (PEMS) from the RGB video; feeding the DDIS and the PEMS separately into 3D convolutional neural networks to construct a DDIS flow and a PEMS flow, each yielding its own classification result; and fusing these classification results to obtain the final behavior recognition result. By modeling the local spatio-temporal structure of the video, the DDIS describes human motion and the contours of interacting objects in long-duration behavior videos well. The PEMS clearly captures changes in human posture and eliminates interference from cluttered backgrounds. The multi-mode double-flow 3D network architecture effectively models the global spatio-temporal dynamics of behavior videos across different data modes and achieves excellent recognition performance.

Owner:SHANDONG UNIV

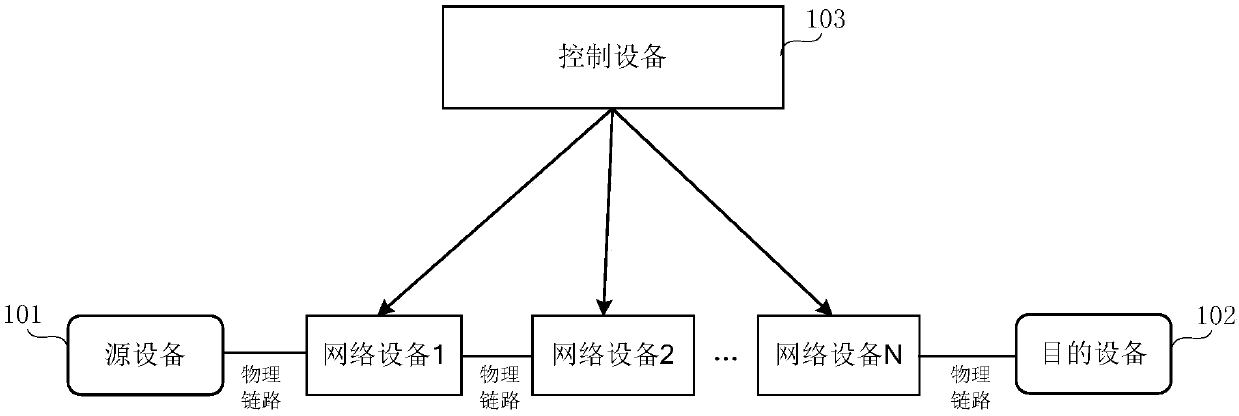

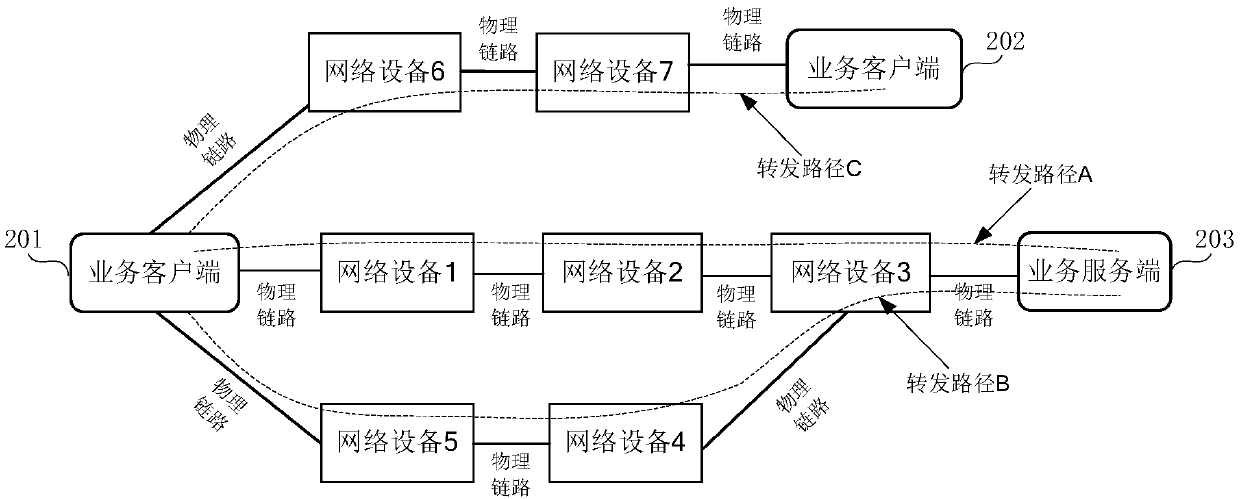

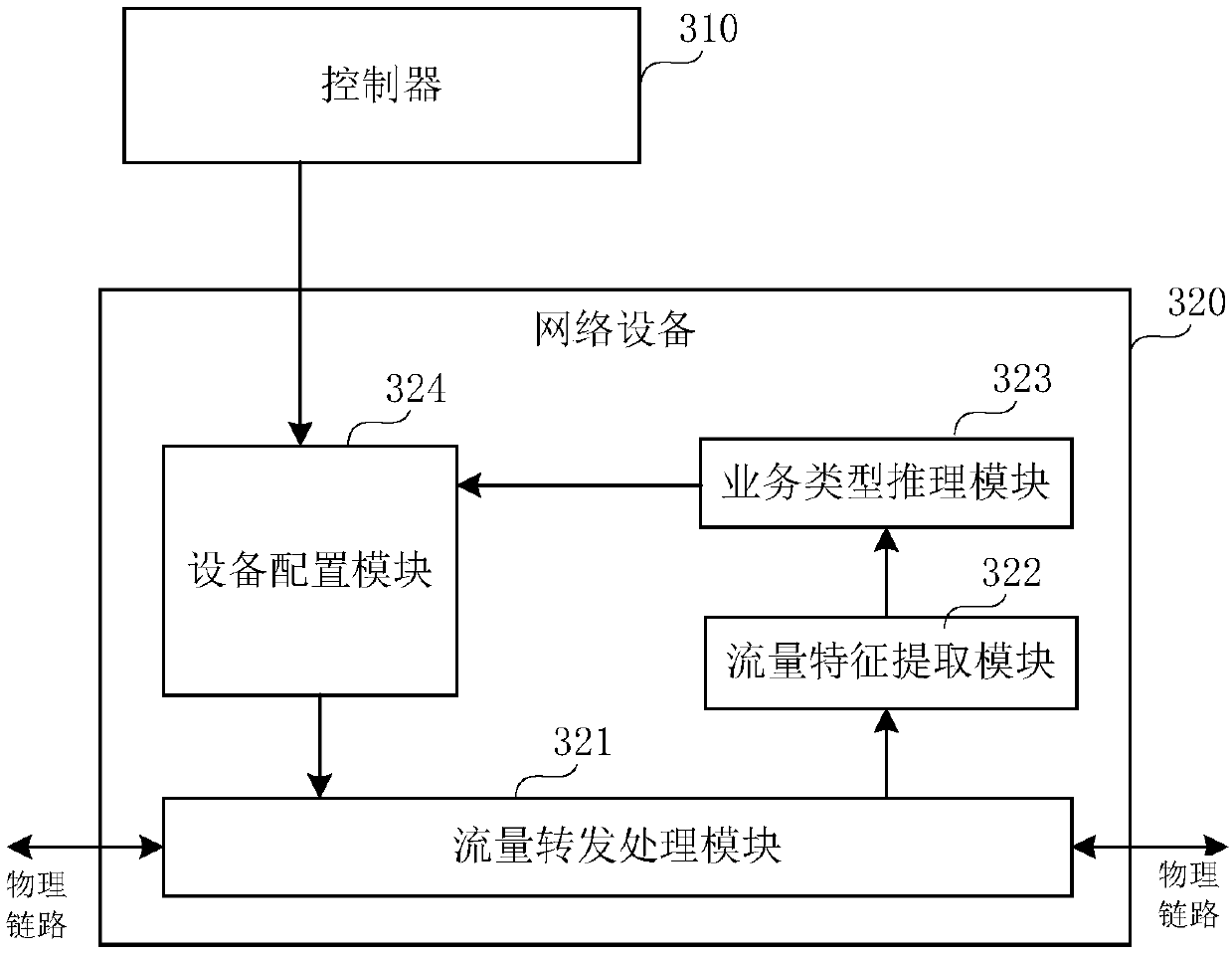

Service flow processing method and device

The invention discloses a service flow processing method and device. The method comprises the following steps: network equipment receives a first service flow; the network equipment extracts flow features of the first service flow and obtains a first service type corresponding to the first service flow according to an inference model and those flow features, where the inference model is trained on the flow features and service types of historical service flows; the network equipment determines a first forwarding policy corresponding to the first service type according to a first correspondence table and the first service type, where the first correspondence table contains the correspondence between service types and forwarding policies; and the network equipment forwards the first service flow according to the first forwarding policy. In this way, the network equipment can identify the service type of the first service flow online through the inference model, which improves the timeliness of forwarding the first service flow and allows service flows of different service types to be forwarded automatically and promptly.

Owner:HUAWEI TECH CO LTD
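
The classify-then-look-up flow described above can be sketched as follows. The trained inference model is replaced by a hypothetical rule-based stand-in with made-up thresholds. The service types and policies in the correspondence table are illustrative; DSCP 46 and 34 are the standard Expedited Forwarding and AF41 code points.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class FlowFeatures:
    mean_packet_size: float   # bytes
    packets_per_second: float
    dst_port: int


def toy_model(f: FlowFeatures) -> str:
    """Hypothetical stand-in for the trained inference model (made-up thresholds)."""
    if f.dst_port in (5060, 3478) or (f.mean_packet_size < 300 and f.packets_per_second > 30):
        return "voice"
    if f.mean_packet_size > 1000 and f.packets_per_second > 100:
        return "video"
    return "best_effort"


# Hypothetical first correspondence table: service type -> forwarding policy.
POLICY_TABLE: Dict[str, Dict[str, object]] = {
    "voice": {"queue": "priority", "dscp": 46},   # Expedited Forwarding
    "video": {"queue": "assured", "dscp": 34},    # AF41
    "best_effort": {"queue": "default", "dscp": 0},
}


def forward(features: FlowFeatures,
            model: Callable[[FlowFeatures], str] = toy_model) -> Dict[str, object]:
    """Classify the flow, then look up its forwarding policy (default: best effort)."""
    service_type = model(features)
    return POLICY_TABLE.get(service_type, POLICY_TABLE["best_effort"])
```

Passing the model as a parameter keeps the lookup logic independent of how the classifier is trained, so a learned model can replace `toy_model` without changing `forward`.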

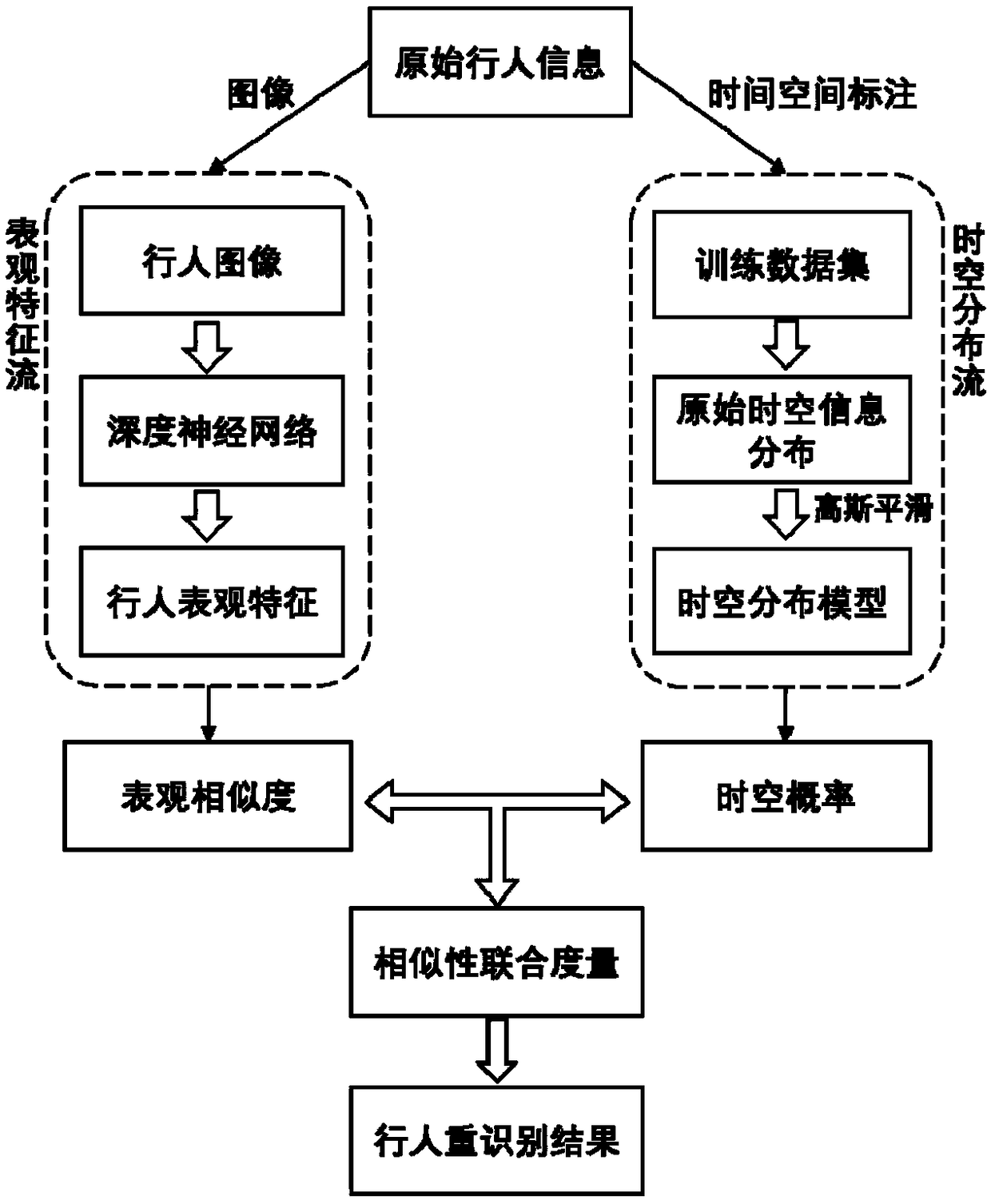

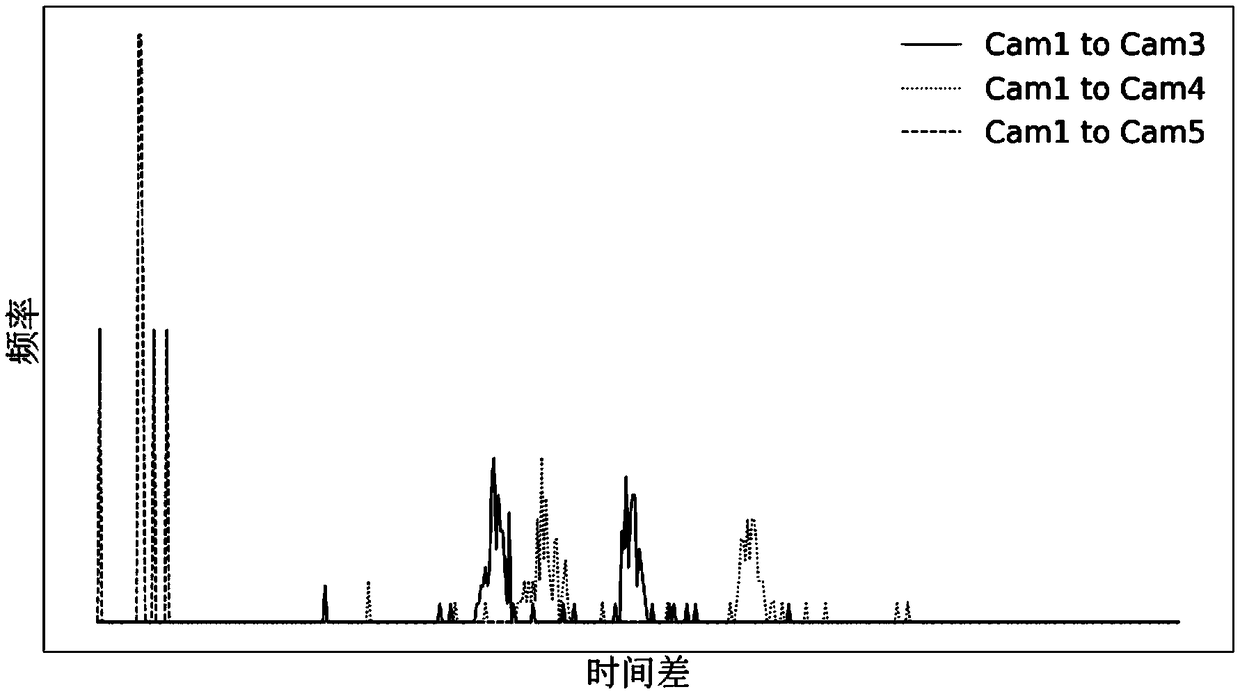

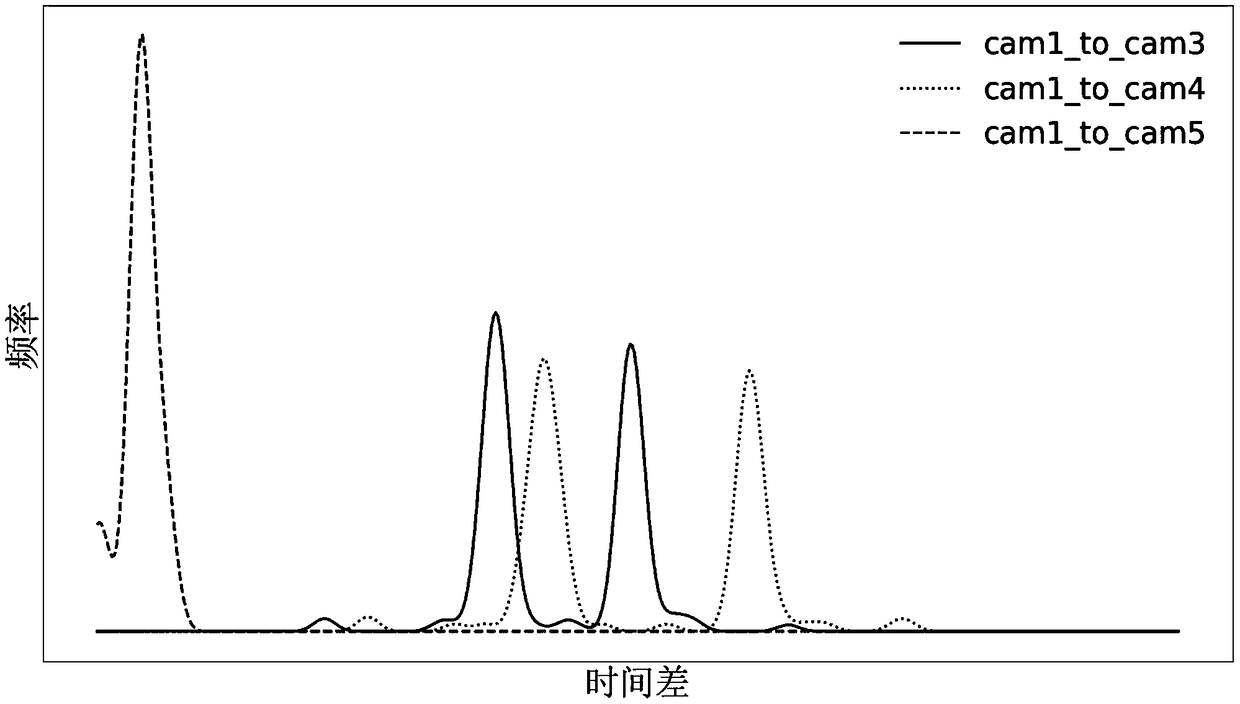

A double-flow network pedestrian re-identification method combining the apparent characteristics and the temporal-spatial distribution

Active | CN109325471A | Robust, Improve generalization, Biometric pattern recognition, Neural architectures, Data set, Re identification

The invention discloses a double-flow network pedestrian re-identification method combining appearance features and temporal-spatial distribution. The method mainly comprises the following steps: extracting appearance features of pedestrian images with a deep neural network and calculating the appearance similarity of image pairs; learning the spatio-temporal distribution model of a training dataset with a statistical method based on Gaussian smoothing; obtaining the final similarity from the appearance similarity and the spatio-temporal probability with a joint metric based on logistic smoothing; and sorting by the final similarity to obtain the pedestrian re-identification result. The main contributions are: (1) a pedestrian re-identification framework based on a dual-stream network that combines appearance features and spatial-temporal distribution; (2) a new spatio-temporal learning method based on Gaussian smoothing; and (3) a new joint similarity metric based on logistic smoothing. Experimental results show that the proposed method raises Rank-1 accuracy on the DukeMTMC-reID and Market-1501 datasets from 83.8% and 91.2% to 94.4% and 98.0%, respectively, a significant improvement over other methods.

Owner:SUN YAT SEN UNIV
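
The abstract names Gaussian smoothing for the spatio-temporal model and logistic smoothing for the joint metric but gives no formulas. The sketch below makes two assumptions. First, the spatio-temporal probability is a Gaussian-smoothed histogram of time gaps for a camera pair. Second, each score passes through a logistic function f(x) = 1 / (1 + lam * exp(-gamma * x)), and the two results are multiplied. The defaults lam = 1 and gamma = 5 are placeholders, not values from the patent, and the function names are hypothetical.

```python
import math
from typing import List


def gaussian_smoothed_hist(gaps: List[float], bin_width: float,
                           num_bins: int, sigma: float) -> List[float]:
    """Histogram of camera-to-camera time gaps, smoothed with a Gaussian kernel
    (sigma measured in bins) and normalized to sum to 1."""
    hist = [0.0] * num_bins
    for g in gaps:
        b = int(g // bin_width)
        if 0 <= b < num_bins:
            hist[b] += 1
    smoothed = [sum(hist[j] * math.exp(-((i - j) ** 2) / (2 * sigma ** 2))
                    for j in range(num_bins))
                for i in range(num_bins)]
    total = sum(smoothed)
    return [s / total for s in smoothed] if total else smoothed


def logistic(x: float, lam: float = 1.0, gamma: float = 5.0) -> float:
    """Logistic smoothing; lam and gamma are placeholder values."""
    return 1.0 / (1.0 + lam * math.exp(-gamma * x))


def joint_similarity(appearance_sim: float, st_prob: float,
                     lam: float = 1.0, gamma: float = 5.0) -> float:
    """Hypothetical joint metric: product of the two logistic-smoothed scores."""
    return logistic(appearance_sim, lam, gamma) * logistic(st_prob, lam, gamma)
```

Smoothing both scores before multiplying keeps a near-zero spatio-temporal probability from completely vetoing a strong appearance match, which a raw product would do.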

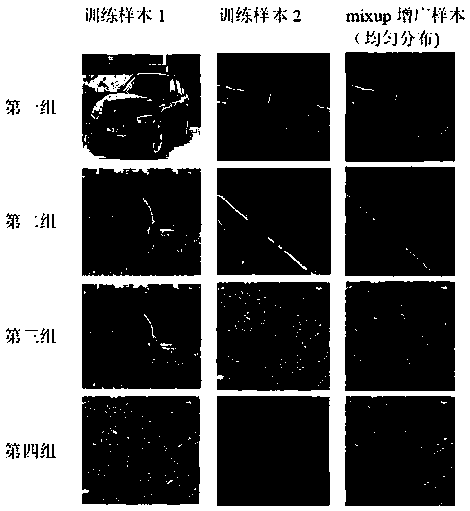

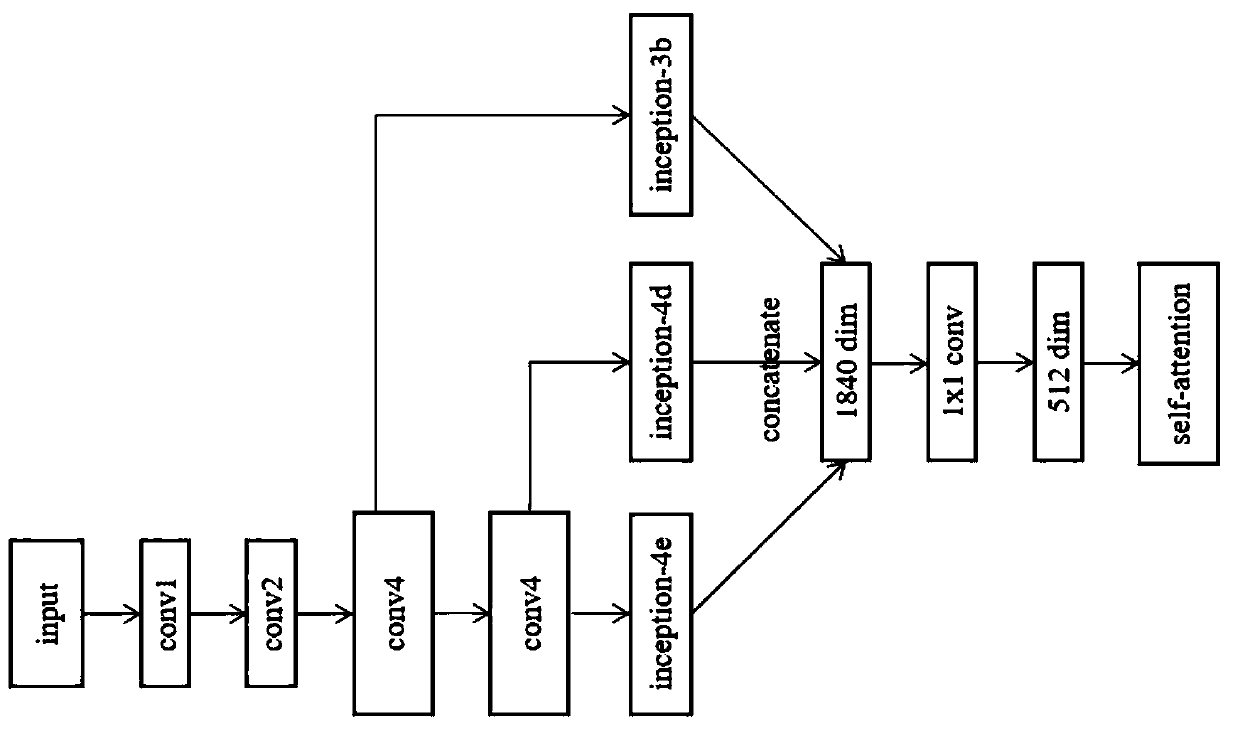

Image fine-grain identification method based on multi-stream multi-scale cross bilinear features

Active | CN110188816A | Fine local features, Feature underutilization problem solving, Character and pattern recognition, Energy efficient computing, Feature extraction, Data set

The invention provides an image fine-grain identification method based on multi-stream multi-scale cross bilinear features. Addressing the insufficient extraction and insufficient utilization of fine-grained image features, the method uses a multi-stream network to extract cross bilinear features, which represent finer local features of the image and thus solve the problem of insufficient feature extraction. It solves the problem of insufficient feature utilization by enhancing and fusing multi-scale low-level bilinear features through random image mixing. Experimental verification shows that, compared with existing methods, the fine-grain identification method based on multi-stream network fused multi-scale cross bilinear features remarkably improves identification accuracy on the public CUB-200-2011 dataset and achieves the best fine-grain identification accuracy.

Owner:SOUTHEAST UNIV

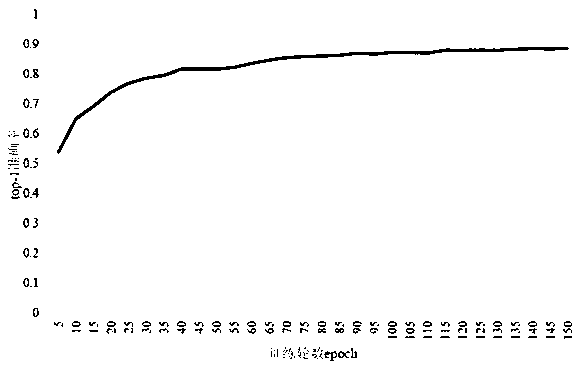

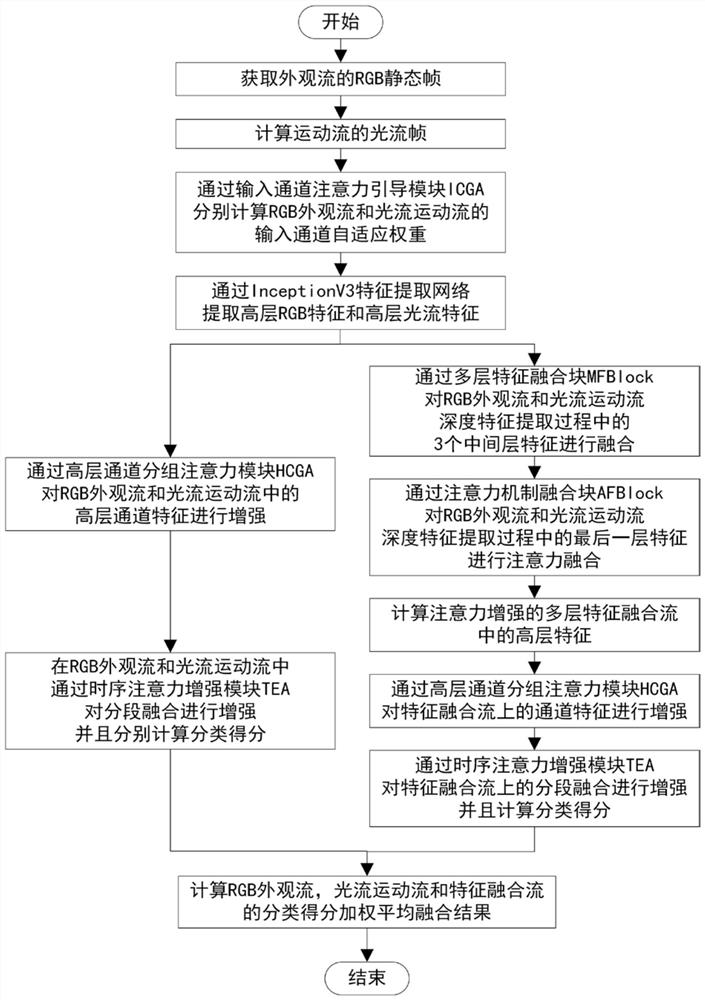

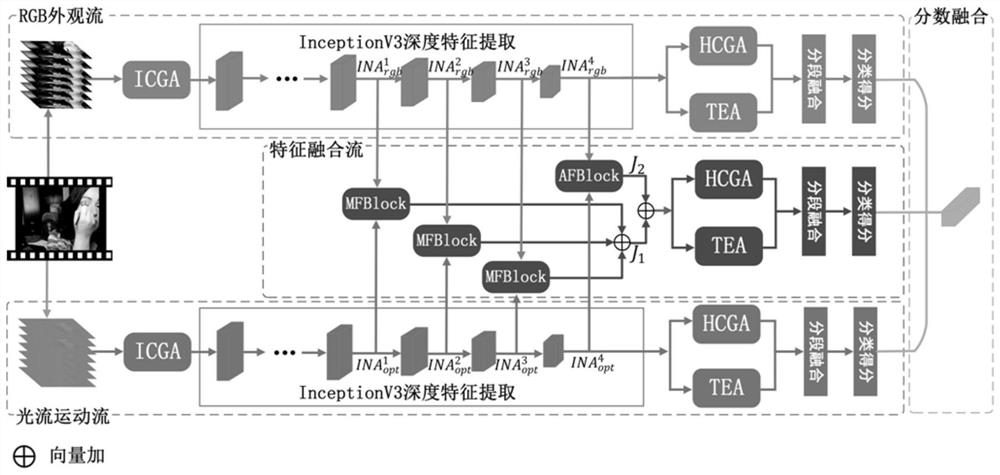

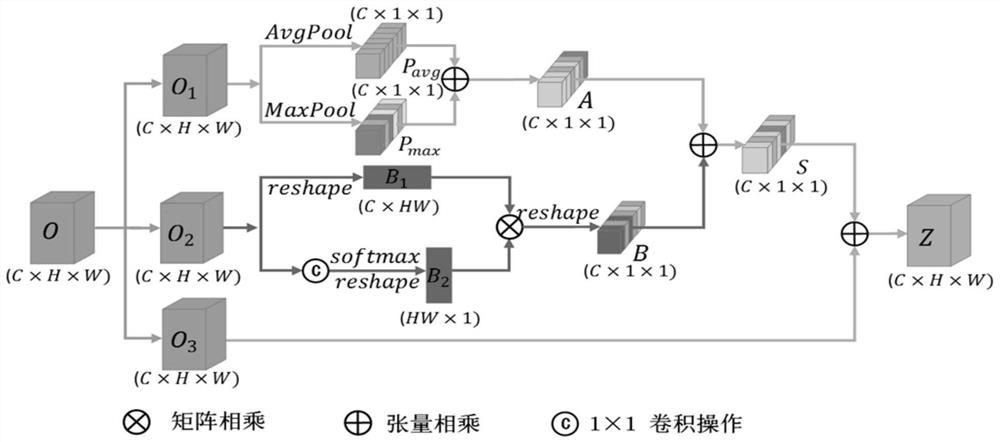

Behavior recognition method based on space-time attention enhancement feature fusion network

Active | CN111709304A | Enhanced ability to extract valid channel features, Improve the problem of easy feature overfitting, Character and pattern recognition, Neural architectures, Frame sequence, Machine vision

The invention discloses a behavior recognition method based on a space-time attention enhancement feature fusion network and belongs to the field of machine vision. The method adopts a network architecture based on a double-flow network with an appearance flow and a motion flow, called a space-time attention enhancement feature fusion network. Whereas a traditional double-flow network applies simple feature or score fusion to its branches, this method constructs an attention-enhanced multi-layer feature fusion flow as a third branch to supplement the double-flow structure. Meanwhile, because traditional deep networks neglect modeling of channel features and cannot fully exploit the interrelations between channels, channel attention modules are introduced at different levels to establish those interrelations and enhance the expressive ability of the channel features. In addition, temporal information plays an important role in segment fusion, and the representativeness of important temporal features is enhanced by performing temporal modeling on the frame sequence. Finally, the classification scores of the different branches are combined by weighted fusion.

Owner:JIANGNAN UNIV
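
The abstract ends with weighted fusion of the branch classification scores but gives neither weights nor a normalization. The sketch below assumes each branch outputs class logits, converts them to probabilities with softmax, and combines them with fixed, normalized weights. The branch names and weight values are hypothetical.

```python
import math
from typing import Dict, List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def weighted_score_fusion(branch_logits: Dict[str, List[float]],
                          weights: Dict[str, float]) -> List[float]:
    """Combine per-branch class probabilities with fixed weights (normalized to sum to 1)."""
    total_w = sum(weights[b] for b in branch_logits)
    num_classes = len(next(iter(branch_logits.values())))
    fused = [0.0] * num_classes
    for name, logits in branch_logits.items():
        probs = softmax(logits)
        w = weights[name] / total_w
        for c in range(num_classes):
            fused[c] += w * probs[c]
    return fused
```

For example, with appearance logits [2.0, 0.0, 0.0], motion logits [0.0, 3.0, 0.0], fusion-branch logits [0.0, 2.5, 0.0], and weights 1.0, 1.5, and 1.0, class 1 wins because two of the three branches favor it. Because the weights are normalized, the fused scores still sum to 1.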

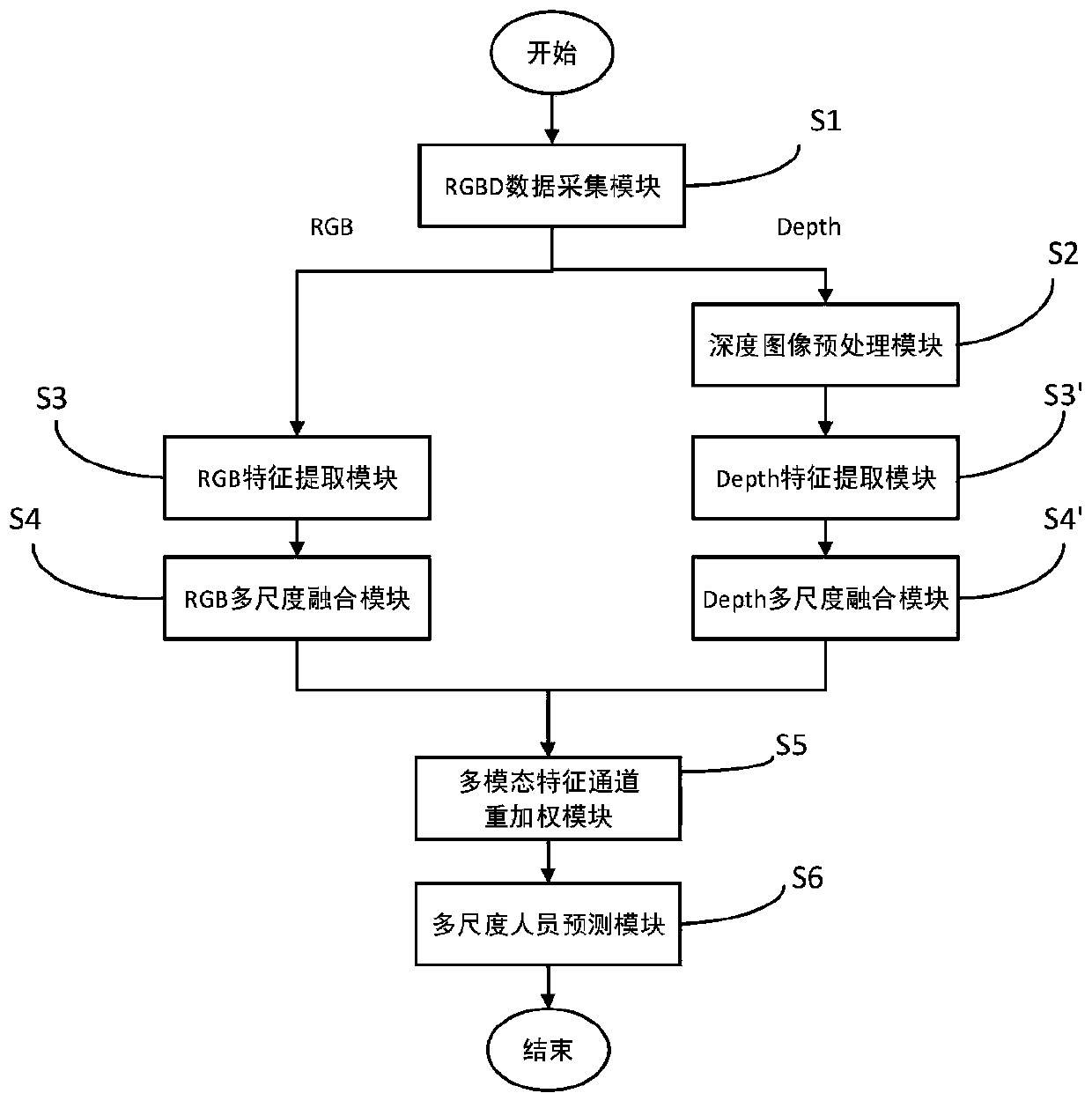

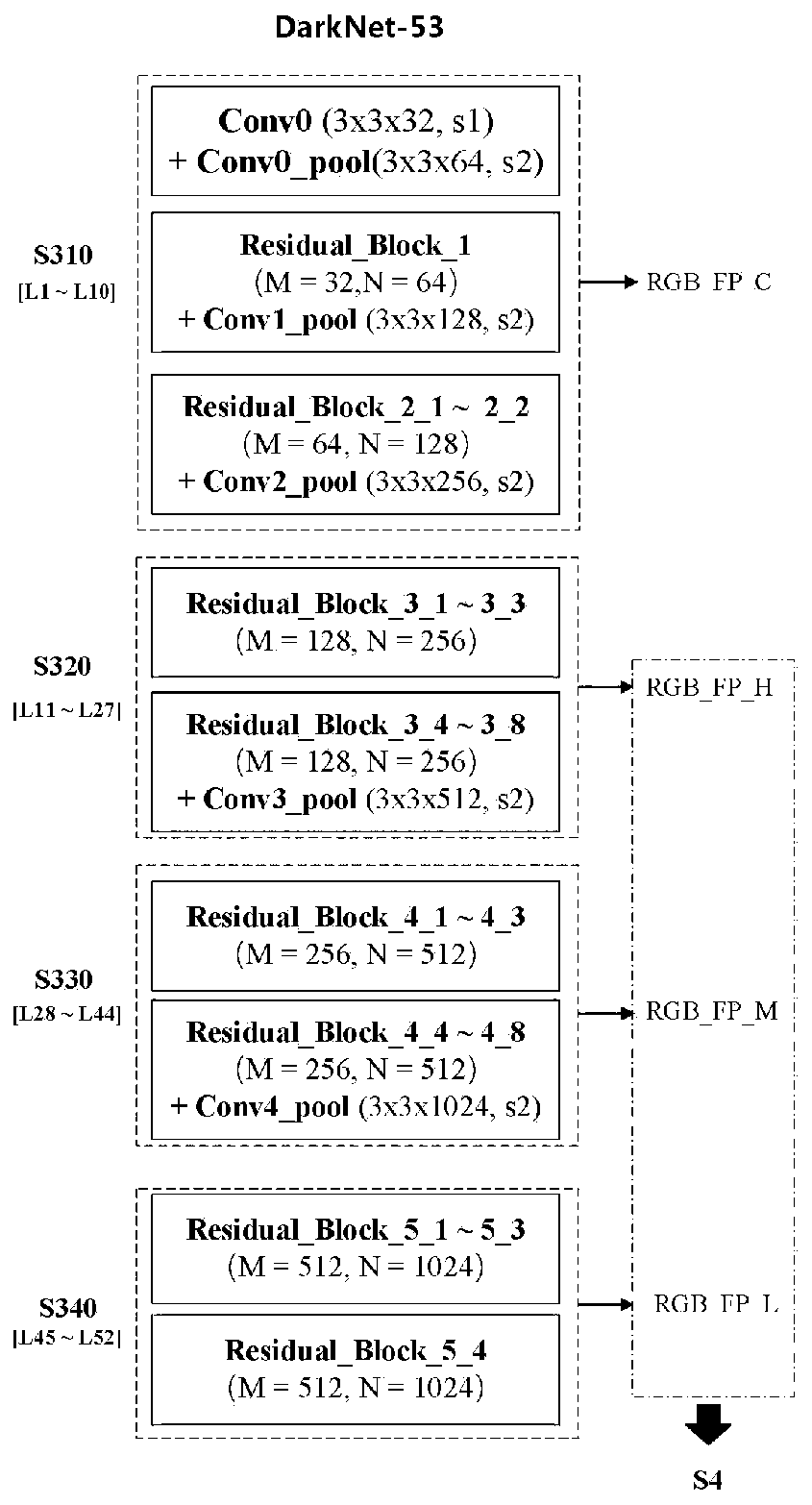

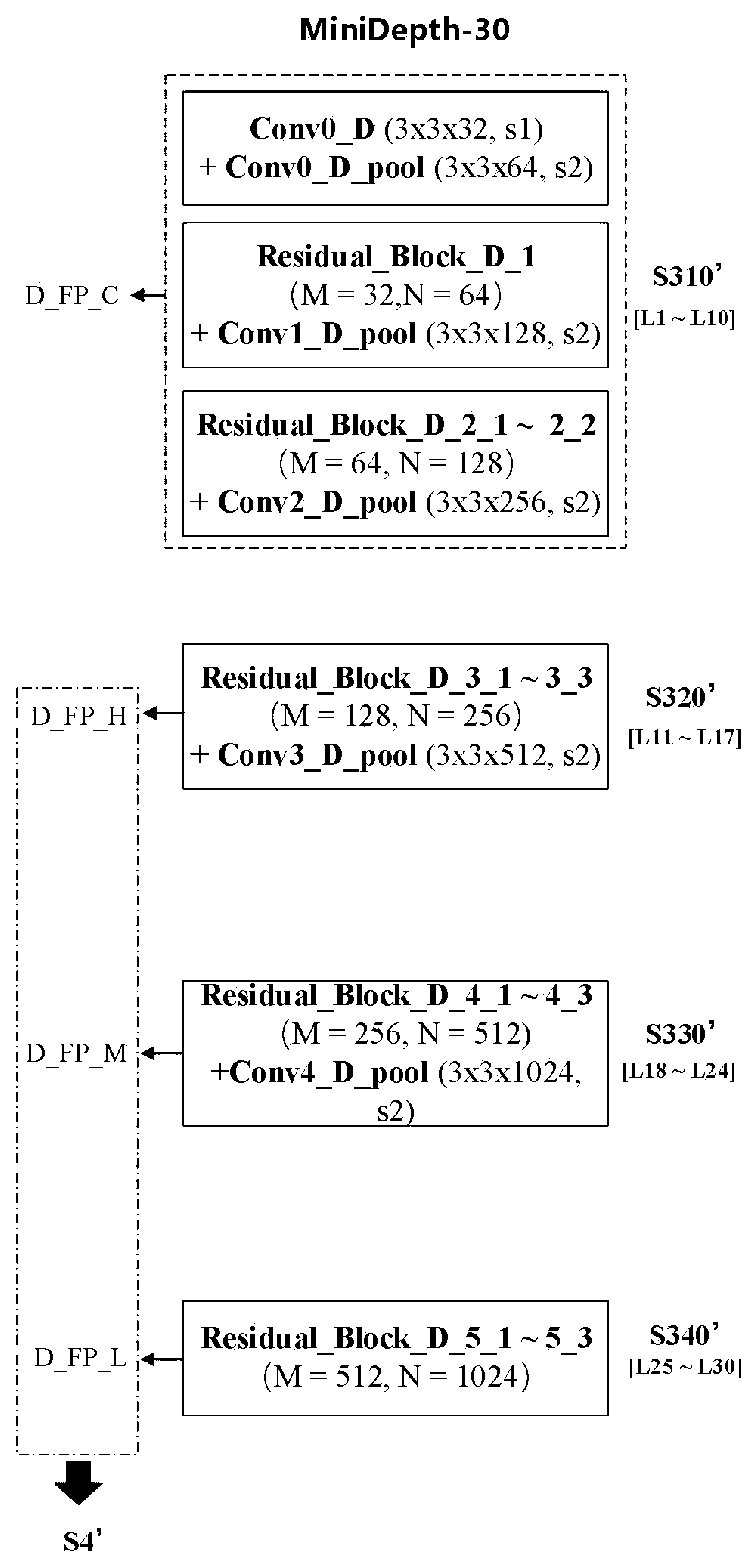

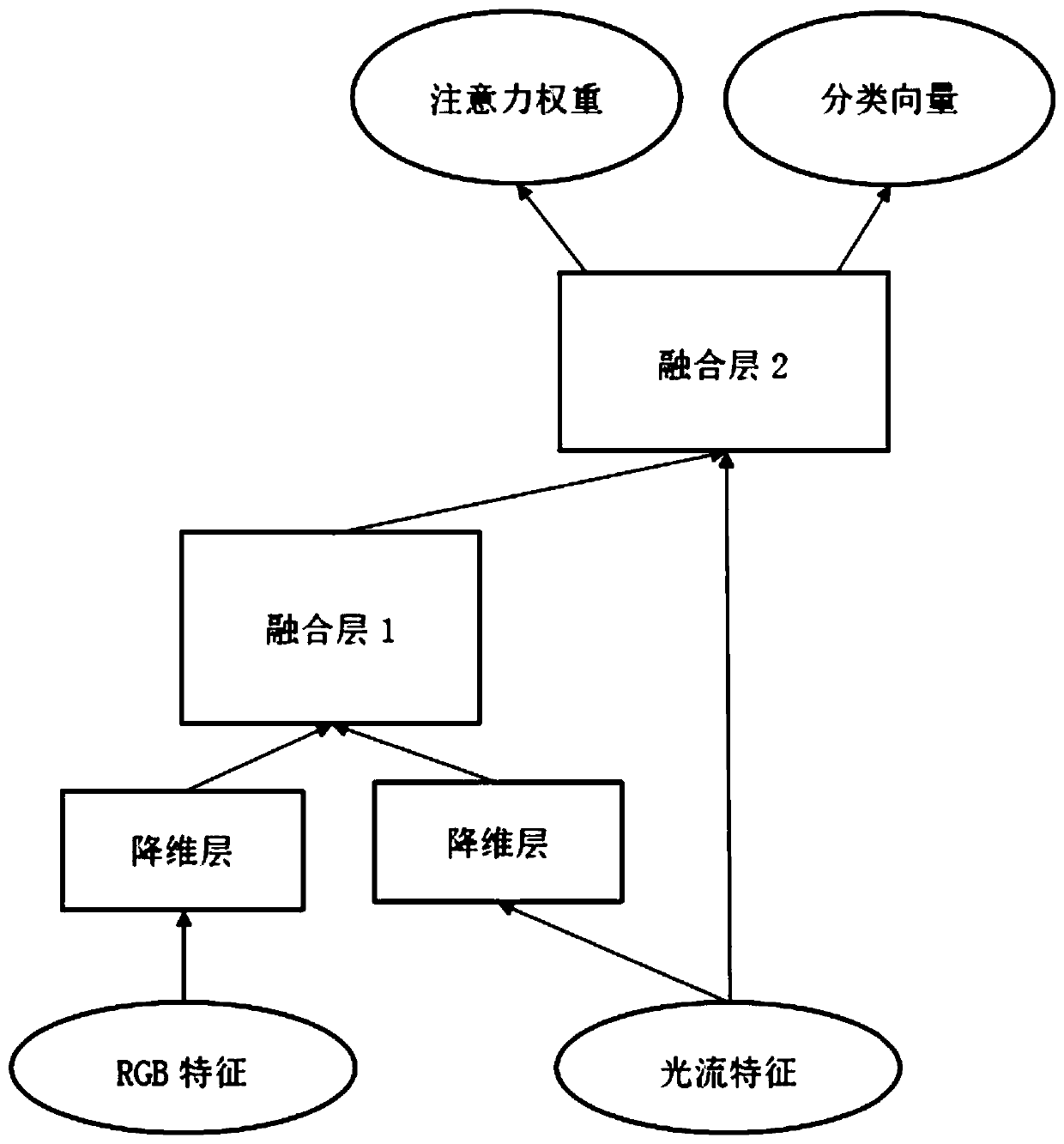

RGB-D multi-mode fusion person detection method based on asymmetric double-flow network

Pending | CN110956094A | Reduce the risk of fitting, Few parameters, Biometric pattern recognition, Neural architectures, Person detection, Visual perception

The invention discloses an RGB-D multi-modal fusion person detection method based on an asymmetric double-flow network, in the field of computer vision and image processing. The method comprises RGB-D image acquisition, depth image preprocessing, RGB feature extraction and depth feature extraction, RGB multi-scale fusion and depth multi-scale fusion, multi-modal feature channel reweighting, and multi-scale person prediction. An asymmetric RGB-D double-flow convolutional neural network model is designed to solve the problem that a traditional symmetric RGB-D double-flow network tends to lose depth features. Multi-scale fusion structures are designed separately for each flow of the RGB-D double-flow network to achieve multi-scale information complementation. A multi-modal reweighting structure is constructed that combines the RGB and depth feature maps and assigns a weight to each combined feature channel, so that the model automatically learns each channel's contribution. Person classification and bounding-box regression are performed with the multi-modal features, which improves person detection accuracy while ensuring real-time performance and makes detection more robust under low nighttime illumination and person occlusion.

Owner:BEIJING UNIV OF TECH

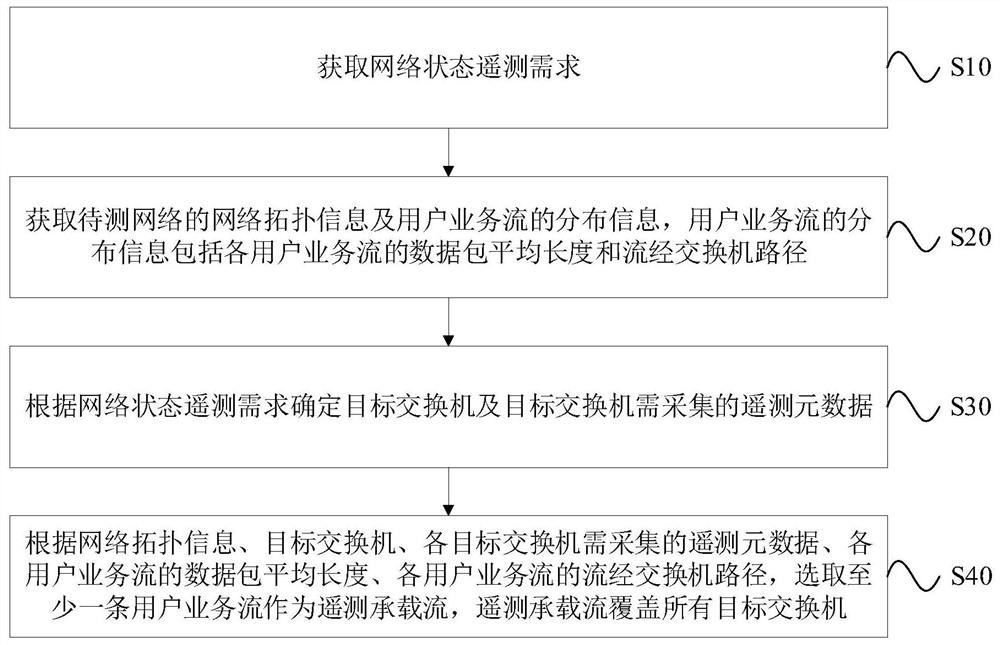

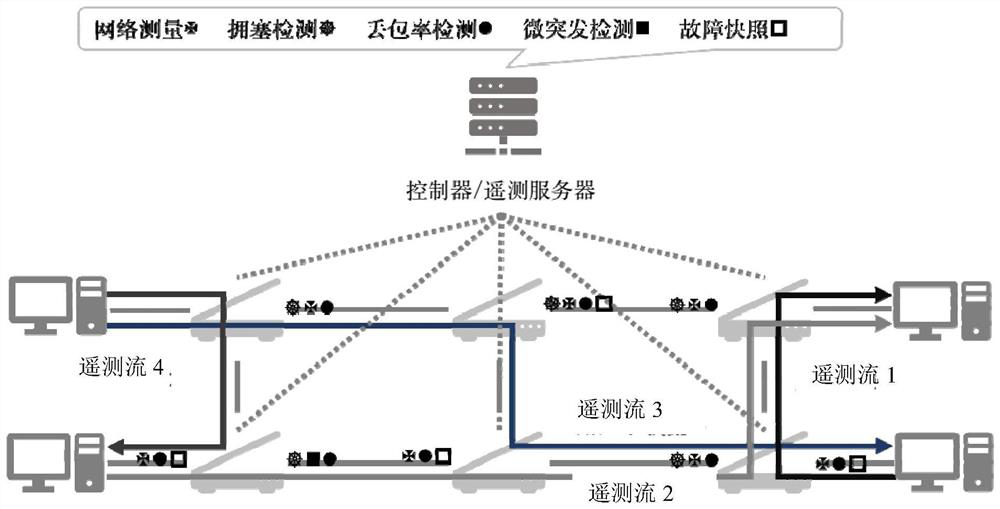

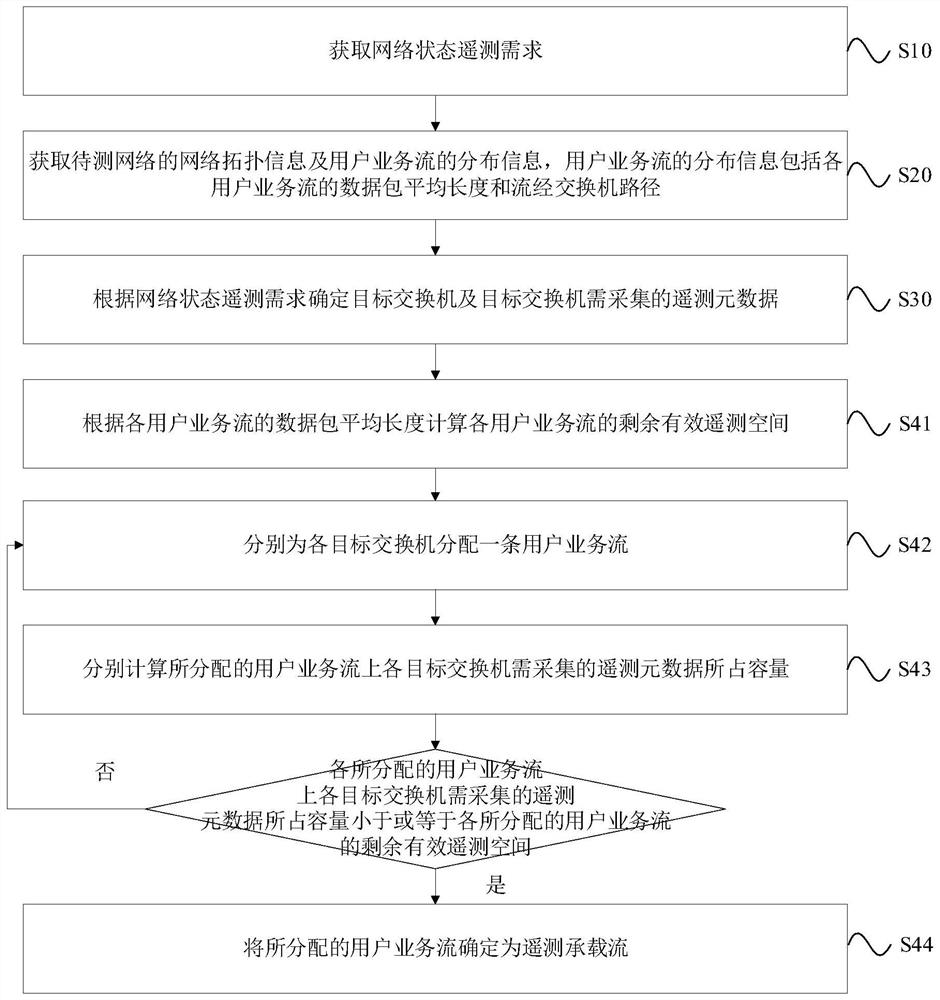

In-band network telemetry bearer flow selection method and system

The invention provides an in-band network telemetry bearer flow selection method and system. The method comprises: obtaining a network state telemetry requirement; acquiring network topology information of the network under test and distribution information of user service flows, where the distribution information includes the average packet length of each user service flow and the switches along its path; determining, according to the telemetry requirement, the target switches and the telemetry metadata each target switch needs to collect; and, according to the network topology information, the target switches, the telemetry metadata to be collected by each target switch, the average packet length of each user service flow, and the path each user service flow takes through the switches, selecting at least one user service flow as a telemetry bearer flow, such that the telemetry bearer flows cover all the target switches. With the user service flows selected by this method, a network administrator can obtain the required network state in time.

Owner:BEIJING JIAOTONG UNIV
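Choosing bearer flows so that together they pass through every target switch is a set-cover problem. The abstract does not name its algorithm, so the sketch below assumes a common greedy heuristic: repeatedly pick the flow that covers the most still-uncovered target switches, breaking ties by smaller average packet length on the (also assumed) grounds that shorter packets leave more room for appended telemetry metadata. The function and argument names are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple


def select_bearer_flows(
    flow_paths: Dict[str, List[str]],
    avg_pkt_len: Dict[str, float],
    targets: Set[str],
) -> List[str]:
    """Greedily pick user flows until every target switch lies on a chosen path."""
    uncovered = set(targets)
    chosen: List[str] = []
    while uncovered:
        best: Optional[str] = None
        best_key: Optional[Tuple[int, float, str]] = None
        for flow, path in flow_paths.items():
            if flow in chosen:
                continue
            gain = len(uncovered & set(path))
            if gain == 0:
                continue
            # More newly covered switches first, then shorter packets, then name.
            key = (-gain, avg_pkt_len[flow], flow)
            if best_key is None or key < best_key:
                best, best_key = flow, key
        if best is None:
            raise ValueError(f"no flow traverses {sorted(uncovered)}")
        chosen.append(best)
        uncovered -= set(flow_paths[best])
    return chosen
```

Greedy set cover is not guaranteed to find the smallest set of flows, but it is simple and fast; an exact method (for example integer programming) could replace it if the number of flows is small.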

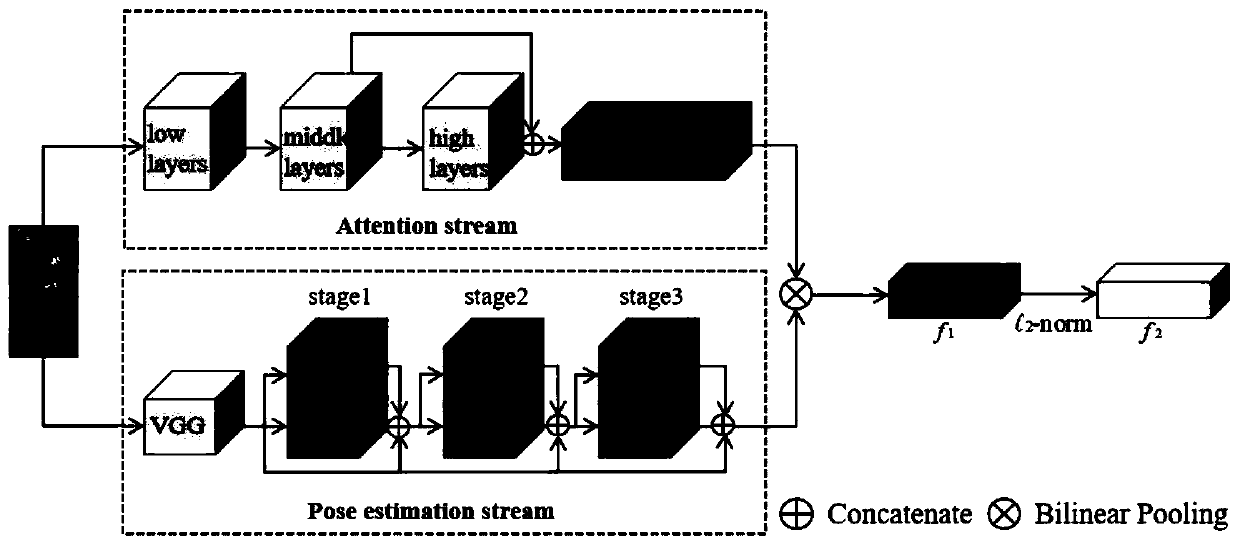

Pedestrian re-recognition method combining posture and attention based on double-flow network

Inactive | CN110781736A | Rich semantic information | Convenience to follow | Character and pattern recognition | Neural architectures | Pattern recognition | Network structure

The invention discloses a pedestrian re-identification method combining pose and attention based on a double-flow network. The method comprises the following steps: (1) preprocessing an input image and feeding it into a double-flow network to extract features; (2) combining middle-layer and high-layer features and associating global information through an attention mechanism; (3) fusing the attention stream and the pose estimation stream through a bilinear pooling operation to obtain the final feature map; and (4) model training, in which the neural network parameters are trained with the back-propagation algorithm. The invention provides a neural network model for pedestrian re-identification, specifically a network structure that combines an attention mechanism with pose estimation on top of a double-flow network, and achieves results competitive with current methods in the field.

Owner:HANGZHOU DIANZI UNIV
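Step (3) of the re-identification abstract fuses the attention stream and the pose estimation stream by bilinear pooling. A minimal sketch of standard bilinear pooling follows, assuming both streams produce feature vectors at the same L spatial locations: the outer product of the two vectors is averaged over locations, flattened, then passed through the signed square root and L2 normalization commonly used with bilinear features. The names are illustrative, and the patent's exact normalization is not stated.

```python
import math
from typing import List


def bilinear_pool(attn: List[List[float]], pose: List[List[float]]) -> List[float]:
    """attn: L x C1 vectors, pose: L x C2 vectors at the same L locations."""
    if len(attn) != len(pose):
        raise ValueError("both streams must cover the same spatial locations")
    n = len(attn)
    c1, c2 = len(attn[0]), len(pose[0])
    pooled = [0.0] * (c1 * c2)
    # Average of outer products a_l p_l^T over all locations, stored row-major.
    for a, p in zip(attn, pose):
        for i in range(c1):
            for j in range(c2):
                pooled[i * c2 + j] += a[i] * p[j] / n
    # Signed square root, then L2 normalization of the flattened descriptor.
    signed = [math.copysign(math.sqrt(abs(v)), v) for v in pooled]
    norm = math.sqrt(sum(v * v for v in signed)) or 1.0
    return [v / norm for v in signed]
```

The output has C1 x C2 entries, so every attention channel interacts with every pose channel; this pairwise interaction is what distinguishes bilinear fusion from simple concatenation.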

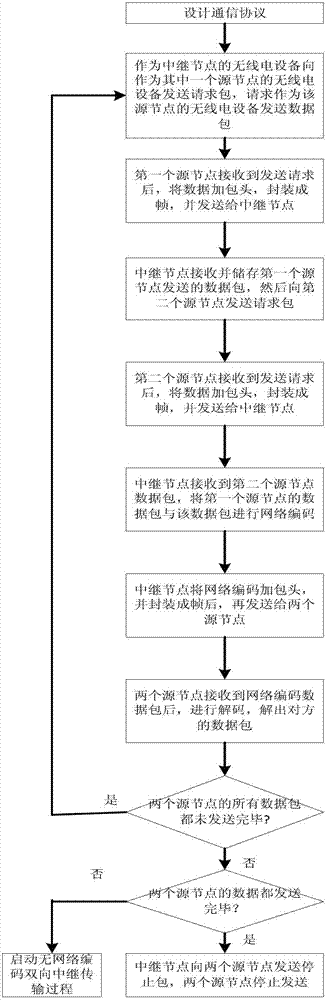

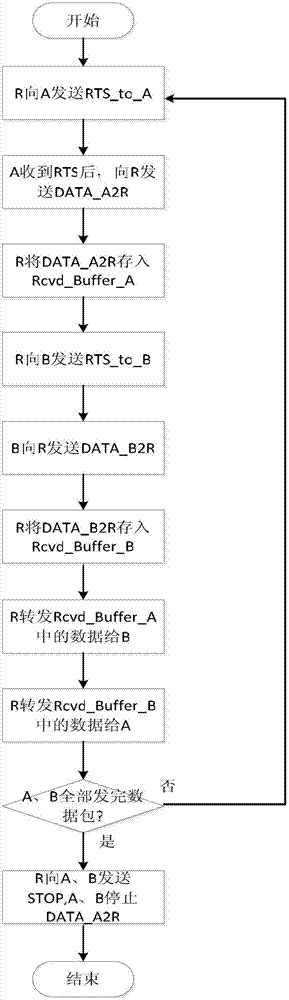

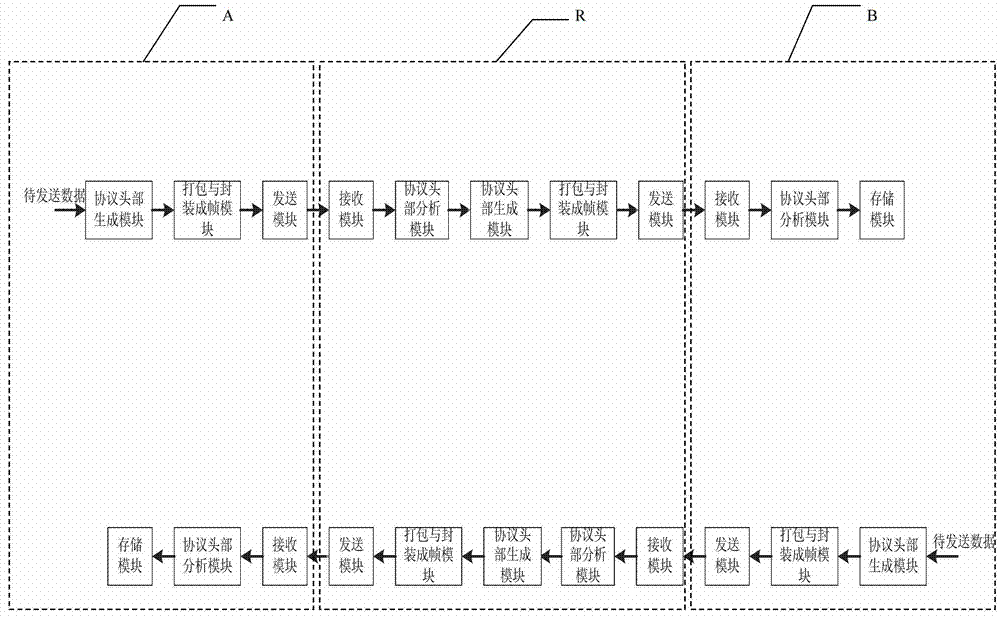

Relay transmission system and method capable of adaptively selecting relay schemes

Inactive | CN102892142A | Improve throughput | Network traffic/resource management | Access restriction | Radio equipment | File transmission

The invention discloses a relay transmission system and method capable of adaptively selecting relay schemes, in the technical field of wireless communication. The system comprises three radio devices, each consisting of a universal software radio peripheral (USRP) and a computer. The three devices serve as three nodes: two are source nodes and the third is a relay node, through which the source nodes communicate. The relay node can adaptively select either a two-way relay transmission mode without network coding or a two-way relay transmission mode with inter-stream network coding. Several relay modes suited to a software radio platform are created and implemented on that platform, supporting three types of service: data transmission, file transmission and video transmission. The scheme can improve throughput, and the software radio platform allows experimental data to be acquired and analyzed in an ordinary office environment.

Owner:宋清洋
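The inter-stream network coding mode in the relay abstract can be illustrated with the classic XOR scheme for two-way relaying: the relay combines the packets from both sources into one broadcast, and each source recovers the other's packet by XOR-ing the broadcast with the packet it sent itself. The selection rule below (code when both sources have a packet, otherwise forward plainly) and the equal-length packet requirement are assumptions for illustration, not the patent's adaptive criterion; the function names are hypothetical.

```python
from typing import List, Optional, Tuple


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR of two packets; equal length is an assumption of this sketch."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal-length packets")
    return bytes(x ^ y for x, y in zip(a, b))


def relay_slot(pkt_a: Optional[bytes], pkt_b: Optional[bytes]) -> Tuple[str, List[bytes]]:
    """Choose a relay mode for one exchange and return what the relay broadcasts."""
    if pkt_a is not None and pkt_b is not None:
        # Inter-stream network coding: one broadcast serves both destinations.
        return "network-coding", [xor_bytes(pkt_a, pkt_b)]
    # Only one direction has traffic: forward it unchanged.
    return "plain-forwarding", [p for p in (pkt_a, pkt_b) if p is not None]


def decode(broadcast: bytes, own_packet: bytes) -> bytes:
    """A source recovers the other source's packet from the coded broadcast."""
    return xor_bytes(broadcast, own_packet)
```

With coding, a full exchange takes three transmissions (A to relay, B to relay, one broadcast) instead of four, which is the usual source of the throughput gain.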

Using RTCP Statistics For Media System Control

Inactive | US20090240826A1 | Improve playback quality | Interconnection arrangements | Error prevention | Real time communication systems | Real-time communication

Methods for using communication network statistics in the operation of a real-time communication system are disclosed. Embodiments of the invention may improve playback of real-time media streams by incorporating network statistics that some transport protocols typically calculate into the algorithms used to play back the media stream. An additional aspect of the present invention may include machine-readable storage having stored thereon a computer program having a plurality of code sections executable by a machine for causing the machine to perform the foregoing.

Owner:AVAGO TECH INT SALES PTE LTD
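One statistic carried in RTCP receiver reports is the interarrival jitter defined in RFC 3550, updated per packet as J += (|D| - J) / 16, where D is the change in relative transit time between consecutive packets. The sketch computes it and feeds it into a hypothetical playout-delay rule (a base delay plus k times the jitter); that rule, its parameter `k` and the function names are assumptions, not the method claimed in the abstract.

```python
from typing import Iterable, Optional, Tuple


def interarrival_jitter(samples: Iterable[Tuple[float, float]]) -> float:
    """samples: (rtp_timestamp, arrival_time) pairs, both in RTP timestamp units."""
    jitter = 0.0
    prev: Optional[Tuple[float, float]] = None
    for send_ts, arrival in samples:
        if prev is not None:
            # D(i-1, i) = (R_i - R_{i-1}) - (S_i - S_{i-1})
            d = (arrival - prev[1]) - (send_ts - prev[0])
            jitter += (abs(d) - jitter) / 16.0
        prev = (send_ts, arrival)
    return jitter


def playout_delay(jitter: float, base_delay: float, k: float = 4.0) -> float:
    """Hypothetical rule: keep a base delay plus k times the measured jitter."""
    return base_delay + k * jitter
```

The 1/16 gain makes the estimate a smoothed running average, so a single late packet nudges the playout delay rather than resetting it.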

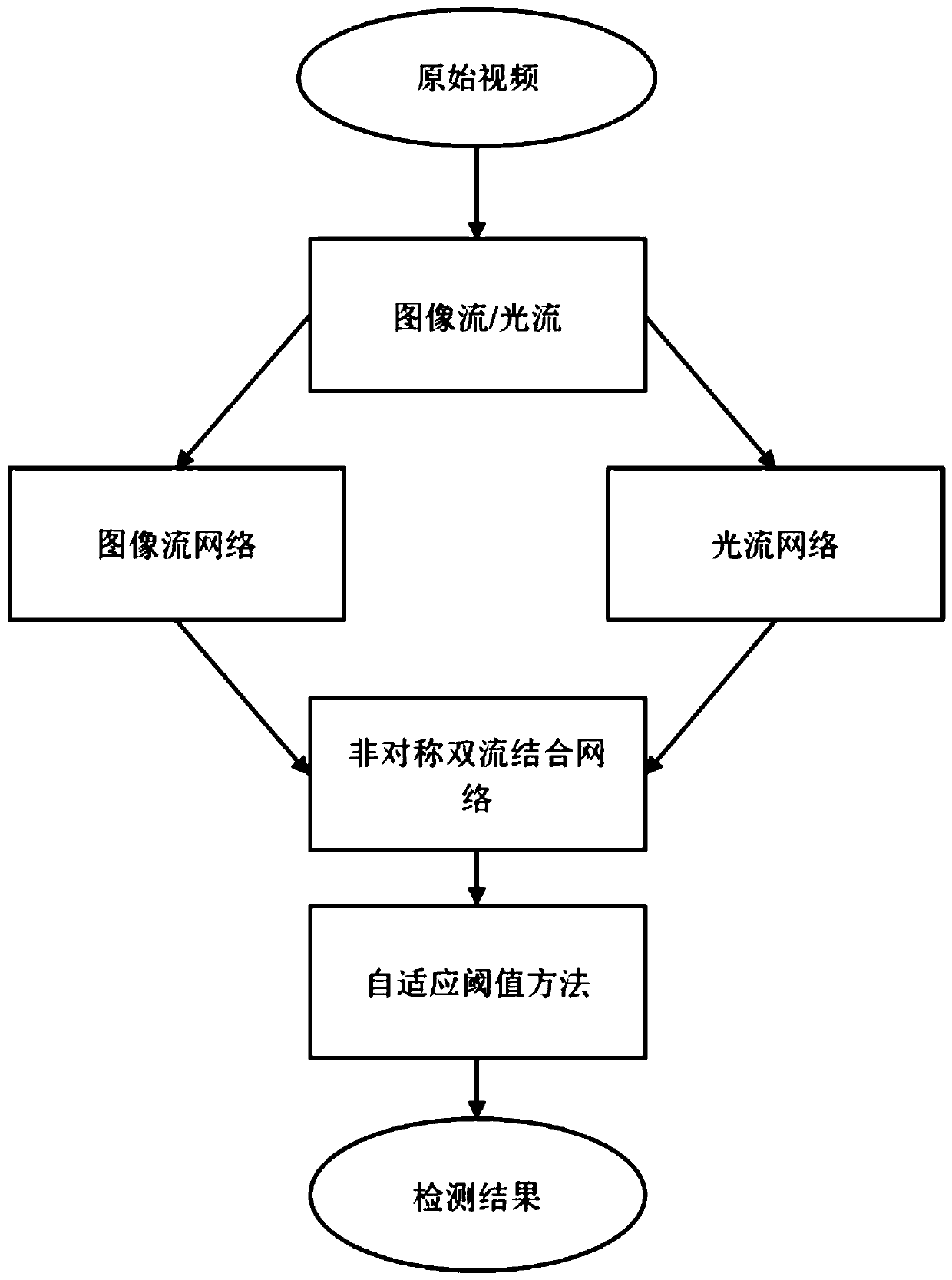

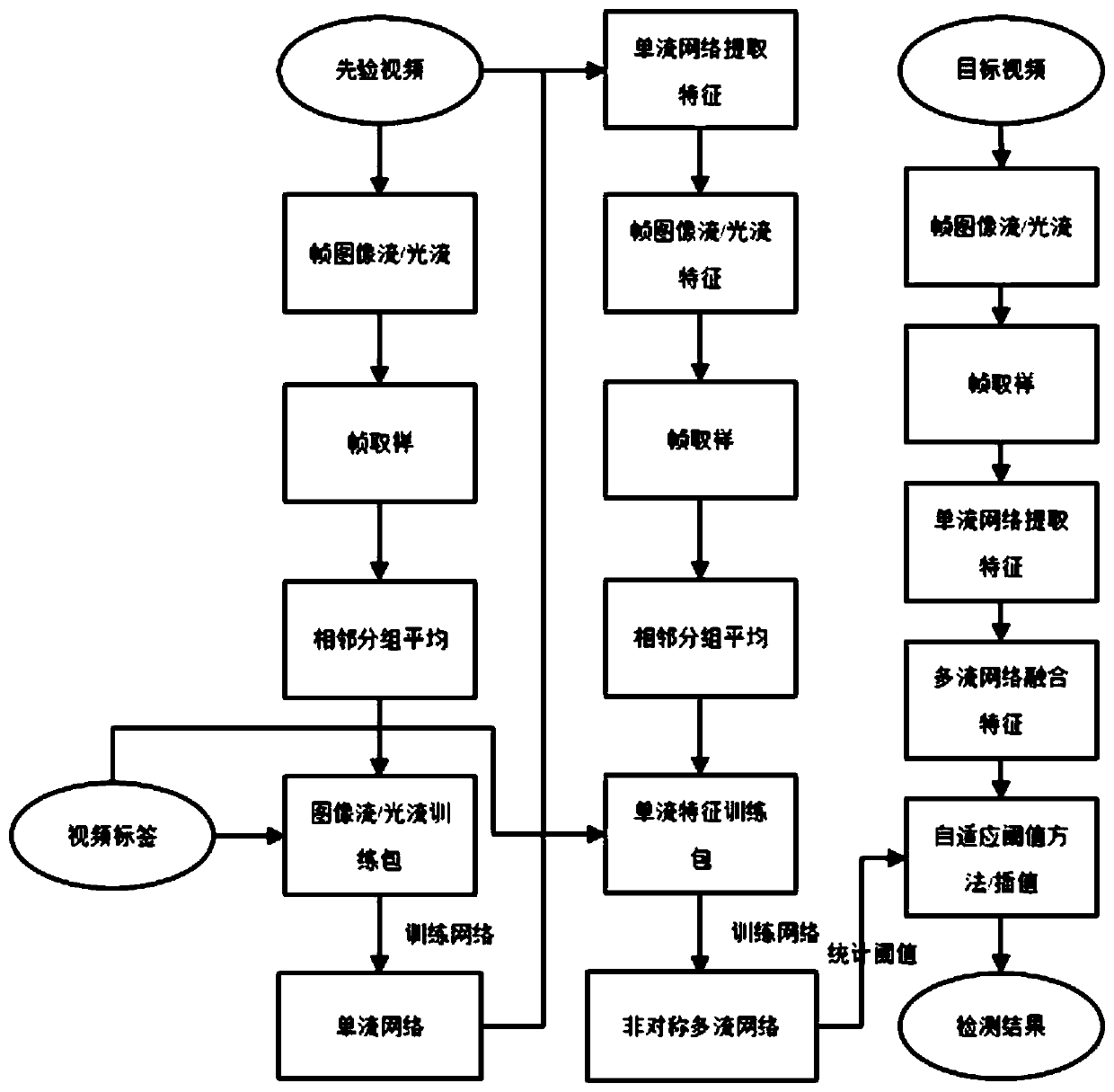

Action detection method based on asymmetric multi-flow

Active | CN110263666A | Improve accuracy | Improve reliability | Character and pattern recognition | Neural architectures | Optical flow | Stream network

The invention discloses an action detection method based on asymmetric multi-stream networks, comprising the following steps: extracting RGB images and optical flow from a prior video, and training an RGB-image single-stream network and an optical-flow single-stream network; extracting image-stream and optical-flow feature information for each frame of the prior video and, combined with action labels, training an asymmetric double-flow network; for the target video to be detected, extracting image-stream and optical-flow feature information for each frame with the trained RGB-image and optical-flow single-stream networks to obtain segment features of the target video, and feeding these segment features into the trained asymmetric double-flow network to compute a video classification vector; selecting potential actions from the video classification vectors to obtain an action recognition sequence for those potential actions; and completing action detection from the action recognition sequence. Because the method accounts for the asymmetry between the image stream and the optical flow, it can improve the accuracy of action recognition and action detection.

Owner:XI AN JIAOTONG UNIV
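The action detection abstract does not say how potential actions are selected from the video classification vectors. A simple hypothetical approach is sketched below: label each segment with its highest-scoring class when that score clears a threshold and the class is not background, then merge consecutive segments with the same label into (class, first segment, last segment) intervals. The threshold, the background index and the function name are all assumptions.

```python
from typing import List, Optional, Tuple


def detect_actions(
    scores: List[List[float]], threshold: float = 0.5, background: int = 0
) -> List[Tuple[int, int, int]]:
    """scores[t][c]: score of class c for segment t (hypothetical layout).

    Returns (class, first_segment, last_segment) intervals, inclusive.
    """
    labels: List[Optional[int]] = []
    for vec in scores:
        best = max(range(len(vec)), key=vec.__getitem__)
        keep = vec[best] >= threshold and best != background
        labels.append(best if keep else None)

    detections: List[Tuple[int, int, int]] = []
    start: Optional[int] = None
    for t, lab in enumerate(labels + [None]):  # trailing None closes the last run
        if start is not None and lab != labels[start]:
            detections.append((labels[start], start, t - 1))
            start = None
        if start is None and lab is not None:
            start = t
    return detections
```

Thresholding per segment is the crudest form of temporal grouping; practical systems usually add smoothing or a minimum interval length to suppress one-segment flickers.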

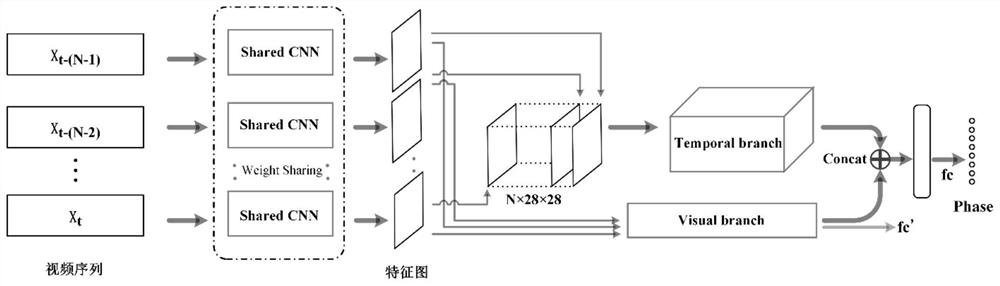

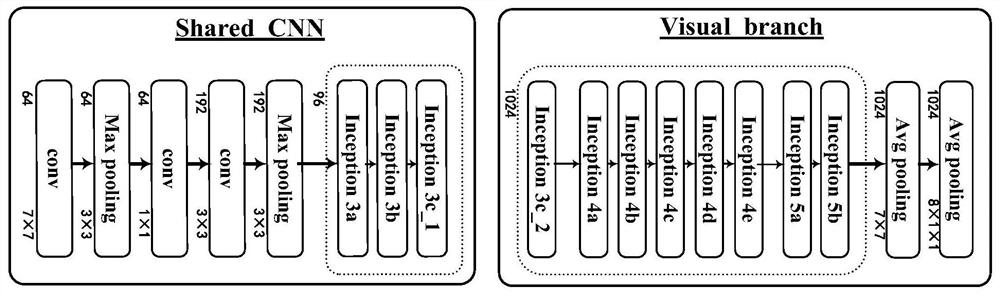

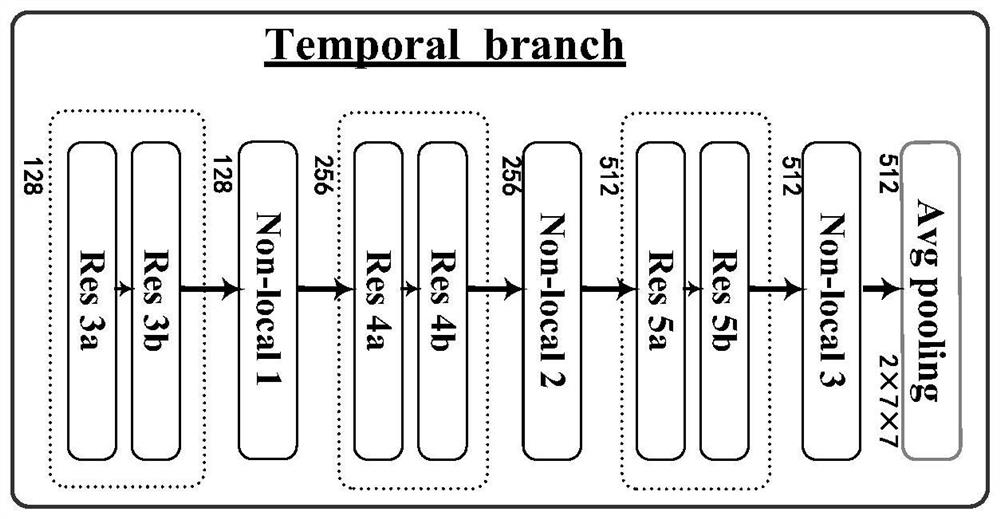

Laparoscopic surgery stage automatic recognition method and device based on double-flow network

Pending | CN111783520A | Reduce the amount of parameters | Achieve training optimization | Neural architectures | Neural learning methods | Engineering | Video sequence

The automatic recognition method and device for laparoscopic surgery stages based on a double-flow network meet the requirements of the recognition task, enable end-to-end training optimization of the network, and greatly improve the recognition accuracy for laparoscopic surgery stages. The method comprises the following steps: acquiring a laparoscopic cholecystectomy video to obtain a sequence of video key frames; preliminarily extracting visual features from N images at once with a shared convolutional neural network (CNN), and using the resulting feature maps as input to the subsequent double-flow network structure; and extracting the temporal correlation information and the deep visual semantic information of the video sequence with the two branches of the double-flow structure: a visual branch, following the shared CNN, further extracts deep visual semantic information, while a temporal branch uses three-dimensional convolution and non-local convolution to fully capture the temporal correlation among the N adjacent images. The deep visual semantic information and the temporal correlation information extracted by the double-flow structure complement each other, and the surgical stage recognition result is obtained from the fused features.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
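The temporal branch in the laparoscopic surgery abstract uses non-local convolution across N adjacent frames. A stripped-down non-local operation is sketched below, assuming each frame has already been reduced to a feature vector: every frame attends to all N frames with softmax-normalized dot-product weights, and the weighted sum is added back through a residual connection. Real non-local blocks also apply learned embeddings and an output projection; those are omitted here, so this illustrates the mechanism rather than the patent's network, and the function name is hypothetical.

```python
import math
from typing import List

Vector = List[float]


def non_local(frames: List[Vector]) -> List[Vector]:
    """Each frame aggregates information from every frame in the clip."""
    out = []
    for xi in frames:
        # Pairwise similarity: dot product between frame i and every frame j.
        logits = [sum(a * b for a, b in zip(xi, xj)) for xj in frames]
        m = max(logits)  # subtract the max for a numerically stable softmax
        exps = [math.exp(z - m) for z in logits]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of all frames, one feature dimension at a time.
        agg = [sum(w * xj[d] for w, xj in zip(weights, frames)) for d in range(len(xi))]
        out.append([x + y for x, y in zip(xi, agg)])  # residual connection
    return out
```

Unlike a 3D convolution, whose temporal receptive field is limited by its kernel size, every output here depends on all N frames, which is why the two are paired in the temporal branch.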

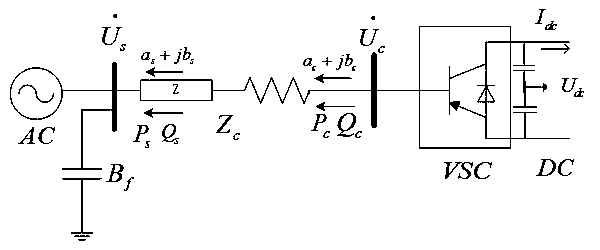

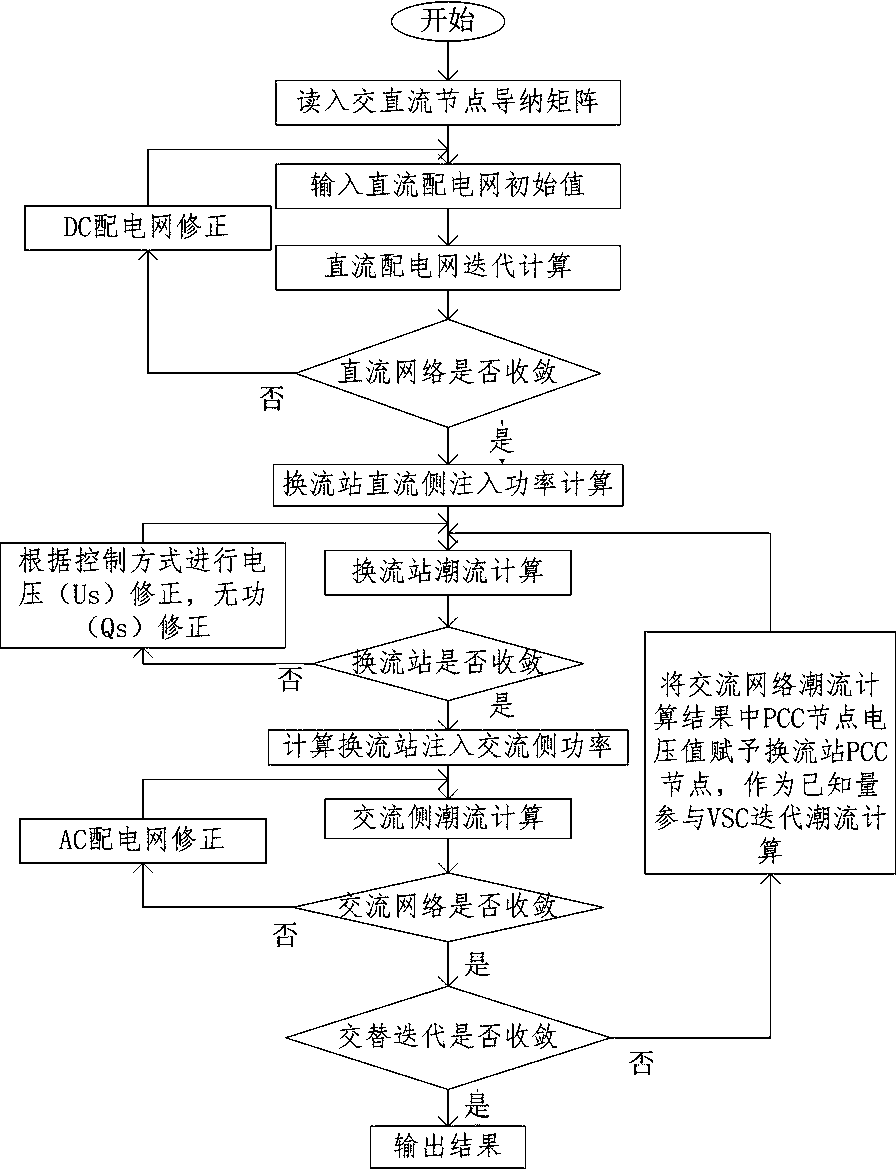

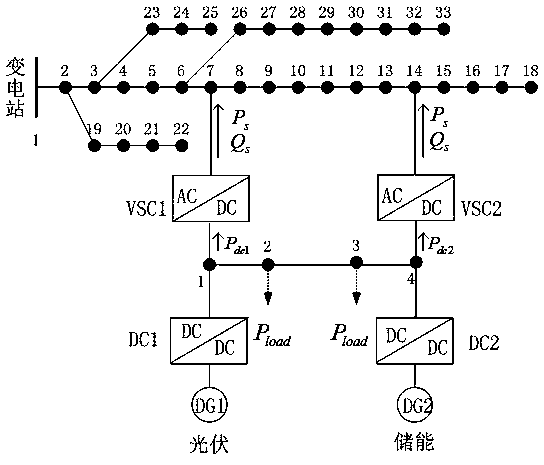

Load flow calculation method based on VSC internal correction equation matrix and alternate iteration method under augmented rectangular coordinates

Active | CN110932282A | Fast convergence | Improve computing efficiency | AC networks with different sources, same frequency | Power flow | Network structure

The invention relates to a load flow calculation method based on a VSC internal correction equation matrix and an alternating iteration method under augmented rectangular coordinates. Computational efficiency is improved by exploiting the linear relationship between node voltage and node injected current in the augmented rectangular coordinate model, together with the very high sparsity of the Jacobian matrix under that model. A correction equation and a Jacobian matrix are provided for the internal power flow calculation of a VSC (voltage source converter) under augmented rectangular coordinates, and a VSC internal power flow model is established in those coordinates. The VSC is decoupled from the AC network and the DC network; active power control is adjusted while the original reactive power control is retained, and the network structure is kept unchanged. The result is an AC/DC decoupled power flow algorithm model under augmented rectangular coordinates that needs only one round of DC-side power flow calculation and one round of alternating iterative calculation between the VSC and the AC network. This reduces the size of the Jacobian matrix and improves the convergence and computational efficiency of the power flow calculation.

Owner:FUZHOU UNIV
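The load flow abstract relies on the fact that, in rectangular coordinates (V = e + jf), node injected currents are linear in the voltage components: I = Y V, where Y is the bus admittance matrix. Complex power then follows as S_i = V_i conj(I_i), which is quadratic in e and f, so Jacobian entries in rectangular form are linear in the voltage components. The sketch computes currents and powers for a small network with Python complex numbers; it covers only the AC network side, not the patent's VSC model or its alternating iteration, and the function names and two-bus data are made up for illustration.

```python
from typing import List


def injected_currents(ybus: List[List[complex]], v: List[complex]) -> List[complex]:
    """I = Y V: each current is linear in the rectangular voltages e + jf."""
    return [sum(y * vk for y, vk in zip(row, v)) for row in ybus]


def injected_powers(ybus: List[List[complex]], v: List[complex]) -> List[complex]:
    """S_i = V_i * conj(I_i); the real part is P_i and the imaginary part is Q_i."""
    return [vi * ii.conjugate() for vi, ii in zip(v, injected_currents(ybus, v))]
```

For a lossless line with series admittance -10j pu between two buses, `ybus = [[-10j, 10j], [10j, -10j]]` and voltages `[1 + 0j, 1 - 0.1j]` give S = 1 pu at bus 0 and -1 + 0.1j pu at bus 1: the active power injected at one end is absorbed at the other.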

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com