Behavior recognition method and system based on an attention-mechanism dual-stream network

A behavior recognition method and system based on a dual-stream network with an attention mechanism, applied in the field of behavior recognition. It addresses two problems that reduce classification accuracy: video data is poorly utilized, and existing dual-stream networks do not consider the weights of image features. Introducing the attention mechanism suppresses irrelevant information and thereby improves the accuracy of behavior recognition.

Examples

Embodiment 1

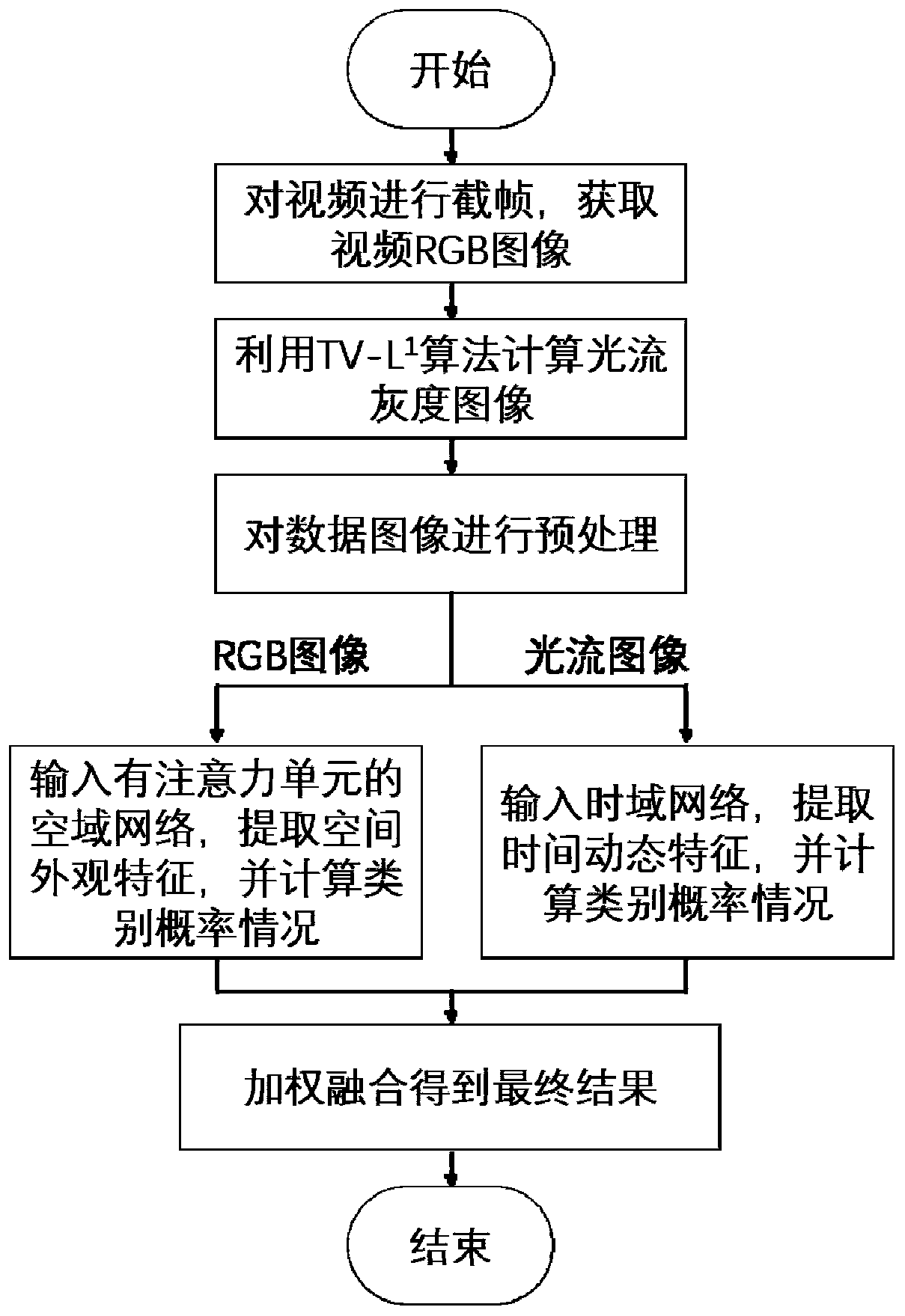

[0033] As shown in Figure 1, Embodiment 1 of the present disclosure provides a behavior recognition method based on an attention-mechanism dual-stream network, comprising the following steps:

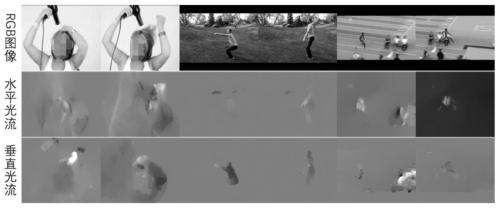

[0034] Dividing the entire acquired video into multiple video clips of equal length, extracting the RGB image and the optical-flow grayscale images of each frame in each video clip, and preprocessing them;
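The segmentation and preprocessing in [0034] can be illustrated with a minimal Python sketch. The source gives no clip count, flow algorithm, or normalization constants, so every choice here is an assumption: leftover frames that cannot fill a whole clip are dropped, each optical-flow component is clipped to ±20 pixels and rescaled linearly to an 8-bit grayscale image (a convention used in several two-stream implementations), and RGB frames are standardized with ImageNet channel statistics. The function names are hypothetical.

```python
import numpy as np

# ImageNet channel statistics: an assumed choice, not specified by the source.
RGB_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
RGB_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def split_into_clips(num_frames: int, num_clips: int) -> list:
    """Split frame indices 0..num_frames-1 into num_clips contiguous, equal-length clips.

    Leftover frames at the end that cannot fill a whole clip are dropped (assumption).
    """
    clip_len = num_frames // num_clips
    if clip_len == 0:
        raise ValueError("video has fewer frames than requested clips")
    return [range(k * clip_len, (k + 1) * clip_len) for k in range(num_clips)]


def flow_to_grayscale(flow: np.ndarray, bound: float = 20.0) -> np.ndarray:
    """Map one optical-flow component (float, pixels per frame) to a uint8 grayscale image.

    Values are clipped to [-bound, bound] and rescaled linearly to [0, 255].
    """
    clipped = np.clip(flow, -bound, bound)
    scaled = (clipped + bound) * (255.0 / (2.0 * bound))
    return np.round(scaled).astype(np.uint8)


def normalize_rgb(frame: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 uint8 RGB frame to float32 and standardize each channel."""
    return (frame.astype(np.float32) / 255.0 - RGB_MEAN) / RGB_STD
```

For example, `split_into_clips(100, 3)` yields `range(0, 33)`, `range(33, 66)` and `range(66, 99)`, so frame 99 is not used under this assumed rule.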

[0035] Randomly sampling the preprocessed images to obtain at least one RGB image and at least one optical-flow grayscale image for each video clip;
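One plausible reading of the random sampling in [0035] is the segment-based rule used in temporal segment networks: draw one frame index uniformly at random from each equal-length clip, so every training sample still spans the whole video. The sketch below assumes that rule; `sample_frame_indices` and its `rng` parameter are hypothetical names.

```python
import random
from typing import List, Optional


def sample_frame_indices(num_frames: int, num_clips: int,
                         rng: Optional[random.Random] = None) -> List[int]:
    """Return one randomly chosen frame index per equal-length clip.

    Clip k covers frames [k * clip_len, (k + 1) * clip_len); frames beyond the
    last full clip are ignored, matching an equal-length split.
    """
    rng = rng if rng is not None else random.Random()
    clip_len = num_frames // num_clips
    if clip_len == 0:
        raise ValueError("video has fewer frames than requested clips")
    # randrange(clip_len) draws an offset uniformly from 0..clip_len-1.
    return [k * clip_len + rng.randrange(clip_len) for k in range(num_clips)]
```

Calling `sample_frame_indices(300, 3, random.Random(0))` returns three indices, one each from frames 0-99, 100-199 and 200-299; passing a seeded `random.Random` makes the draw reproducible.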

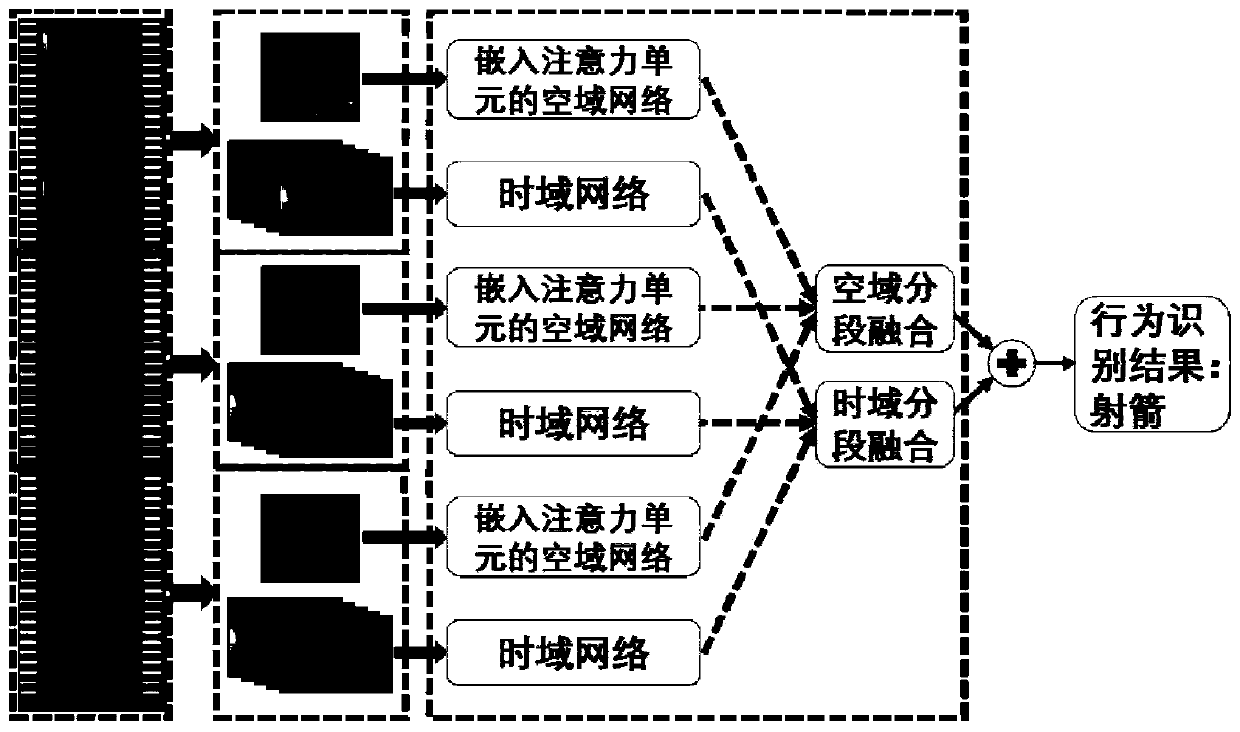

[0036] Using the dual-stream network model into which the attention mechanism is introduced, extracting appearance features and temporal dynamic features from the sampled images; fusing the extracted features of the video clips separately for the temporal-domain network and the spatial-domain network; and performing weighted fusion of the fusion result of the temporal-domain network with that of the spatial-domain network to obtain ...
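The source does not say which attention module is introduced into the dual-stream backbone. One common way to let a network weight its image features is squeeze-and-excitation (SE) channel attention: pool each channel to a scalar, pass the resulting vector through a small bottleneck MLP, and rescale the channels by the sigmoid outputs. The NumPy sketch below shows that forward pass for a single C x H x W feature map; the weight arrays are hypothetical inputs, and the module illustrates learned feature weighting in general rather than the patent's specific design.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def se_channel_attention(feat, w1, b1, w2, b2):
    """Squeeze-and-excitation forward pass on one feature map.

    feat: (C, H, W) feature map from a convolutional layer.
    w1: (C // r, C), b1: (C // r,)  bottleneck layer with reduction ratio r.
    w2: (C, C // r), b2: (C,)       expansion layer back to C channels.
    Returns the reweighted feature map and the per-channel weights.
    """
    squeezed = feat.mean(axis=(1, 2))              # global average pooling -> (C,)
    hidden = np.maximum(0.0, w1 @ squeezed + b1)   # ReLU -> (C // r,)
    weights = sigmoid(w2 @ hidden + b2)            # per-channel weights in (0, 1)
    return feat * weights[:, None, None], weights
```

Channels whose weight approaches 0 are suppressed and those near 1 pass through almost unchanged, which is one way an attention mechanism can down-weight irrelevant information. With all-zero parameters every weight is 0.5, so the output is simply half the input.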

Embodiment 2

[0064] Embodiment 2 of the present disclosure provides a behavior recognition system based on an attention-mechanism dual-stream network, comprising:

[0065] The data acquisition module is configured to: divide the acquired whole video into a plurality of video clips of equal length, extract RGB images and optical flow grayscale images of each frame of each video clip, and perform preprocessing;

[0066] The image sampling module is configured to: randomly sample the preprocessed images to obtain at least one RGB image and at least one stacked optical-flow grayscale image of each video segment;
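In two-stream networks, a stacked optical-flow input usually means taking L consecutive horizontal and vertical flow images and concatenating them along the channel axis, giving a 2L-channel input to the temporal stream. A minimal sketch under that assumption follows; the interleaved x/y channel order and the default L = 5 are hypothetical choices, since the source does not give them.

```python
import numpy as np


def stack_flow(flow_x: np.ndarray, flow_y: np.ndarray, start: int,
               length: int = 5) -> np.ndarray:
    """Stack `length` consecutive flow frames into a (2 * length, H, W) array.

    flow_x, flow_y: (T, H, W) grayscale images of the horizontal and vertical
    flow components for one video segment. Channels are ordered
    x_start, y_start, x_start+1, y_start+1, ...
    """
    if flow_x.shape != flow_y.shape:
        raise ValueError("x and y flow must have the same shape")
    if start < 0 or start + length > flow_x.shape[0]:
        raise ValueError("stacking window falls outside the segment")
    stacked = np.empty((2 * length,) + flow_x.shape[1:], dtype=flow_x.dtype)
    stacked[0::2] = flow_x[start:start + length]   # even channels: x component
    stacked[1::2] = flow_y[start:start + length]   # odd channels: y component
    return stacked
```

Interleaving keeps each frame's x and y components adjacent; concatenating all x channels before all y channels would work equally well as long as training and inference use the same order.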

[0067] The behavior recognition module is configured to: use the dual-stream network model into which the attention mechanism is introduced to extract appearance features and temporal dynamic features from the sampled images, and fuse the extracted features of the video segments separately for the temporal-domain network and the spatial-domain network; the fusion result of the temporal-domain network ...
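The two-level fusion described here can be sketched under a few stated assumptions: each stream outputs per-segment class scores, segments are fused within a stream by averaging (one common segmental-consensus choice; the source does not name the operator), and the two streams are then combined by a weighted average. The defaults `spatial_weight=1.0` and `temporal_weight=1.5` are illustrative assumptions, not values from the source.

```python
import numpy as np


def segment_consensus(scores: np.ndarray) -> np.ndarray:
    """Fuse per-segment scores of one stream: (num_segments, num_classes) -> (num_classes,)."""
    return scores.mean(axis=0)


def fuse_streams(spatial_scores: np.ndarray, temporal_scores: np.ndarray,
                 spatial_weight: float = 1.0, temporal_weight: float = 1.5):
    """Weighted fusion of the spatial-domain and temporal-domain results.

    Returns the fused class scores and the index of the predicted class.
    """
    s = segment_consensus(spatial_scores)
    t = segment_consensus(temporal_scores)
    fused = (spatial_weight * s + temporal_weight * t) / (spatial_weight + temporal_weight)
    return fused, int(np.argmax(fused))
```

For example, spatial scores `[[1, 0], [1, 0]]` and temporal scores `[[0, 1], [0, 3]]` average to `[1, 0]` and `[0, 2]`; with the default weights the fused scores are `[0.4, 1.2]`, so class 1 is predicted.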

Embodiment 3

[0070] Embodiment 3 of the present disclosure provides a medium storing a program which, when executed by a processor, implements the steps of the behavior recognition method based on the attention-mechanism dual-stream network described in Embodiment 1 of the present disclosure.
