2315 results about "Multi modality" patented technology

Multimodality is a theory which looks at the many different modes that people use to communicate with each other and to express themselves.

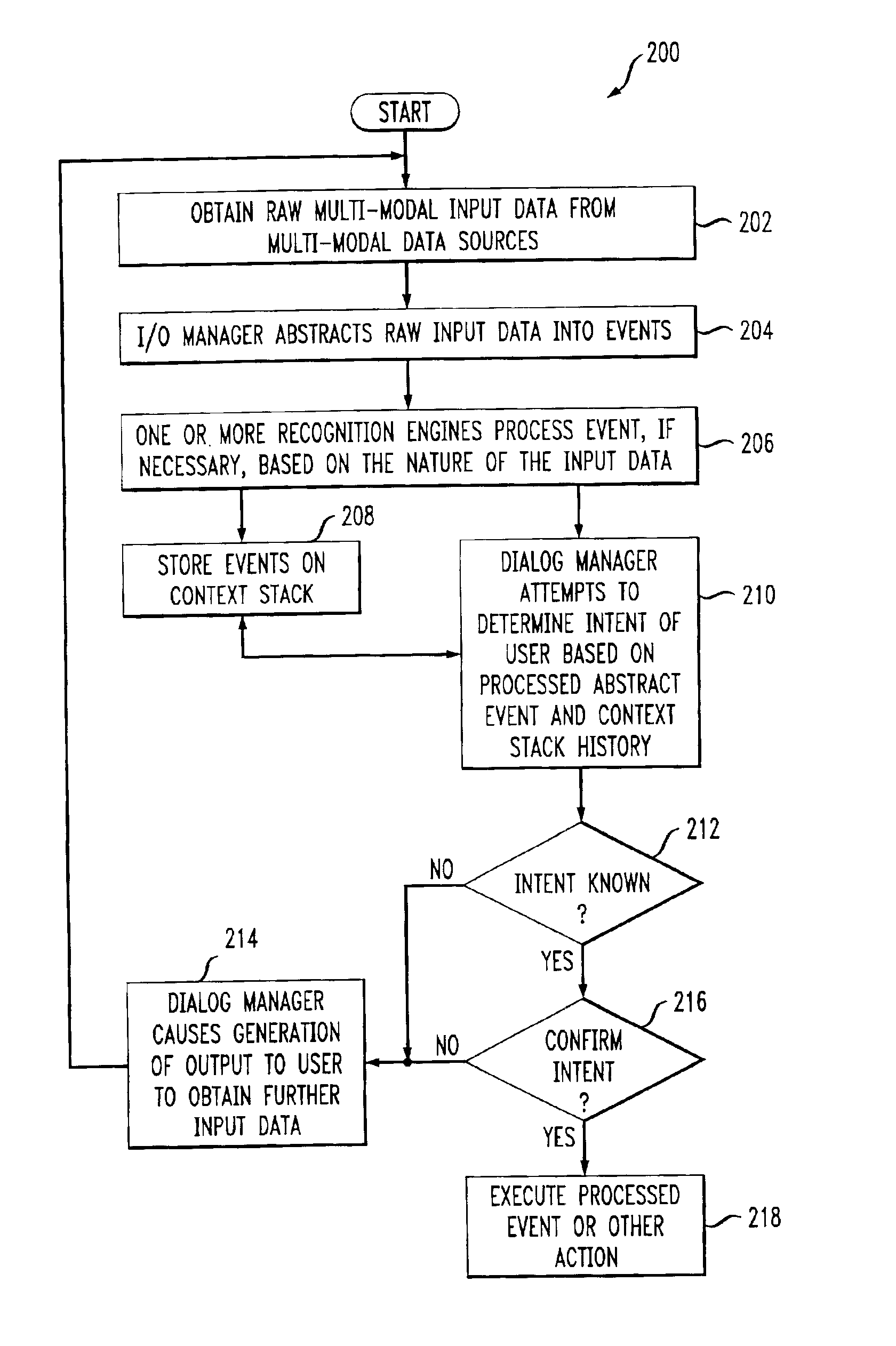

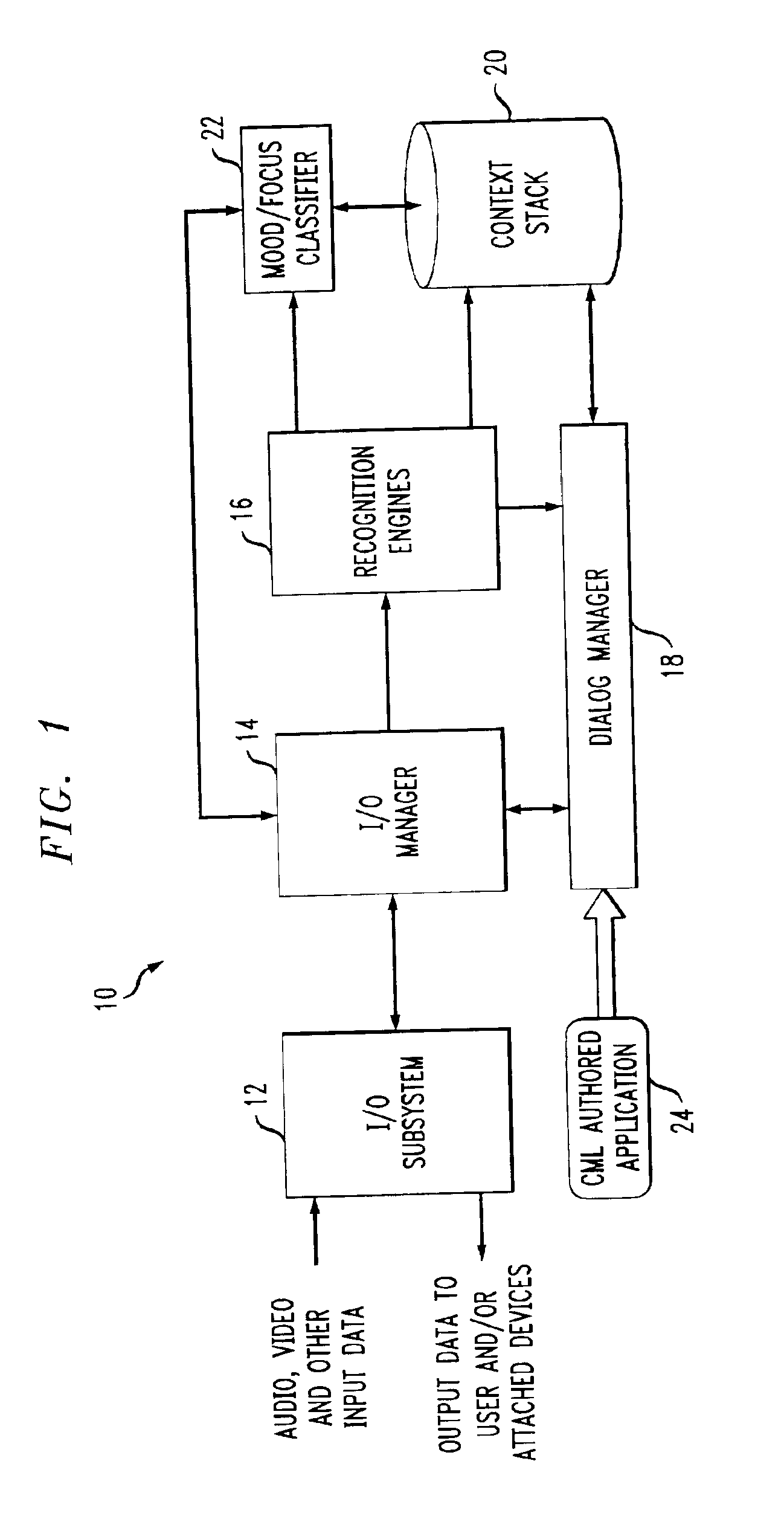

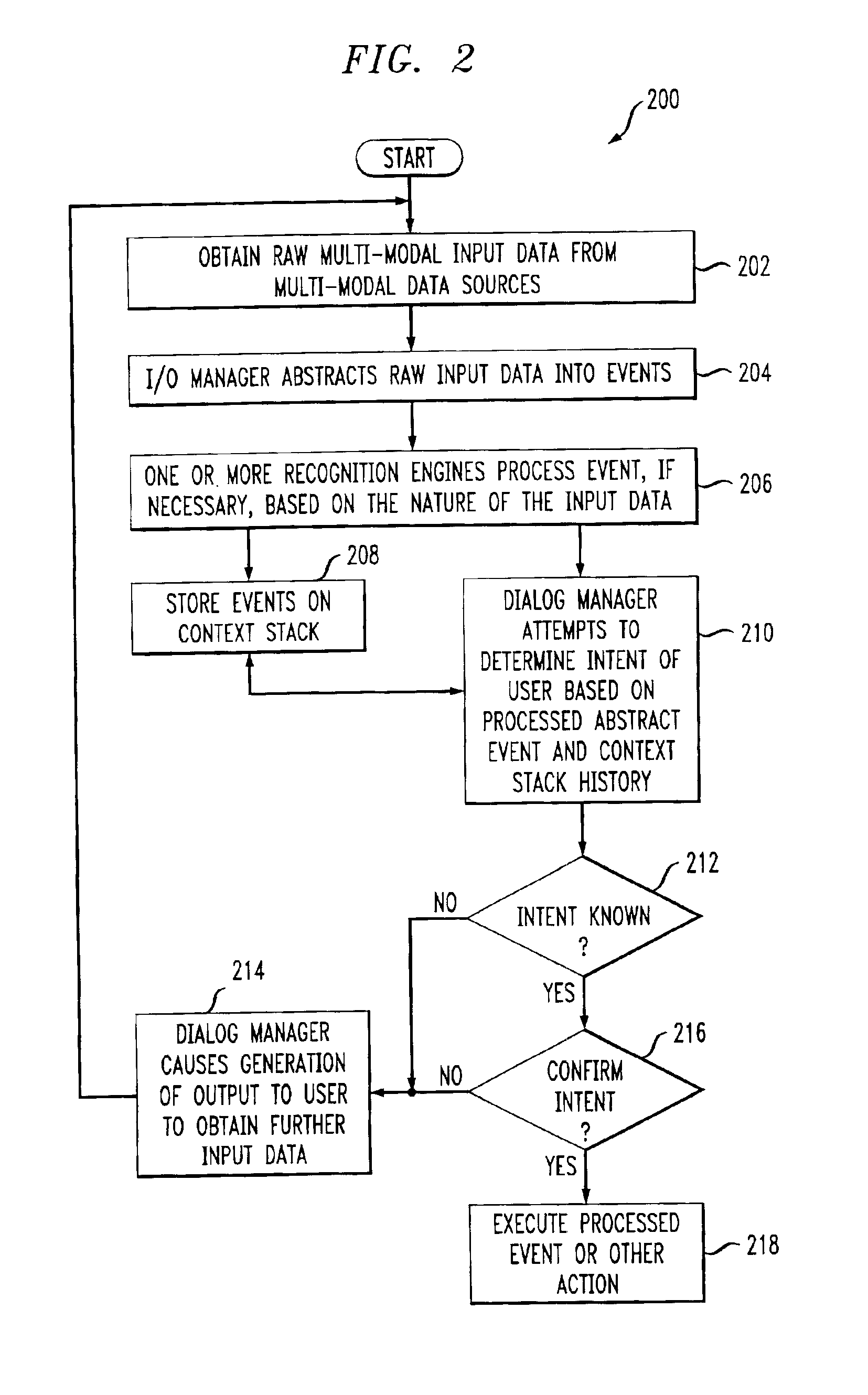

System and method for multi-modal focus detection, referential ambiguity resolution and mood classification using multi-modal input

Inactive | US6964023B2 | Effective conversational computing environment | Input/output for user-computer interaction | Data processing applications | Operant conditioning | Computer science

Owner:IBM CORP

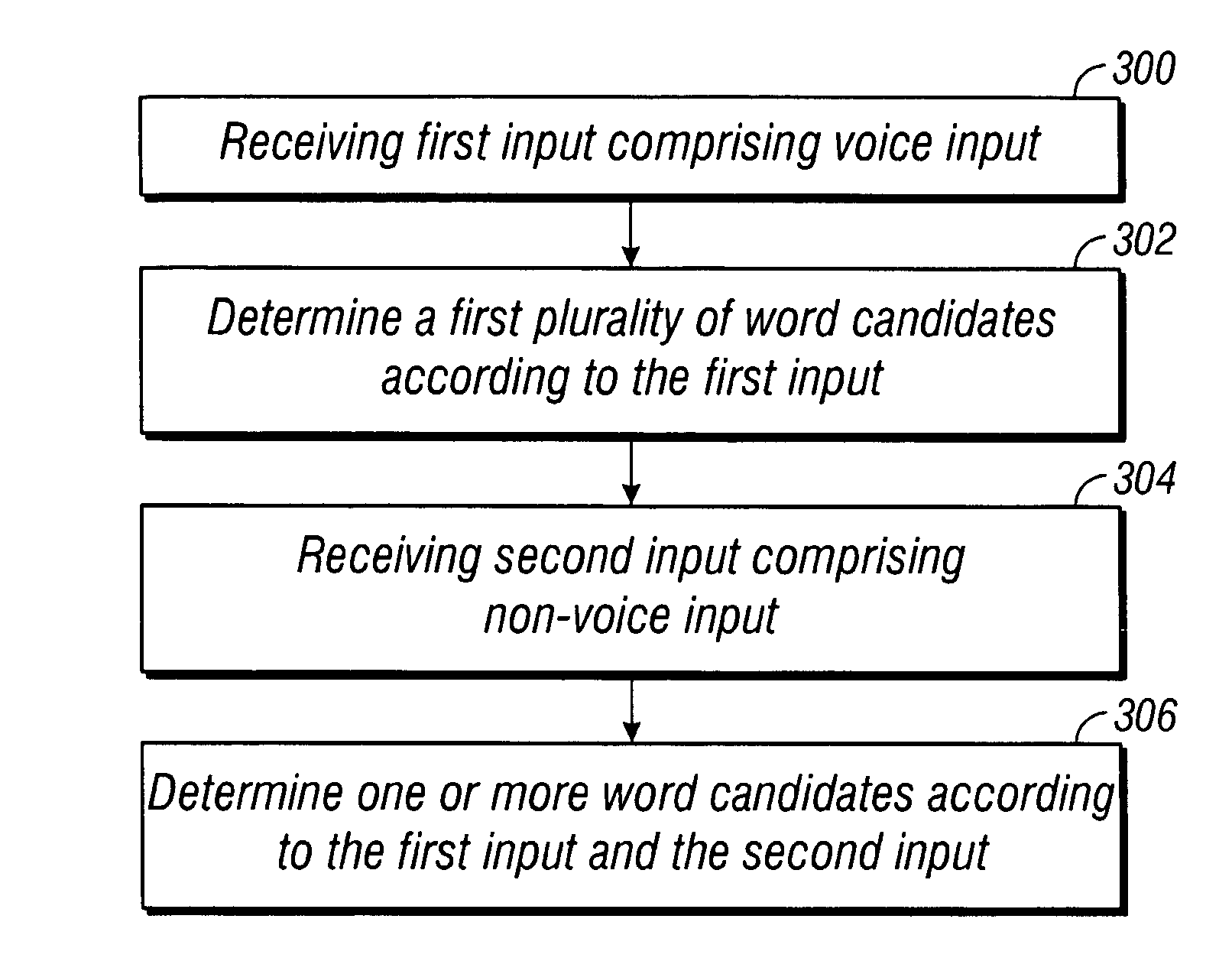

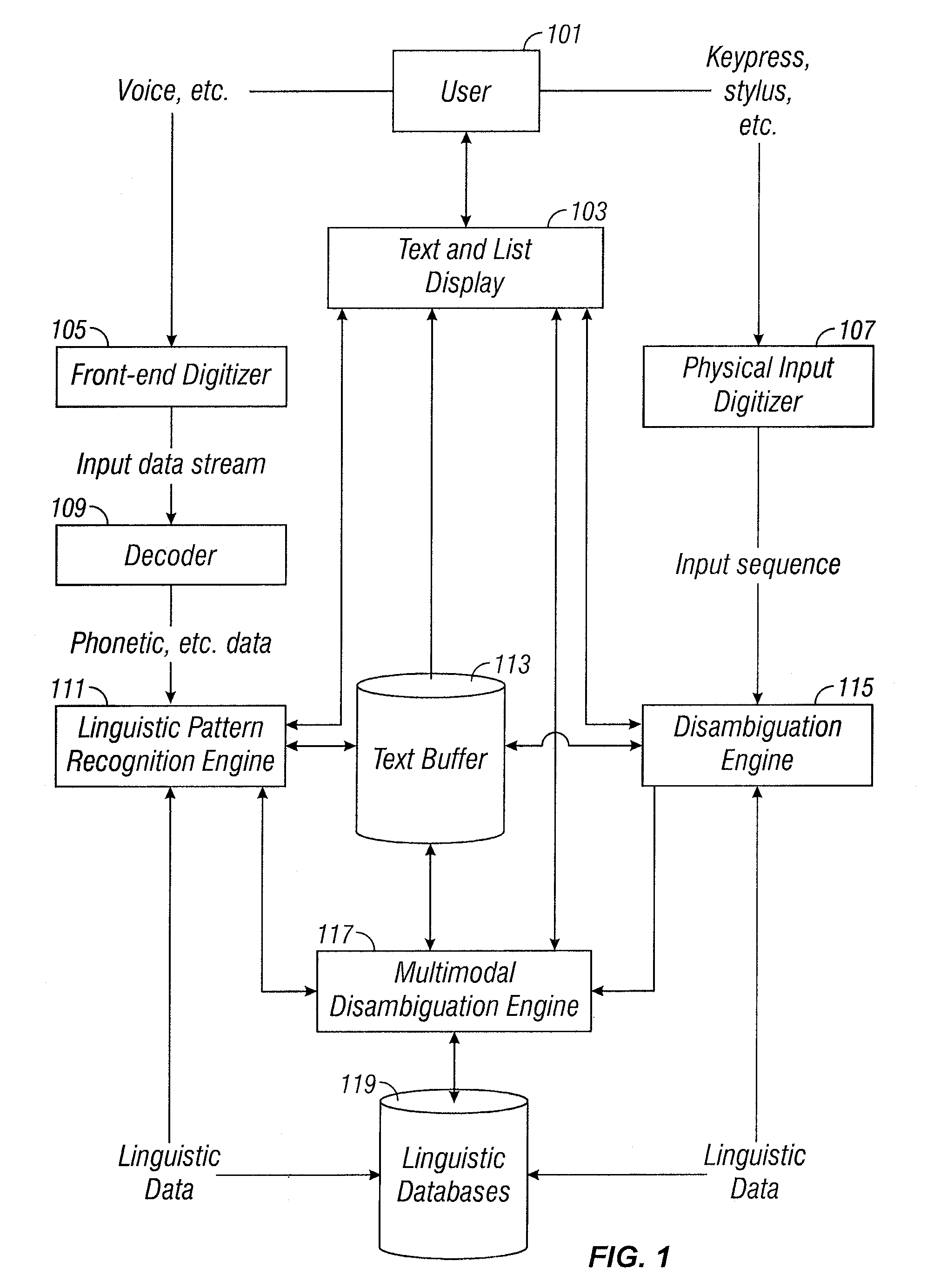

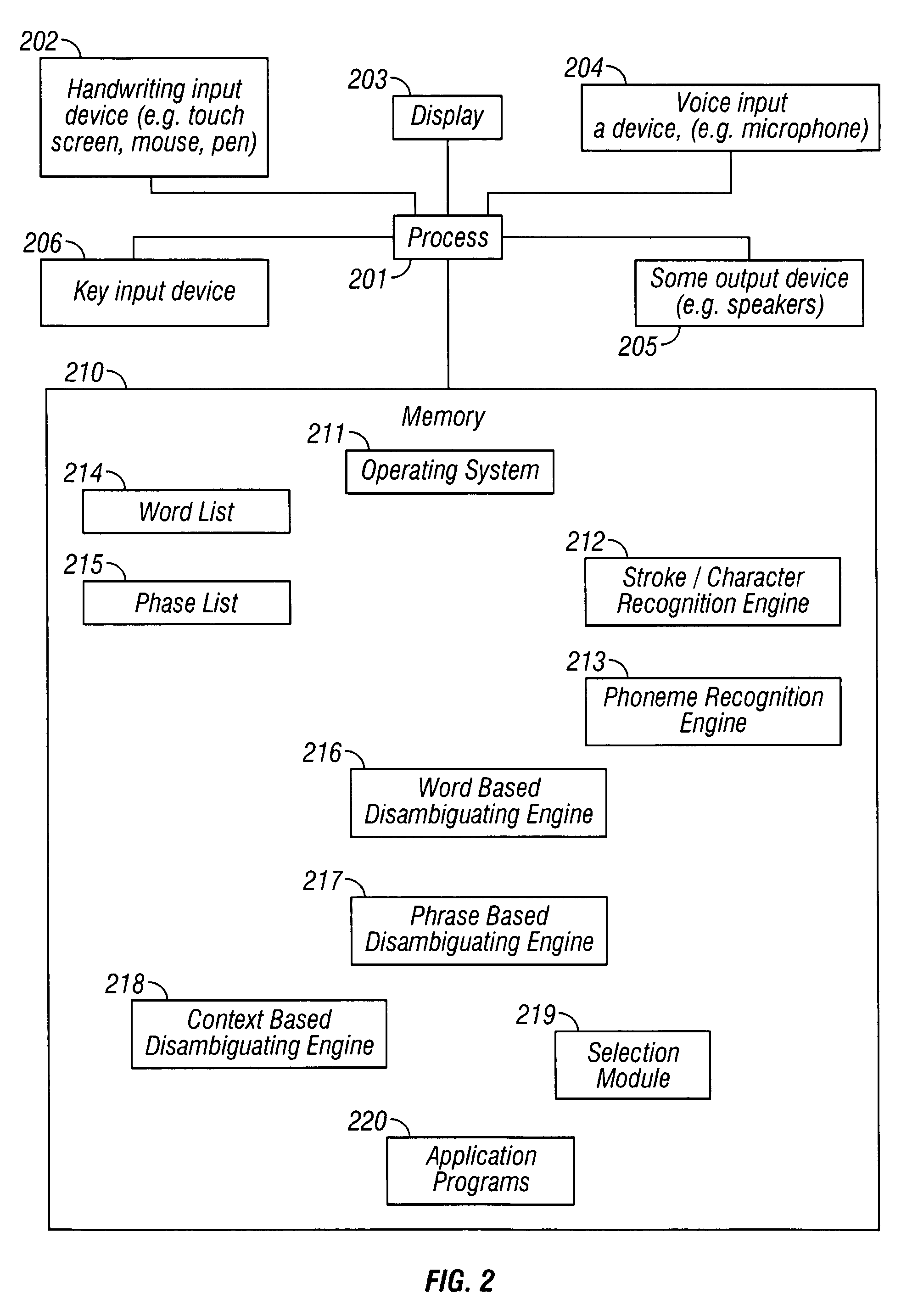

Multimodal disambiguation of speech recognition

Inactive | US7881936B2 | Efficient and accurate text input | Low accuracy | Cathode-ray tube indicators | Speech recognition | Environmental noise | Text entry

The present invention provides a speech recognition system combined with one or more alternate input modalities to ensure efficient and accurate text input. The speech recognition system achieves less than perfect accuracy due to limited processing power, environmental noise, and/or natural variations in speaking style. The alternate input modalities use disambiguation or recognition engines to compensate for reduced keyboards, sloppy input, and/or natural variations in writing style. The ambiguity remaining in the speech recognition process is mostly orthogonal to the ambiguity inherent in the alternate input modality, such that the combination of the two modalities resolves the recognition errors efficiently and accurately. The invention is especially well suited for mobile devices with limited space for keyboards or touch-screen input.

Owner:TEGIC COMM
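
The abstract of US7881936B2 above rests on the idea that speech errors and alternate-input errors are mostly orthogonal, so intersecting the two candidate sets removes most ambiguity. The following is a minimal sketch of that idea only, not the patented method. It assumes the alternate modality is a reduced 12-key keypad; the function names, the n-best list, and its scores are hypothetical.

```python
# Hypothetical illustration: filter a speech recognizer's n-best list by an
# ambiguous reduced-keypad key sequence. All names and scores are assumptions.

KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_KEY = {ch: key for key, letters in KEYPAD.items() for ch in letters}


def key_sequence(word: str) -> str:
    """Map a word to the digit sequence that would type it on the keypad."""
    return "".join(LETTER_TO_KEY[ch] for ch in word.lower())


def disambiguate(speech_nbest: list[tuple[str, float]], keys: str) -> list[tuple[str, float]]:
    """Keep speech hypotheses consistent with the key presses, best score first."""
    matches = [(word, score) for word, score in speech_nbest if key_sequence(word) == keys]
    return sorted(matches, key=lambda pair: pair[1], reverse=True)


# The recognizer confuses acoustically similar words ("cat" vs "cap"),
# while the keypad confuses words that share keys ("cat" vs "bat").
nbest = [("cat", 0.40), ("cap", 0.35), ("bat", 0.25)]
print(disambiguate(nbest, "228"))
```

Here the key sequence rules out "cap", and the speech score ranks "cat" above "bat", so each modality removes errors the other cannot.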

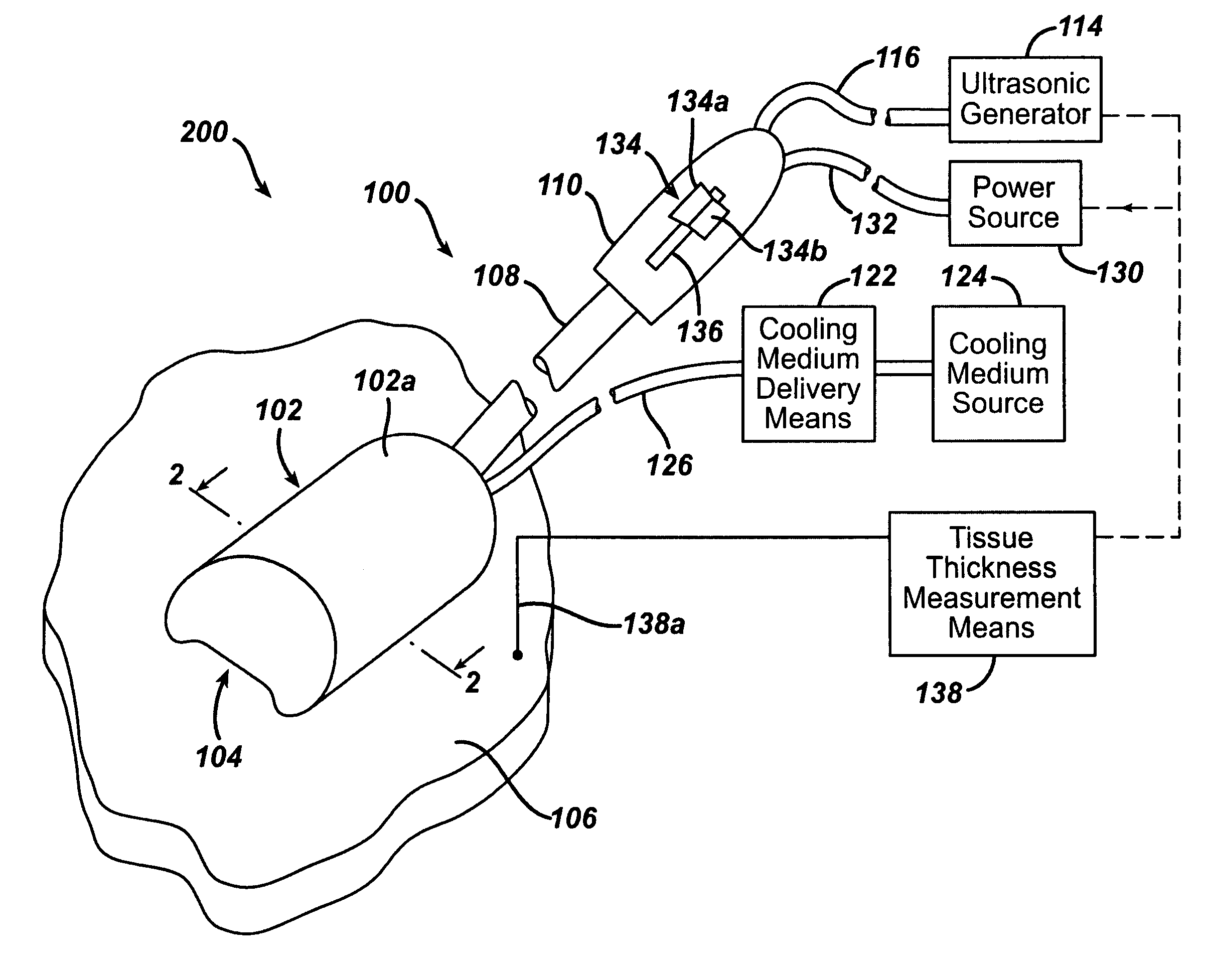

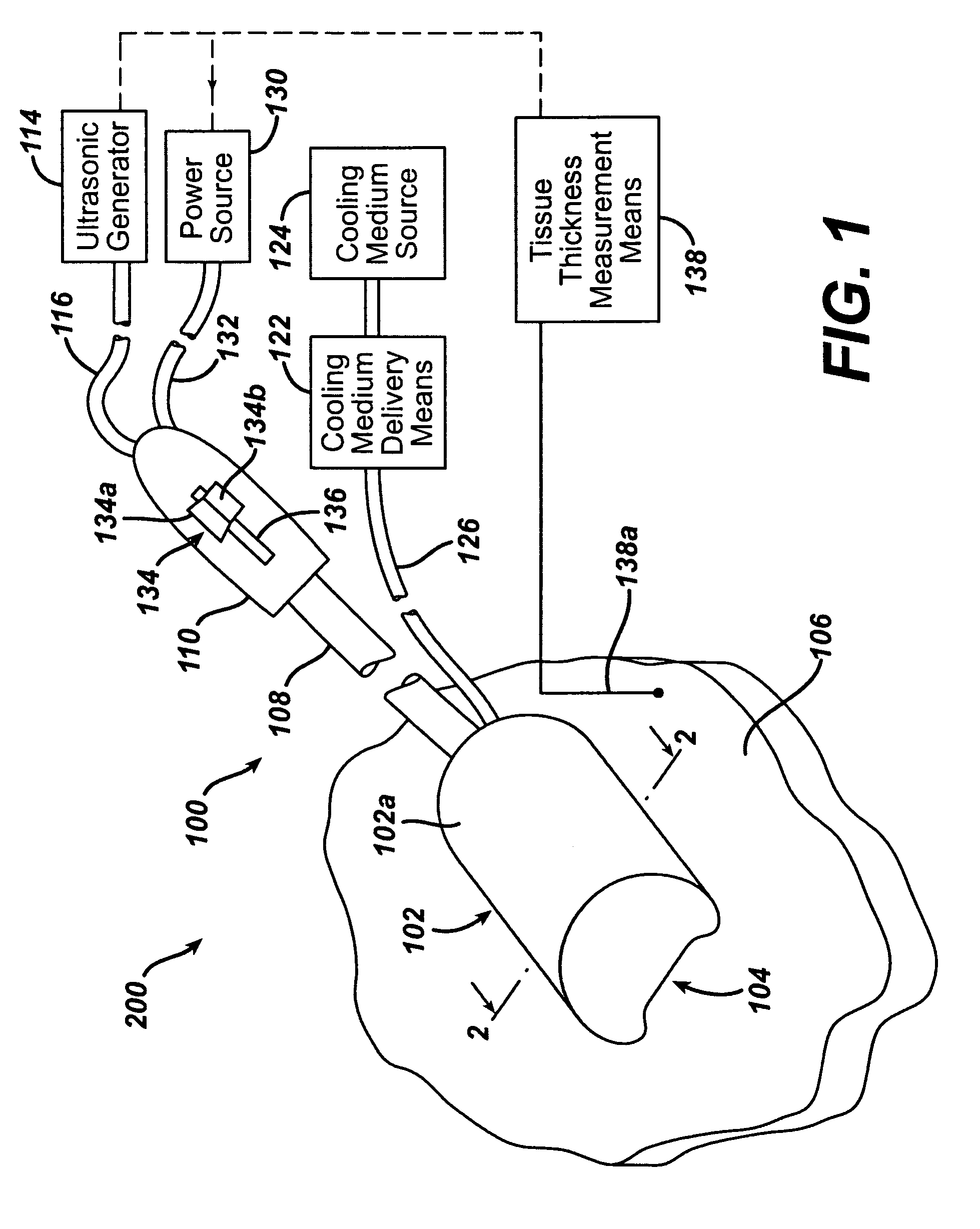

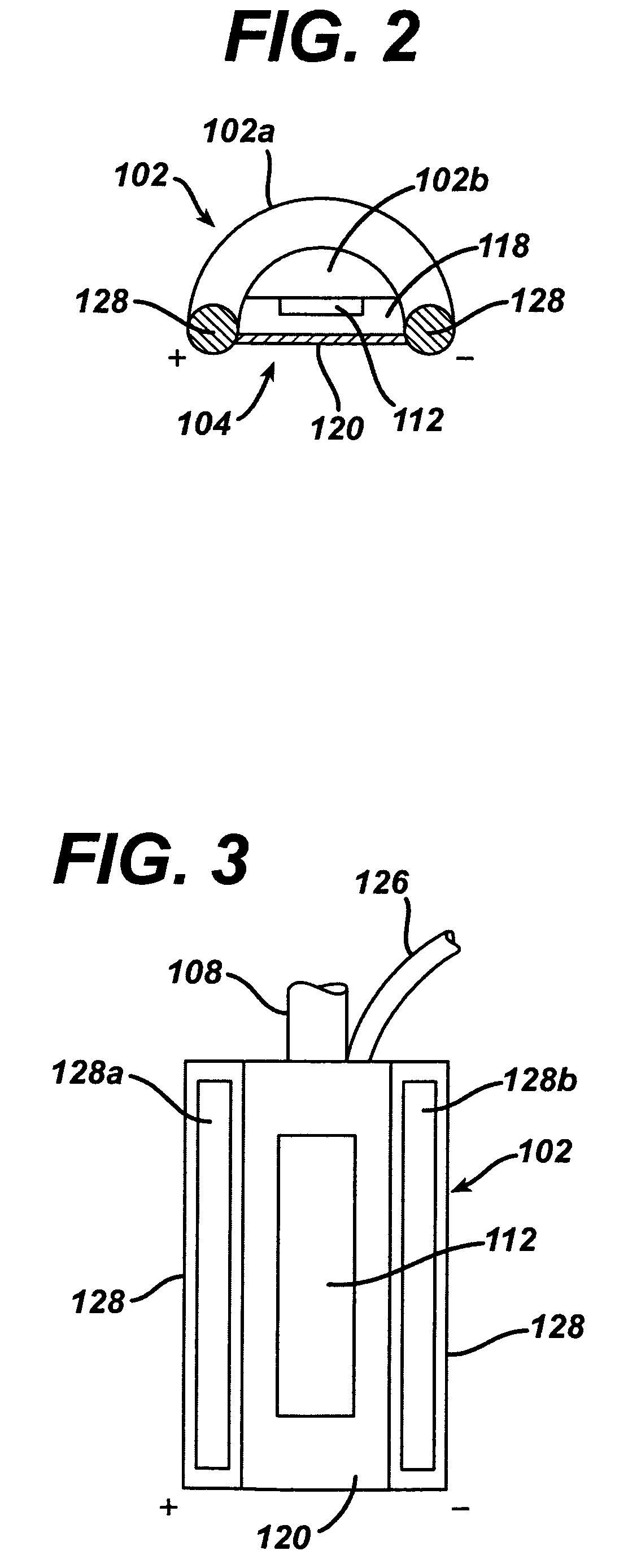

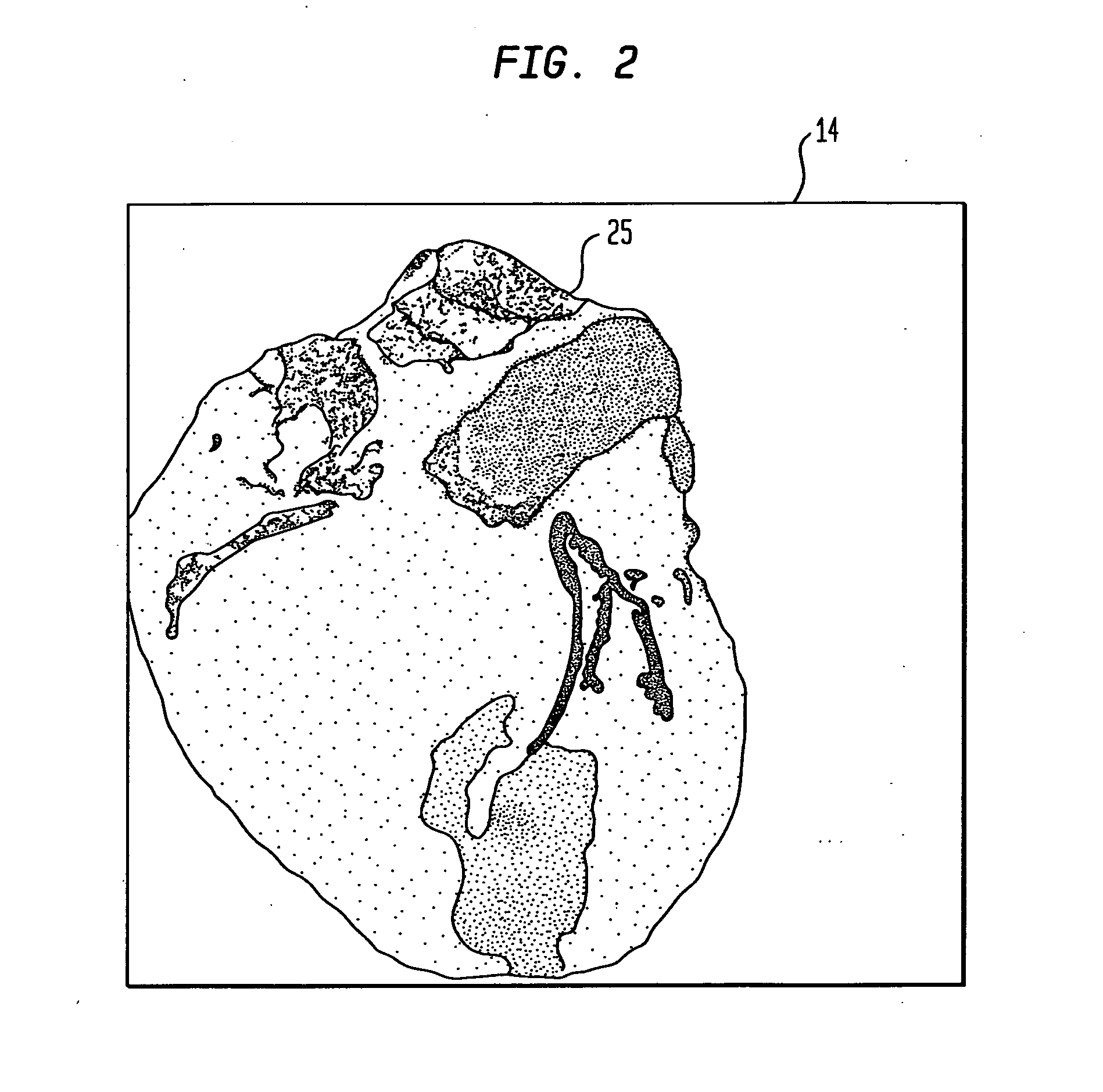

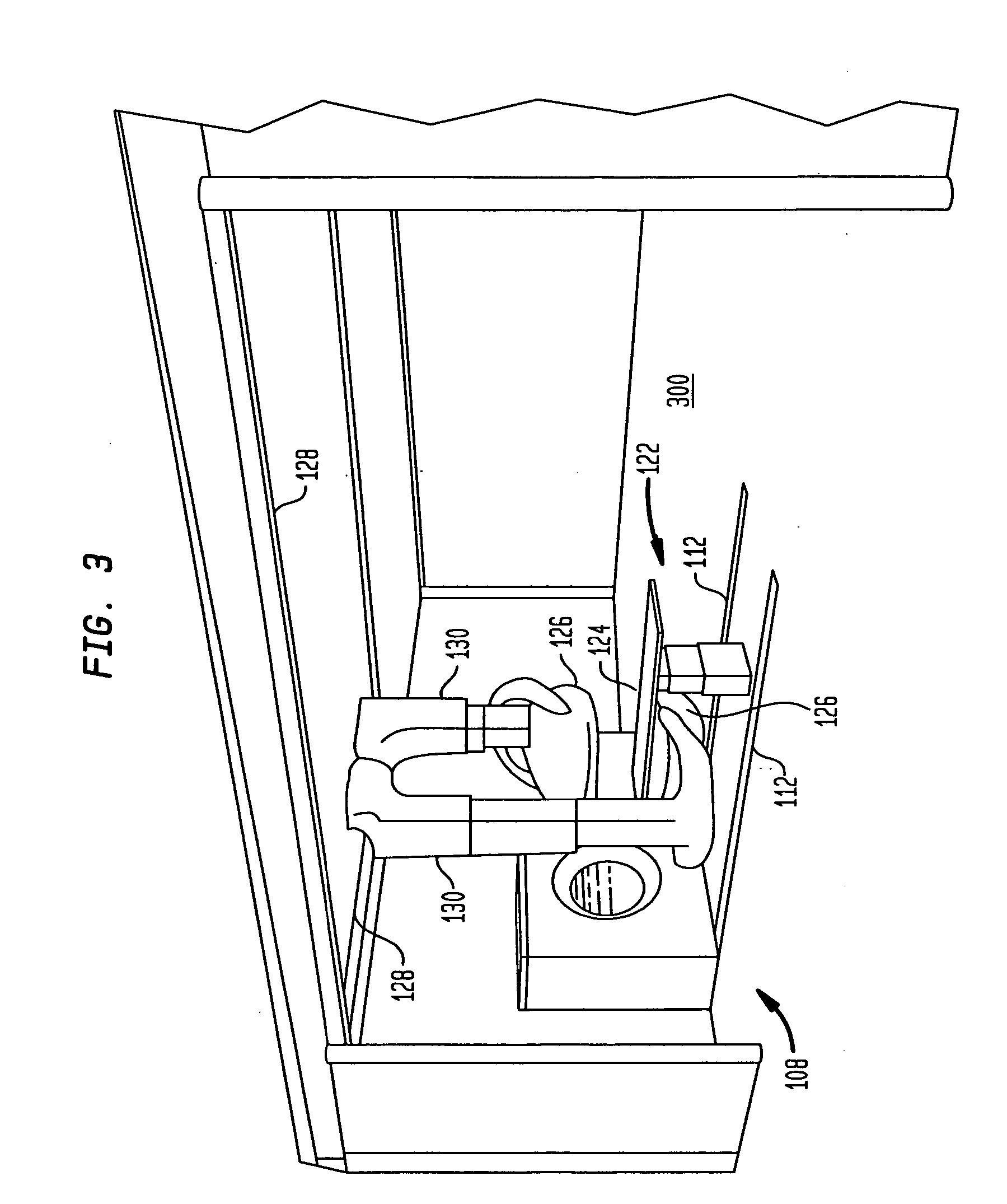

Multi-modality ablation device

Active | US7074218B2 | Minimum activation time | Effects damage | Ultrasonic/sonic/infrasonic diagnostics | Ultrasound therapy | Radio frequency | Ultrasound energy

An instrument for ablation of tissue, including: a body having at least one surface for contacting a tissue surface, the at least one surface being substantially planar; an ultrasonic transducer disposed in the body for generating ultrasonic energy and directing at least a portion of the ultrasonic energy to the tissue surface, the ultrasonic transducer being operatively connected to an ultrasonic generator; at least one radio-frequency electrode disposed on the at least one surface for directing radio frequency energy to the tissue surface, the at least one radio-frequency electrode being operatively connected to a power source; and one or more switches for selectively coupling at least one of the ultrasonic transducer to the ultrasonic generator and the at least one radio-frequency electrode to the power source.

Owner:ETHICON INC

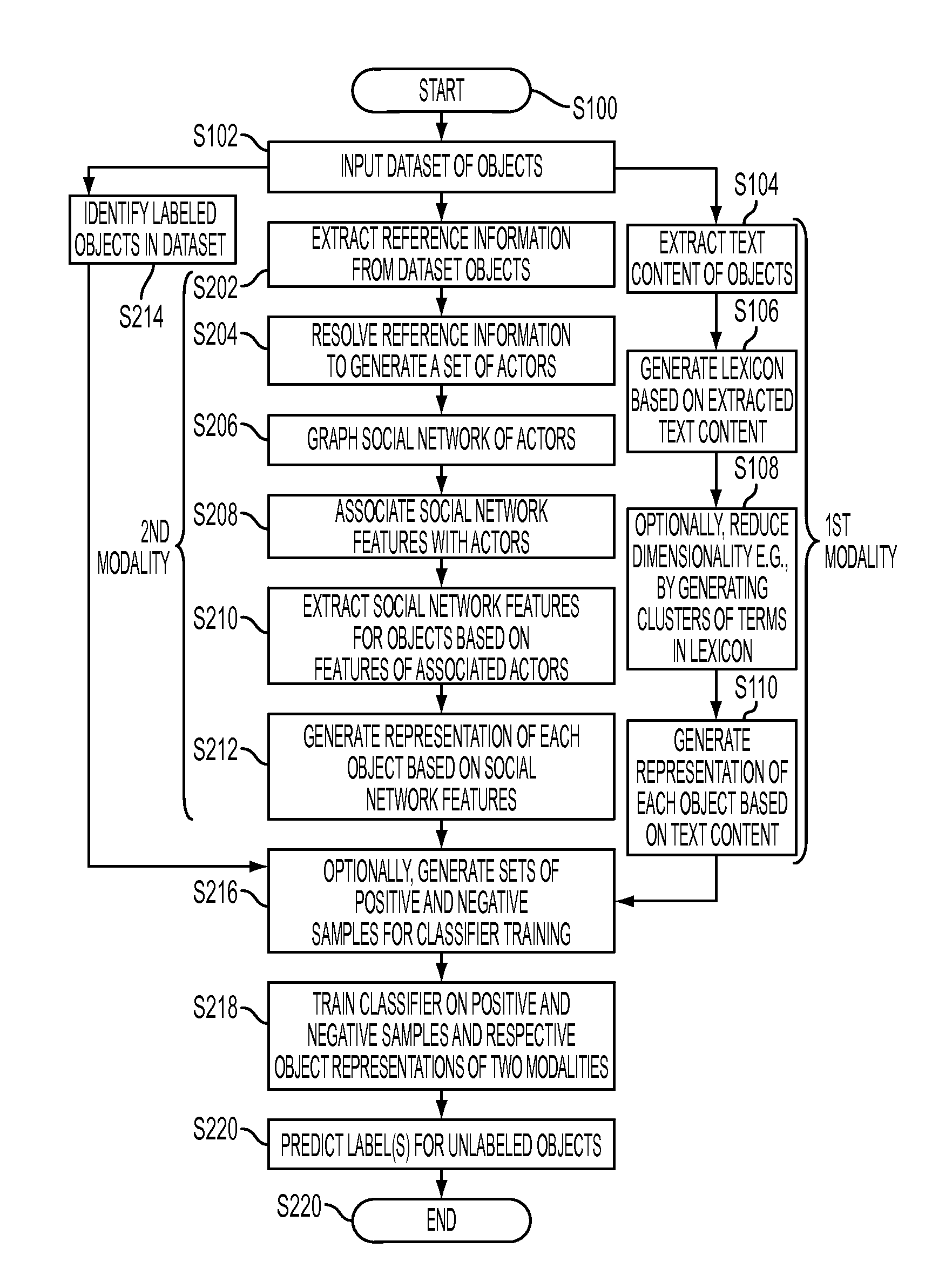

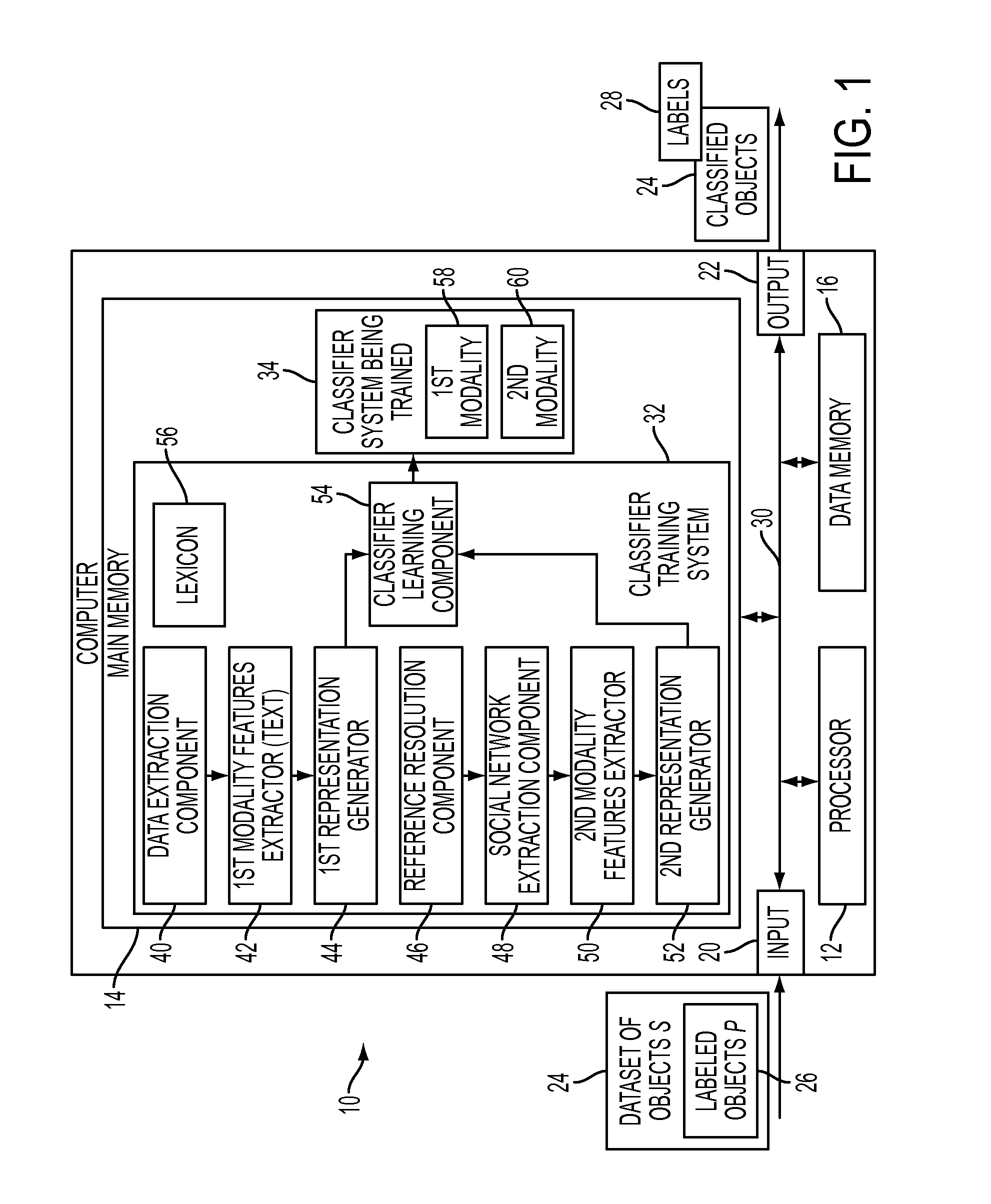

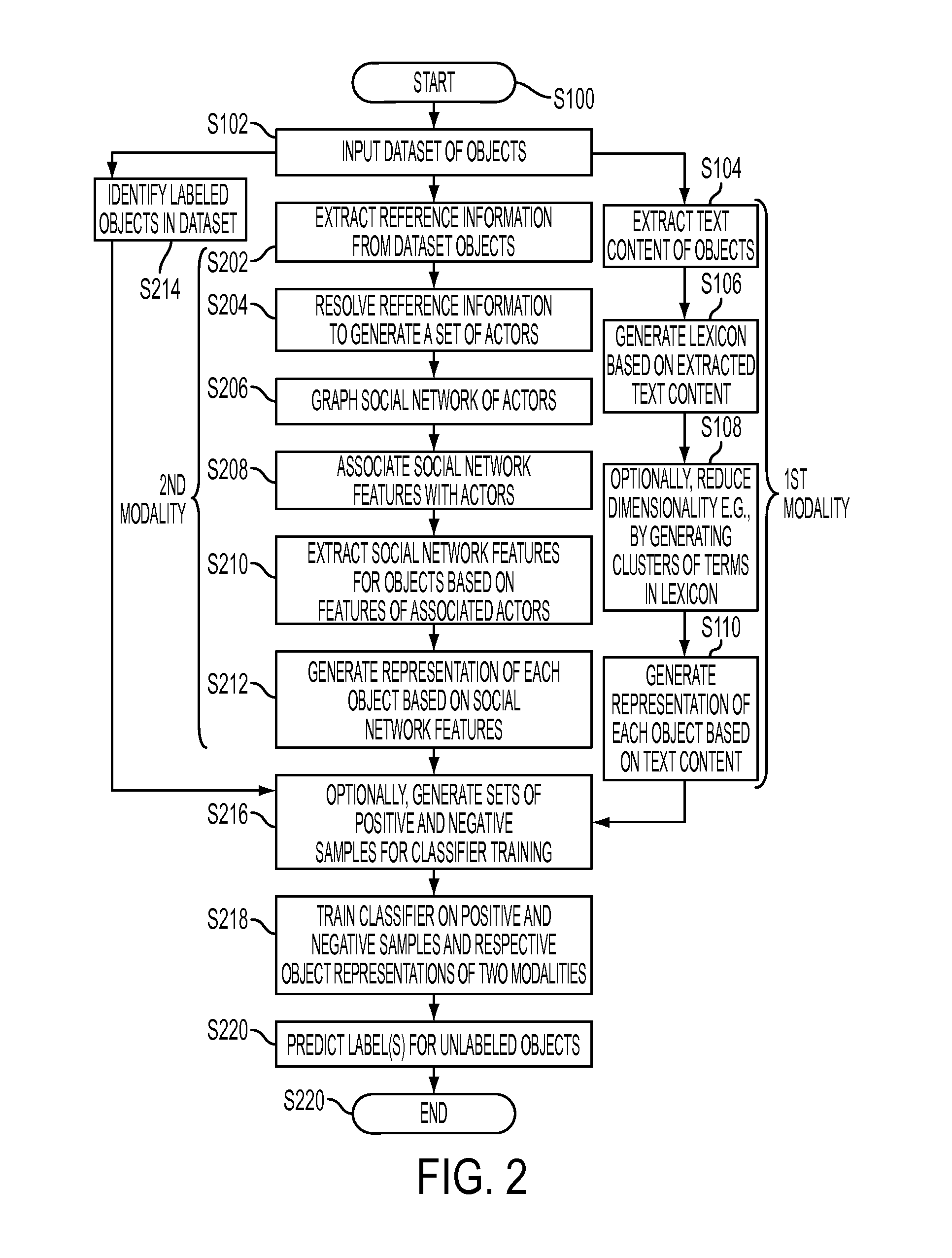

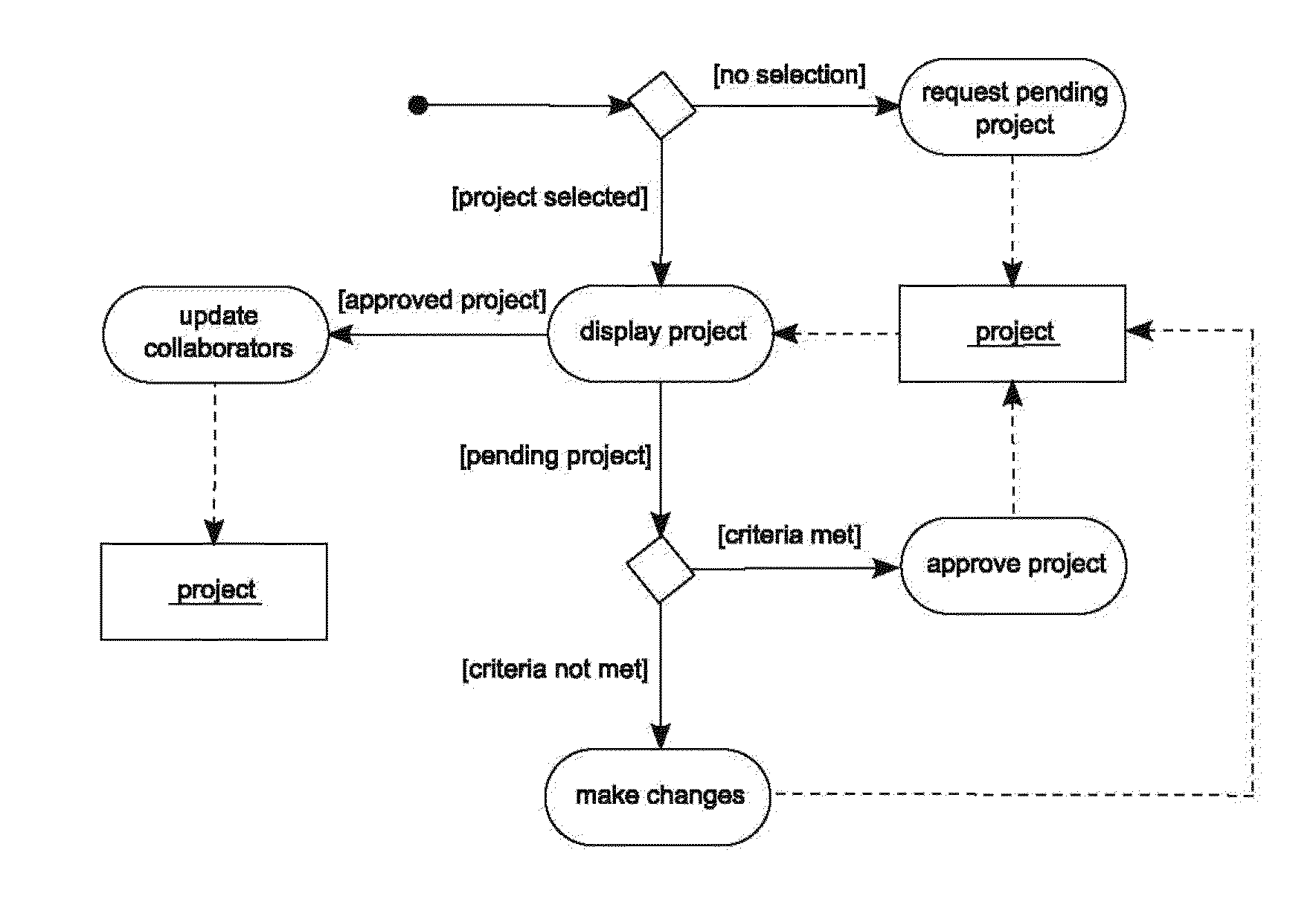

Multi-modality classification for one-class classification in social networks

Active | US20110103682A1 | Character and pattern recognition | Data switching networks | One-class classification | Data mining

A classification apparatus, method, and computer program product for multi-modality classification are disclosed. For each of a plurality of modalities, the method includes extracting features from objects in a set of objects. The objects include electronic mail messages. A representation of each object for that modality is generated, based on its extracted features. At least one of the plurality of modalities is a social network modality in which social network features are extracted from a social network implicit in the set of electronic mail messages. A classifier system is trained based on class labels of a subset of the set of objects and on the representations generated for each of the modalities. With the trained classifier system, labels are predicted for unlabeled objects in the set of objects.

Owner:XEROX CORP

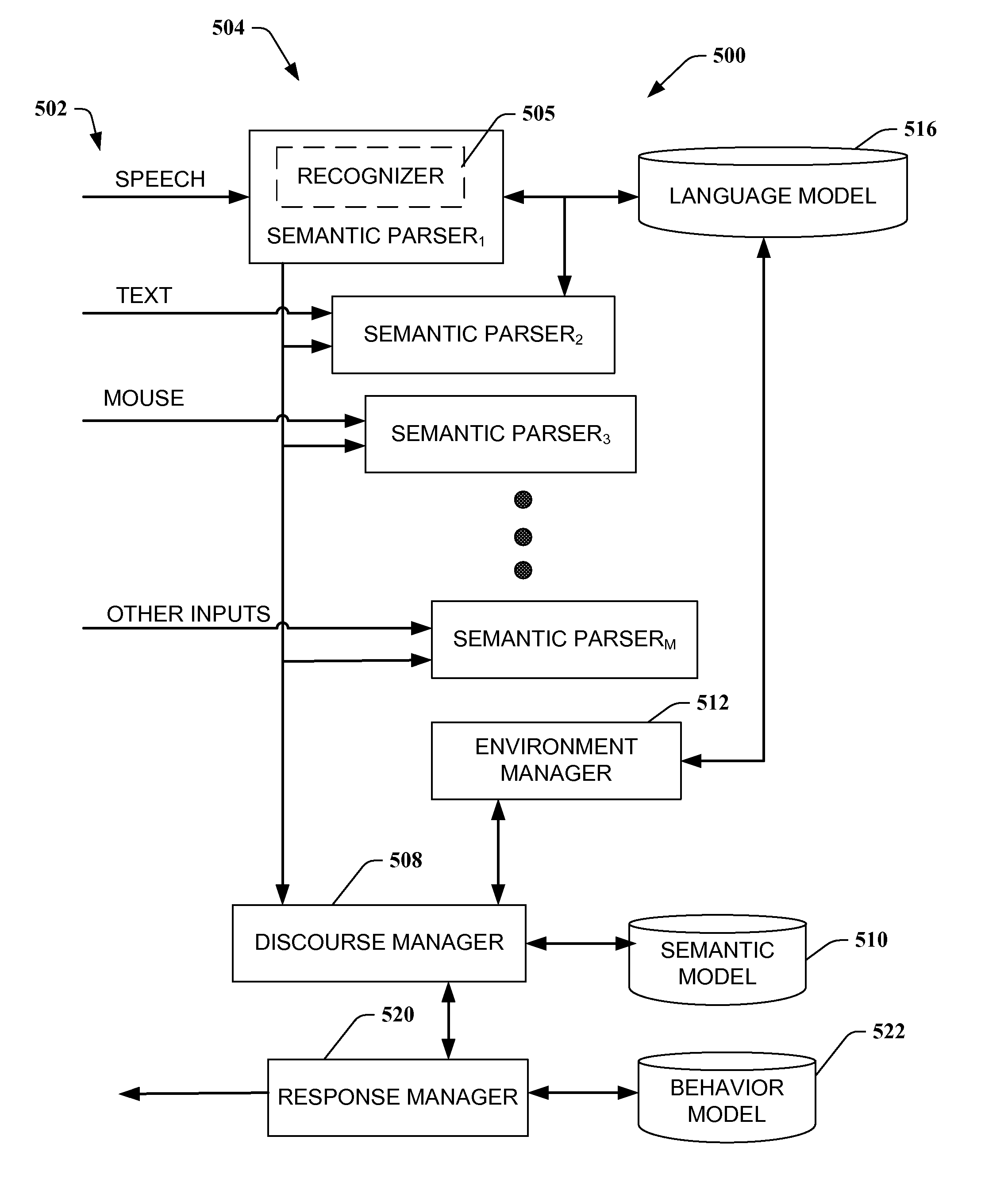

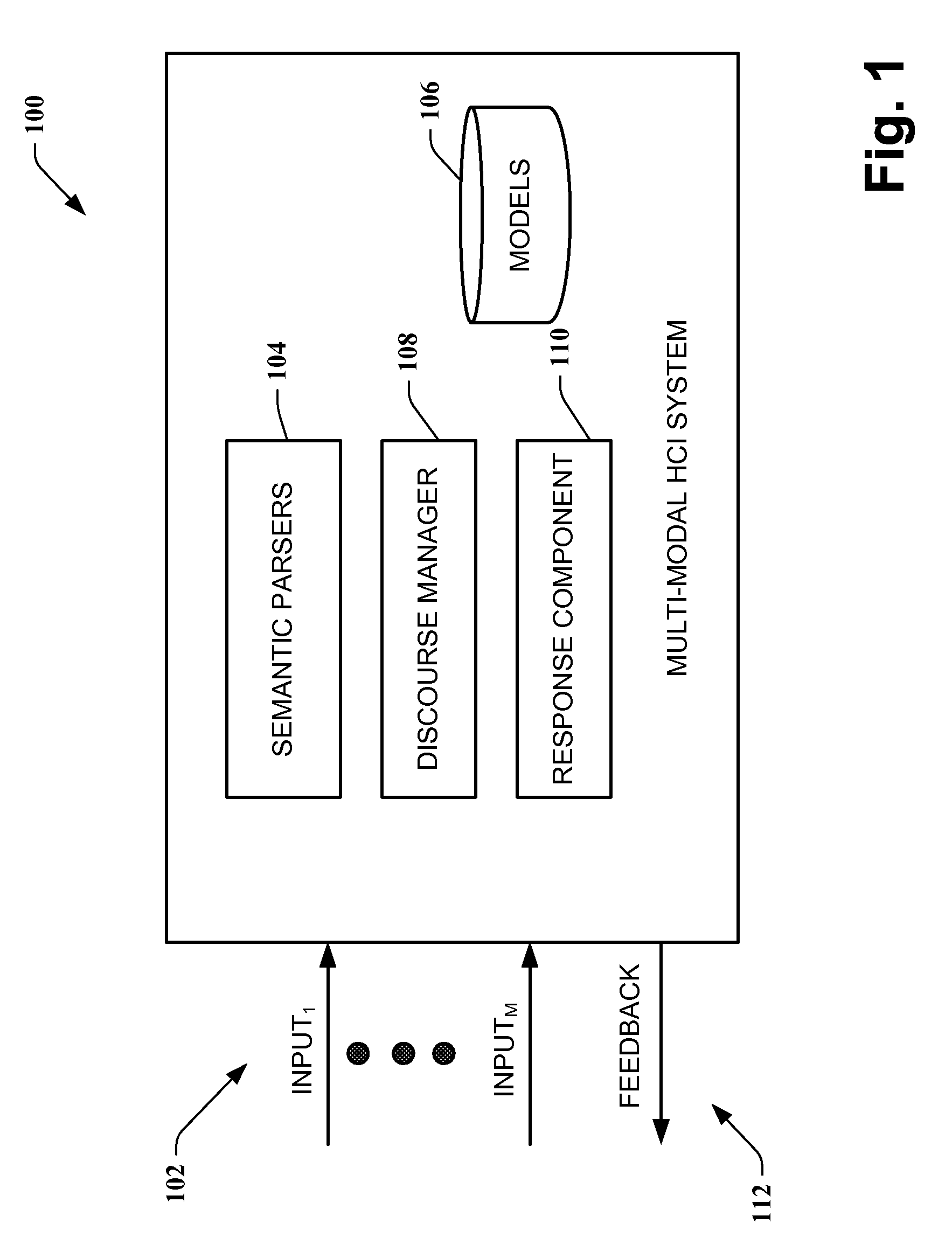

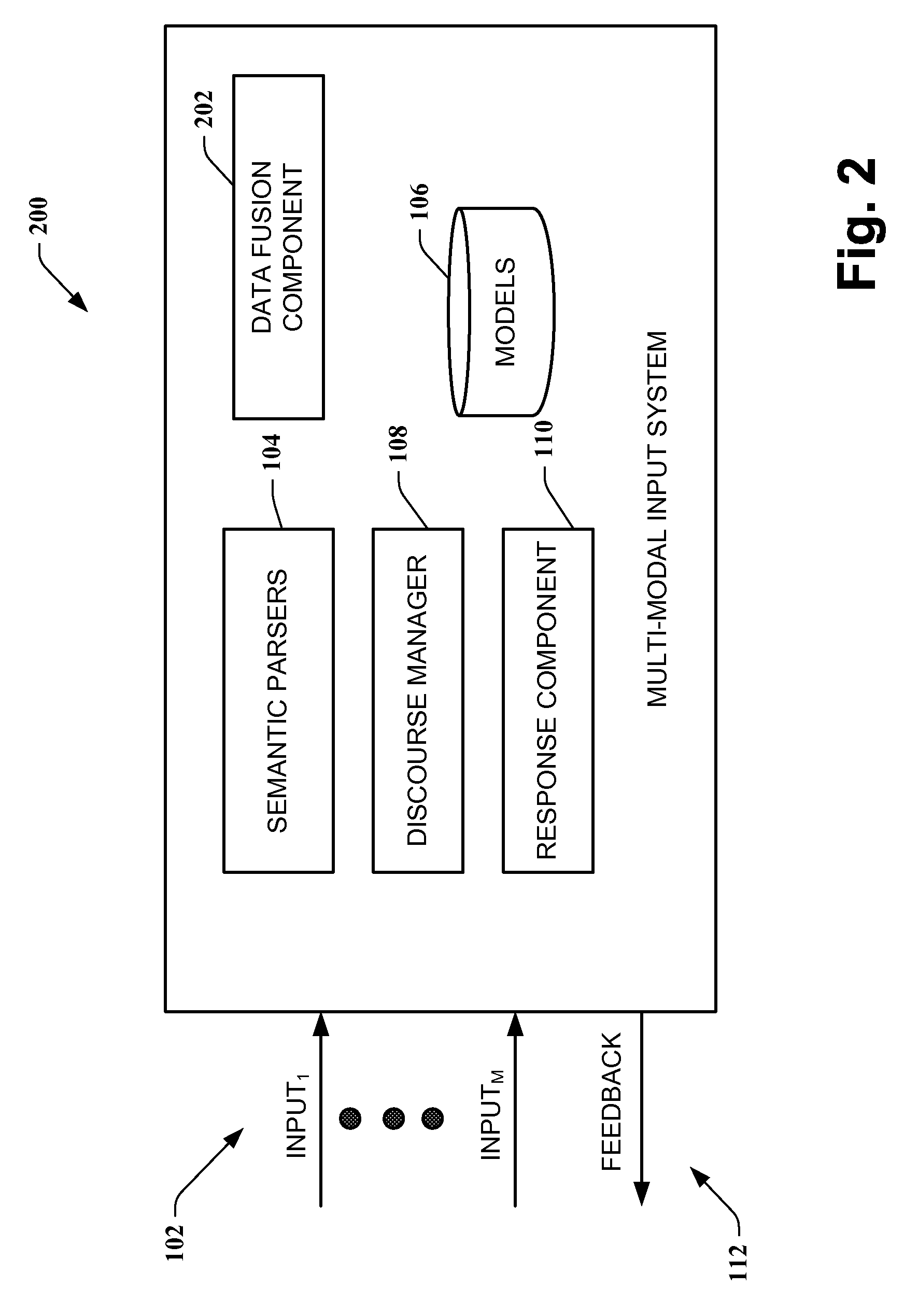

Speech-centric multimodal user interface design in mobile technology

Active | US8219406B2 | Easy to transport | Increase probability | Speech recognition | Input/output processes for data processing | Interface design | Mobile technology

A multi-modal human computer interface (HCI) receives a plurality of available information inputs concurrently, or serially, and employs a subset of the inputs to determine or infer user intent with respect to a communication or information goal. Received inputs are respectively parsed, and the parsed inputs are analyzed and optionally synthesized with respect to one or more of each other. In the event sufficient information is not available to determine user intent or goal, feedback can be provided to the user in order to facilitate clarifying, confirming, or augmenting the information inputs.

Owner:MICROSOFT TECH LICENSING LLC
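
The abstract of US8219406B2 above describes parsing each input, combining the parsed results to infer intent, and asking the user for more when the information is insufficient. That loop can be sketched roughly as follows. This is an illustrative toy, not the patented design: the `Intent` fields, the two parsers, and the feedback prompts are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Intent:
    """Partial or complete user intent (hypothetical structure)."""
    action: Optional[str] = None
    target: Optional[str] = None


def parse_speech(utterance: str) -> Intent:
    """Toy parser: the first word is the action; a deictic last word leaves the target open."""
    words = utterance.lower().split()
    action = words[0] if words else None
    target = None
    if len(words) > 1 and words[-1] not in {"this", "that", "it"}:
        target = words[-1]
    return Intent(action, target)


def parse_touch(selected_item: Optional[str]) -> Intent:
    """A touch selection contributes only a target."""
    return Intent(target=selected_item)


def fuse(*partials: Intent) -> Intent:
    """Combine parsed inputs; the first input that supplies a field wins."""
    fused = Intent()
    for partial in partials:
        fused.action = fused.action or partial.action
        fused.target = fused.target or partial.target
    return fused


def respond(intent: Intent) -> str:
    """Act when intent is complete, otherwise ask the user to clarify."""
    if intent.action is None:
        return "What would you like to do?"
    if intent.target is None:
        return f"What should I {intent.action}?"
    return f"OK: {intent.action} {intent.target}"


print(respond(fuse(parse_speech("delete this"), parse_touch("photo.jpg"))))
print(respond(fuse(parse_speech("delete this"), parse_touch(None))))
```

The second call shows the feedback path: speech alone says "delete this", no touch selection resolves "this", so the interface asks rather than guesses.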

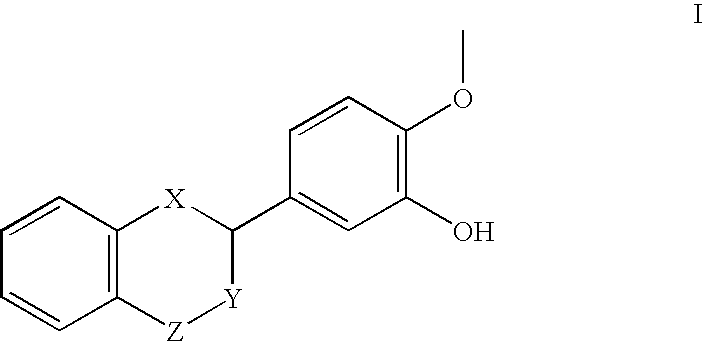

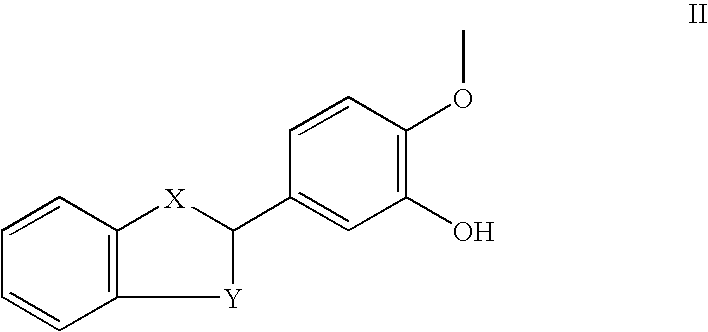

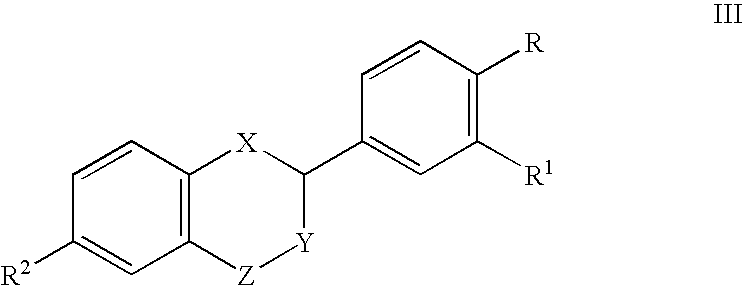

Multi-modality marking material and method

Inactive | US20050033157A1 | Enhance multi-modality imaging characteristic | Surgery | Diagnostic markers | Diagnostic Radiology Modality | Imaging modalities

The present invention provides markers and methods of using markers to identify or treat anatomical sites in a variety of medical processes, procedures and treatments. The markers of embodiments of the present invention are permanently implantable, and are detectable in and compatible with images formed by at least two imaging modalities, wherein one of the imaging modalities is a magnetic field imaging modality. Images of anatomical sites marked according to embodiments of the present invention may be formed using various imaging modalities to provide information about the anatomical sites.

Owner:CARBON MEDICAL TECH

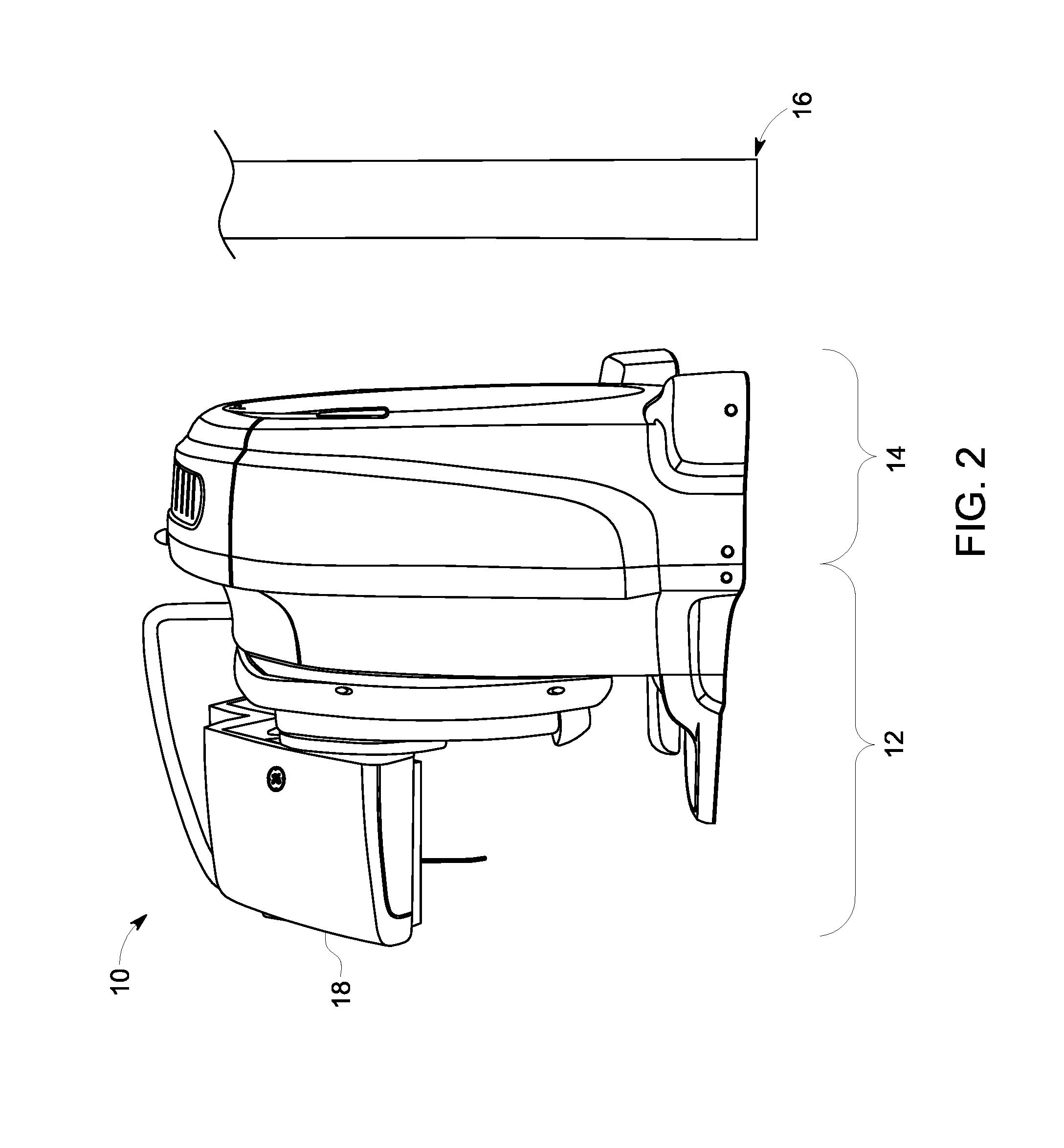

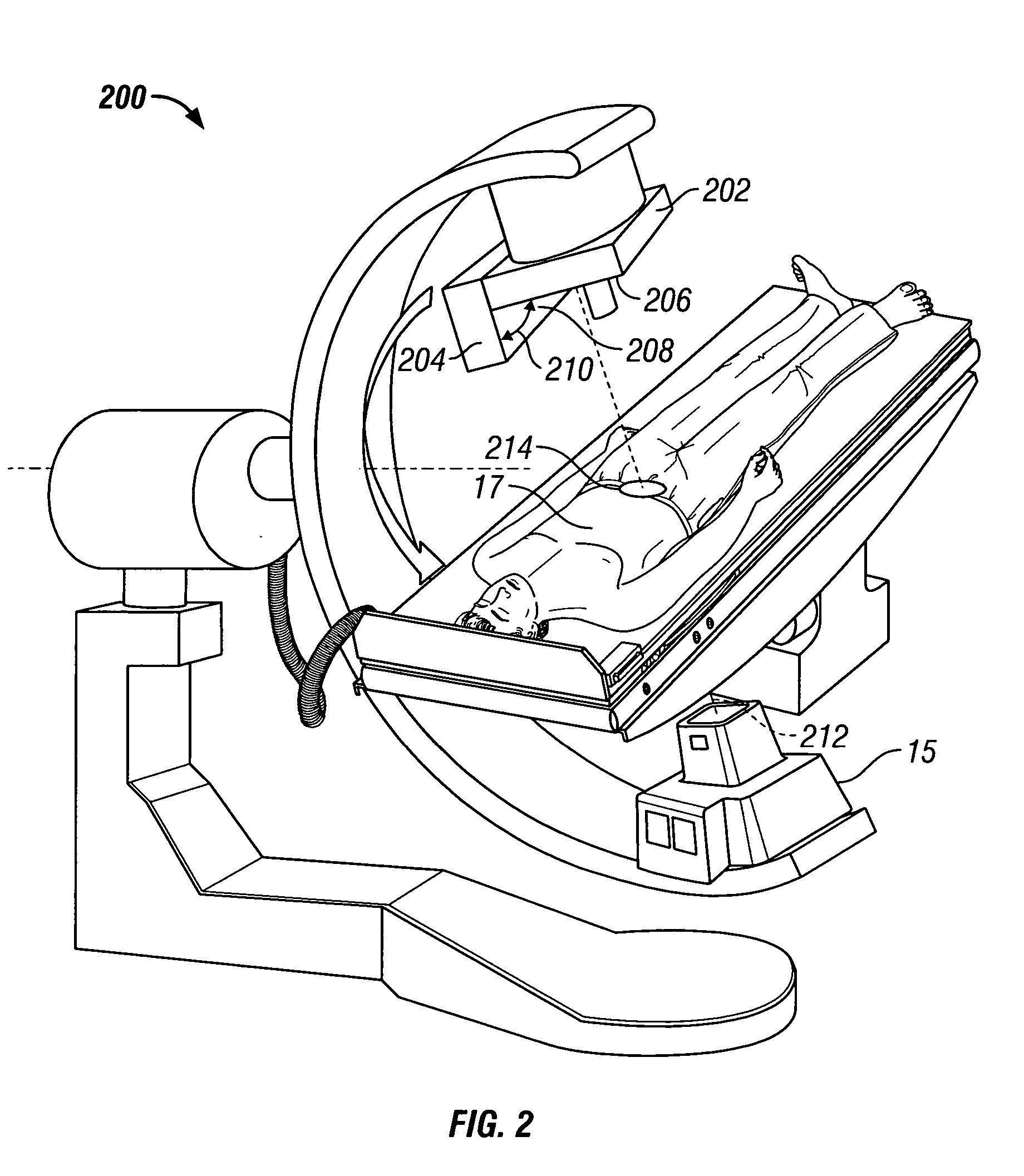

CT system for use in multi-modality imaging system

Inactive | US20120256092A1 | Reduced footprint | Material analysis using wave/particle radiation | Radiation/particle handling | Imaging quality | X-ray

A computed tomography (CT) imaging system is disclosed. The CT imaging system may be used in a multi-modality imaging context or other context. In one embodiment, the CT imaging system provides both fast rotation of the rotating X-ray source and detection components and a low dose of X-rays generated by the source. This offers several clinical and economic benefits, such as low dose with sufficient image quality and little or no investment in the room shielding associated with diagnostic CT dose.

Owner:GENERAL ELECTRIC CO

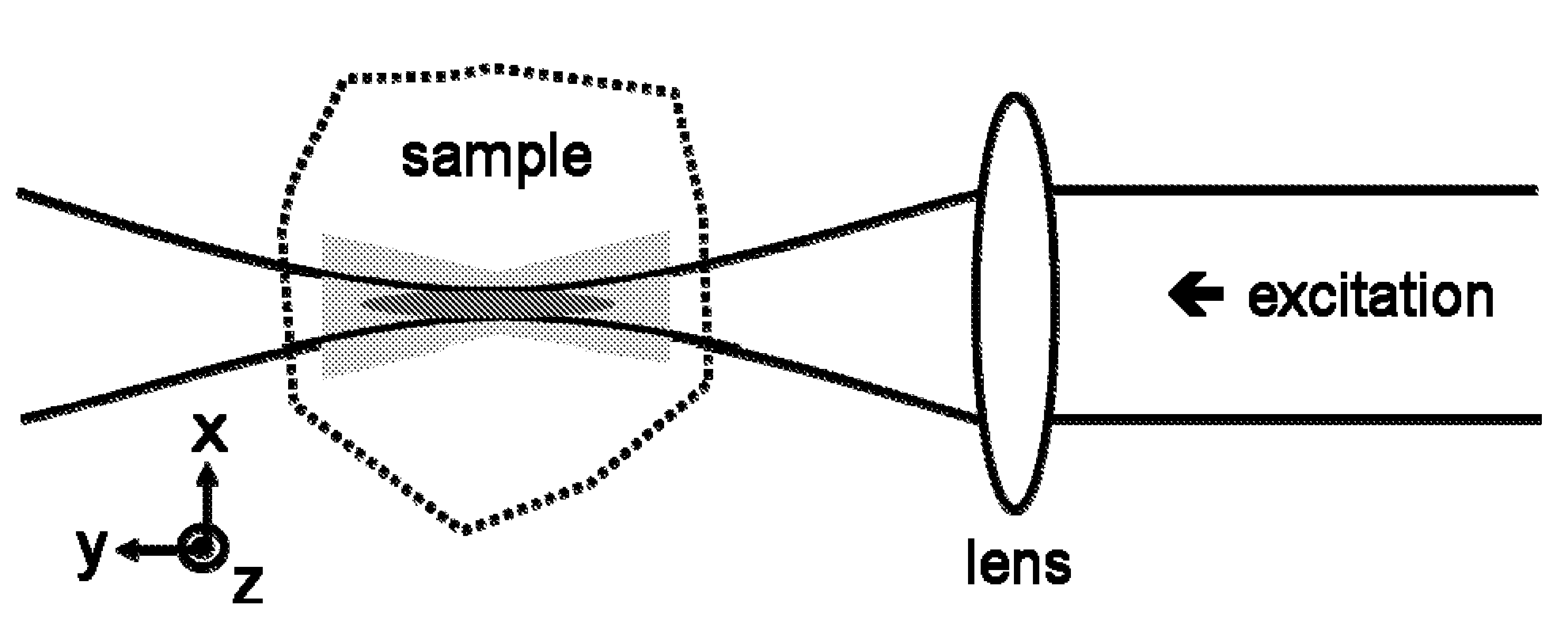

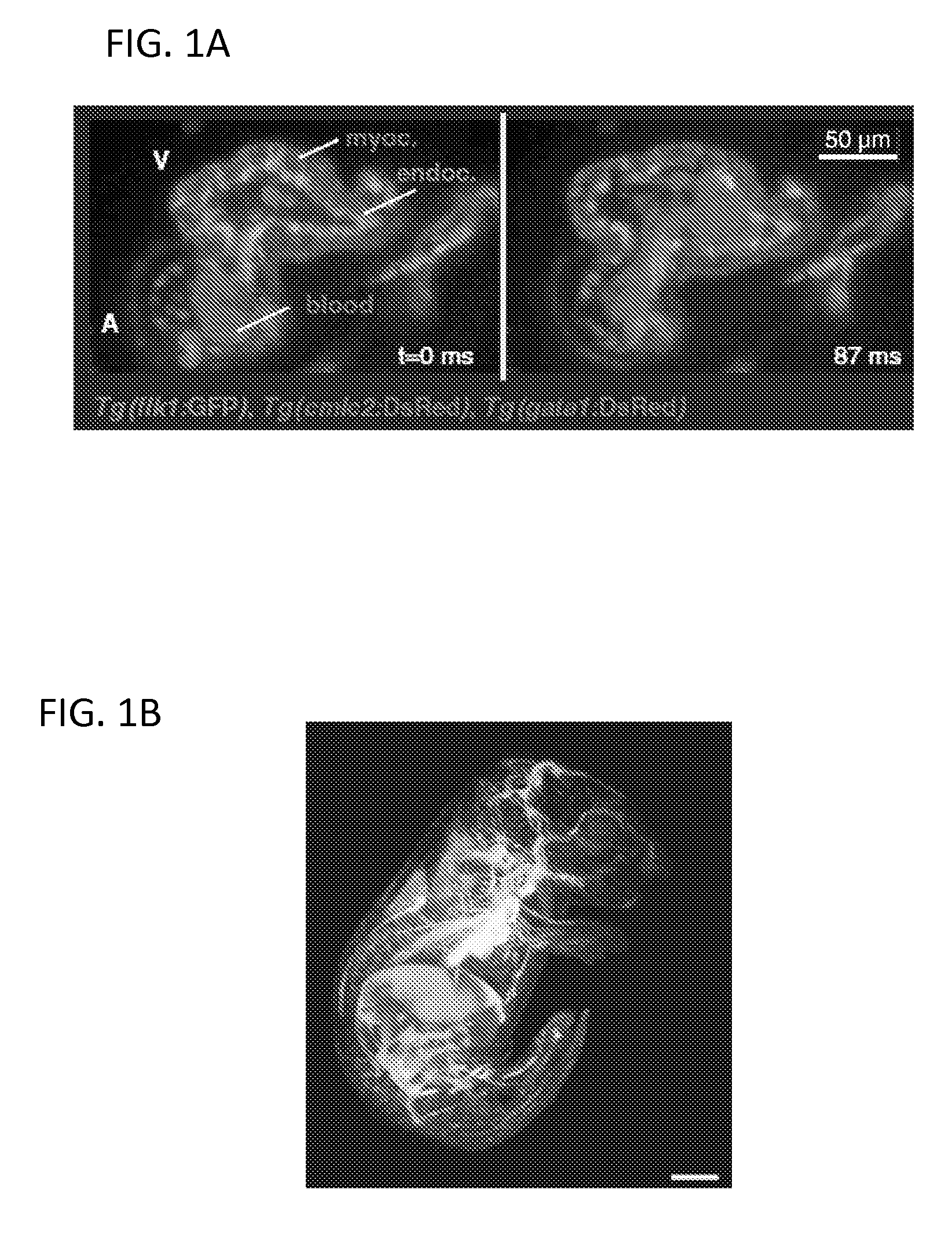

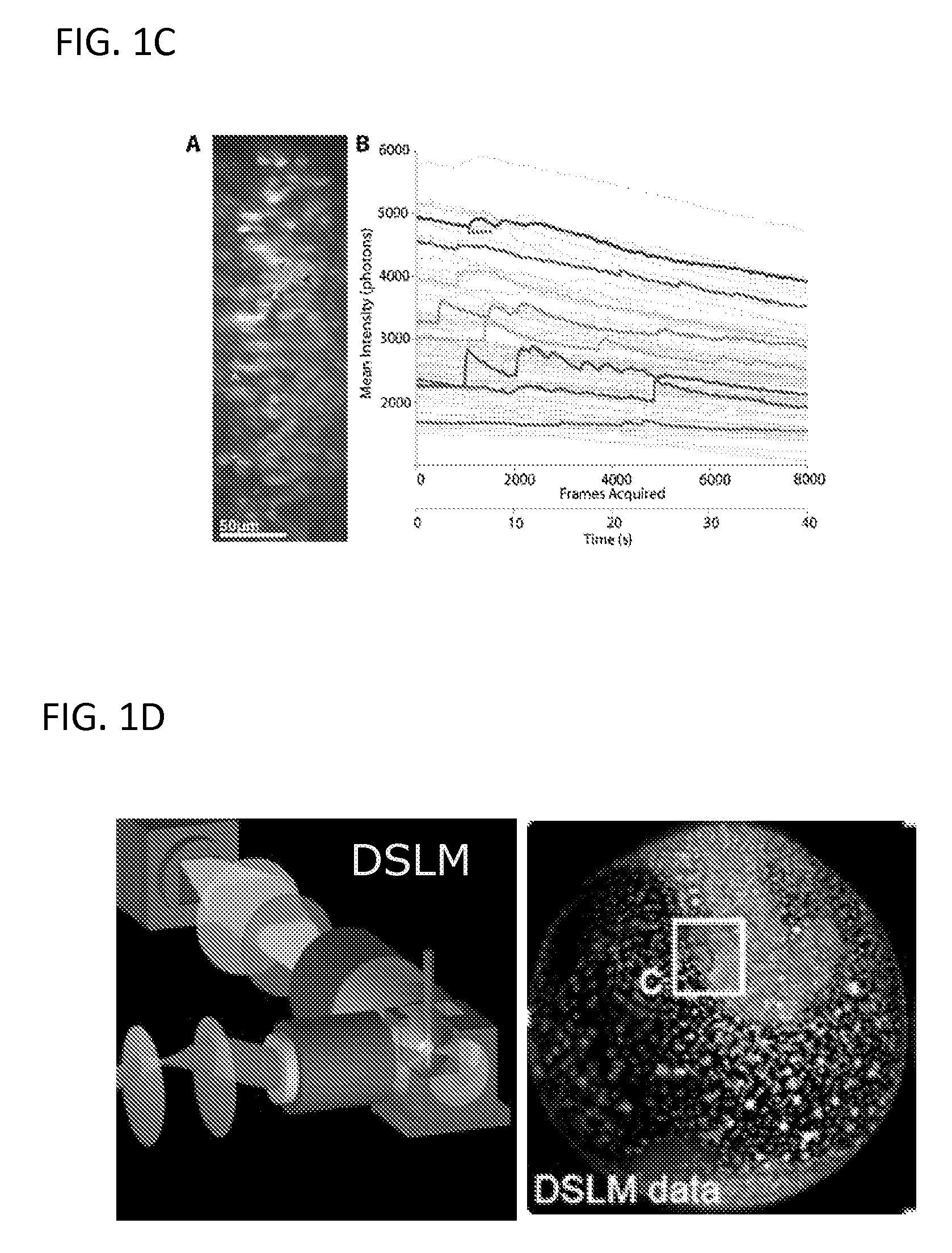

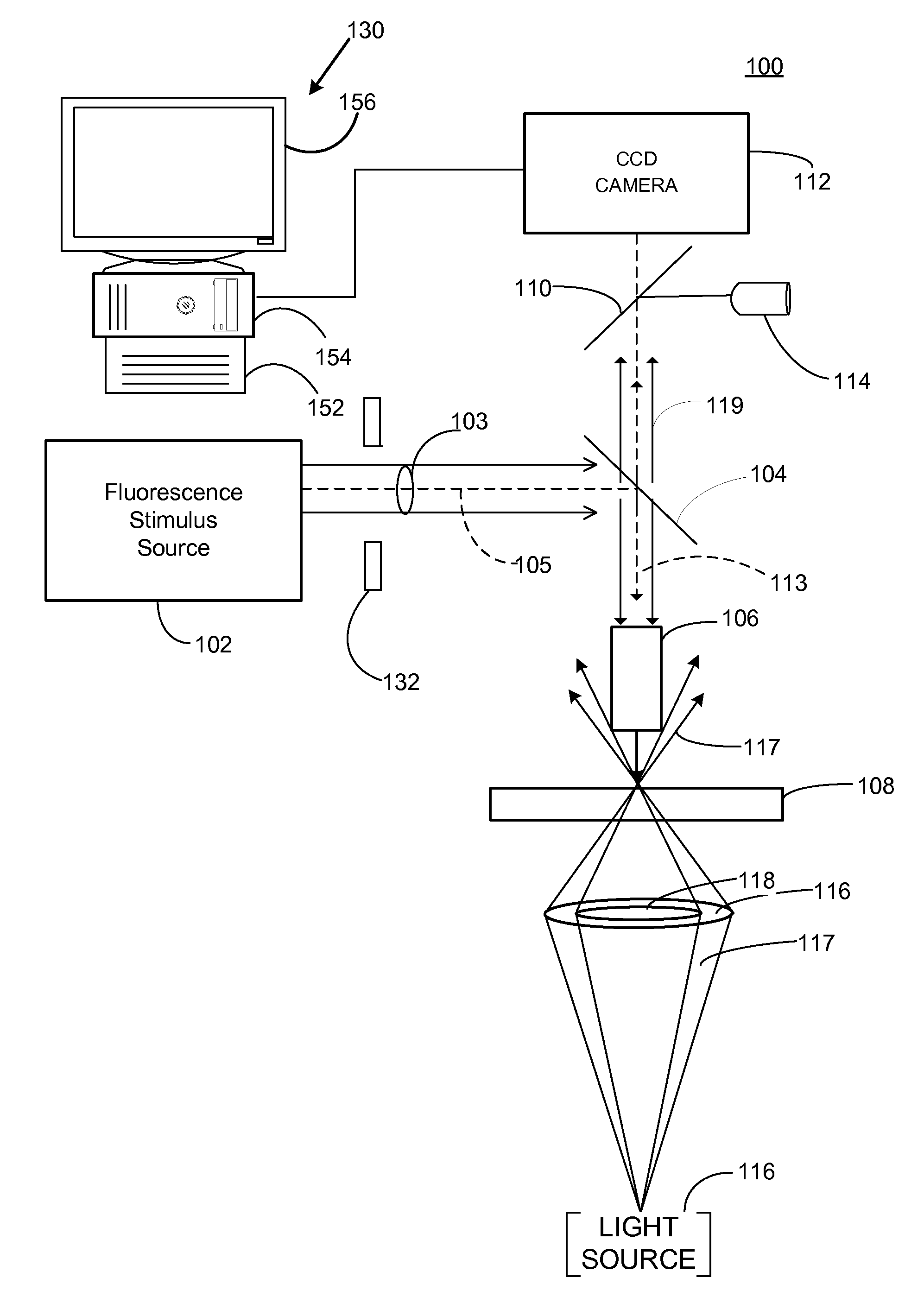

Multiple-photon excitation light sheet illumination microscope

Active | US20110122488A1 | Radiation pyrometry | Spectrum investigation | Diagnostic Radiology Modality | Fluorescence

An apparatus for and method of performing multi-photon light sheet microscopy (MP-LISH), combining multi-photon excited fluorescence with the orthogonal illumination of light sheet microscopy, are provided. With live imaging of whole Drosophila and zebrafish embryos, the high performance of MP-LISH compared to current state-of-the-art imaging techniques is demonstrated in maintaining good signal and high spatial resolution deep inside biological tissues (two times deeper than one-photon light sheet microscopy), in acquisition speed (more than one order of magnitude faster than conventional two-photon laser scanning microscopy), and in low phototoxicity. The inherent multi-modality of this new imaging technique is also demonstrated using second harmonic generation light sheet microscopy to detect collagen in mouse tail tissue. Together, these properties create the potential for a wide range of applications for MP-LISH in 4D imaging of live biological systems.

Owner:CALIFORNIA INST OF TECH

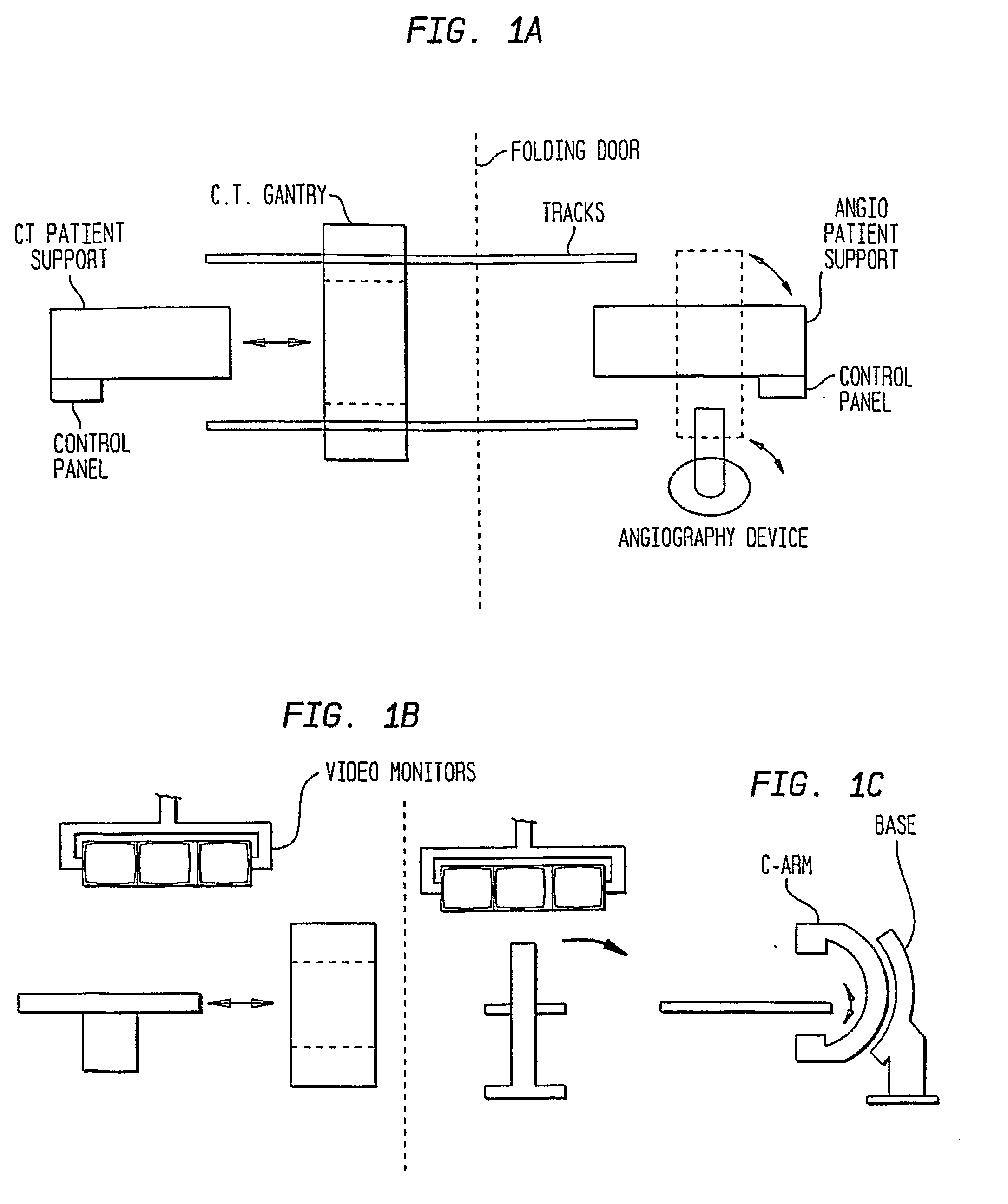

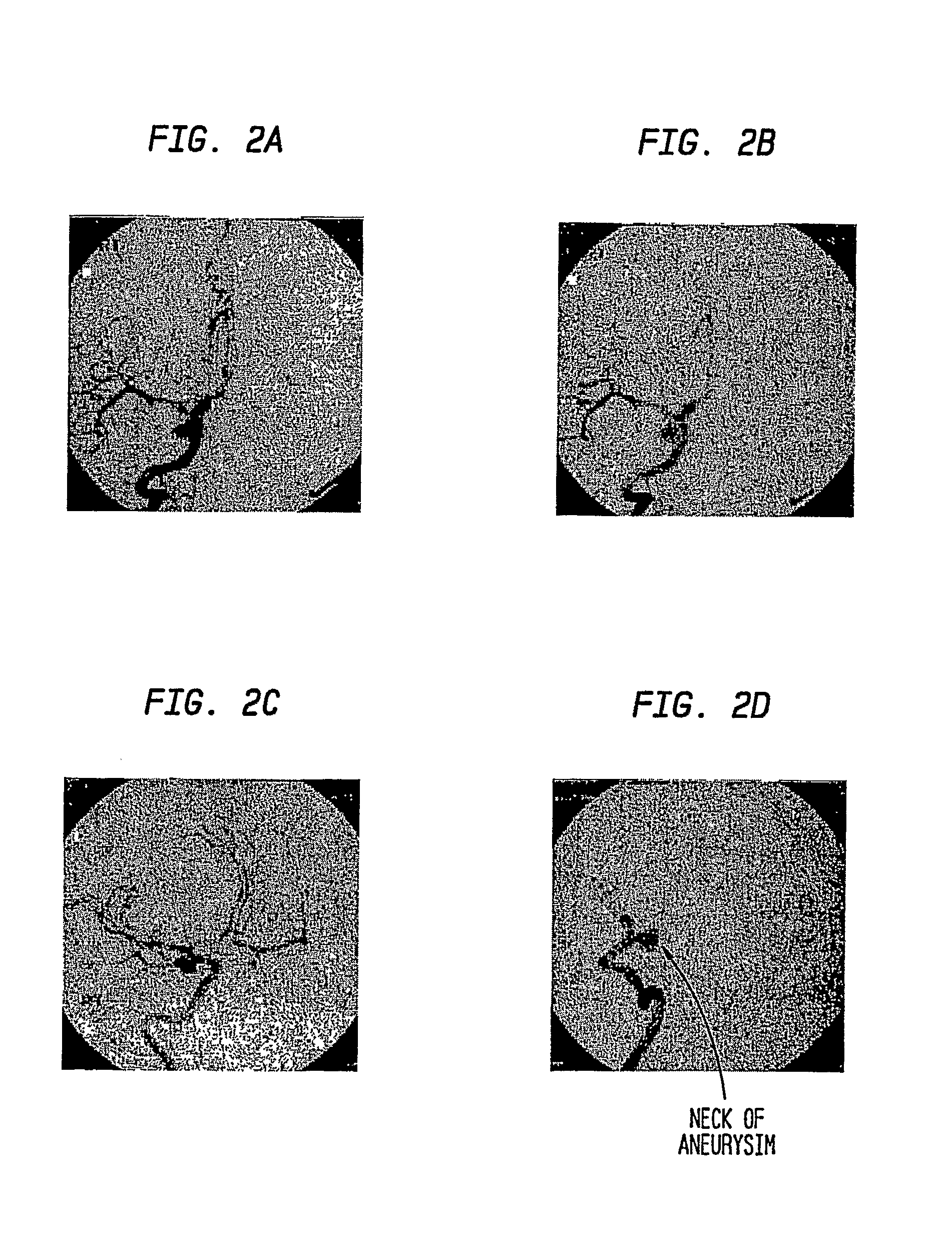

Combination of Multi-Modality Imaging Technologies

Inactive | US20080281181A1 | Ultrasonic/sonic/infrasonic diagnostics | Character and pattern recognition | Nuclear medicine | Patient care

Different applications for multiple imaging technologies are provided to advance patient care. In one aspect, a 3-D imaging device is used to identify the location of a target region in a patient, such as a tumor or aneurysm. The location information is then used to control a device used in performing a surgical or other intervention, further imaging or a diagnostic or therapeutic procedure (920). The device can operate fully automatically, as a robot, or can assist a manual procedure performed by a physician. In another aspect, the invention provides a technique for obtaining an improved image of the vasculature in a patient (1020). In another aspect, images from multiple imaging technologies are combined or fused (1120) to achieve synergistic benefits.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

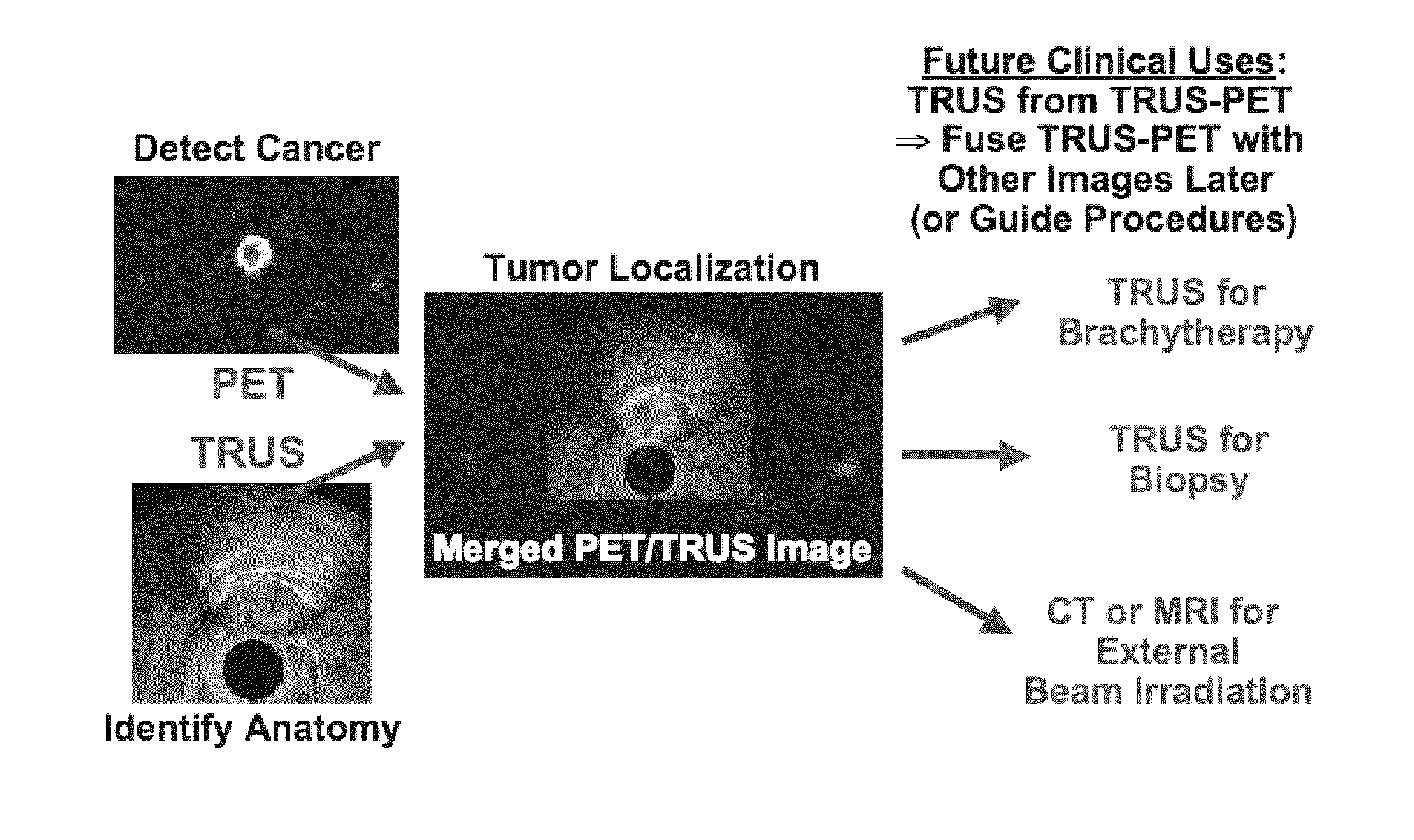

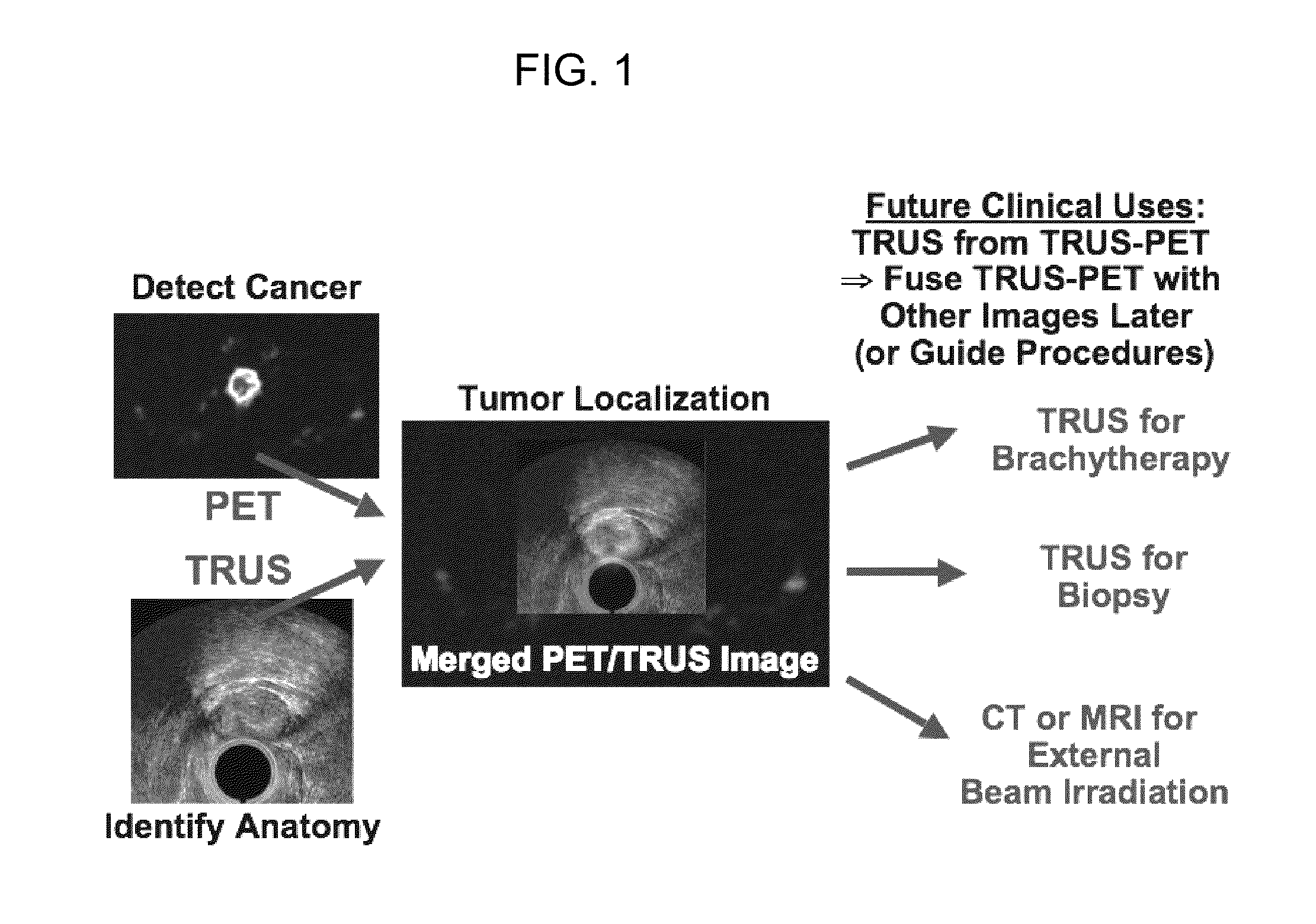

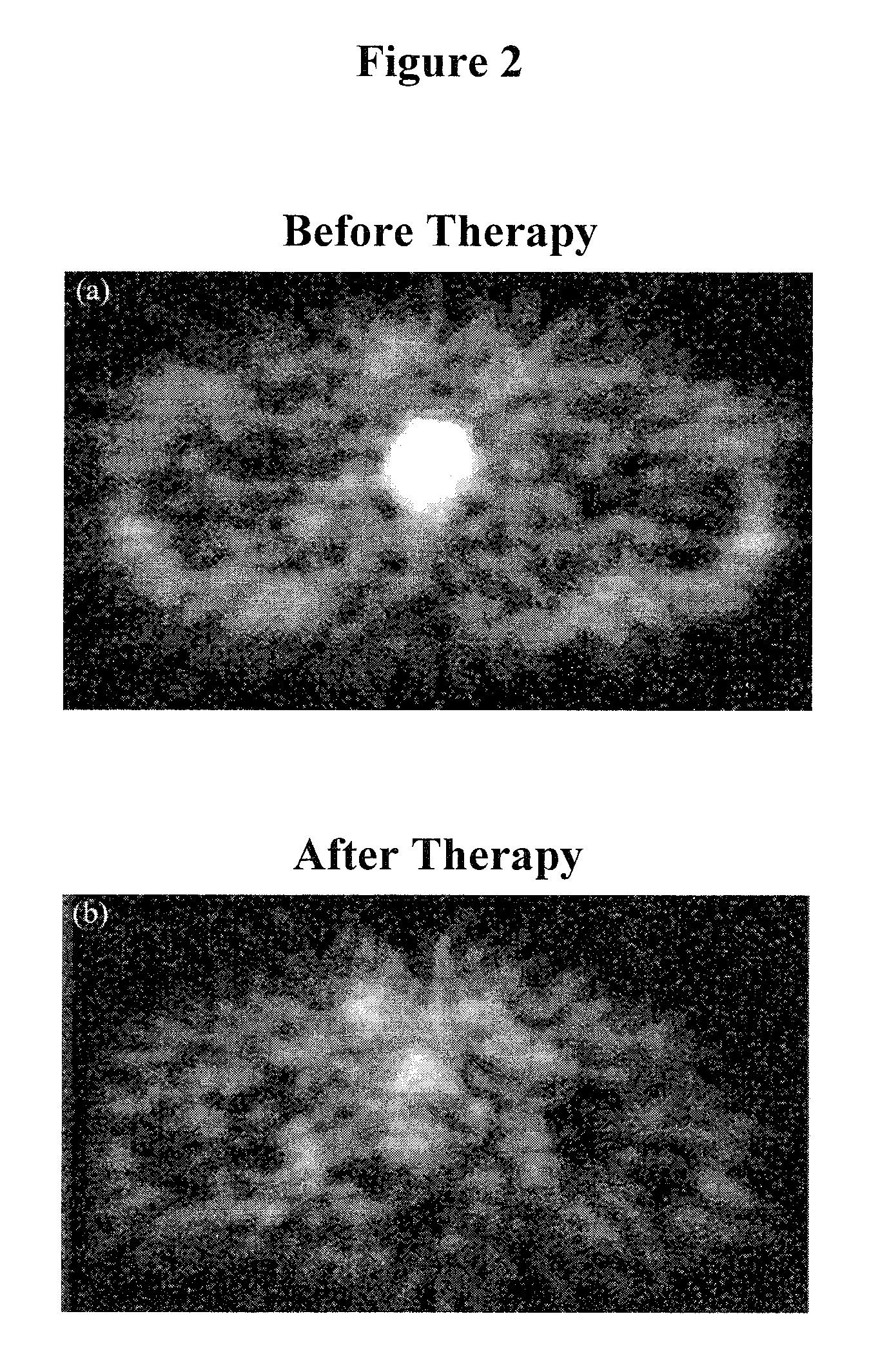

Multi-Modality Phantoms and Methods for Co-registration of Dual PET-Transrectal Ultrasound Prostate Imaging

Inactive | US20100198063A1 | Precise registration | Precise positioning | Ultrasonic/sonic/infrasonic diagnostics | Material analysis by optical means | Ultrasound imaging | Sonification

Herein are described methods and tools for acquiring accurately co-registered PET and TRUS images, as well as the construction and use of PET-TRUS prostate phantoms. Ultrasound imaging with a transrectal probe provides anatomical detail in the prostate region that can be accurately co-registered with the sensitive functional information from the PET imaging. Imaging the prostate with both PET and transrectal ultrasound (TRUS) will help determine the location of any cancer within the prostate region. This dual-modality imaging should help provide better detection and treatment of prostate cancer. Multi-modality phantoms are also described.

Owner:RGT UNIV OF CALIFORNIA

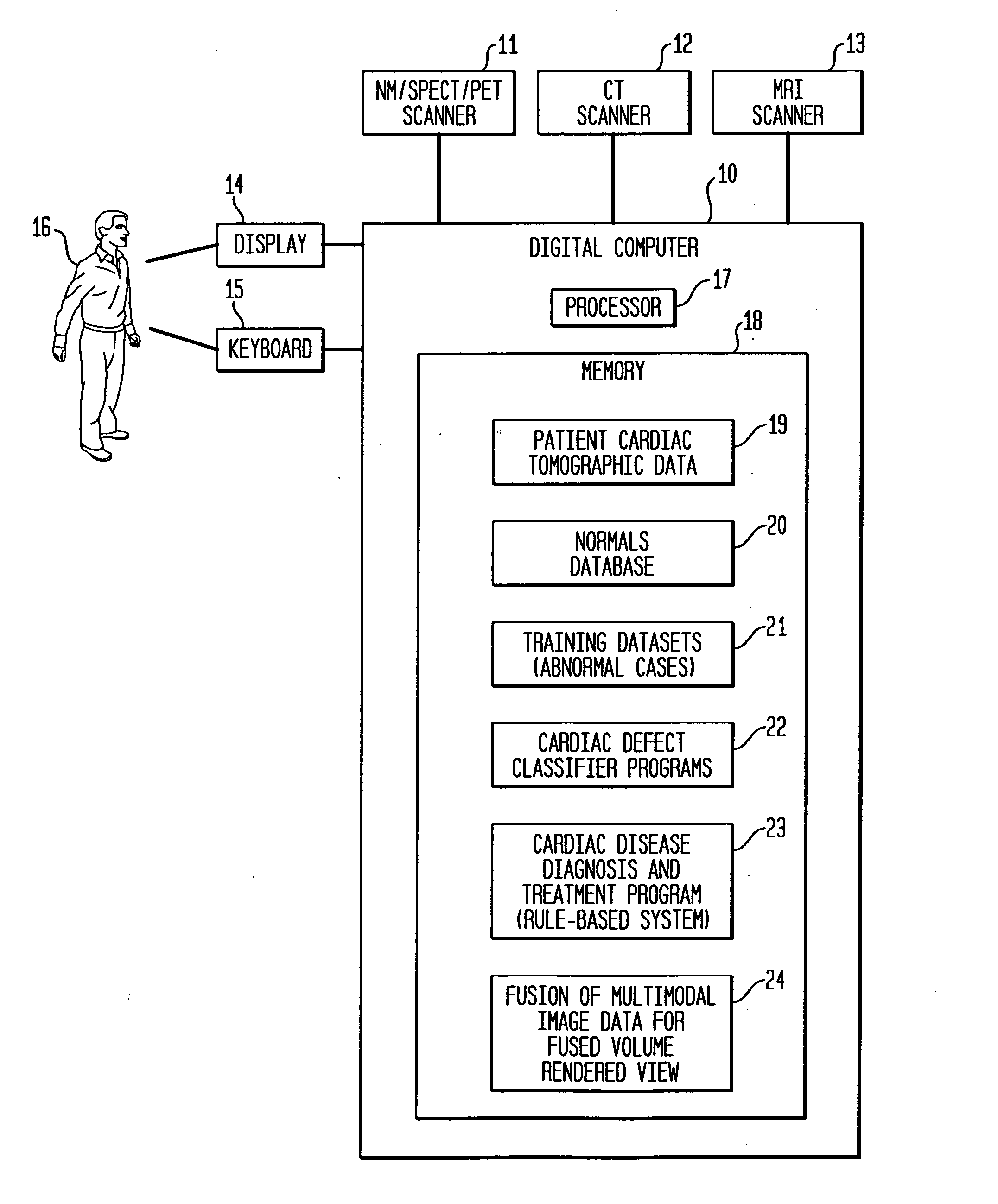

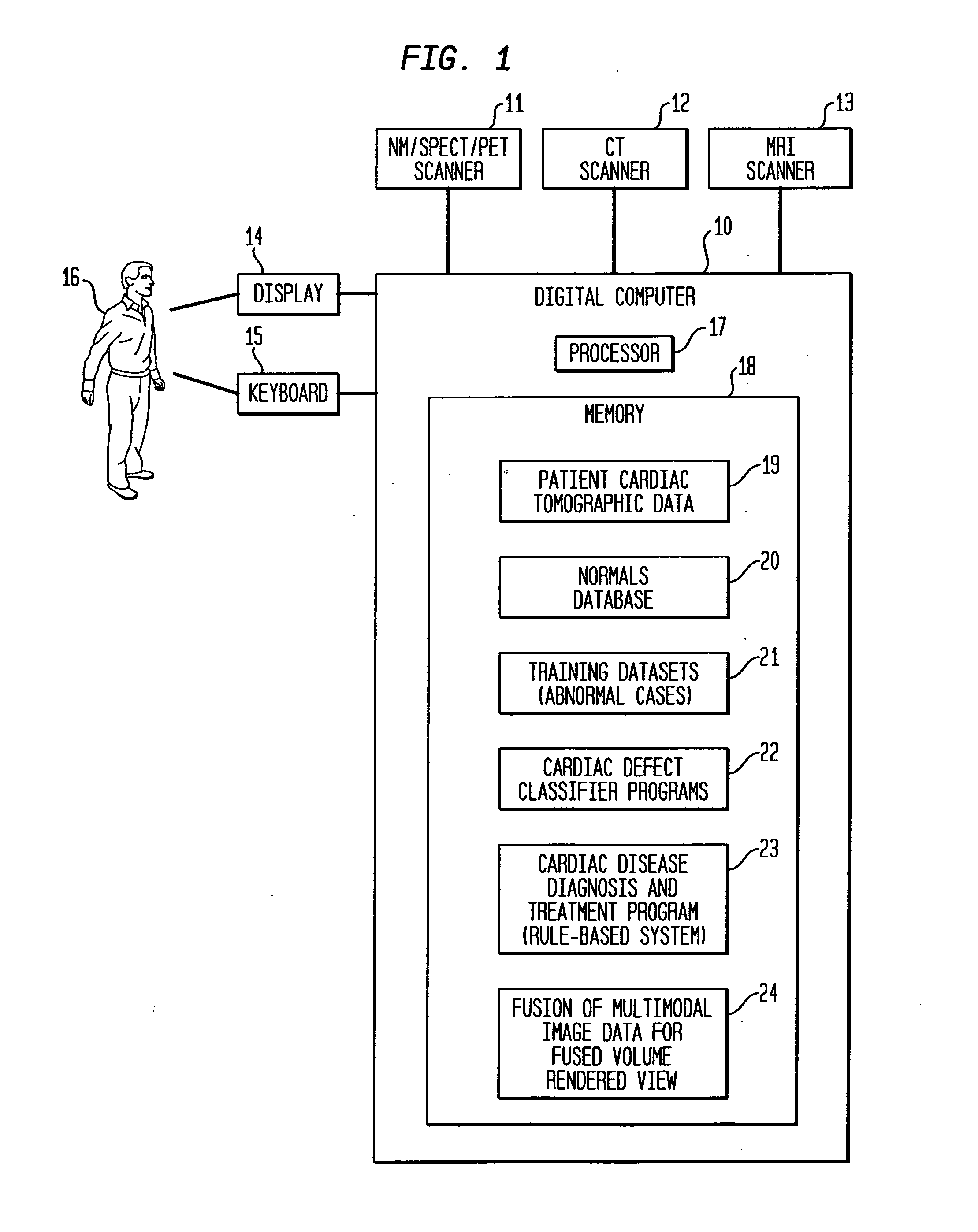

Dedicated display for processing and analyzing multi-modality cardiac data

For diagnosis and treatment of cardiac disease, images of the heart muscle and coronary vessels are captured using different medical imaging modalities; e.g., single photon emission computed tomography (SPECT), positron emission tomography (PET), electron-beam X-ray computed tomography (CT), magnetic resonance imaging (MRI), or ultrasound (US). For visualizing the multi-modal image data, the data is presented using a technique of volume rendering, which allows users to visually analyze both functional and anatomical cardiac data simultaneously. The display is also capable of showing additional information related to the heart muscle, such as coronary vessels. Users can interactively control the viewing angle based on the spatial distribution of the quantified cardiac phenomena or atherosclerotic lesions.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

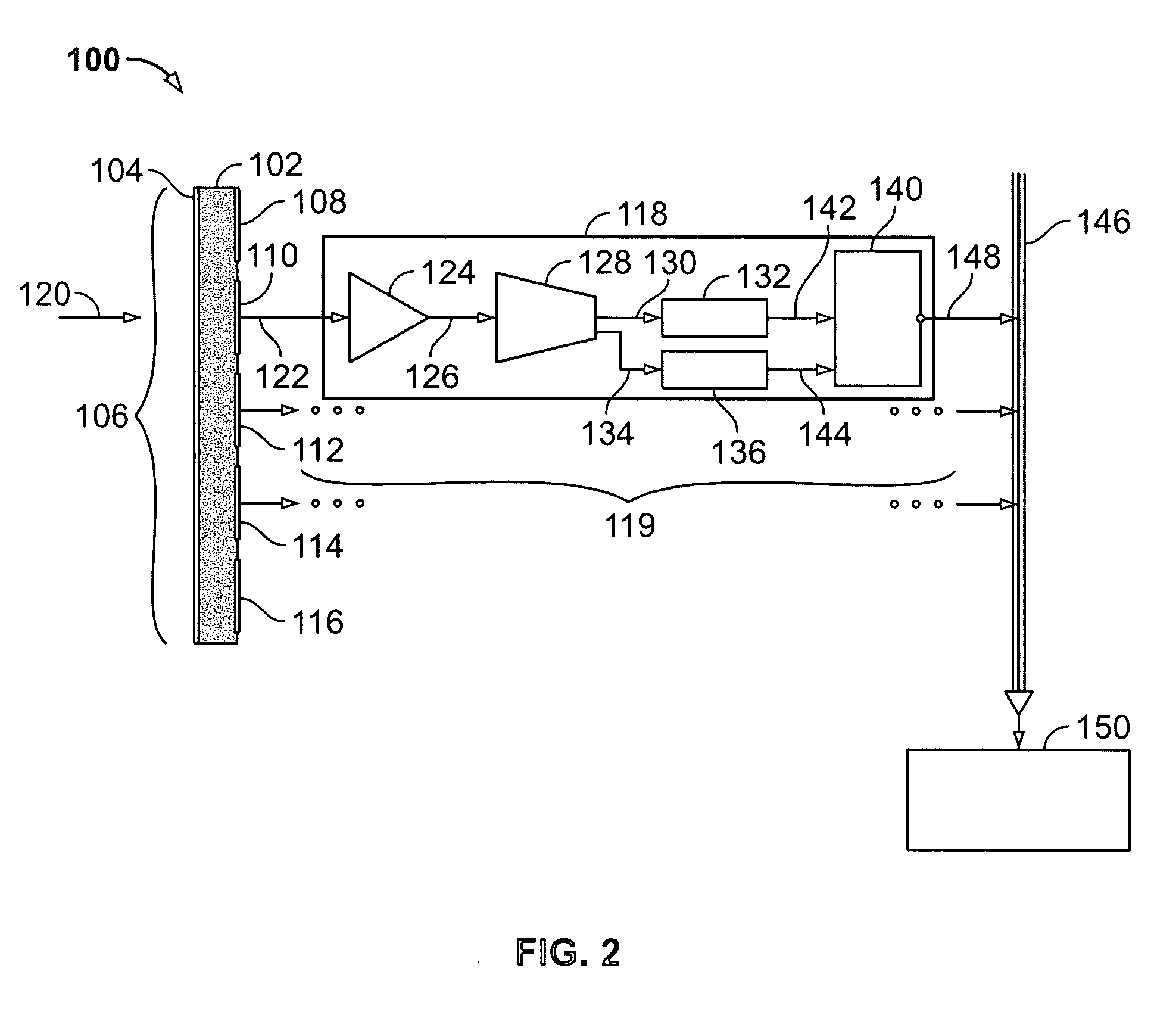

Method and apparatus for acquiring radiation data

Method and apparatus for sensing and acquiring radiation data comprises sensing radiation events with a multi-modality detector. The detector comprises solid state crystals forming a matrix of pixels which may be arranged in rows and columns, and has a radiation detection field for sensing radiation events. The radiation events for each pixel are counted by an electronic module attached to each pixel. The electronic module comprises a threshold analyzer for analyzing the radiation events. The threshold analyzer identifies valid events by comparing an energy level associated with the radiation event to a predetermined threshold. The electronic module further comprises at least a first counter for counting the valid events.

Owner:GE MEDICAL SYST ISRAEL
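
The per-pixel counting described in the abstract above (a threshold analyzer that validates each event, followed by a counter) can be sketched in a few lines. This is a simplified software illustration of logic the abstract attributes to per-pixel electronics. The event format, the keV units, and the choice to treat energies at or above the threshold as valid are assumptions; the abstract only says the energy is compared to a predetermined threshold.

```python
def count_valid_events(events, rows, cols, threshold_kev):
    """Count valid radiation events per pixel.

    events: iterable of (row, col, energy_kev) tuples (hypothetical format).
    An event is treated as valid when its energy is at or above the
    threshold; the comparison direction is an assumption.
    Returns a rows x cols matrix (list of lists) of counts.
    """
    counts = [[0] * cols for _ in range(rows)]
    for row, col, energy_kev in events:
        if energy_kev >= threshold_kev:  # threshold analyzer
            counts[row][col] += 1        # first counter for valid events
    return counts


# Illustrative events on a 2 x 2 pixel matrix with a 100 keV threshold.
events = [(0, 0, 140.0), (0, 0, 30.0), (1, 1, 122.0), (0, 1, 80.0)]
print(count_valid_events(events, rows=2, cols=2, threshold_kev=100.0))
```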

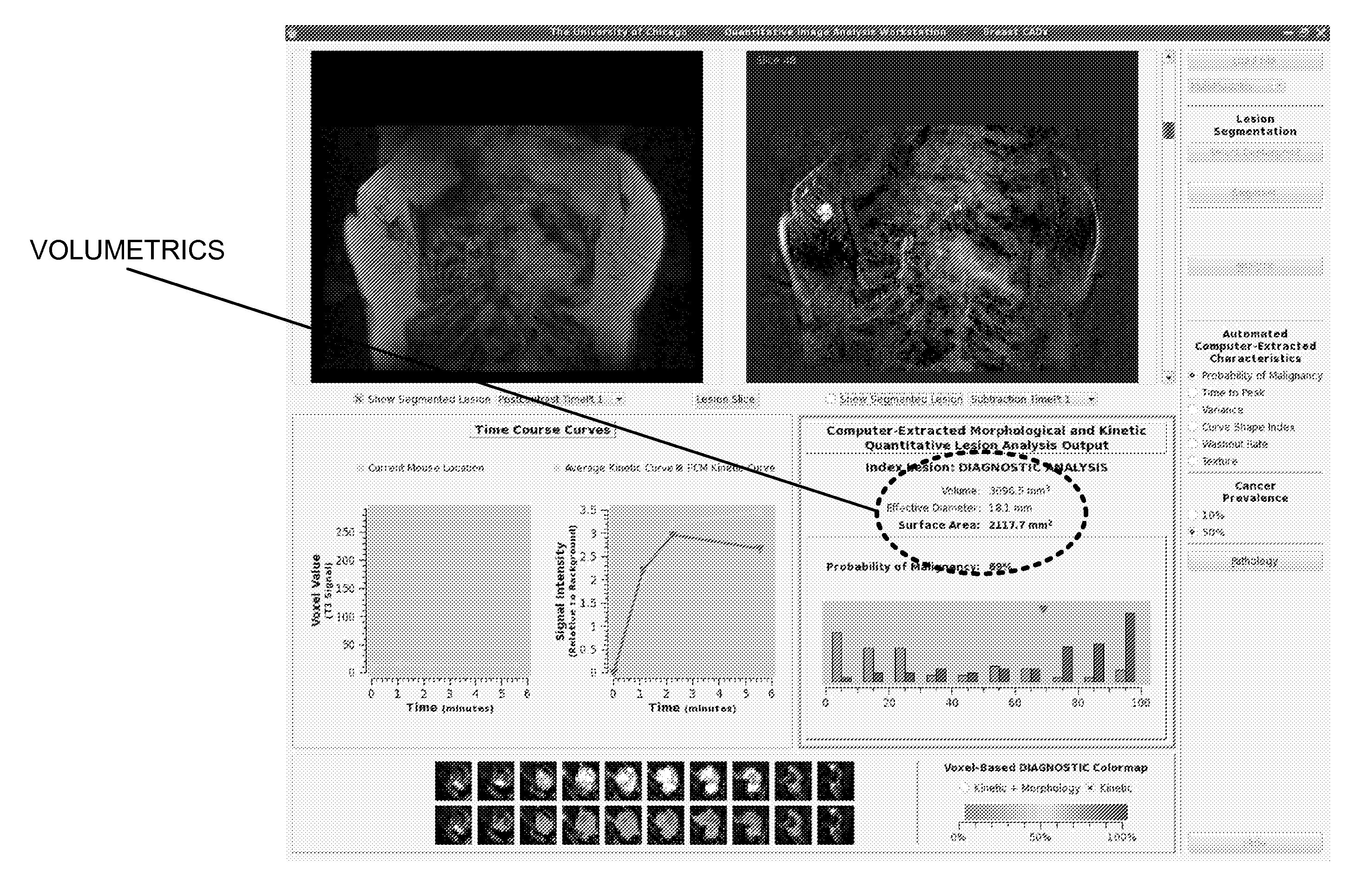

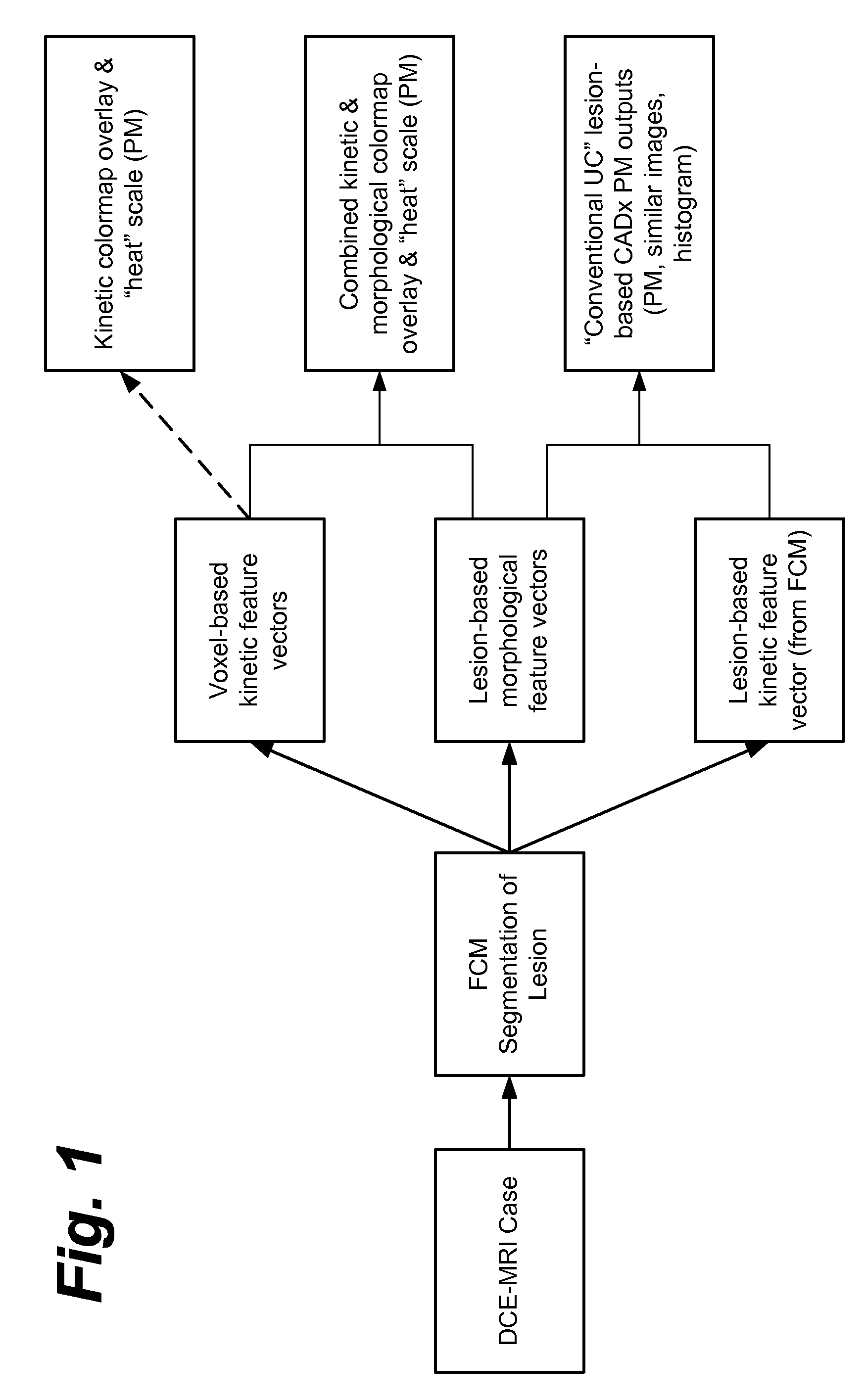

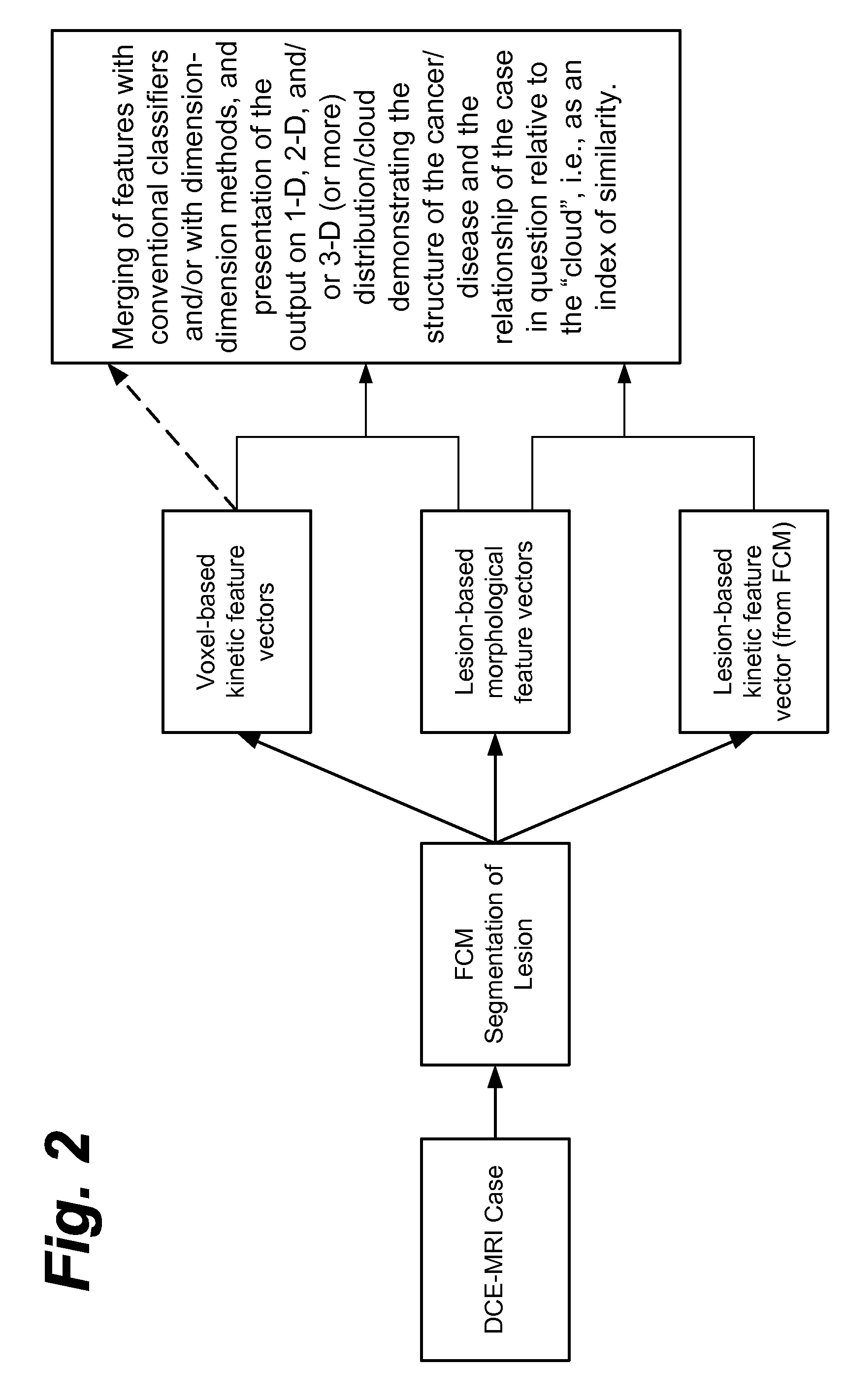

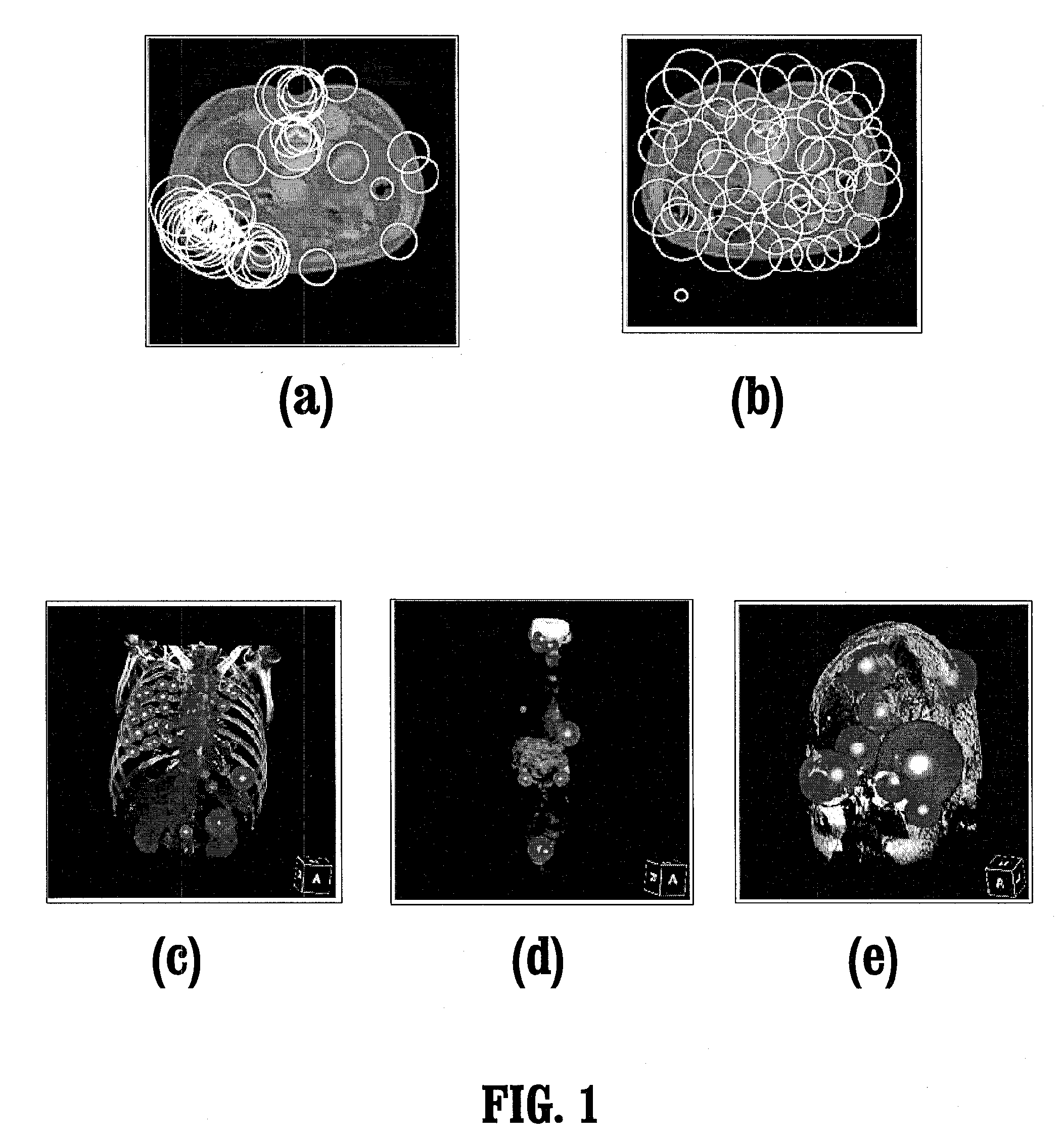

Method, system, software and medium for advanced intelligent image analysis and display of medical images and information

Active | US20120189176A1 | Obtain data | Reduced dimension | Image enhancement | Reconstruction from projection | Voxel | Lesion

Computerized interpretation of medical images for quantitative analysis of multi-modality breast images, including analysis of FFDM, 2D/3D ultrasound, MRI, or other breast imaging methods. Real-time characterization of tumors and background tissue, and calculation of image-based biomarkers, are provided for breast cancer detection, diagnosis, prognosis, risk assessment, and therapy response. Analysis includes lesion segmentation and extraction of relevant characteristics (textural/morphological/kinetic features) from lesion-based or voxel-based analyses. Combinations of characteristics in several classification tasks using artificial intelligence are provided. Output is given in terms of 1D, 2D or 3D distributions in which an unknown case is identified relative to calculations on known or unlabeled cases, which can go through a dimension-reduction technique. Output to 3D shows relationships of the unknown case to a cloud of known or unlabeled cases, in which the cloud demonstrates the structure of the population of patients with and without the disease.

Owner:QLARITY IMAGING LLC

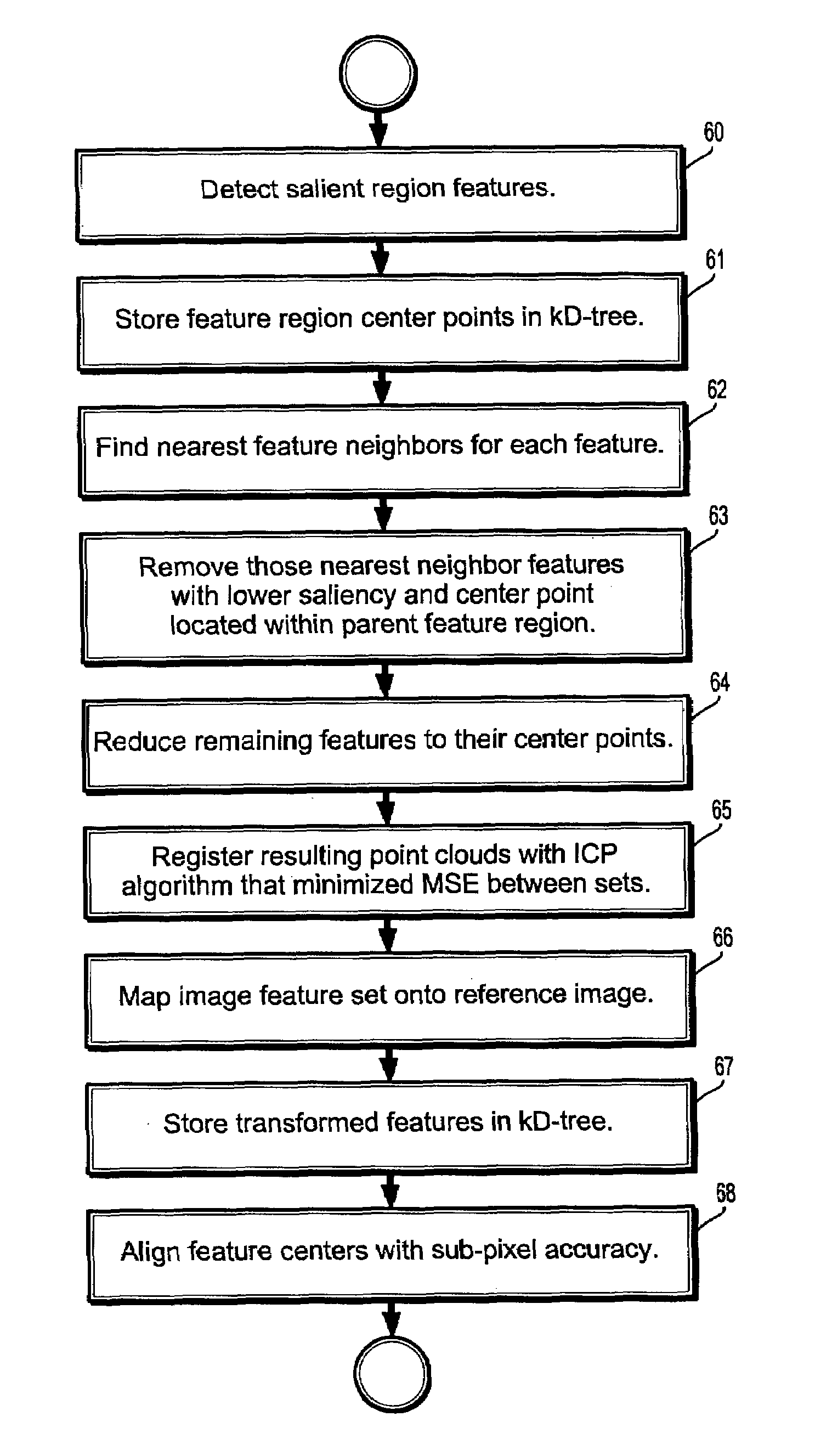

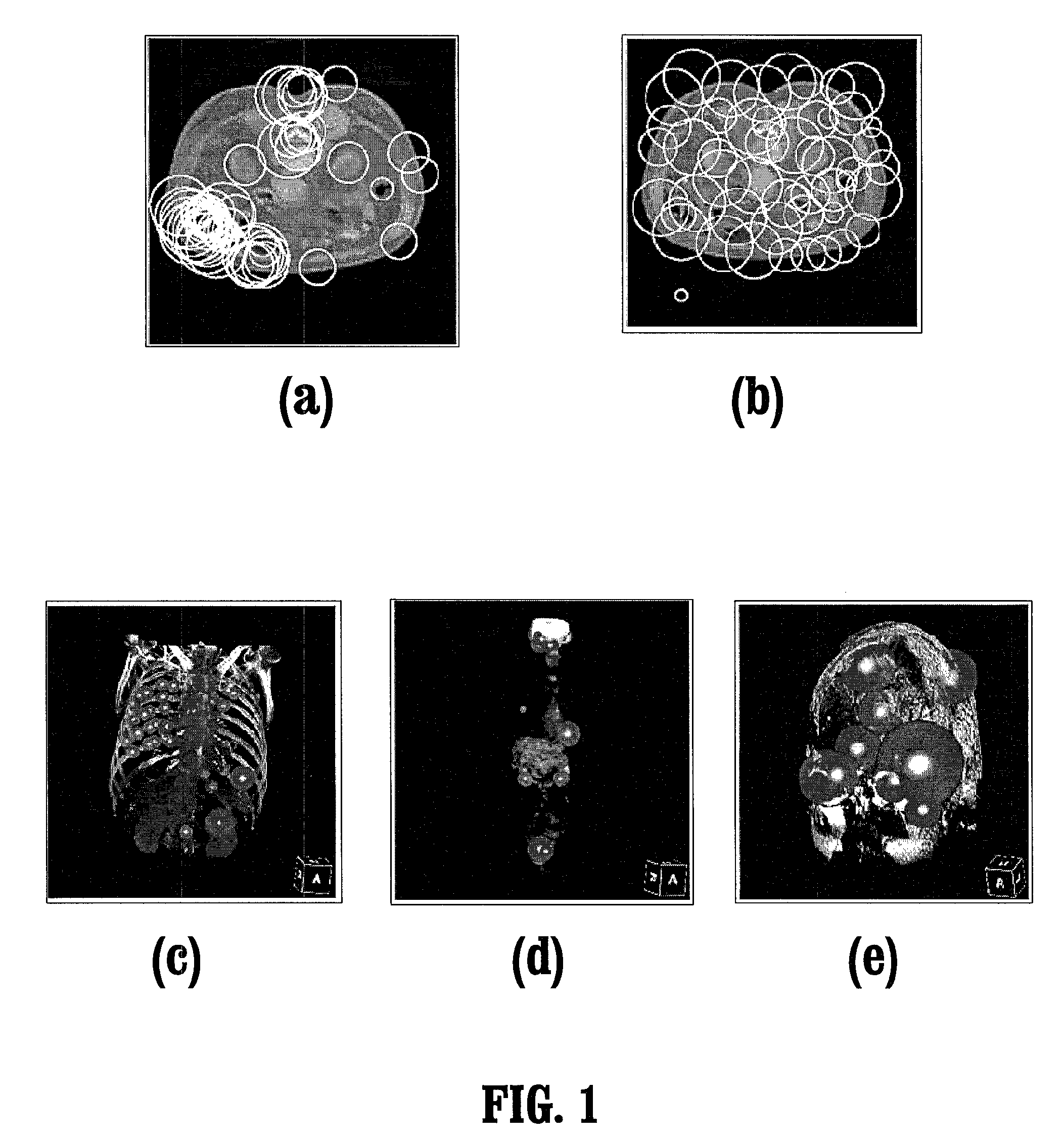

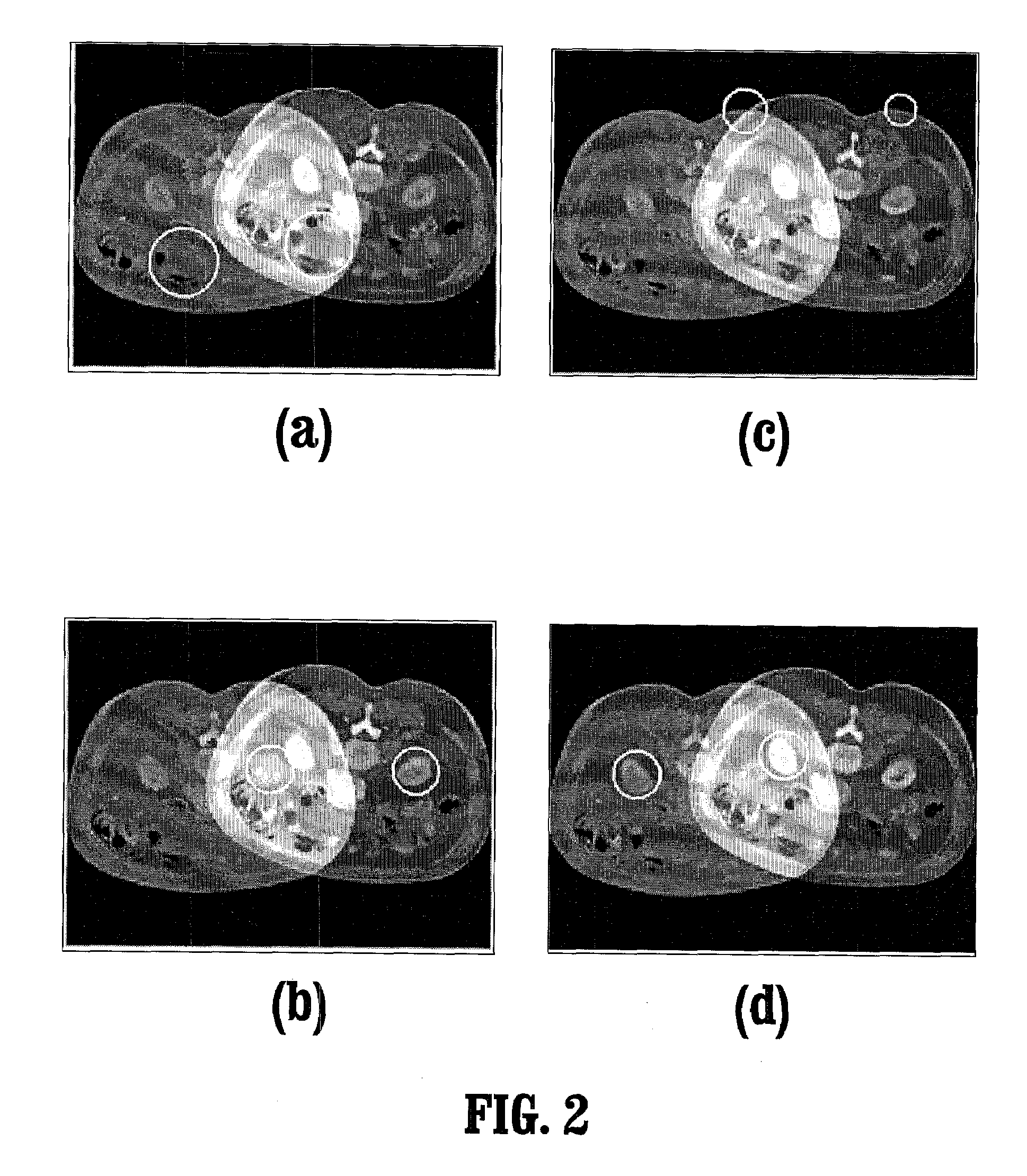

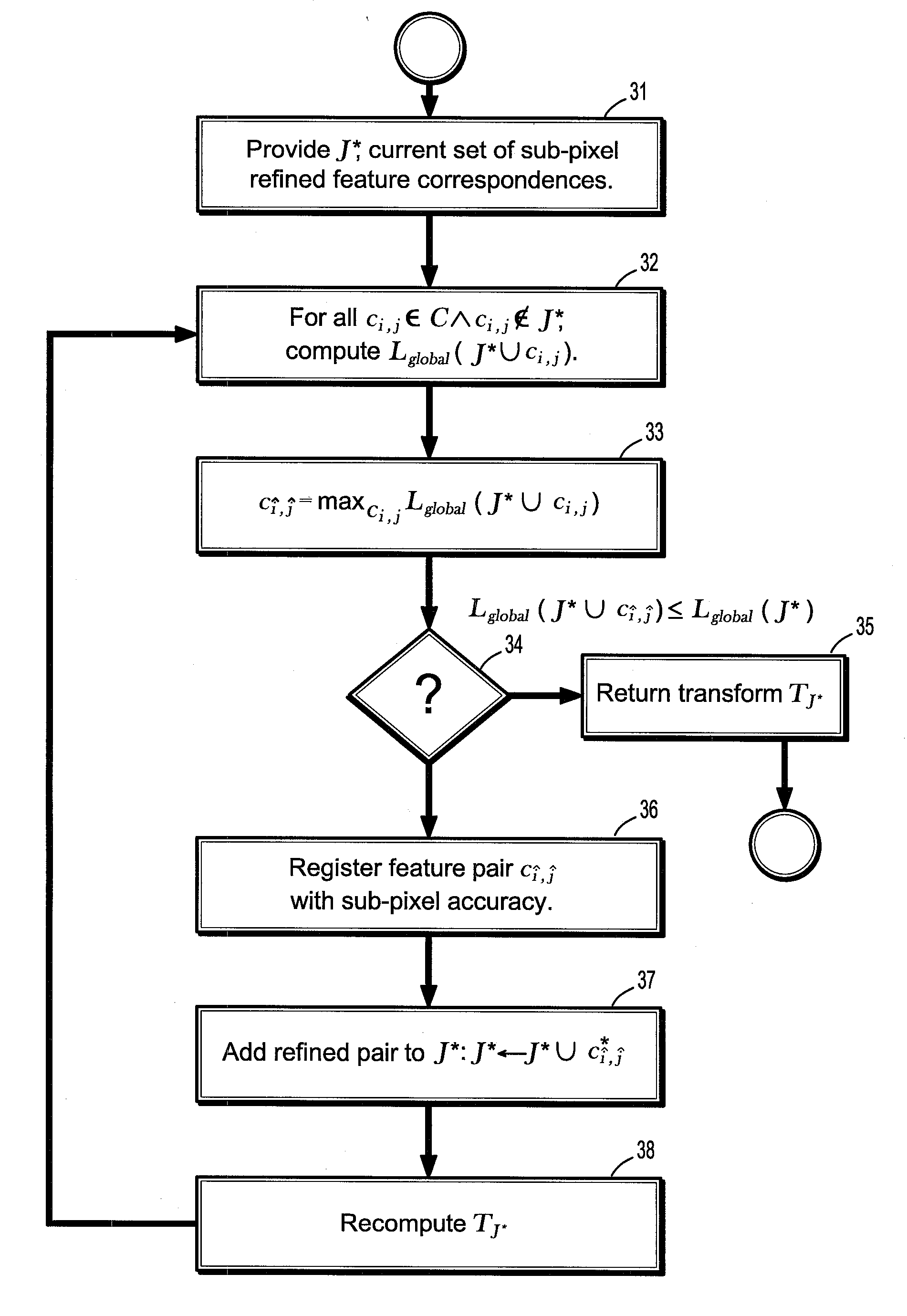

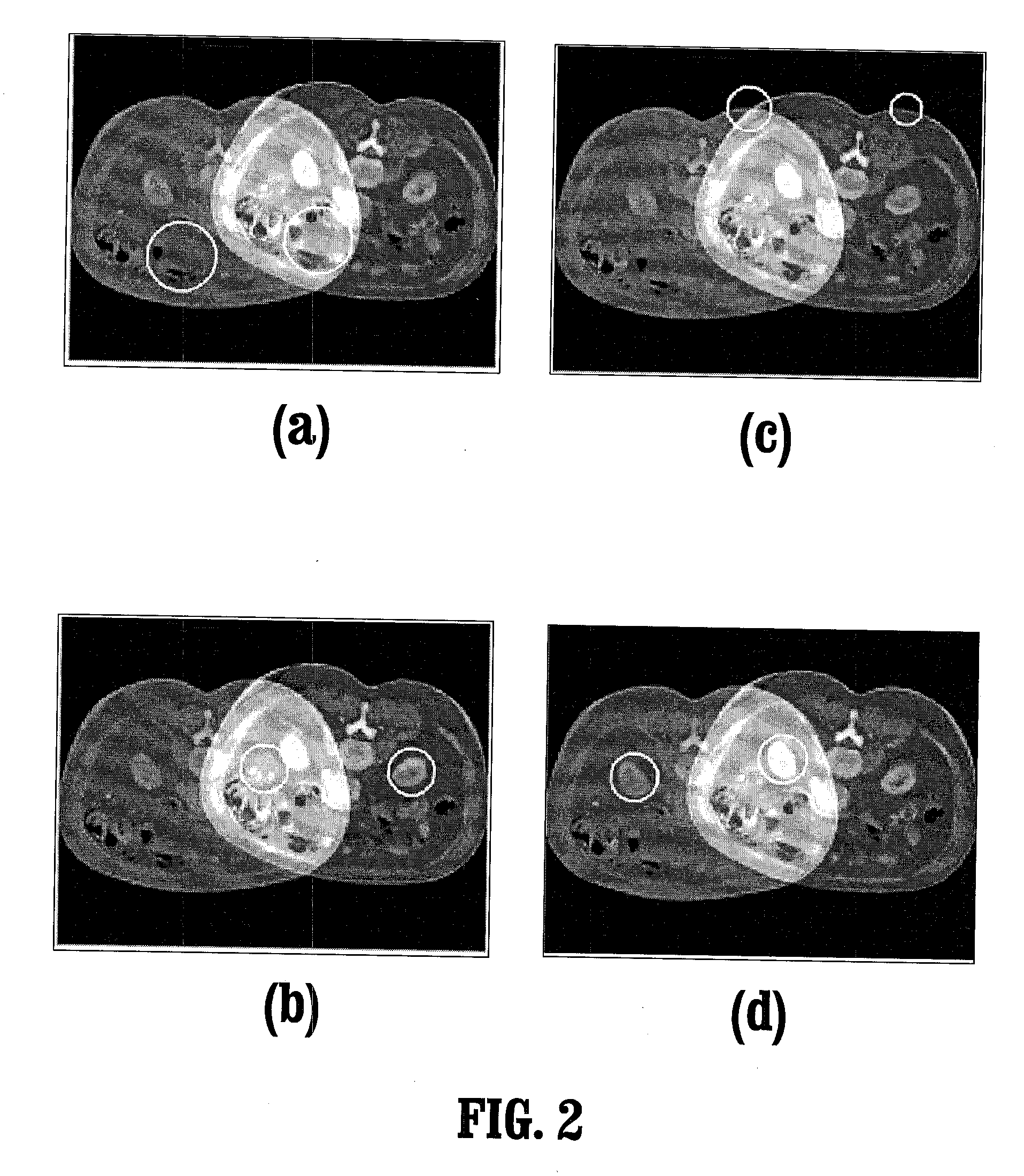

System and method for salient region feature based 3D multi modality registration of medical images

Active | US7583857B2 | Eliminate the problem | Reduce complexity | Image enhancement | Image analysis | Similarity measure | Feature based

A method for aligning a pair of images includes providing a pair of images, identifying salient feature regions in both a first image and a second image, wherein each region is associated with a spatial scale, representing feature regions by a center point of each region, registering the feature points of one image with the feature points of the other image based on local intensities, ordering said feature pairs by a similarity measure, and optimizing a joint correspondence set of feature pairs by refining the center points to sub-pixel accuracy.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

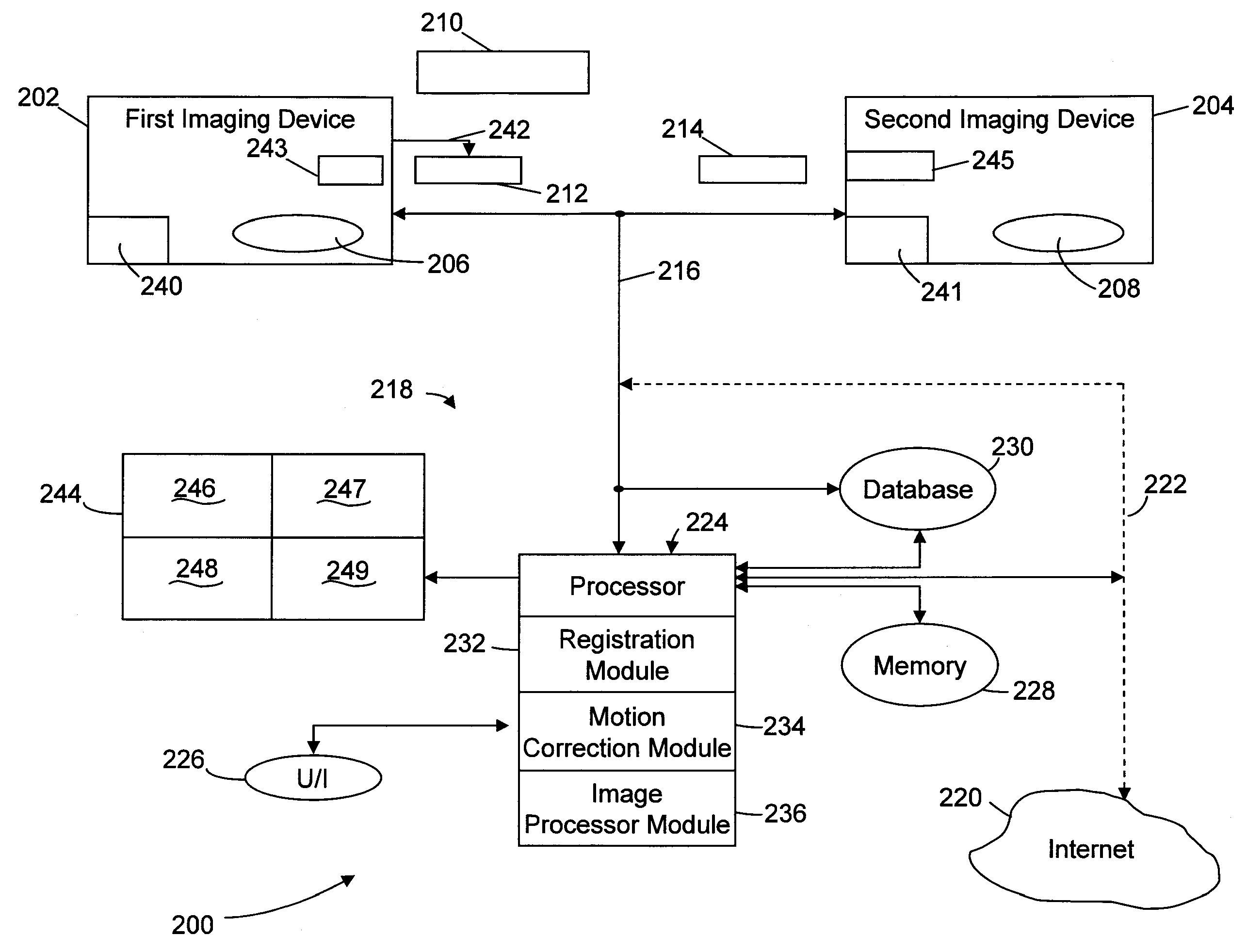

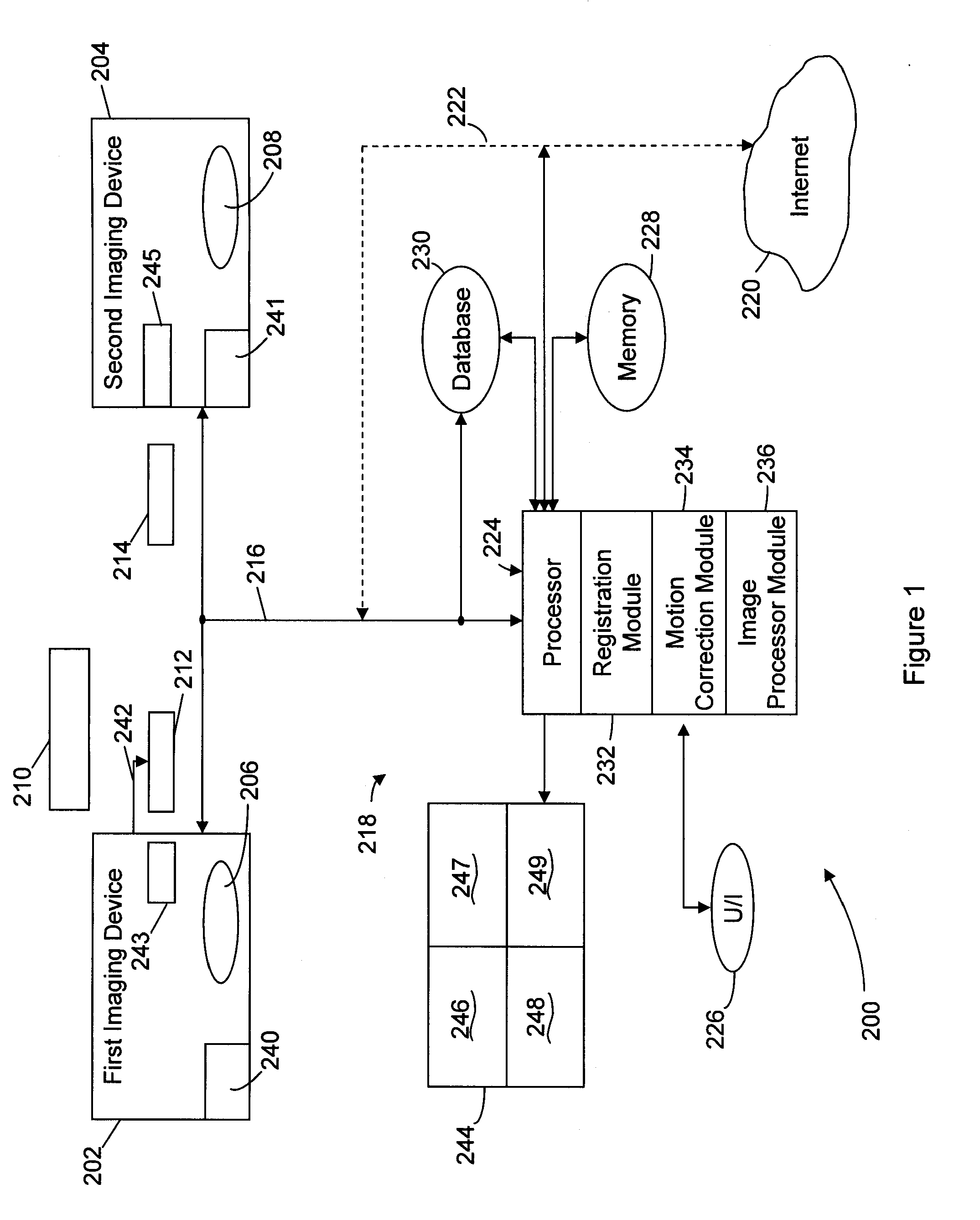

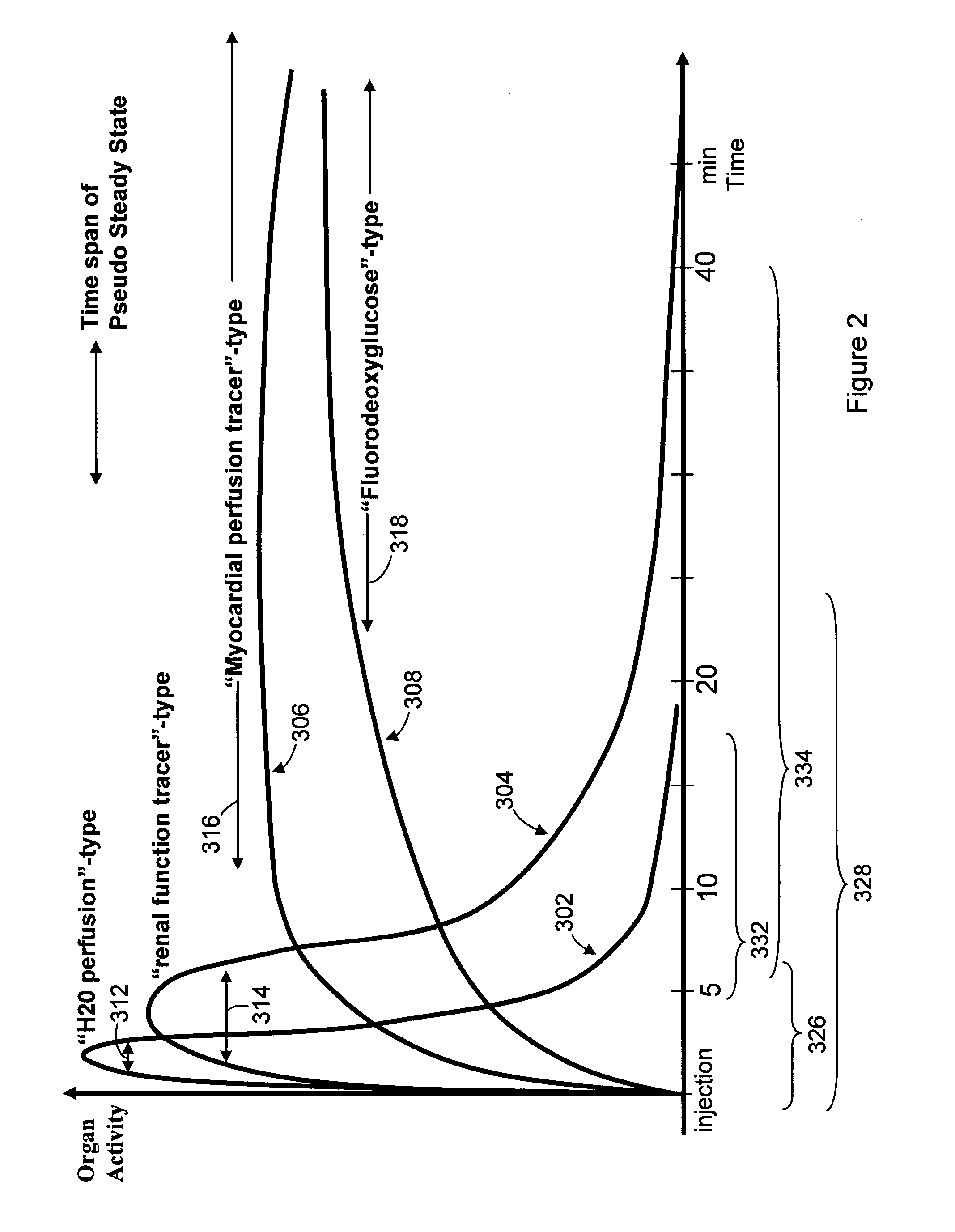

Method & system for multi-modality imaging of sequentially obtained pseudo-steady state data

Methods, protocols and systems are provided for multi-modality imaging based on pharmacokinetics of an imaging agent. An imaging agent is introduced into a subject, and is permitted to collect generally in a region of interest (ROI) in the subject until attaining a pseudo-steady state (PSS) distribution within the ROI. The imaging agent records a first functional state of the ROI at a given point in time. A first image data set is obtained with a first imaging modality during a first acquisition time interval that occurs prior or proximate in time with the PSS time interval. The subject is transferred from the first imaging modality to a second imaging modality during a transfer time interval that overlaps the PSS time interval. Once transfer is complete, a second image data set is obtained with the second imaging modality during a second acquisition time interval that also overlaps the PSS time interval in which the imaging agent maintains the PSS distribution in the ROI. In accordance with a protocol, the transfer time interval and second acquisition time interval substantially fall within the PSS time interval. The imaging agent collects in the ROI during an uptake time interval which may or may not precede the time interval during which first imaging modality obtains at least a portion of the first image data set. The second image data set is obtained while the imaging agent persists in the ROI at the PSS distribution reflective of the first functional state even after the ROI is no longer in the first functional state.

Owner:UNIV ZURICH +1
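
The timing constraint in the abstract above (the transfer and second acquisition intervals should substantially fall within the PSS time interval) amounts to an interval-overlap check. The sketch below is one hedged way to express it. The `Interval` type, the minute units, and the 0.9 cutoff used to interpret "substantially" are assumptions, not values from the patent.

```python
from typing import NamedTuple


class Interval(NamedTuple):
    """A time interval in minutes after injection (units are an assumption)."""
    start: float
    end: float


def overlap_fraction(inner: Interval, outer: Interval) -> float:
    """Fraction of `inner` that lies inside `outer`, from 0.0 to 1.0."""
    length = inner.end - inner.start
    if length <= 0:
        return 0.0
    shared = min(inner.end, outer.end) - max(inner.start, outer.start)
    return max(0.0, shared) / length


def protocol_ok(pss: Interval, transfer: Interval, second_acq: Interval,
                min_fraction: float = 0.9) -> bool:
    """Check that transfer and second acquisition substantially fall within the PSS interval.

    `min_fraction` is a hypothetical reading of "substantially".
    """
    return (overlap_fraction(transfer, pss) >= min_fraction
            and overlap_fraction(second_acq, pss) >= min_fraction)


pss = Interval(30, 120)
print(protocol_ok(pss, Interval(40, 50), Interval(50, 80)))    # inside the PSS window
print(protocol_ok(pss, Interval(40, 50), Interval(100, 160)))  # runs past the PSS window
```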

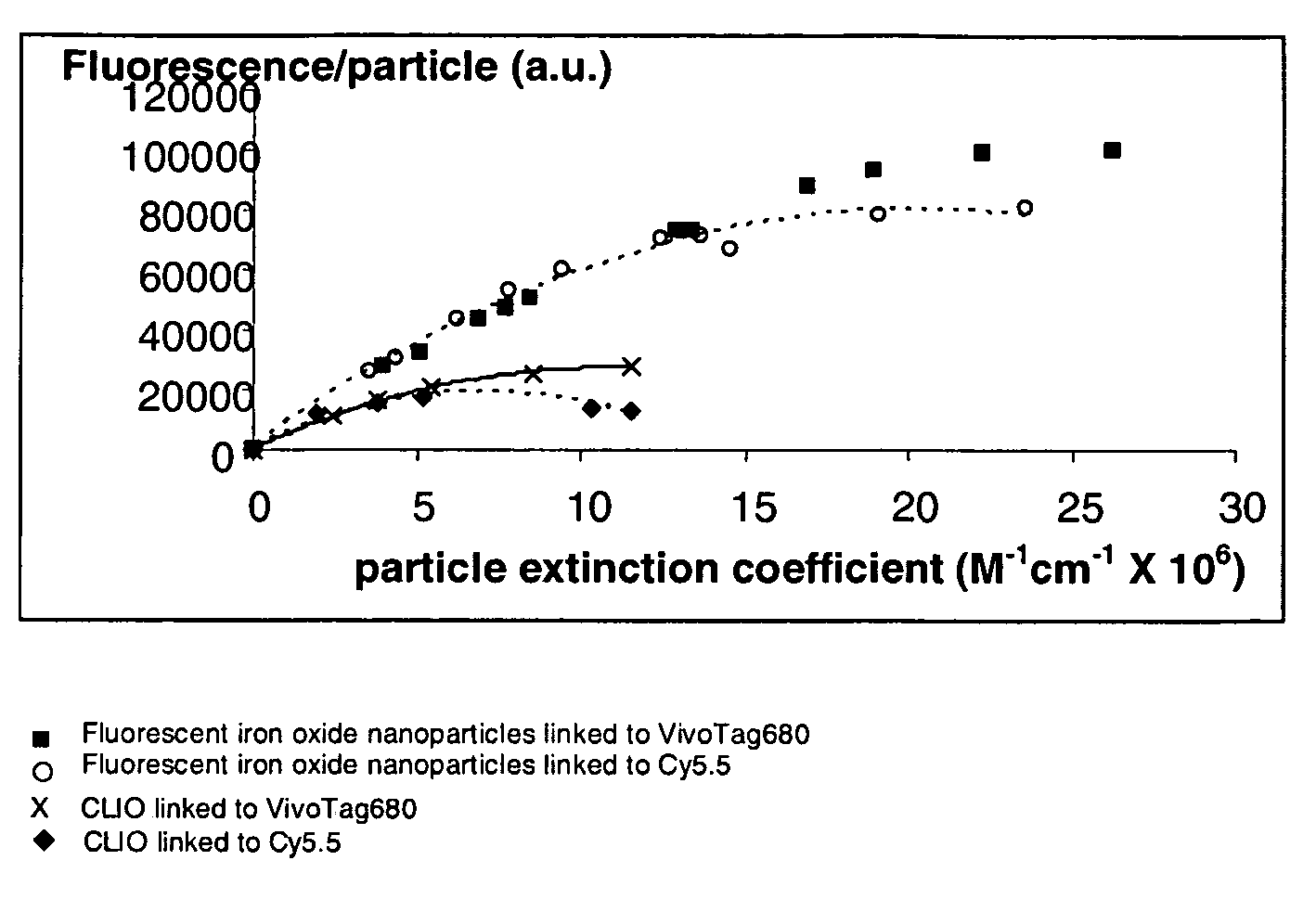

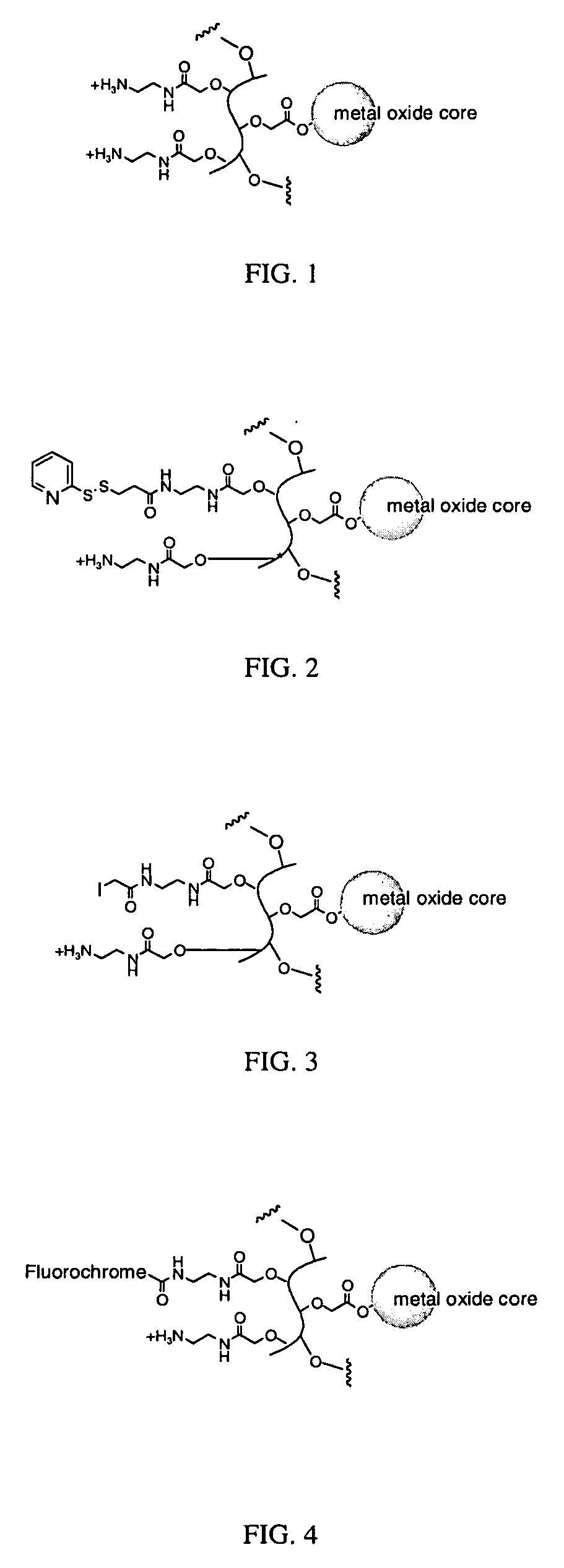

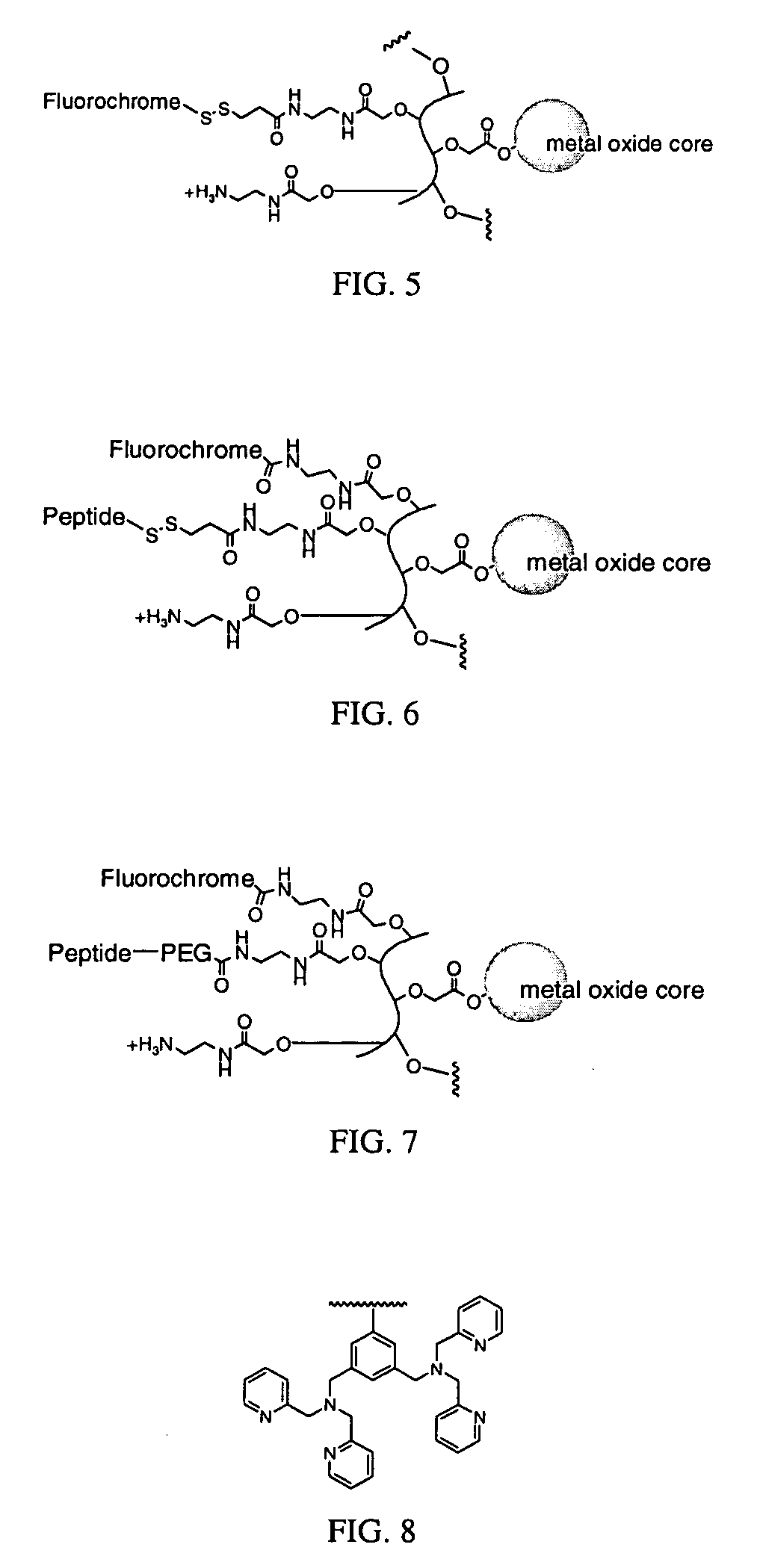

Biocompatible fluorescent metal oxide nanoparticles

Active | US20080226562A1 | Ultrasonic/sonic/infrasonic diagnostics | Powder delivery | Metal oxide nanoparticles | Chemical ligation

The invention relates to highly fluorescent metal oxide nanoparticles to which biomolecules and other compounds can be chemically linked to form biocompatible, stable optical imaging agents for in vitro and in vivo applications. The fluorescent metal oxide nanoparticles may also be used for magnetic resonance imaging (MRI), thus providing a multi modality imaging agent.

Owner:VISEN MEDICAL INC

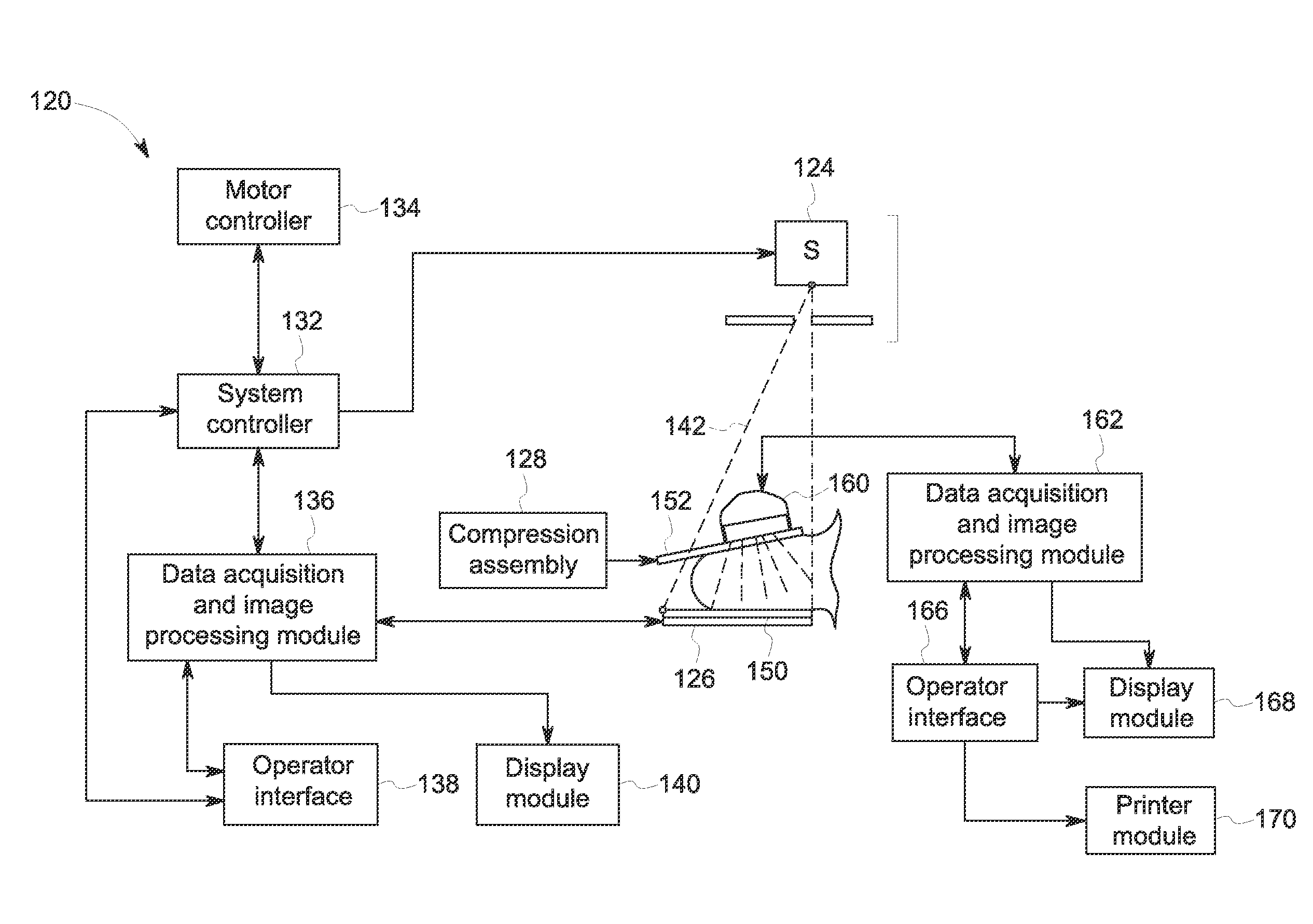

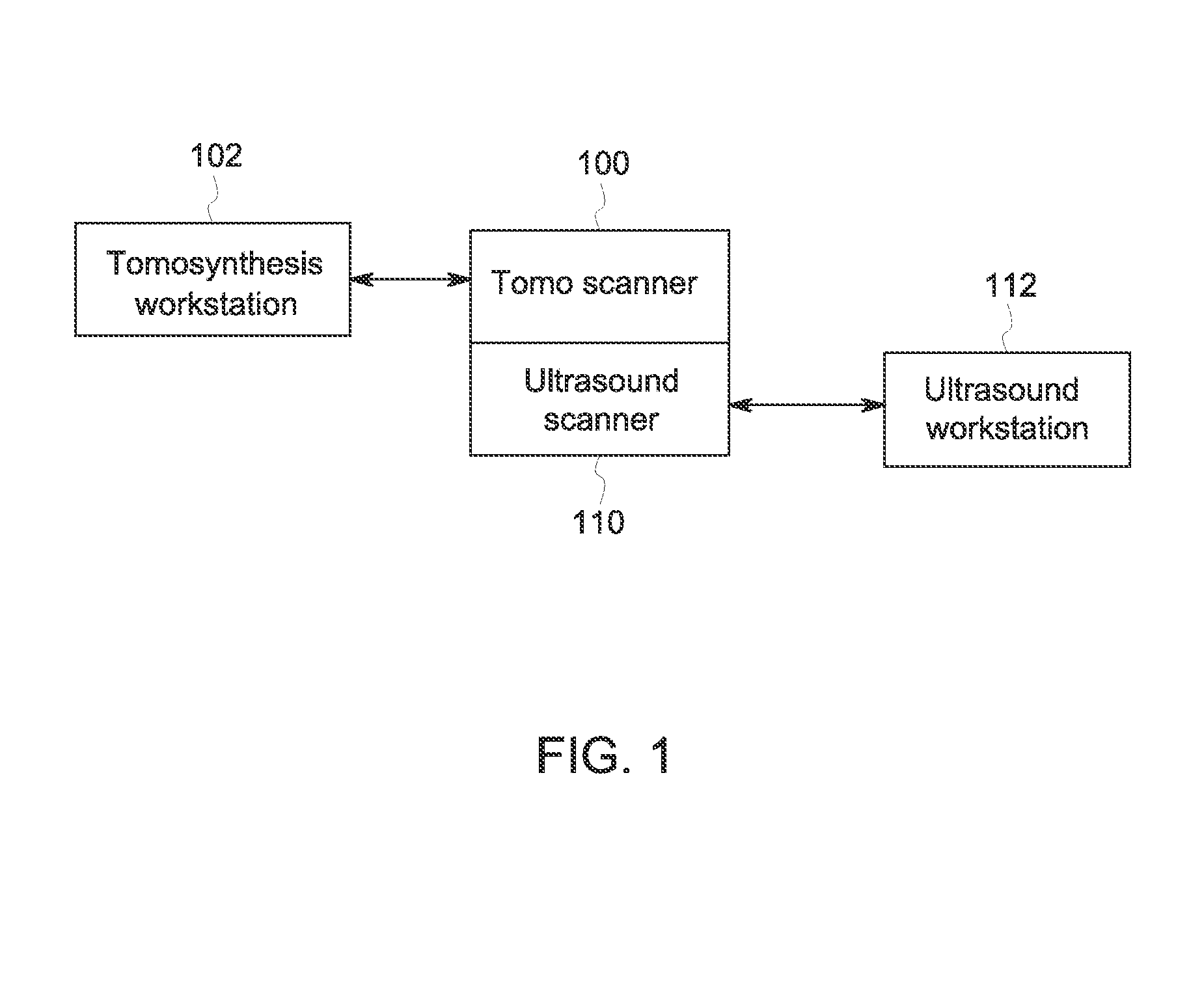

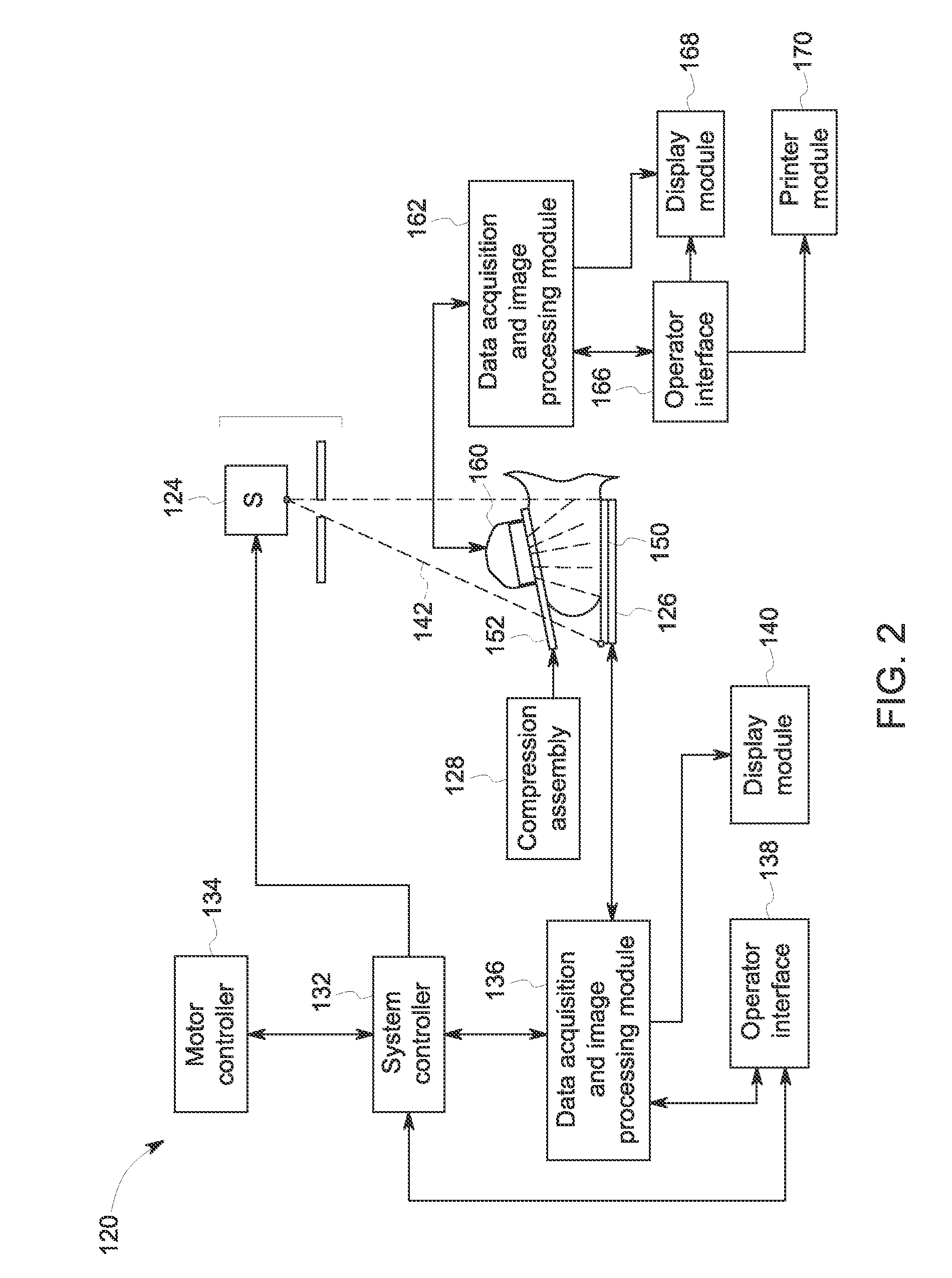

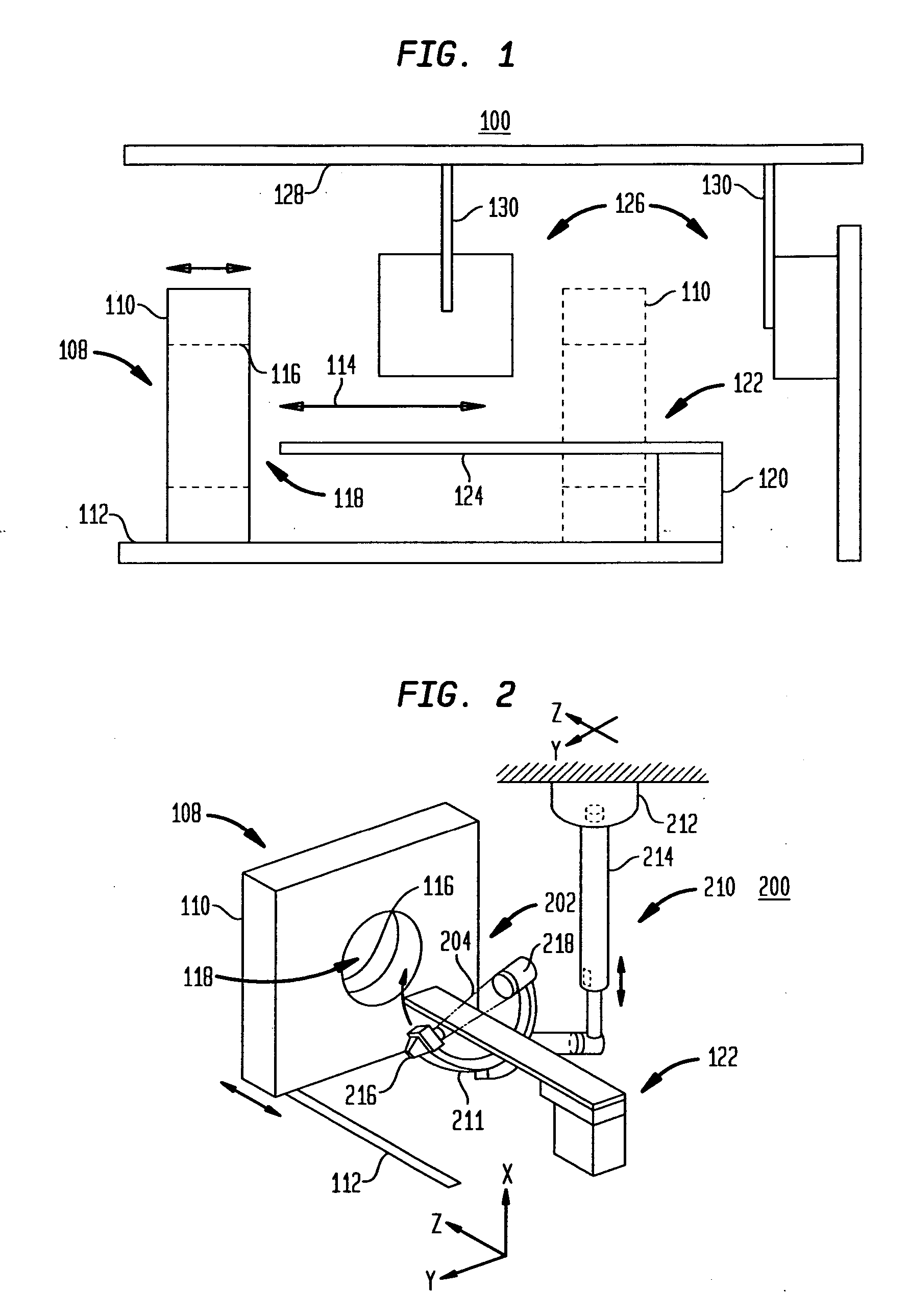

Breast imaging method and system

Active | US20160166234A1 | Reduce compression | Organ movement/changes detection | Tomosynthesis | Ultrasound imaging

An ultrasound scan probe and support mechanism are provided for use in a multi-modality mammography imaging system, such as a combined tomosynthesis and ultrasound imaging system. In one embodiment, the ultrasound components may be positioned and configured so as not to interfere with the tomosynthesis imaging operation, such as to remain out of the X-ray beam path. Further, the ultrasound probe and associated components may be configured so as to move and scan the breast tissue under compression, such as under the compression provided by one or more paddles used in the tomosynthesis imaging operation.

Owner:GENERAL ELECTRIC CO

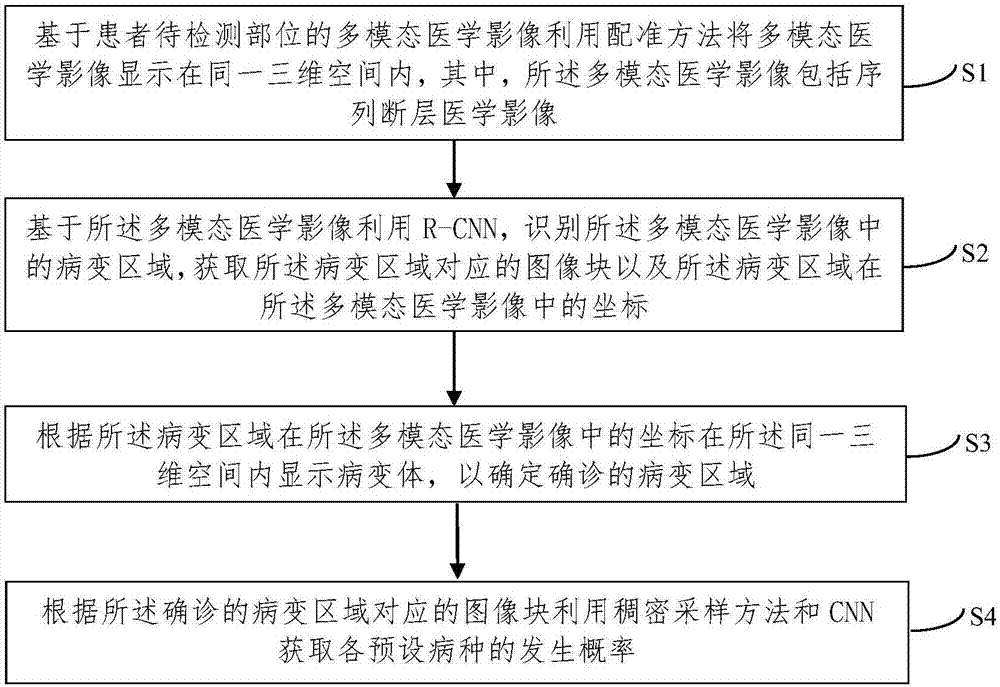

Multi-modality medical image identification method and device based on deep learning

Active | CN106909778A | Easy to observe | Easy to diagnose and judge | Image analysis | Special data processing applications | Disease | Diagnostic Radiology Modality

The invention provides a multi-modality medical image identification method based on deep learning. The method includes the following steps: on the basis of multi-modality medical images of the part of a patient to be examined, a registration method is adopted to display the multi-modality medical images in the same three-dimensional space; R-CNN is adopted to identify lesion areas in the multi-modality medical images; according to the coordinates of the lesion areas in the multi-modality medical images, lesion bodies are displayed in the same three-dimensional space; and according to the image blocks corresponding to diagnosed lesion areas, a dense sampling method and a CNN are adopted to obtain occurrence probabilities of various preset disease types. The invention also provides a multi-modality medical image identification device based on deep learning. The device includes a multi-modality medical image display module, a lesion area detection module, a lesion body display module and a preset disease-type occurrence probability module. With the method and device, lesion areas in the medical images are identified automatically, and effective reference data is provided for further diagnosis by doctors.

Owner:BEIJING COMPUTING CENT

Methods and systems for multi-modality imaging

Active | US20050207526A1 | Radiation/particle handling | Computerised tomographs | Diagnostic Radiology Modality | X-ray

A method of examining a patient is provided. The method includes aligning a patient table in an opening of a gantry unit that includes a CZT photon detector and an x-ray source, imaging a patient utilizing a first imaging modality during a first portion of a scan using the CZT detector, and imaging a patient utilizing a second imaging modality during a second portion of the scan using the CZT detector wherein the second imaging modality is different than the first imaging modality.

Owner:GE MEDICAL SYST GLOBAL TECH CO LLC

Multi-modality flavored chewing gum compositions

The present invention relates to compositions for a multi-modality center-filled chewing gum. The individual gum pieces, which include the compositions of the present invention, may include a center-fill region surrounded by a gum region. The gum region may include a gum base. The individual gum pieces optionally may be further coated with an external coating layer. At least two components that create a duality, such as two flavors, may be incorporated into different regions of the gum.

Owner:KRAFT FOODS GLOBAL BRANDS LLC

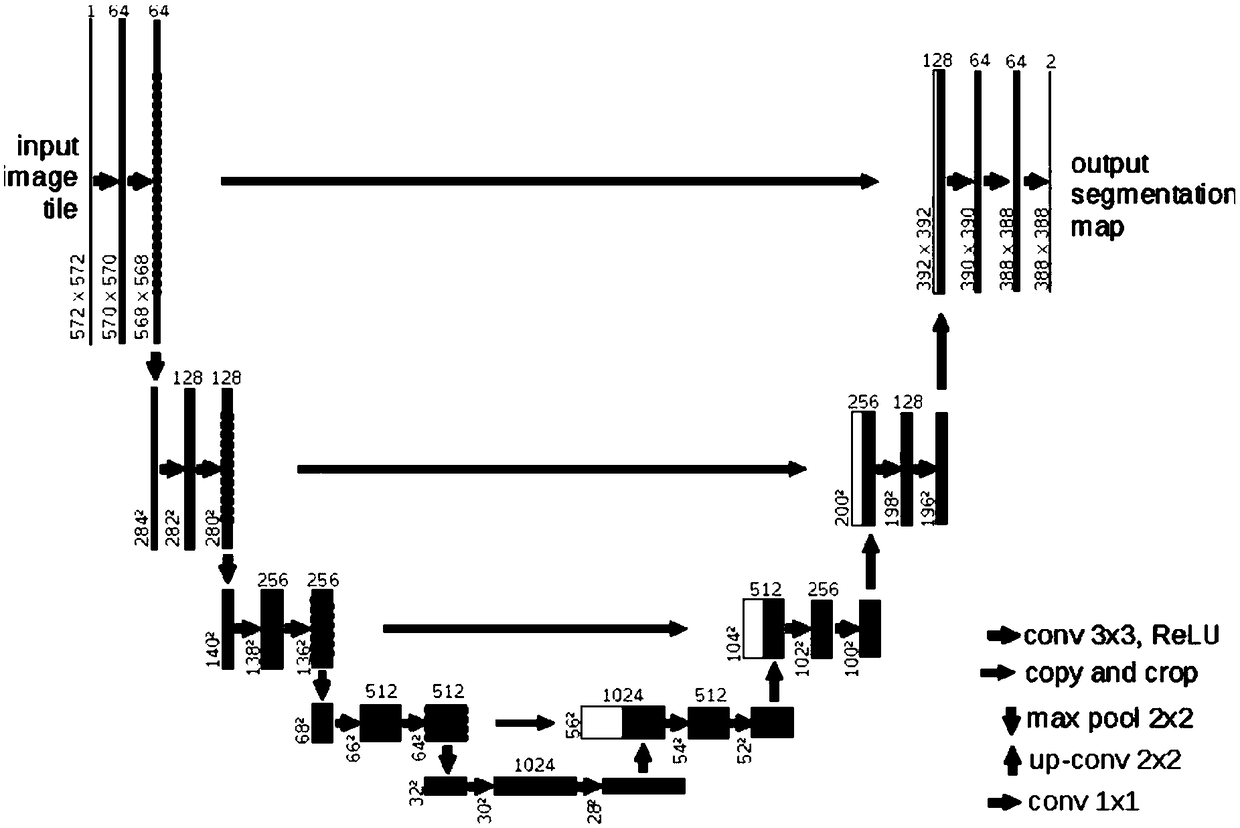

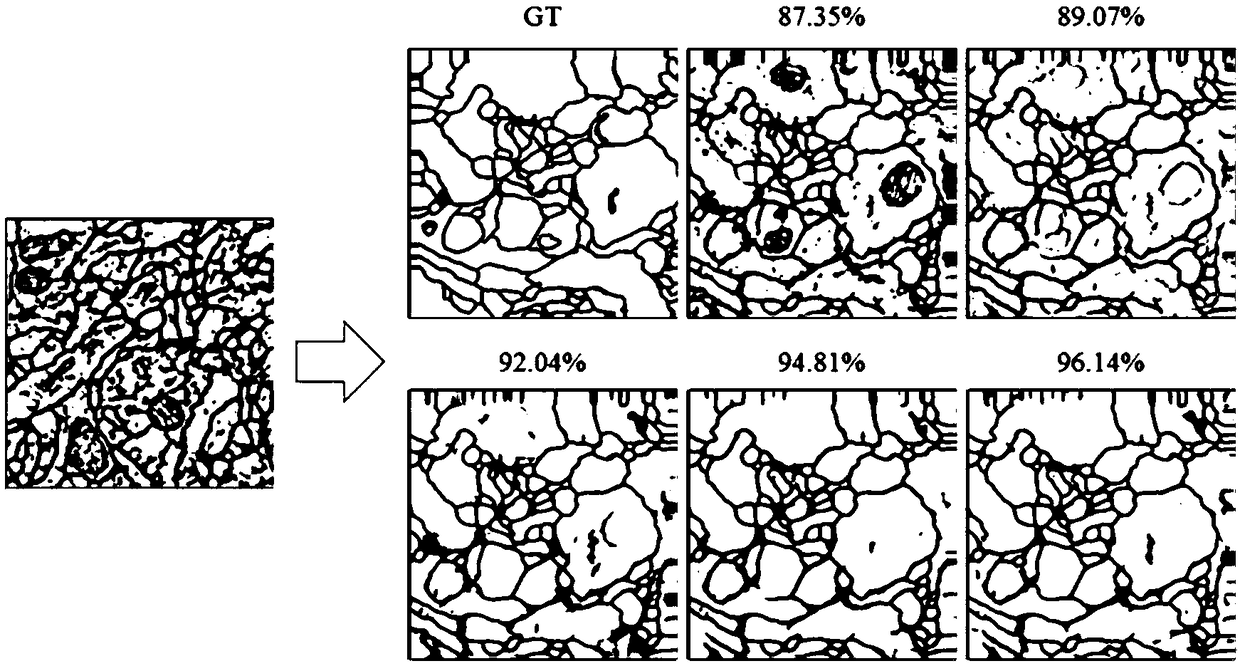

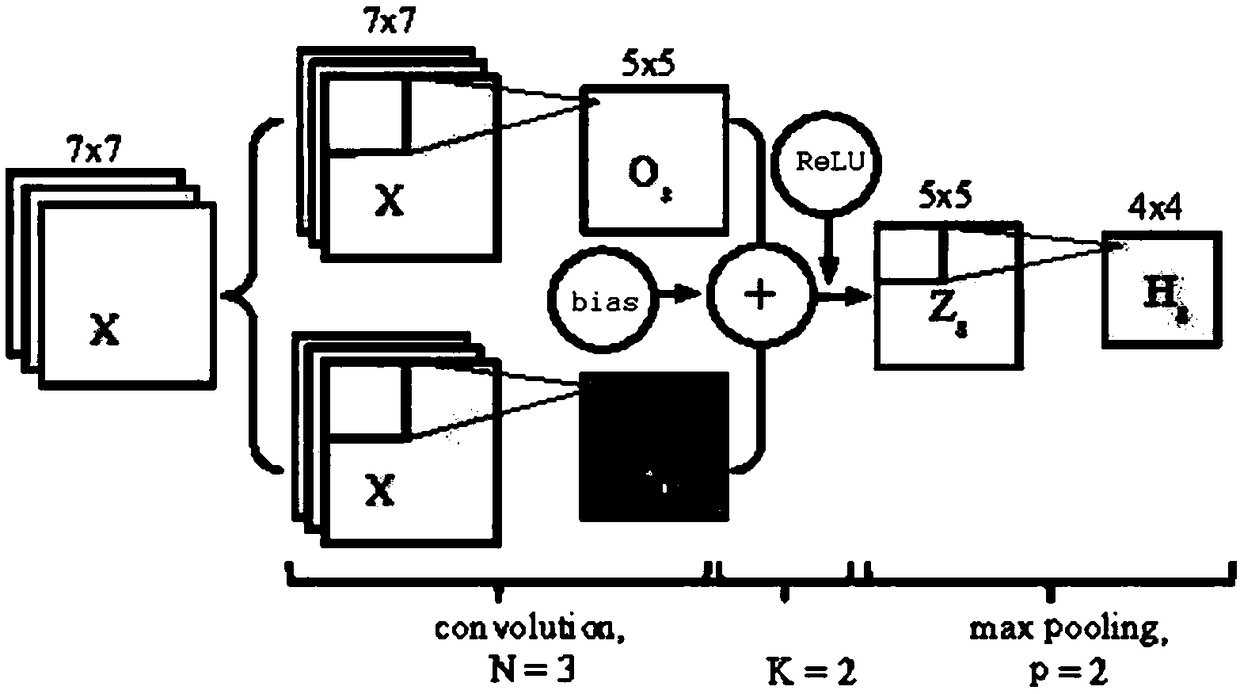

An MRI brain tumor image segmentation method based on optimized U-net network model

Inactive | CN109087318A | Improve accuracy | Preserve image edge information | Image enhancement | Image analysis | Image segmentation | Network model

The invention relates to an MRI brain tumor image segmentation method based on an optimized U-Net network model. The method comprises the following steps: 101, preprocessing the acquired multimodal MRI brain tumor image data; 102, inputting the preprocessed multimodal MRI brain tumor image data into the trained U-Net network model; 103, acquiring the multimodal MRI brain tumor image segmentation data output by the U-Net network model, wherein the segmentation data output by the U-Net network model preserves the image edge information to generate a complete segmented image feature map. The image segmentation method provided by the invention not only preserves the image edge information and generates a complete feature map, but also improves the accuracy of image segmentation.

Owner:NORTHEASTERN UNIV

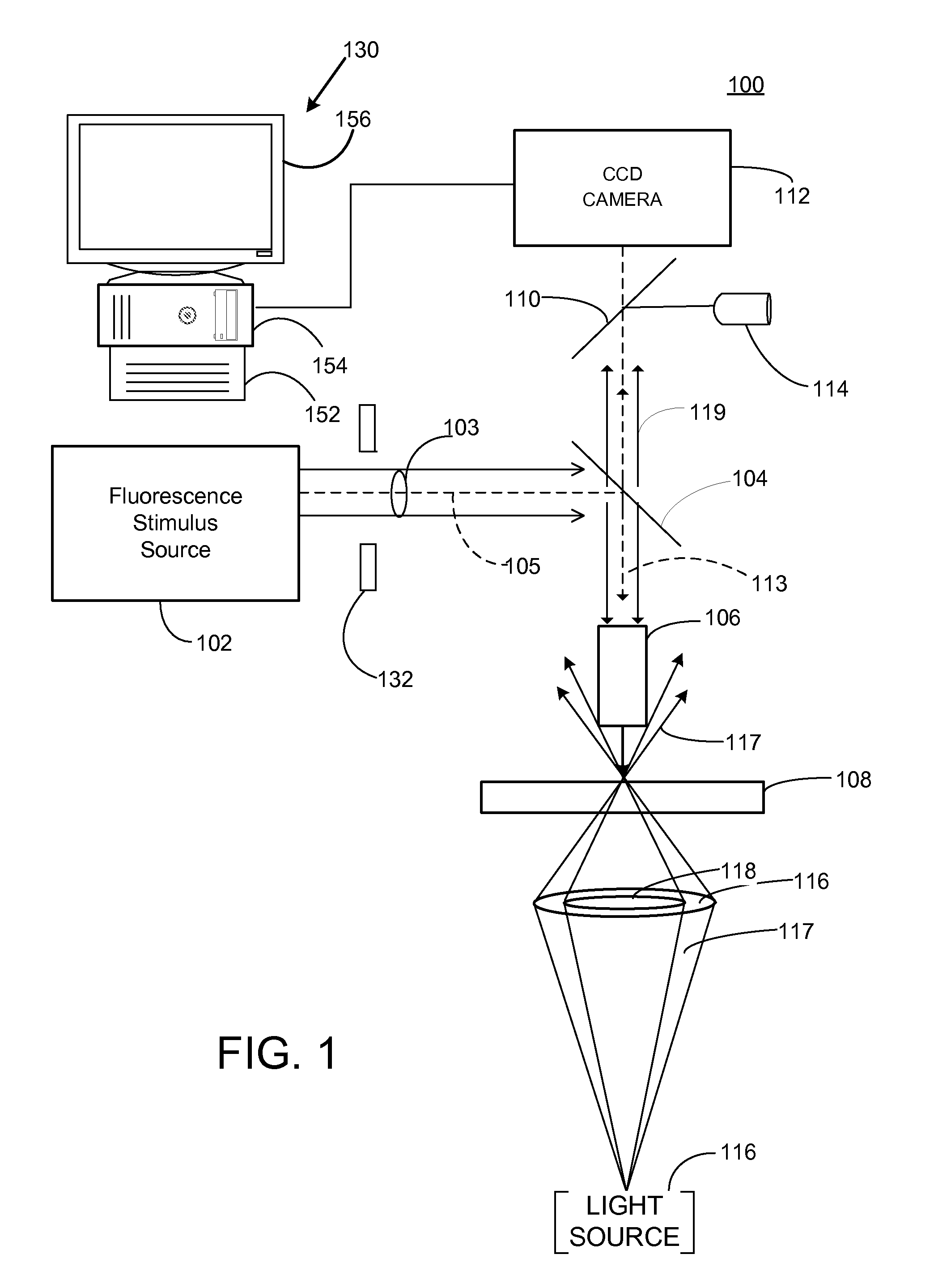

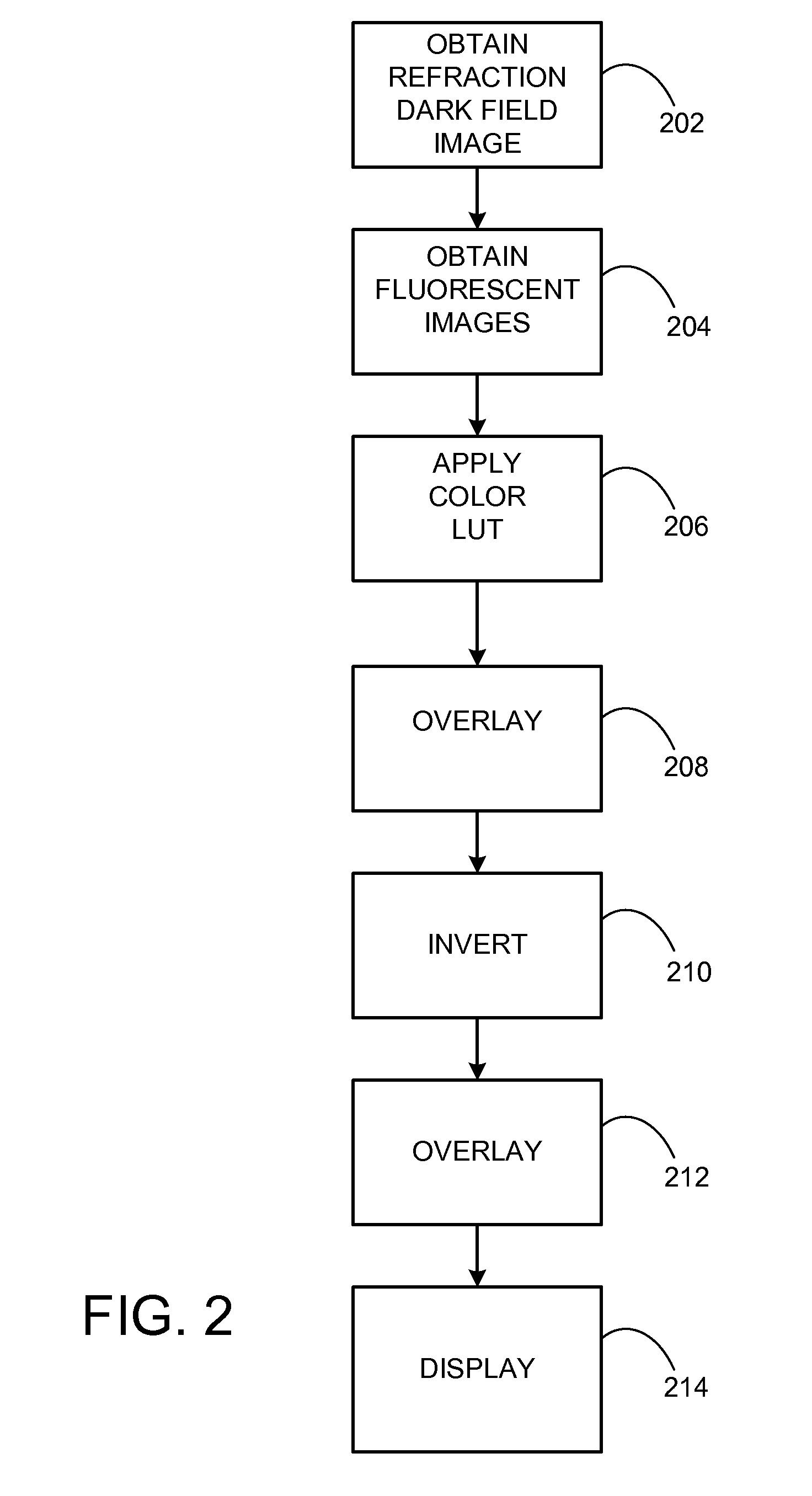

Multi-modality contrast and brightfield context rendering for enhanced pathology determination and multi-analyte detection in tissue

Active | US20120200694A1 | Material analysis by optical means | Character and pattern recognition | Anatomical structures | Diagnostic Radiology Modality

Multiple modality contrast can be used to produce images that can be combined and rendered to produce images similar to those produced with wavelength absorbing stains viewed under transmitted white light illumination. Images obtained with other complementary contrast modalities can be presented using engineered color schemes based on classical contrast methods used to reveal the same anatomical structures and histochemistry, thereby providing relevance to medical training and experience. Dark-field contrast images derived from refractive index and fluorescent DAPI counterstain images are combined to produce images similar to those obtained with conventional H&E staining for pathology interpretation. Such multi-modal image data can be streamed for live navigation of histological samples, and can be combined with molecular localizations of genetic DNA probes (FISH), sites of mRNA expression (mRNA-ISH), and immunohistochemical (IHC) probes localized on the same tissue sections, used to evaluate and map tissue sections prepared for imaging mass spectrometry.

Owner:VENTANA MEDICAL SYST INC

System and method for salient region feature based 3D multi modality registration of medical images

Active | US20070047840A1 | Reduces feature clustering | Reduces corresponding search space complexity | Image enhancement | Image analysis | Similarity measure | Feature based

A method for aligning a pair of images includes providing a pair of images, identifying salient feature regions in both a first image and a second image, wherein each region is associated with a spatial scale, representing feature regions by a center point of each region, registering the feature points of one image with the feature points of the other image based on local intensities, ordering said feature pairs by a similarity measure, and optimizing a joint correspondence set of feature pairs by refining the center points to sub-pixel accuracy.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

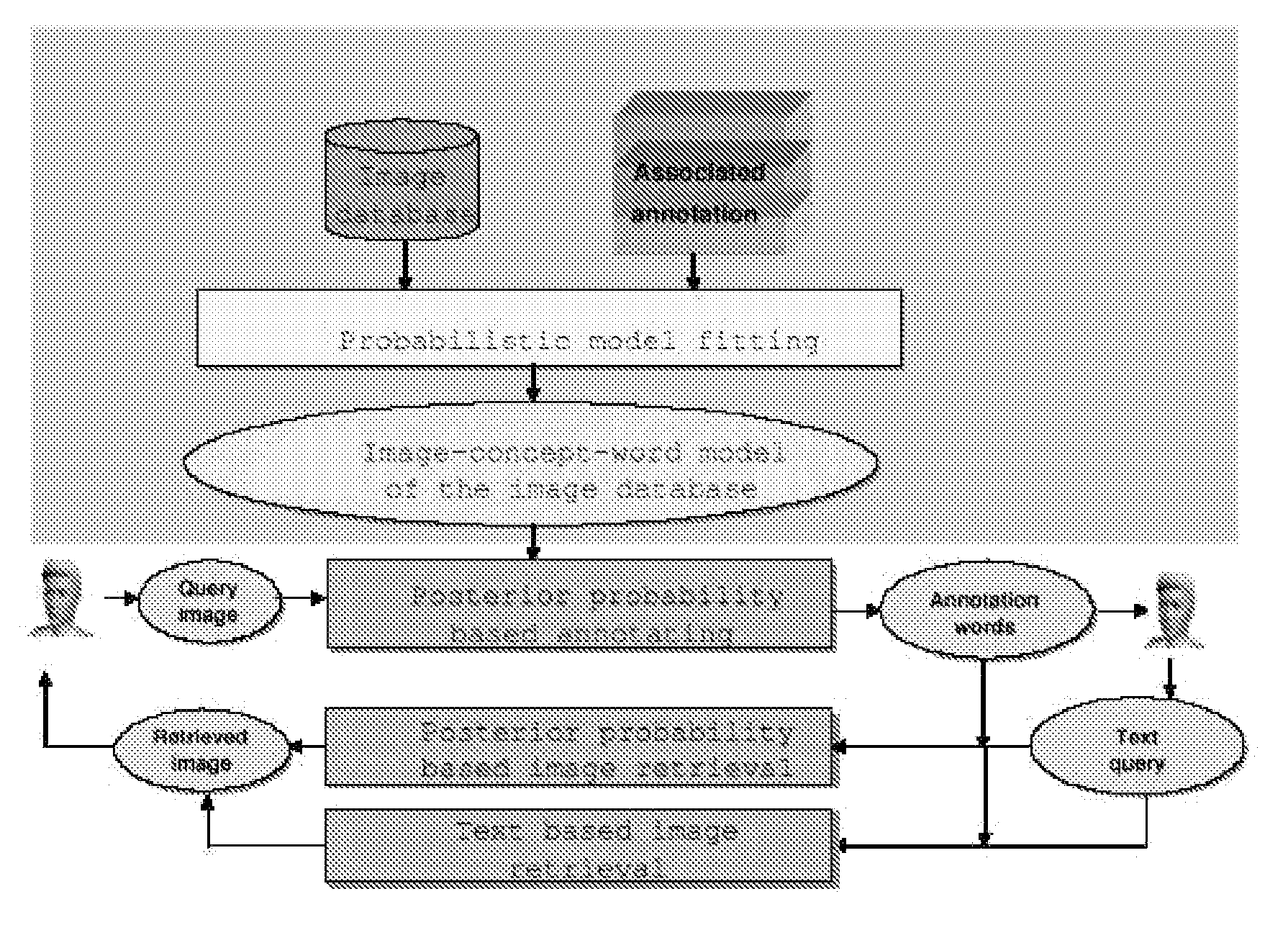

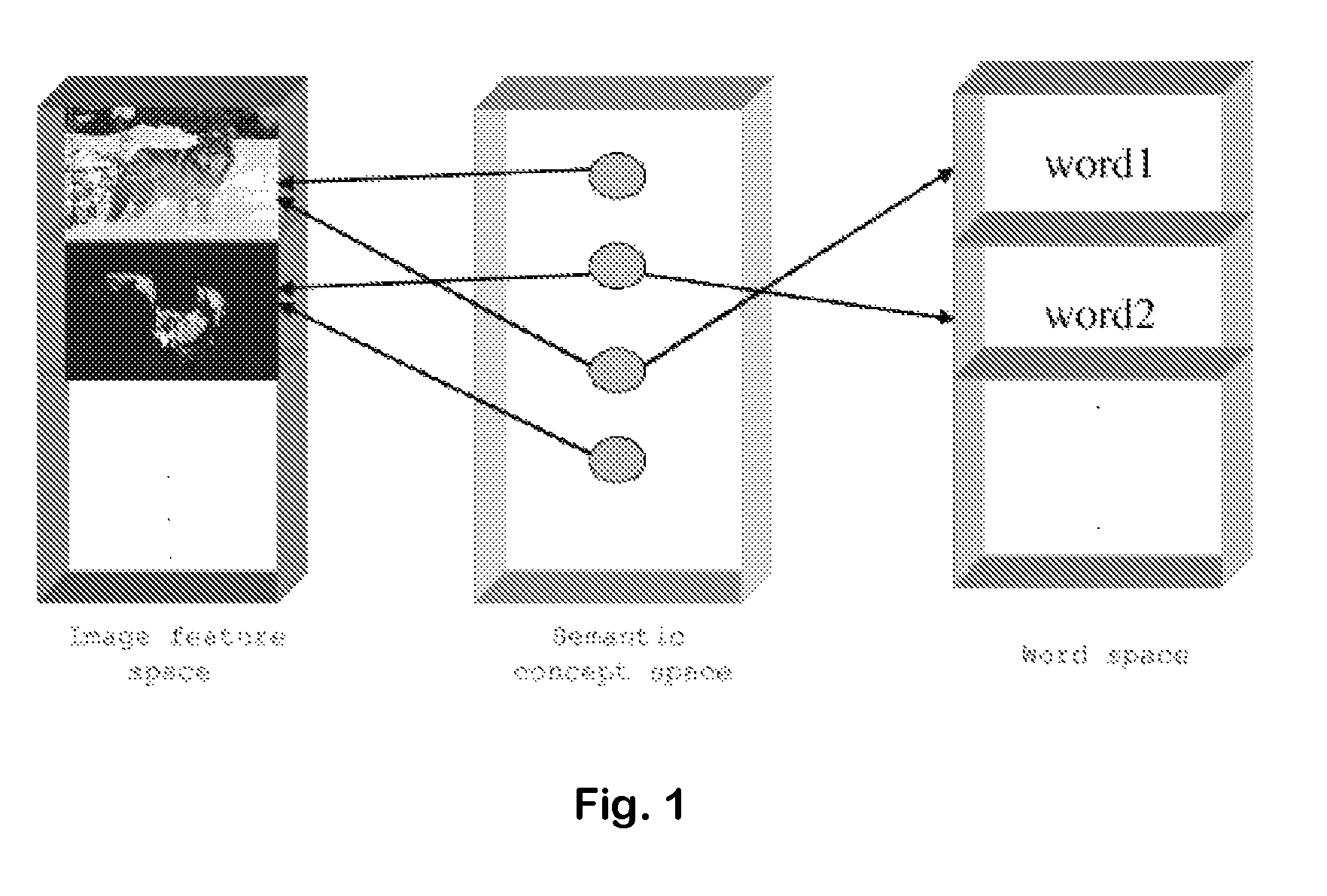

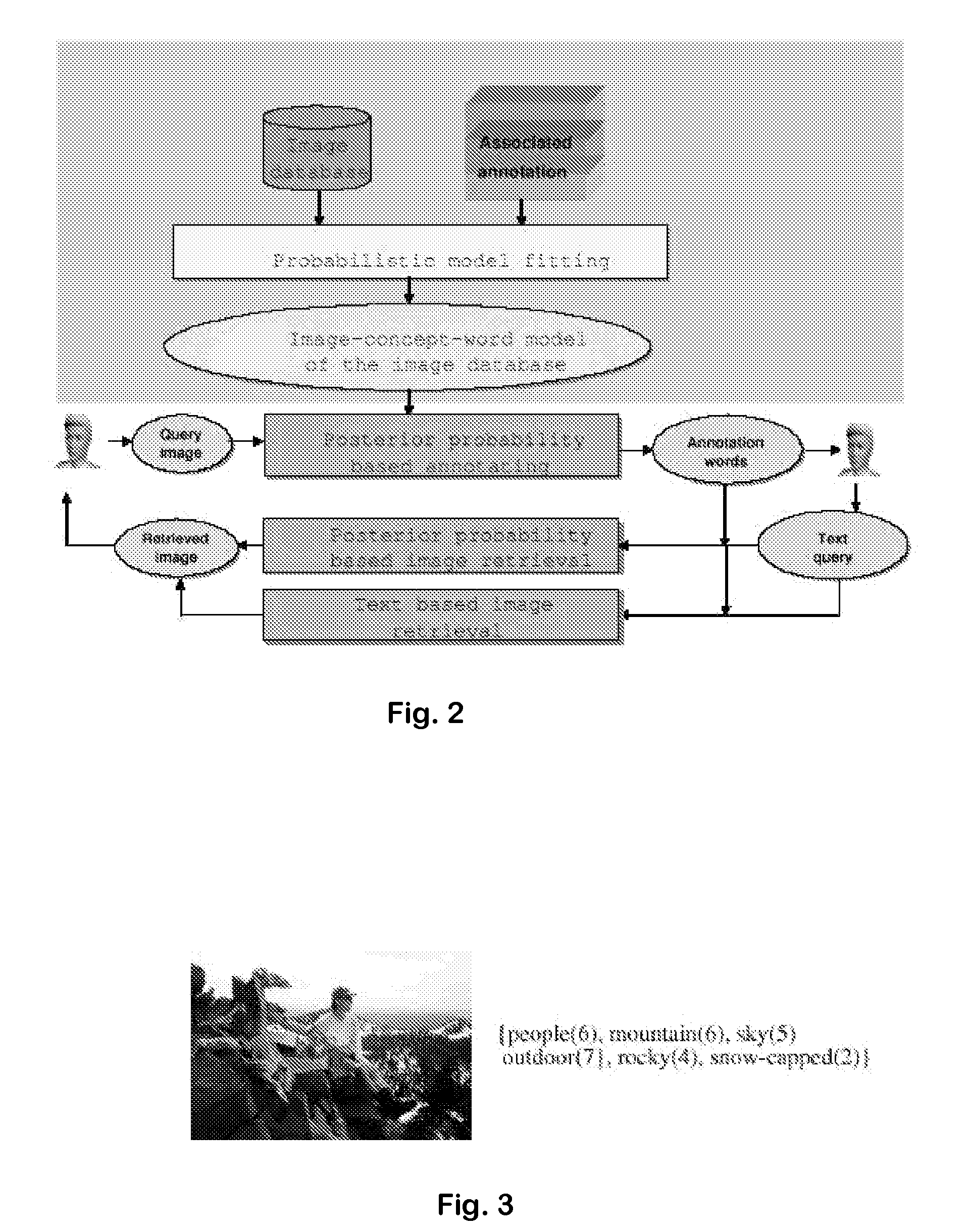

System and method for image annotation and multi-modal image retrieval using probabilistic semantic models

Active | US7814040B1 | Easy to annotate and retrieve | Evaluation less | Mathematical models | Digital data information retrieval | Algorithm | Corresponding conditional

Systems and methods for multi-modal or multimedia image retrieval are provided. Automatic image annotation is achieved based on a probabilistic semantic model in which visual features and textual words are connected via a hidden layer comprising the semantic concepts to be discovered, to explicitly exploit the synergy between the two modalities. The association of visual features and textual words is determined in a Bayesian framework to provide confidence of the association. A hidden concept layer which connects the visual feature(s) and the words is discovered by fitting a generative model to the training images and annotation words. An Expectation-Maximization (EM) based iterative learning procedure determines the conditional probabilities of the visual features and the textual words given a hidden concept class. Based on the discovered hidden concept layer and the corresponding conditional probabilities, the image annotation and the text-to-image retrieval are performed using the Bayesian framework.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

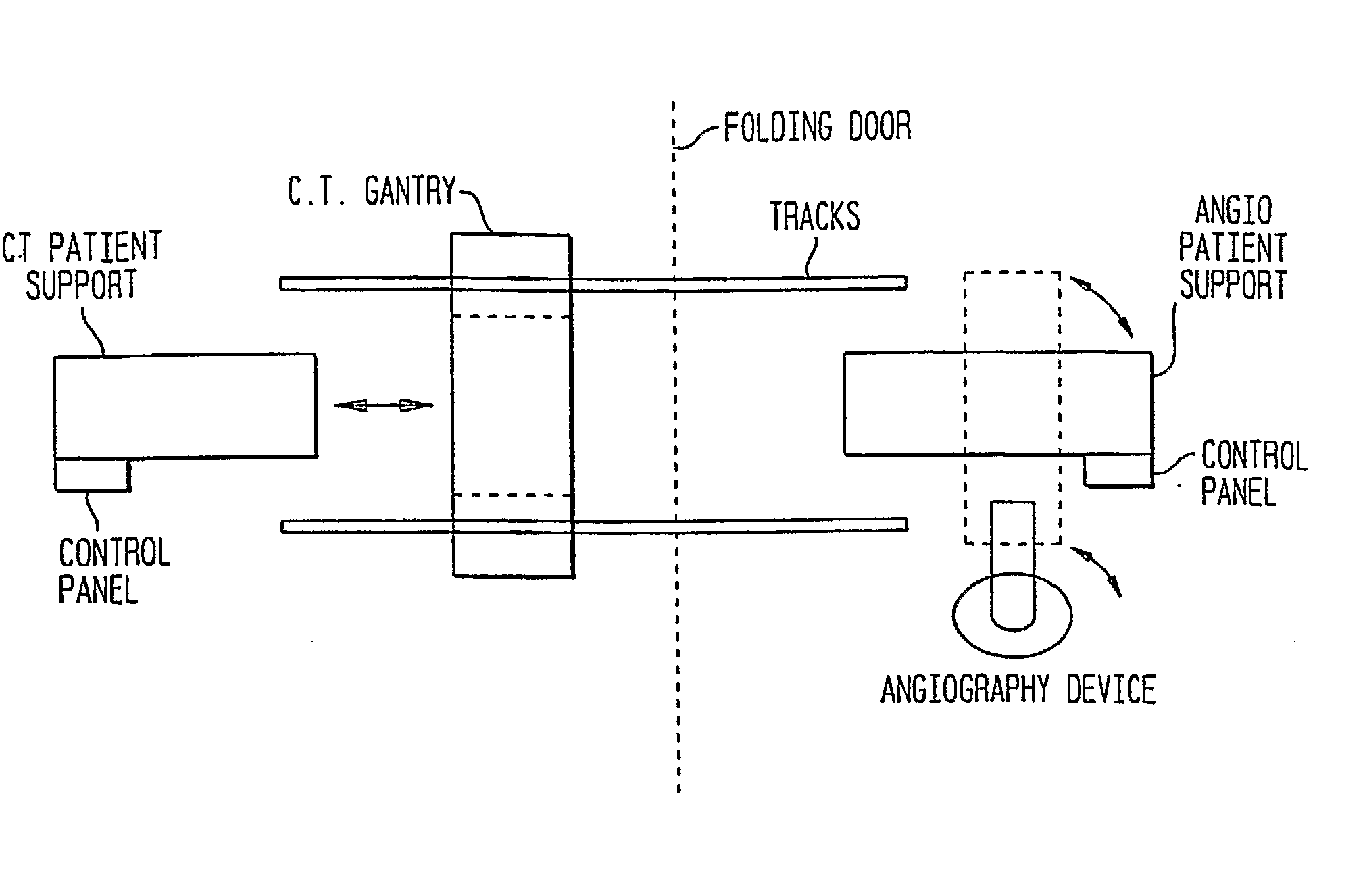

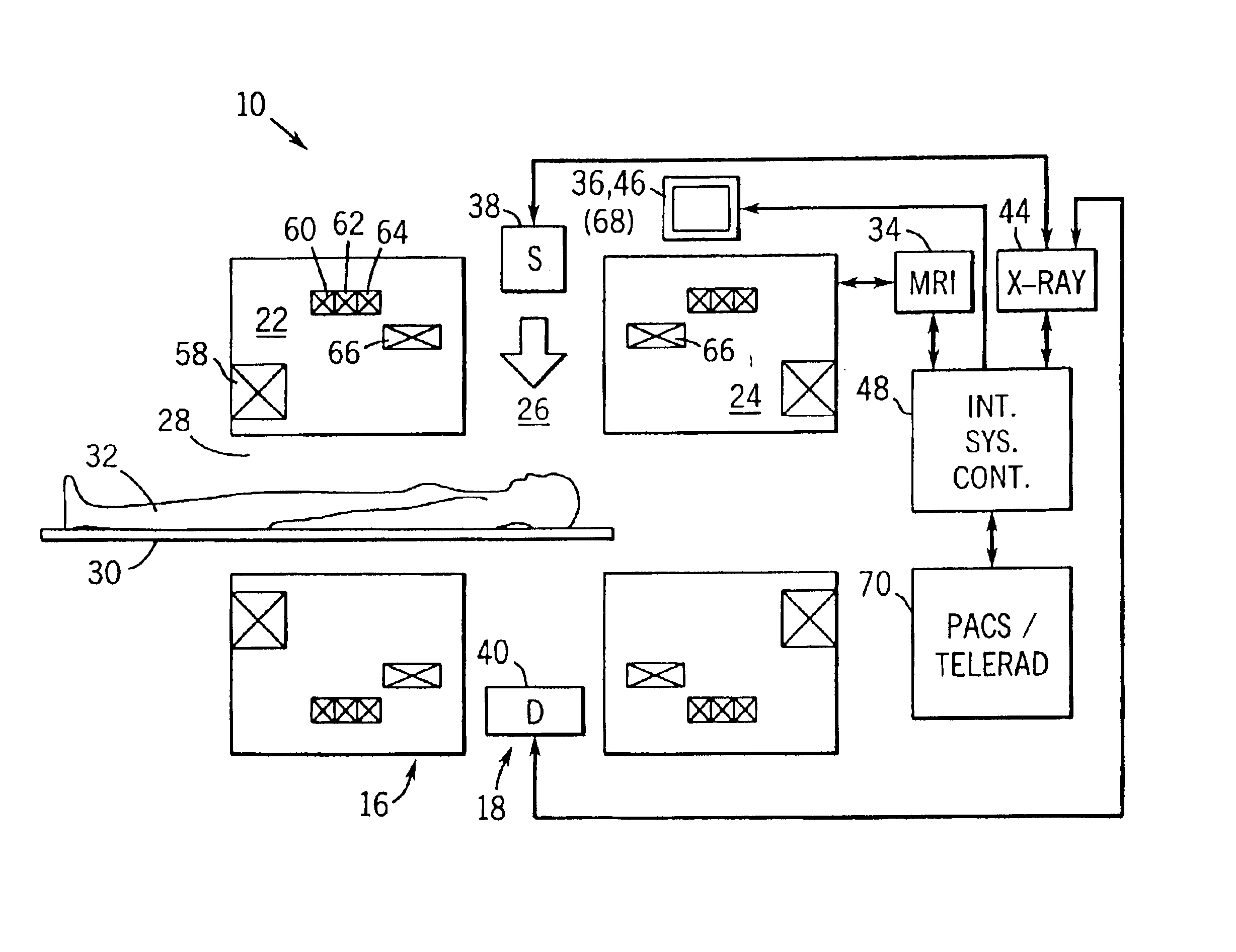

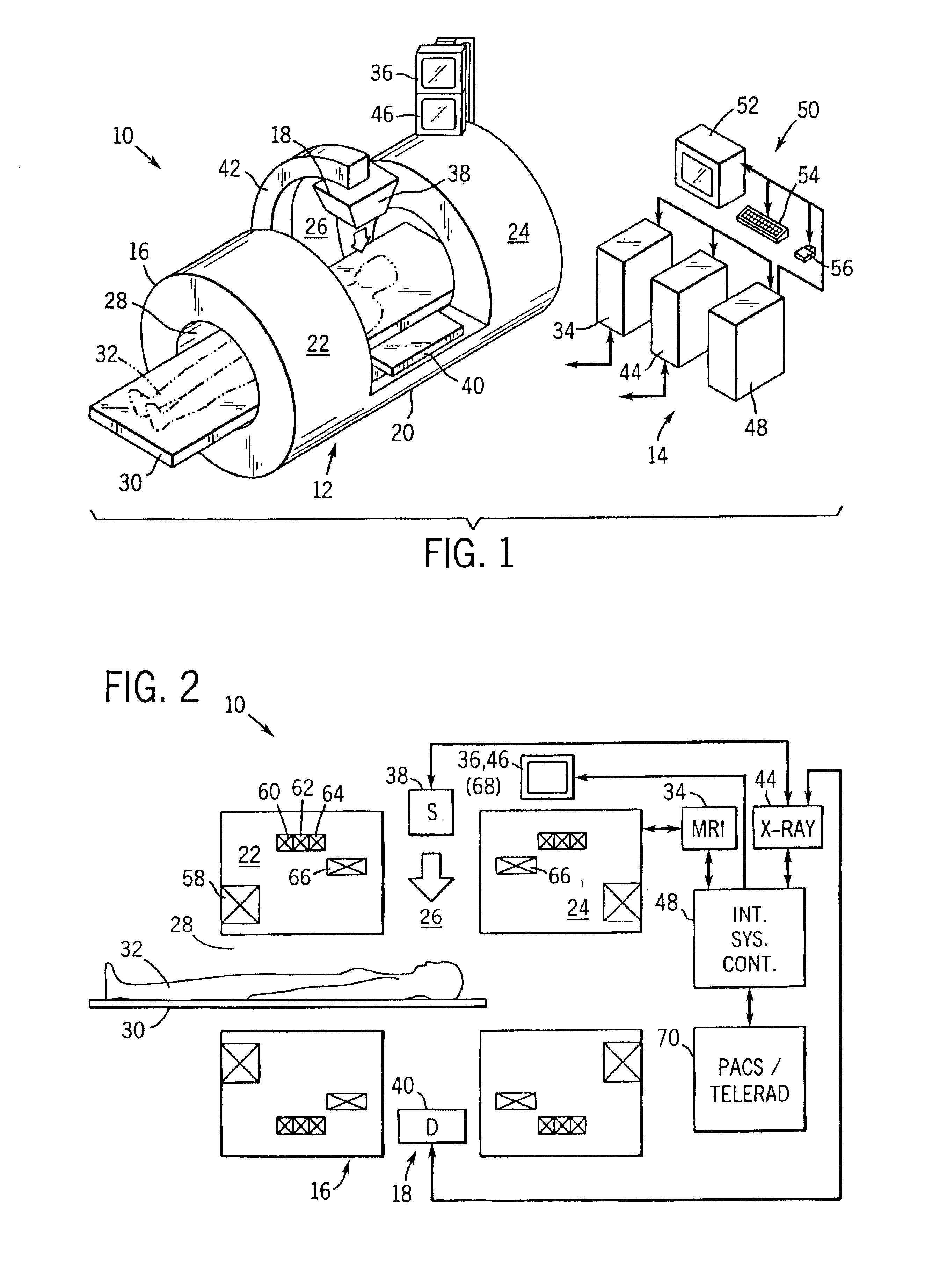

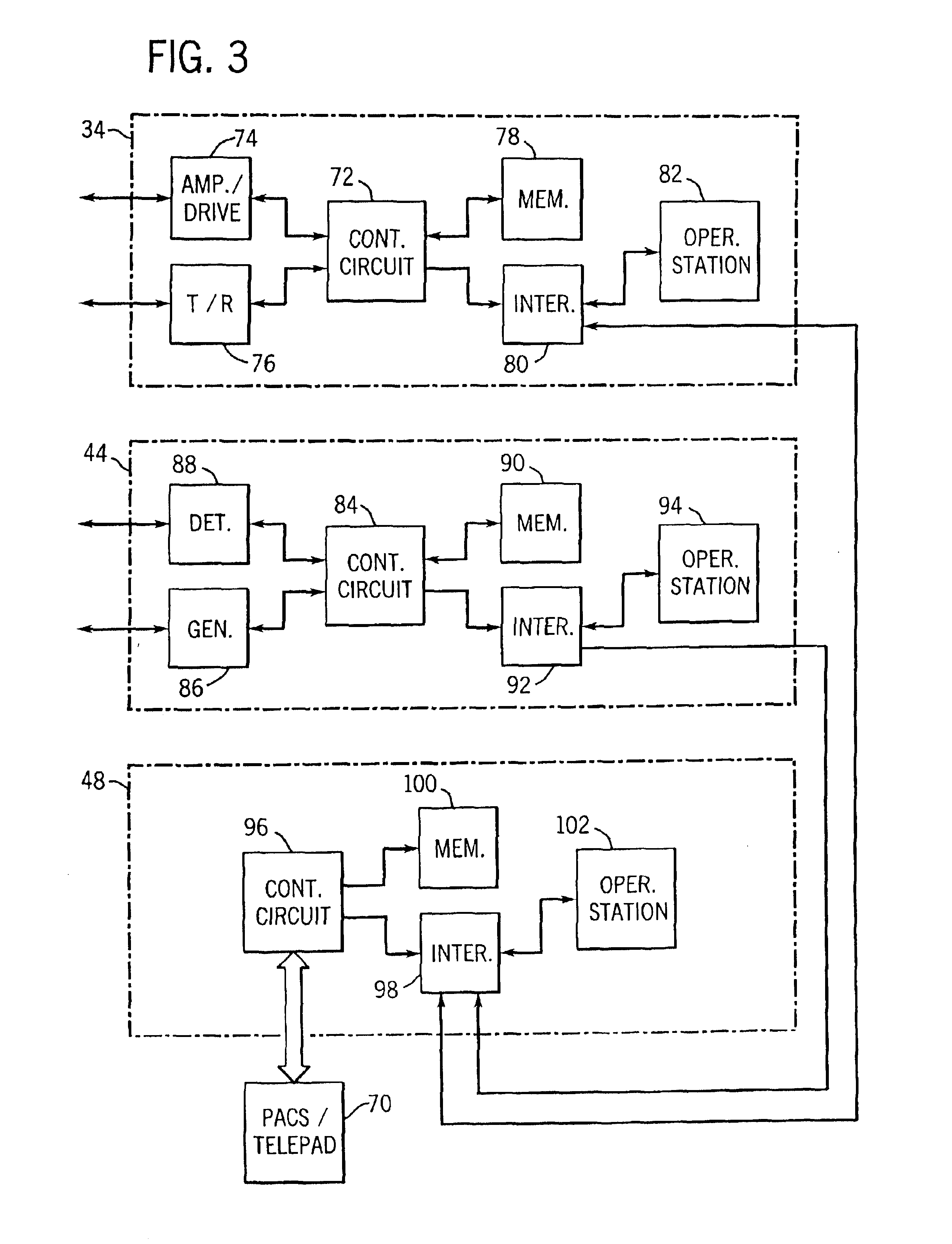

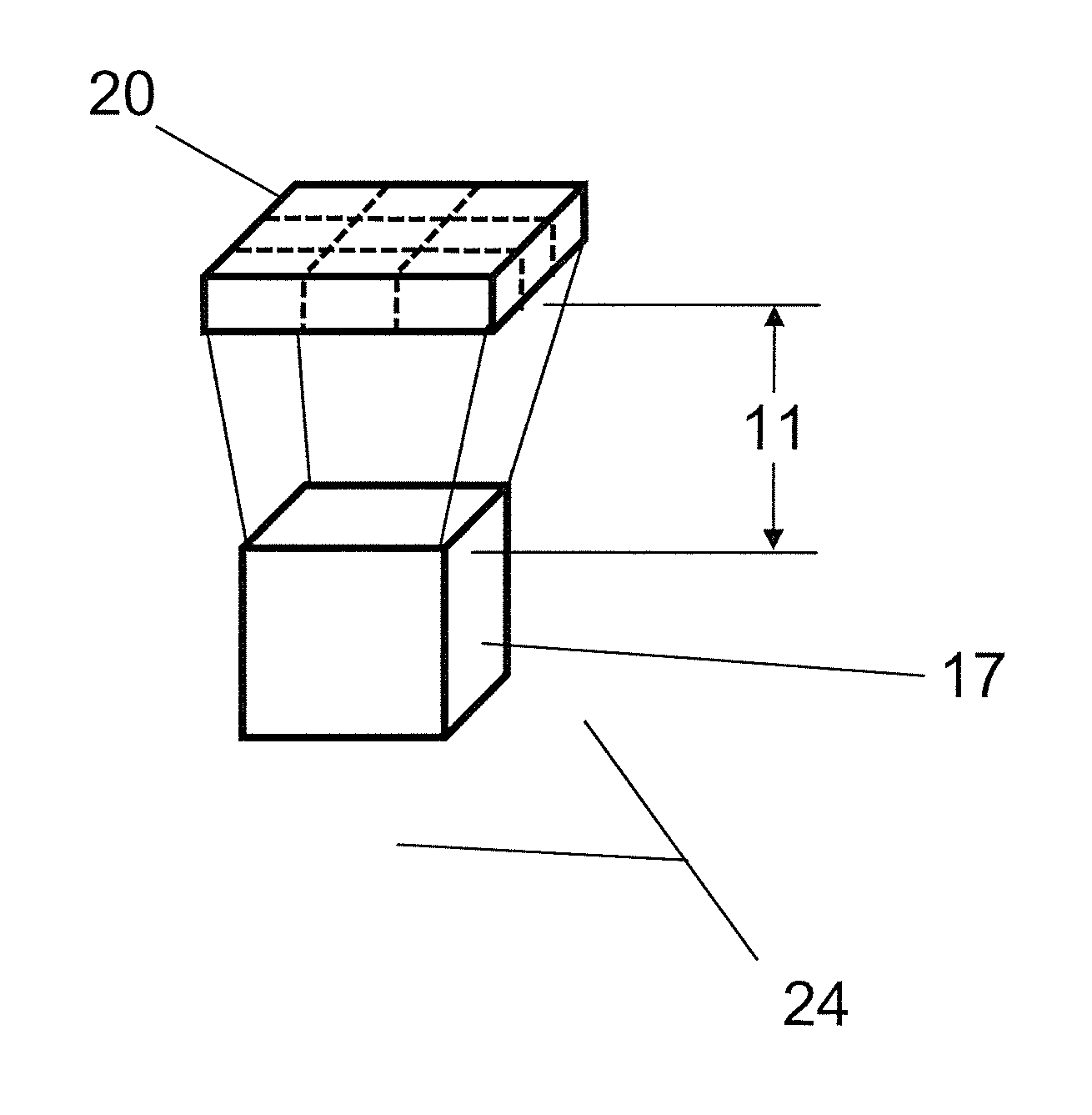

Integrated multi-modality imaging system

Inactive | US6925319B2 | Ultrasonic/sonic/infrasonic diagnostics | Magnetic measurements | Split magnet | Diagnostic Radiology Modality

An integrated, multi-modality imaging technique is disclosed. The embodiment described combines a split-magnet MRI system with a digital x-ray system. The two systems are employed together to generate images of a subject in accordance with their individual physics and imaging characteristics. The images may be displayed in real time, such as during a surgical intervention. The images may be registered with one another and combined to form a composite image in which tissues or objects difficult to image in one modality are visible. By appropriately selecting the position of an x-ray source and detector, and by programming a desired corresponding slice for MRI imaging, useful combined images may be obtained and displayed.

Owner:GENERAL ELECTRIC CO

Methods and systems of combining magnetic resonance and nuclear imaging

Inactive | US20110270078A1 | Reducing and minimizing mis-registration artifact | Reducing or minimizing mis-registration artifacts | Magnetic measurements | Diagnostic recording/measuring | Diagnostic Radiology Modality | Proton magnetic resonance

A multi-modality imaging system for imaging an object under study includes a magnetic resonance imaging (MRI) apparatus and an MRI-compatible single-photon nuclear imaging apparatus embedded within the RF coil of the MRI system, such that sequential or simultaneous imaging can be done with the two modalities using the same support bed for the object under study during the imaging session.

Owner:GAMMA MEDICA

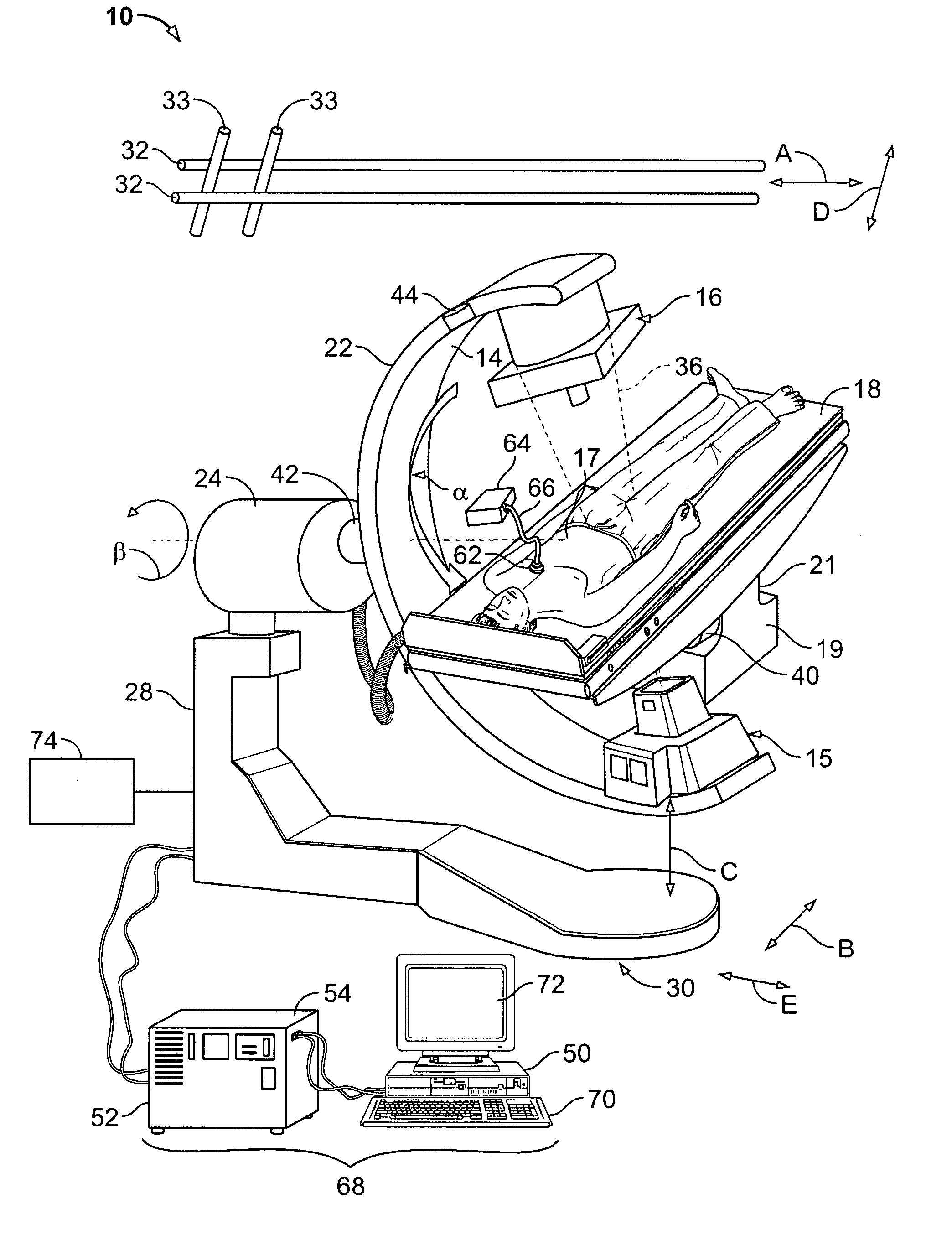

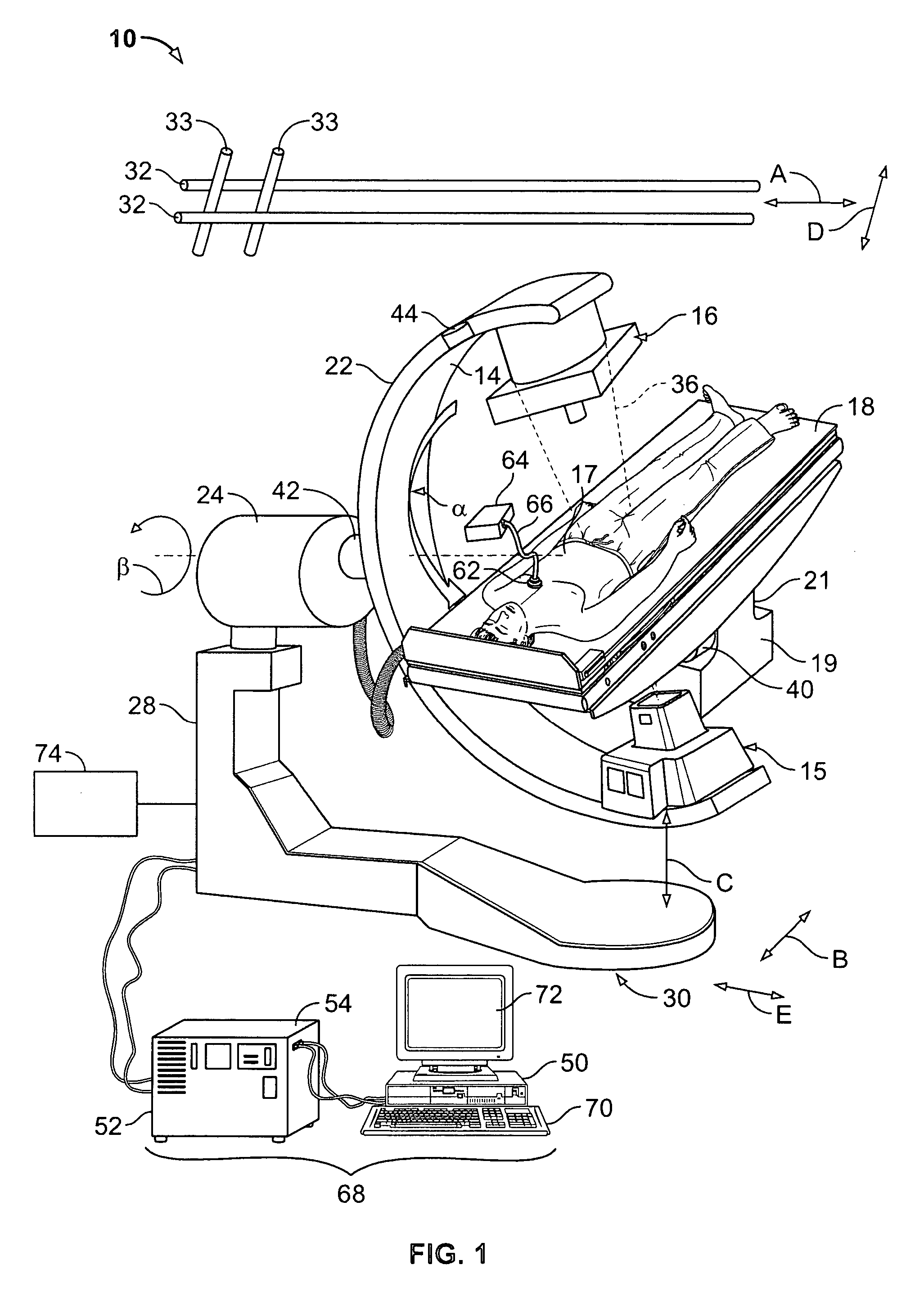

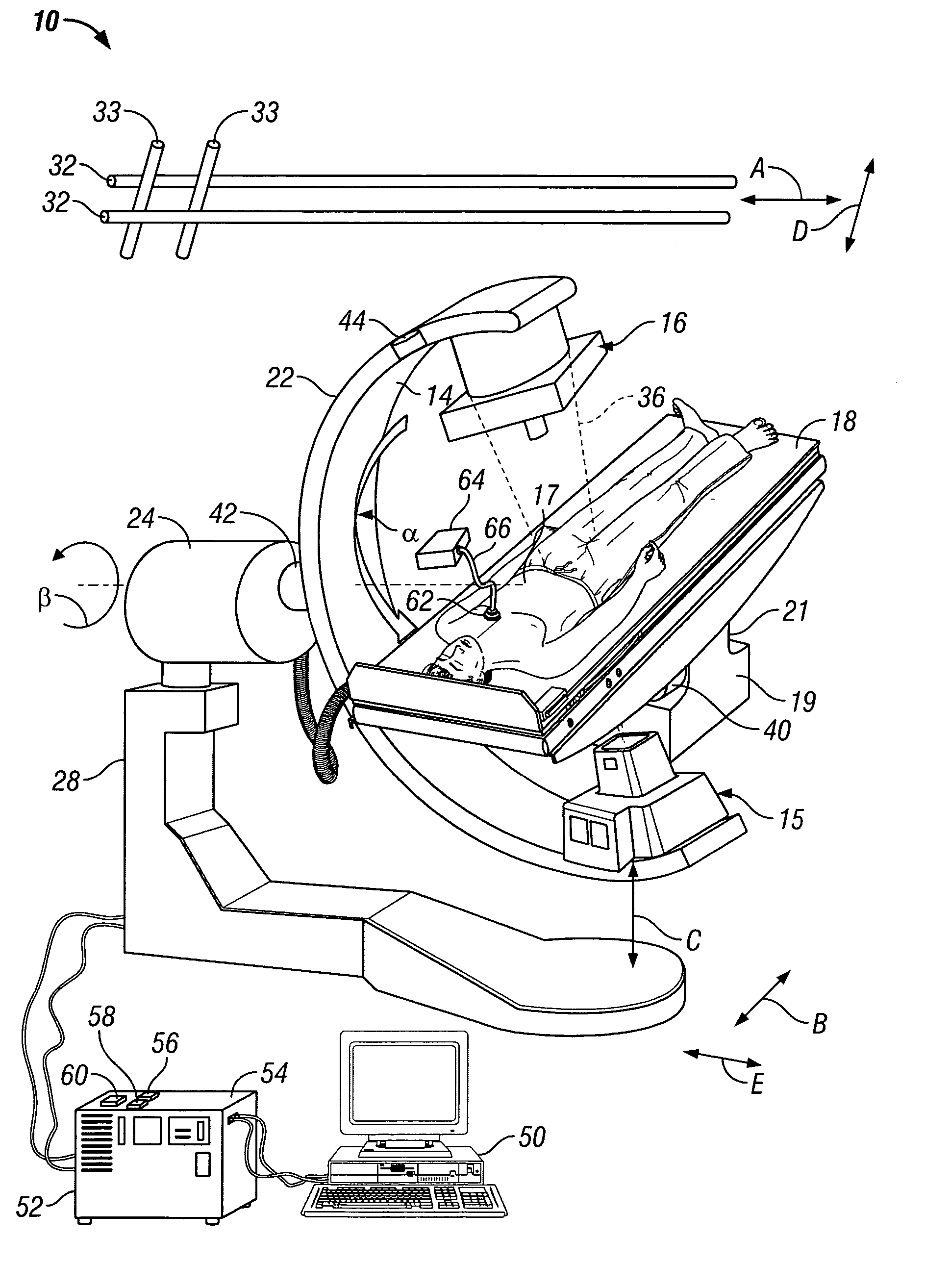

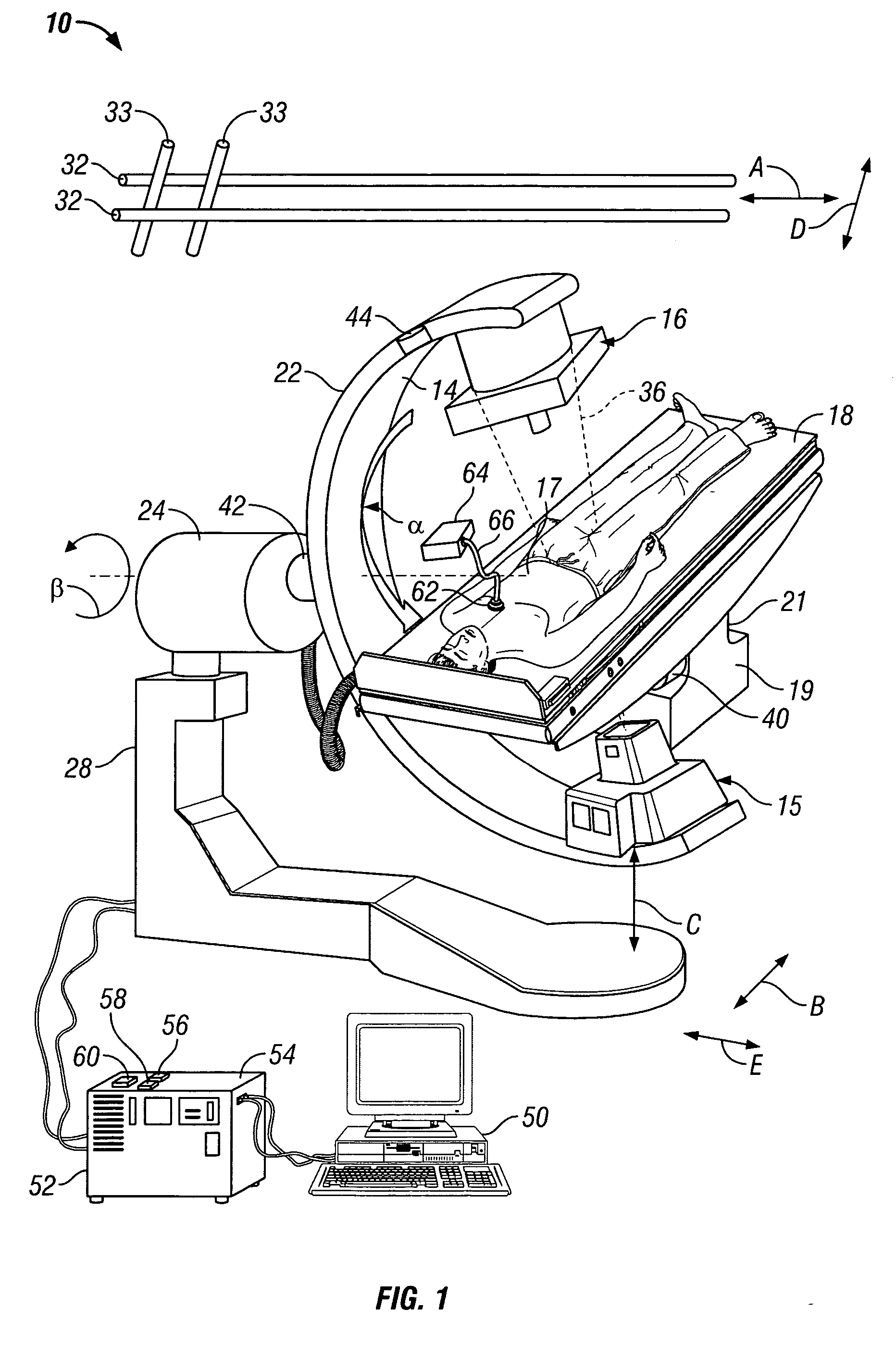

Separate and combined multi-modality diagnostic imaging system

Inactive | US20070238950A1 | Improve productivity | Apparent advantage | Ultrasonic/sonic/infrasonic diagnostics | Patient positioning for diagnostics | Nuclear medicine | Orbit

A multi-modality diagnostic imaging system includes a first imaging subsystem, such as a computed tomographic (CT) system, for performing a first imaging procedure on a subject. The first imaging subsystem has a gantry that slides along rails. A second imaging subsystem, such as a nuclear medicine system (NUC), performs a second imaging procedure on a subject. The second imaging subsystem is separate from the first imaging subsystem, but in the same room. The second imaging subsystem has a gantry or is gantryless. A patient couch supports a subject. The patient couch is supported on a couch support that is vertically adjustable and horizontally fixed. In this manner, the patient is maintained in the same position for multiple types of scans using the same couch support, without horizontal translation of the patient couch and patient.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

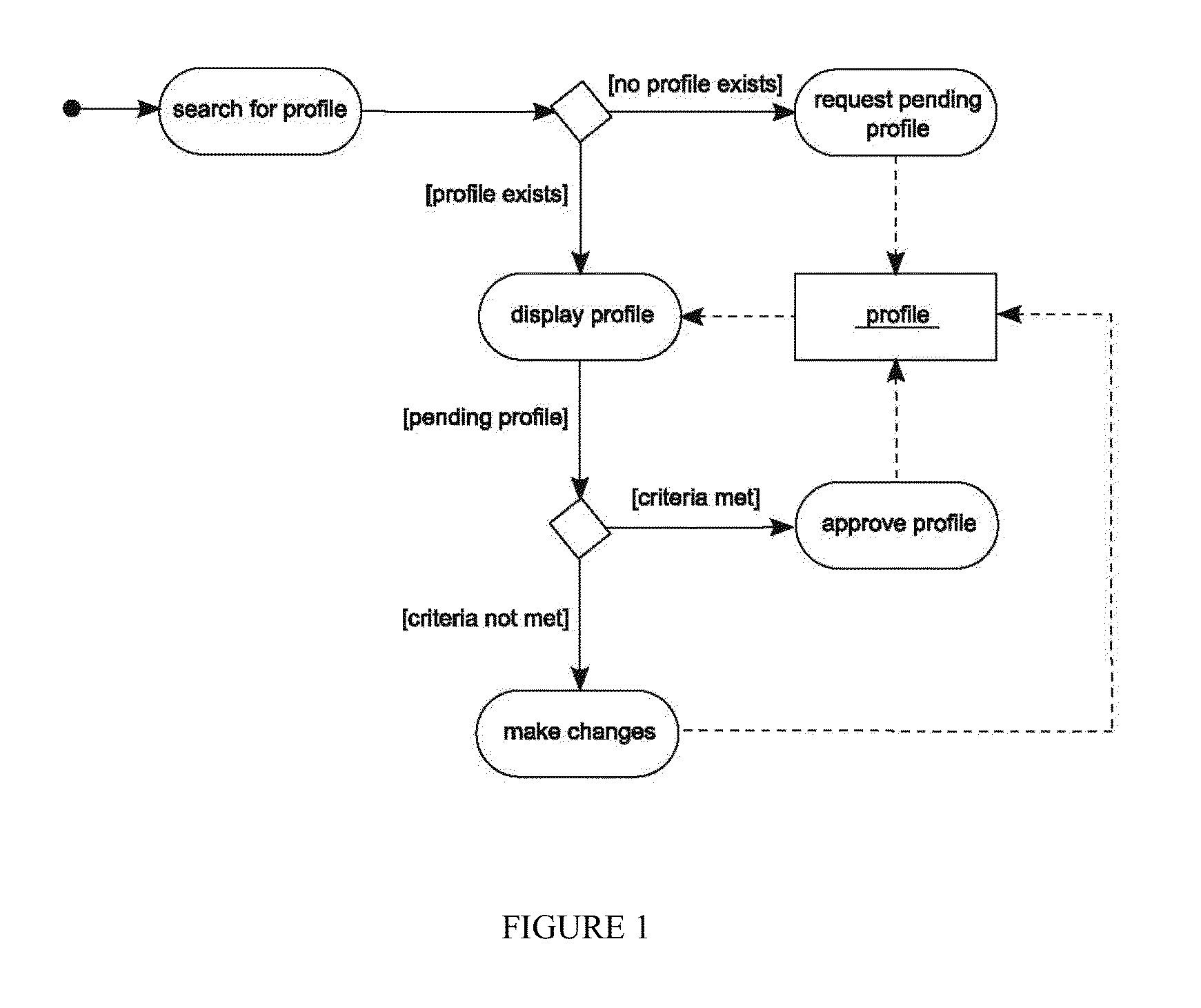

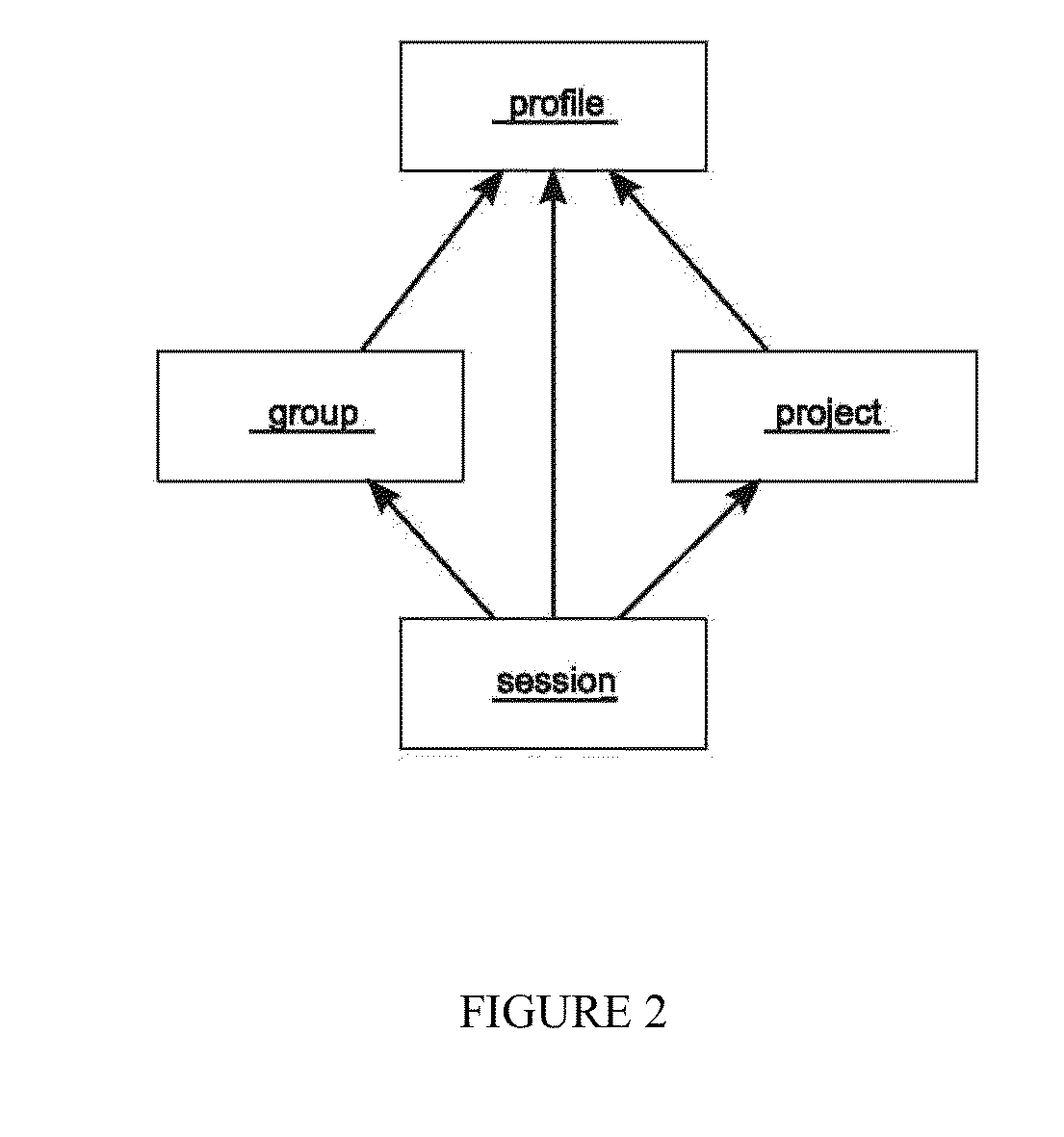

Multi-modality, multi-resource, information integration environment

Active | US20130091170A1 | Digital data processing details | Digital data authentication | Multi resource | Information integration

A multi-modality, multi-resource, information integration environment system is disclosed that comprises: (a) at least one computer readable medium capable of securely storing and archiving system data; (b) at least one computer system, or program thereon, designed to permit and facilitate web-based access of the at least one computer readable medium containing the secured and archived system data; (c) at least one computer system, or program thereon, designed to permit and facilitate resource scheduling or management; (d) at least one computer system, or program thereon, designed to monitor the overall resource usage of a core facility; and (e) at least one computer system, or program thereon, designed to track regulatory and operational qualifications.

Owner:CASE WESTERN RESERVE UNIV

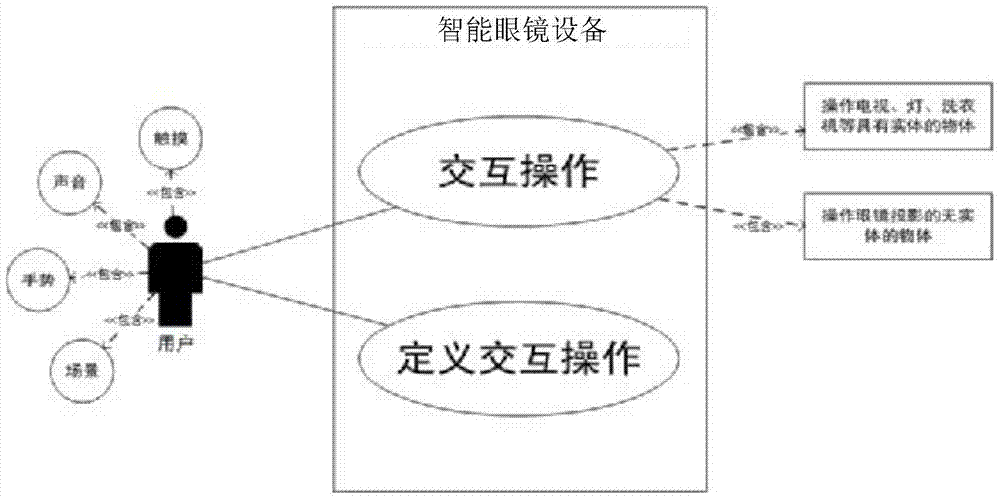

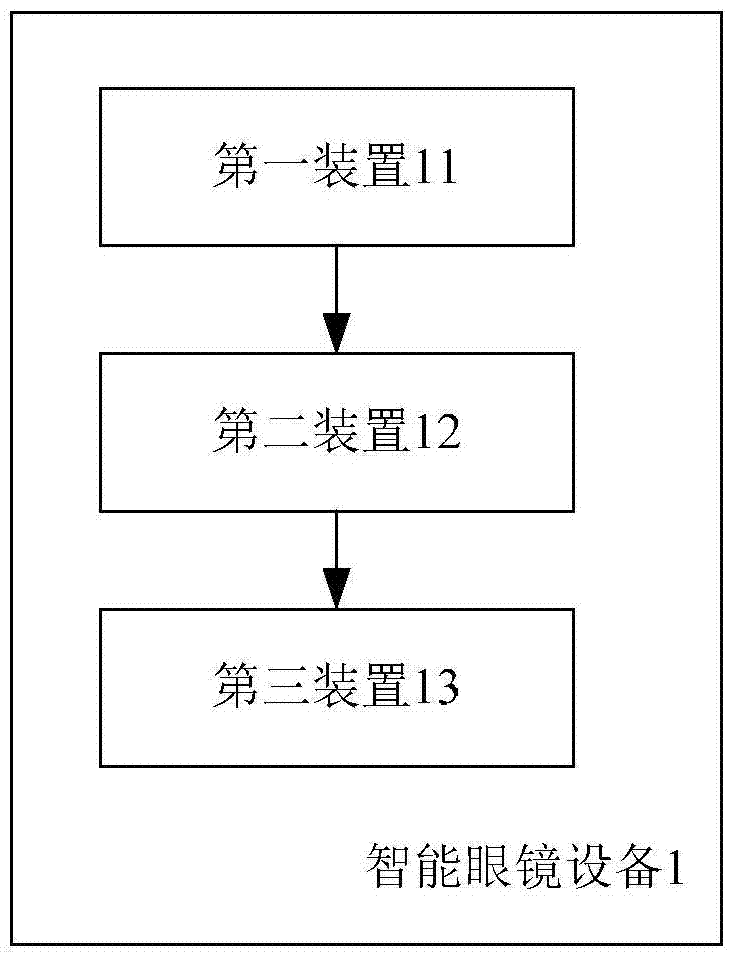

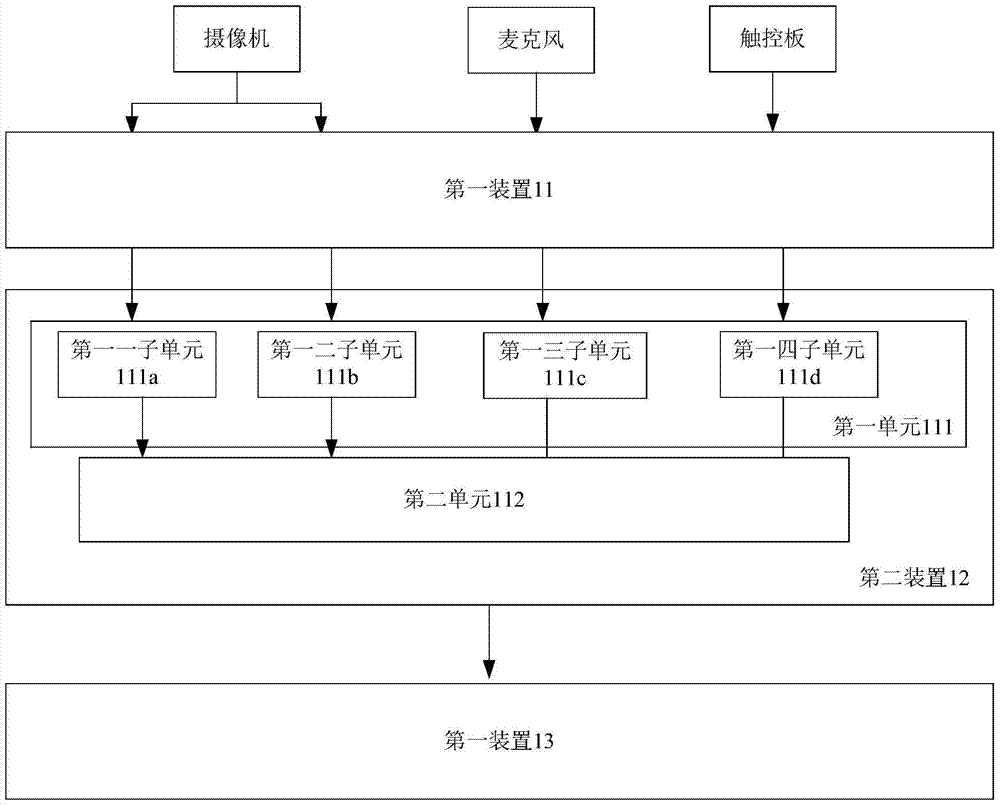

Multimodal input-based interactive method and device

Active | CN106997236A | Improve interactive experience | Increase flexibility | Input/output for user-computer interaction | Character and pattern recognition | Object based | Computer module

The invention aims to provide an intelligent glasses device and method used for performing interaction based on multimodal input and capable of enabling the interaction to be closer to natural interaction of users. The method comprises the steps of obtaining multiple pieces of input information from at least one of multiple input modules; performing comprehensive logic analysis on the input information to generate an operation command, wherein the operation command has operation elements, and the operation elements at least include an operation object, an operation action and an operation parameter; and executing corresponding operation on the operation object based on the operation command. According to the intelligent glasses device and method, the input information of multiple channels is obtained through the input modules and is subjected to the comprehensive logic analysis, the operation object, the operation action and the operation element of the operation action are determined to generate the operation command, and the corresponding operation is executed based on the operation command, so that the information is subjected to fusion processing in real time, the interaction of the users is closer to an interactive mode of a natural language, and the interactive experience of the users is improved.

Owner:HISCENE INFORMATION TECH CO LTD
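
The abstract of CN106997236A above describes merging input from several channels into one operation command made up of an operation object, an operation action and an operation parameter. A rough sketch of that merge step follows. The channel names, the fixed priority order used to resolve conflicts, and the dictionary input format are assumptions for illustration; the patent's "comprehensive logic analysis" is not specified here.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical channel priority: earlier channels win when two channels
# report the same operation element.
PRIORITY = ("voice", "gesture", "gaze")


@dataclass
class OperationCommand:
    obj: str
    action: str
    parameter: Optional[str] = None


def build_command(inputs: dict[str, dict[str, str]]) -> Optional[OperationCommand]:
    """Merge per-channel partial readings into one operation command.

    inputs maps a channel name to the operation elements it recognized,
    e.g. {"gaze": {"object": "tv"}}. Returns None when the object or the
    action is still missing after merging.
    """
    merged: dict[str, str] = {}
    for channel in PRIORITY:
        for element, value in inputs.get(channel, {}).items():
            merged.setdefault(element, value)
    if "object" not in merged or "action" not in merged:
        return None
    return OperationCommand(merged["object"], merged["action"], merged.get("parameter"))


# Gaze selects the object while voice supplies the action and its parameter.
print(build_command({
    "gaze": {"object": "tv"},
    "voice": {"action": "set_volume", "parameter": "20"},
}))
```

A fixed priority order is the simplest possible conflict rule; a real system would more likely weigh recognition confidence and timing across channels.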

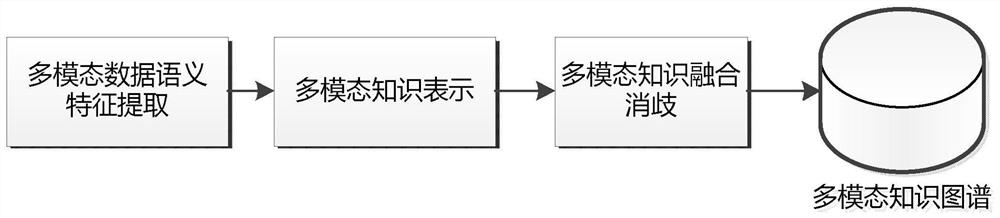

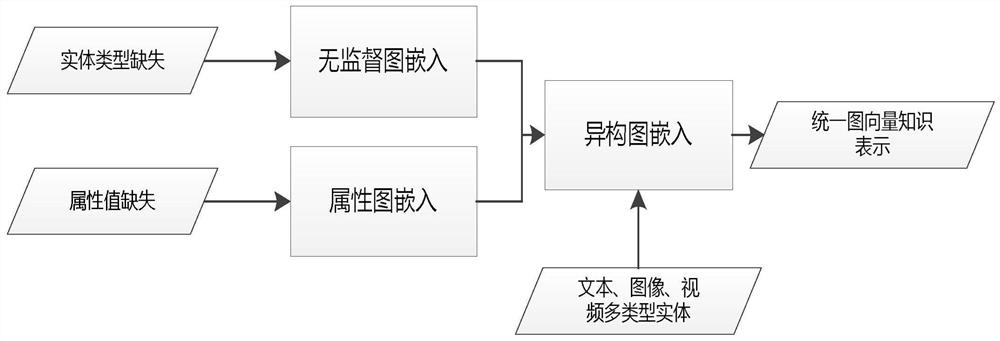

Multi-modal knowledge graph construction method

Pending | CN112200317A | Rich knowledge type | Three-dimensional knowledge type | Knowledge representation | Special data processing applications | Feature extraction | Engineering

The invention discloses a multi-modal knowledge graph construction method and relates to knowledge engineering technology in the field of big data. The method is realized through the following technical scheme. First, multi-modal data semantic features are extracted based on a multi-modal data feature representation model: feature extraction models based on pre-trained models are constructed for texts, images, audio, video and the like, and single-modal semantic feature extraction is completed for each. Second, different types of data are projected into the same vector space for representation on the basis of unsupervised graph, attribute graph, heterogeneous graph embedding and other modes, so as to realize cross-modal multi-modal knowledge representation. Finally, the two knowledge graphs to be fused and aligned are each converted into vector representations; based on the obtained multi-modal knowledge representation, the mapping relation of entity pairs between the knowledge graphs is learned from prior alignment data, multi-modal knowledge fusion and disambiguation are completed, the results are decoded and mapped to the corresponding nodes in the knowledge graphs, and a new fused graph with its entities and attributes is generated.

Owner:10TH RES INST OF CETC
