Method and system for expanding stand-alone graph neural network training to distributed training, and medium

A neural network training technology, applied in the field of expanding stand-alone graph neural network training to distributed training. It addresses the problems that backpropagation logic is complex and error-prone and that users find it difficult to verify its correctness. Its stated effects are ease of use, a low learning cost, and guaranteed training accuracy, speed, and parameter convergence.


Detailed Description of Embodiments

[0041] The present invention is further described below with reference to the accompanying drawings.

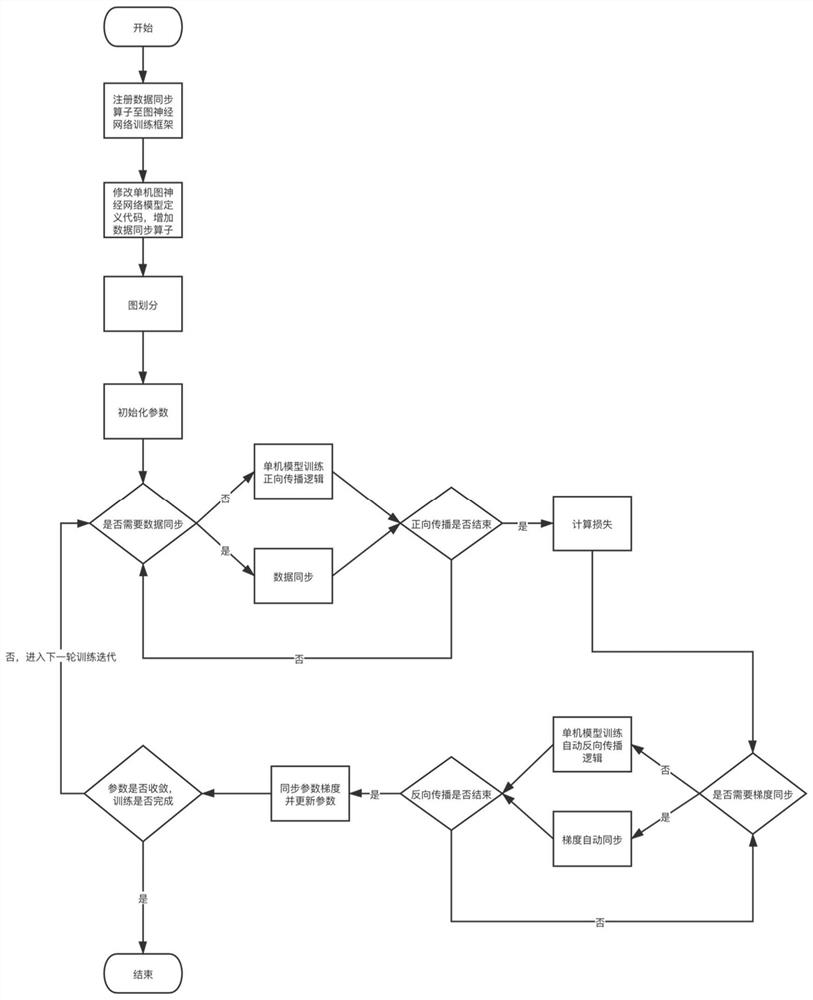

[0042] Figure 1 shows the specific flow of the method of the present invention for expanding stand-alone graph neural network training to distributed training. The disclosed embodiment is described in detail below with reference to Figure 2:

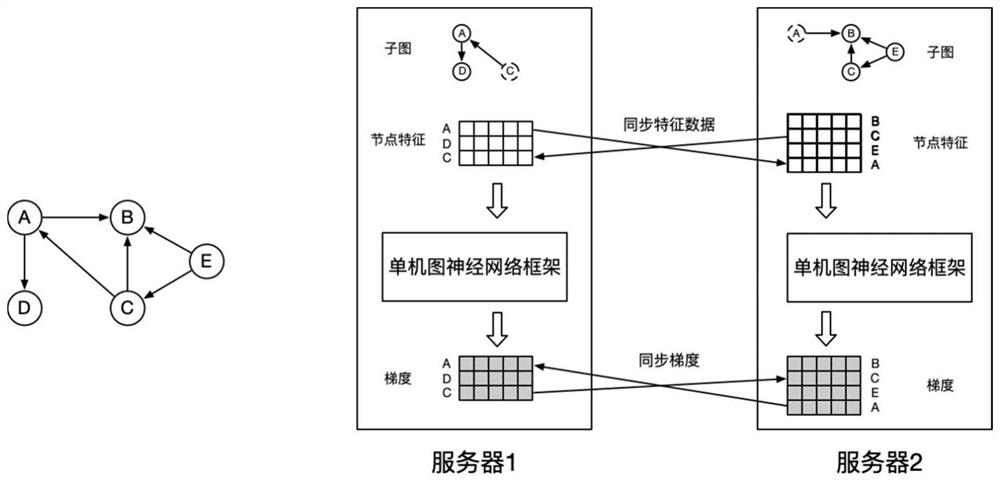

[0043] The original graph in Figure 2 has five nodes, A, B, C, D and E, connected by the edges shown in the figure. Each node holds a multi-dimensional initial feature tensor and may also carry a label. The embodiment uses two servers for training.
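Splitting the graph across two servers means some nodes have neighbours stored on the other server, and those features must be synchronized before aggregation. The sketch below shows that bookkeeping under stated assumptions: the edge list and the node-to-server assignment are hypothetical placeholders (the real edges appear only in Figure 2), and `build_partitions` is an illustrative helper, not part of the patented method.

```python
from collections import defaultdict

# HYPOTHETICAL data: the real edges of Figure 2 are only in the drawing.
EDGES = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D"), ("D", "E")]
# HYPOTHETICAL assignment of the five nodes to the two servers (0 and 1).
OWNER = {"A": 0, "B": 0, "C": 0, "D": 1, "E": 1}


def build_partitions(edges, owner, num_servers=2):
    """Return {server: (owned_nodes, mirror_nodes)}.

    mirror_nodes are remote neighbours whose features the server must
    receive before it can aggregate over every neighbour of its own nodes.
    """
    owned = defaultdict(set)
    mirrors = defaultdict(set)
    for node, server in owner.items():
        owned[server].add(node)
    for u, v in edges:
        # Edges are treated as undirected: each endpoint reads the other.
        for dst, src in ((u, v), (v, u)):
            if owner[src] != owner[dst]:
                mirrors[owner[dst]].add(src)
    return {s: (sorted(owned[s]), sorted(mirrors[s])) for s in range(num_servers)}


if __name__ == "__main__":
    for server, (own, mir) in build_partitions(EDGES, OWNER).items():
        print(f"server {server}: owns {own}, mirrors {mir}")
```

With these placeholder edges, server 0 owns A, B and C and needs a mirror of D, while server 1 owns D and E and needs mirrors of B and C.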

[0044] In step 1, the data synchronization operation is registered as an operator of the stand-alone graph neural network framework.
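The source does not give the operator's interface. The following is a minimal sketch, assuming the operator copies the features of remote neighbour ("mirror") nodes from their owning server in the forward pass and returns those nodes' gradients to the owner, summed, in the backward pass. This is a common halo-exchange pattern that the text does not confirm. Two servers are simulated in one process with plain dictionaries; `SyncOp`, `dot` and the node split are hypothetical. In a real framework the forward/backward pair would be registered through that framework's own custom-operator mechanism (for example, PyTorch's `torch.autograd.Function`).

```python
class SyncOp:
    """HYPOTHETICAL data-synchronization operator, simulated in one process.

    forward:  each server receives copies of its mirror nodes' features
              from the owning servers.
    backward: each mirror node's gradient is sent back to its owner and
              summed into the owner's gradient (the adjoint of a copy).
    """

    def __init__(self, owner, mirrors):
        self.owner = owner      # node -> id of the server that owns it
        self.mirrors = mirrors  # server id -> remote nodes it needs

    def forward(self, local_feats):
        # local_feats: server id -> {owned node: feature vector}
        out = {}
        for server, feats in local_feats.items():
            full = {n: list(f) for n, f in feats.items()}
            for n in self.mirrors.get(server, []):
                # Stand-in for a network receive from the owning server.
                full[n] = list(local_feats[self.owner[n]][n])
            out[server] = full
        return out

    def backward(self, grads):
        # grads: server id -> {owned or mirror node: gradient vector}
        result = {}
        for server, g in grads.items():
            for n, vec in g.items():
                owner_grads = result.setdefault(self.owner[n], {})
                acc = owner_grads.setdefault(n, [0.0] * len(vec))
                for i, x in enumerate(vec):
                    acc[i] += x
        return result


def dot(a, b):
    """Inner product over every (server, node, component) present in a."""
    return sum(x * y
               for s in a for n in a[s]
               for x, y in zip(a[s][n], b[s][n]))


if __name__ == "__main__":
    op = SyncOp({"A": 0, "B": 0, "C": 0, "D": 1, "E": 1},
                {0: ["D"], 1: ["B", "C"]})
    x = {0: {"A": [1.0], "B": [2.0], "C": [3.0]}, 1: {"D": [4.0], "E": [5.0]}}
    y = op.forward(x)
    g = {s: {n: [1.0] for n in y[s]} for s in y}
    # Adjoint check: <forward(x), g> must equal <x, backward(g)>.
    print(dot(y, g), dot(x, op.backward(g)))  # 24.0 24.0
```

The final check is one way to test a hand-written backward pass: for a linear operator such as this copy, the inner product of its output with any gradient must equal the inner product of its input with the backward result. Once the pair is correct and registered as an operator, the framework can chain it with the other layers automatically, so users do not need to write it themselves.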

[0045] In step 2, the code of the stand-alone graph neural network model is modified by adding a line of code that calls the data synchronization operator defined in step 1 bef...
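The sentence above is truncated, so the source does not say exactly where the added call goes. The sketch below assumes it is placed before neighbour aggregation inside a layer, so that remote neighbour features are present when they are read. `mean_aggregate`, `layer_stand_alone`, `layer_distributed` and the `sync` callable are hypothetical names. The weight-free mean aggregation is chosen only for brevity.

```python
def mean_aggregate(feats, neighbours, nodes):
    """Mean of neighbour features for each node in `nodes` (no weights).

    A node without neighbours keeps its own feature (an arbitrary choice).
    """
    out = {}
    for n in nodes:
        nbrs = neighbours.get(n, [])
        if not nbrs:
            out[n] = list(feats[n])
            continue
        dim = len(feats[n])
        out[n] = [sum(feats[m][i] for m in nbrs) / len(nbrs) for i in range(dim)]
    return out


def layer_stand_alone(feats, neighbours, nodes):
    # Original single-machine layer: all features are already local.
    return mean_aggregate(feats, neighbours, nodes)


def layer_distributed(feats, neighbours, nodes, sync):
    feats = sync(feats)  # the single added line (HYPOTHETICAL placement)
    return mean_aggregate(feats, neighbours, nodes)


def fake_sync(feats):
    # HYPOTHETICAL stand-in for the synchronization operator on server 1:
    # it adds the remote features of B and C without mutating the input.
    return {**feats, "B": [2.0], "C": [3.0]}


if __name__ == "__main__":
    # HYPOTHETICAL view from server 1, which owns D and E; B and C are remote.
    local = {"D": [4.0], "E": [5.0]}
    nbrs = {"D": ["B", "C", "E"], "E": ["D"]}
    print(layer_distributed(local, nbrs, ["D", "E"], fake_sync))
```

Calling `layer_stand_alone` with the same server-local data raises `KeyError` for B, which is the gap the synchronization call fills. Apart from that one line, the two layers are identical, which matches the step's intent of leaving the stand-alone model code otherwise unchanged.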

