260 results for "Computational logic" patented technology

Computational logic is the use of logic to perform or reason about computation. It bears a similar relationship to computer science and engineering as mathematical logic bears to mathematics and as philosophical logic bears to philosophy. It is synonymous with "logic in computer science".

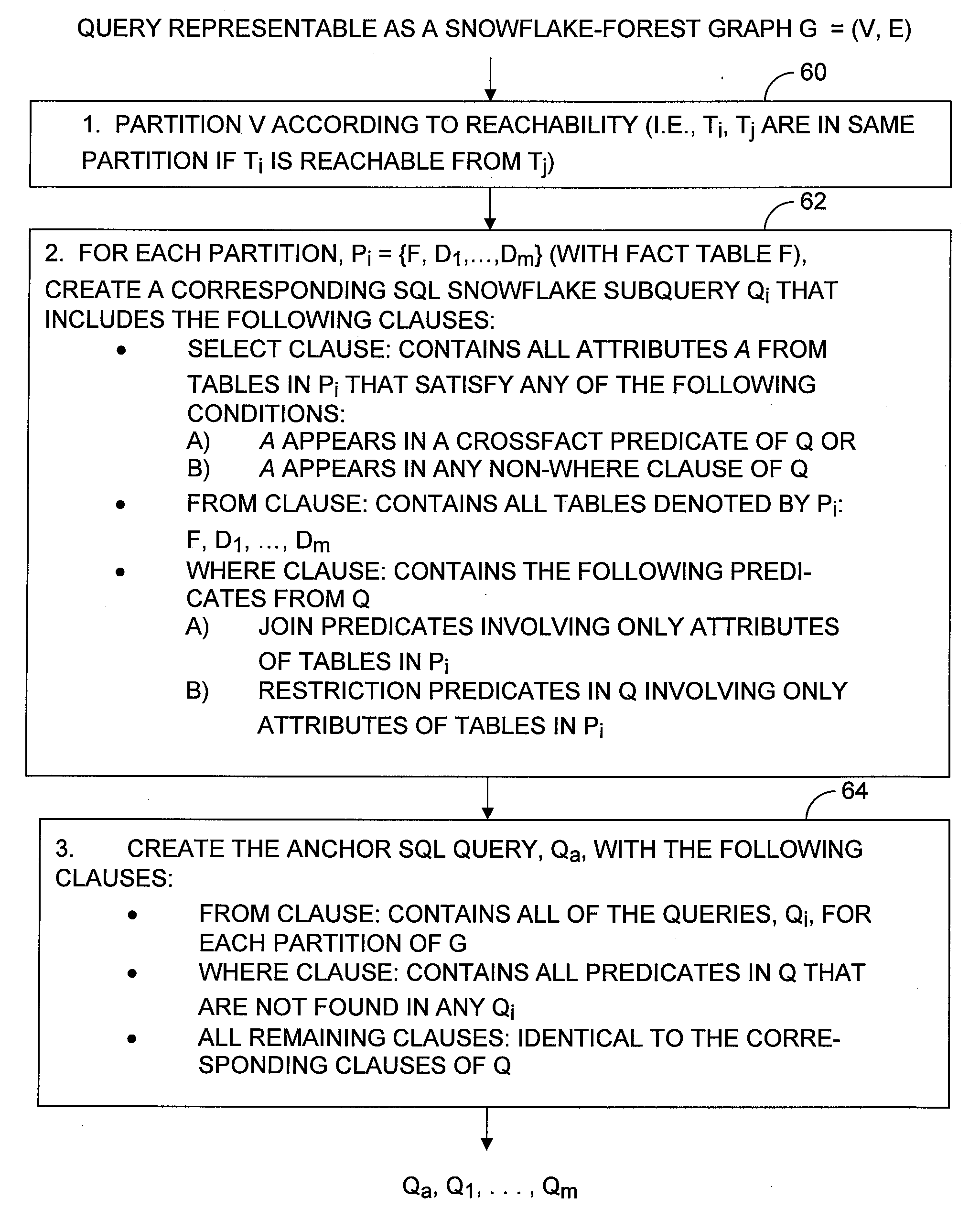

Query Optimizer

Active · US20080033914A1 · Digital data processing details · Multi-dimensional databases · Query optimization · Computational logic

For a database query that defines a plurality of separate snowflake schemas, a query optimizer computes, separately for each snowflake schema, a logical access plan for obtaining from that schema's tables a record set that includes the data the query requests from those tables. The query optimizer also computes a logical access plan for obtaining the query's results from the record sets that executing those per-schema plans will produce.

Owner:MICRO FOCUS LLC

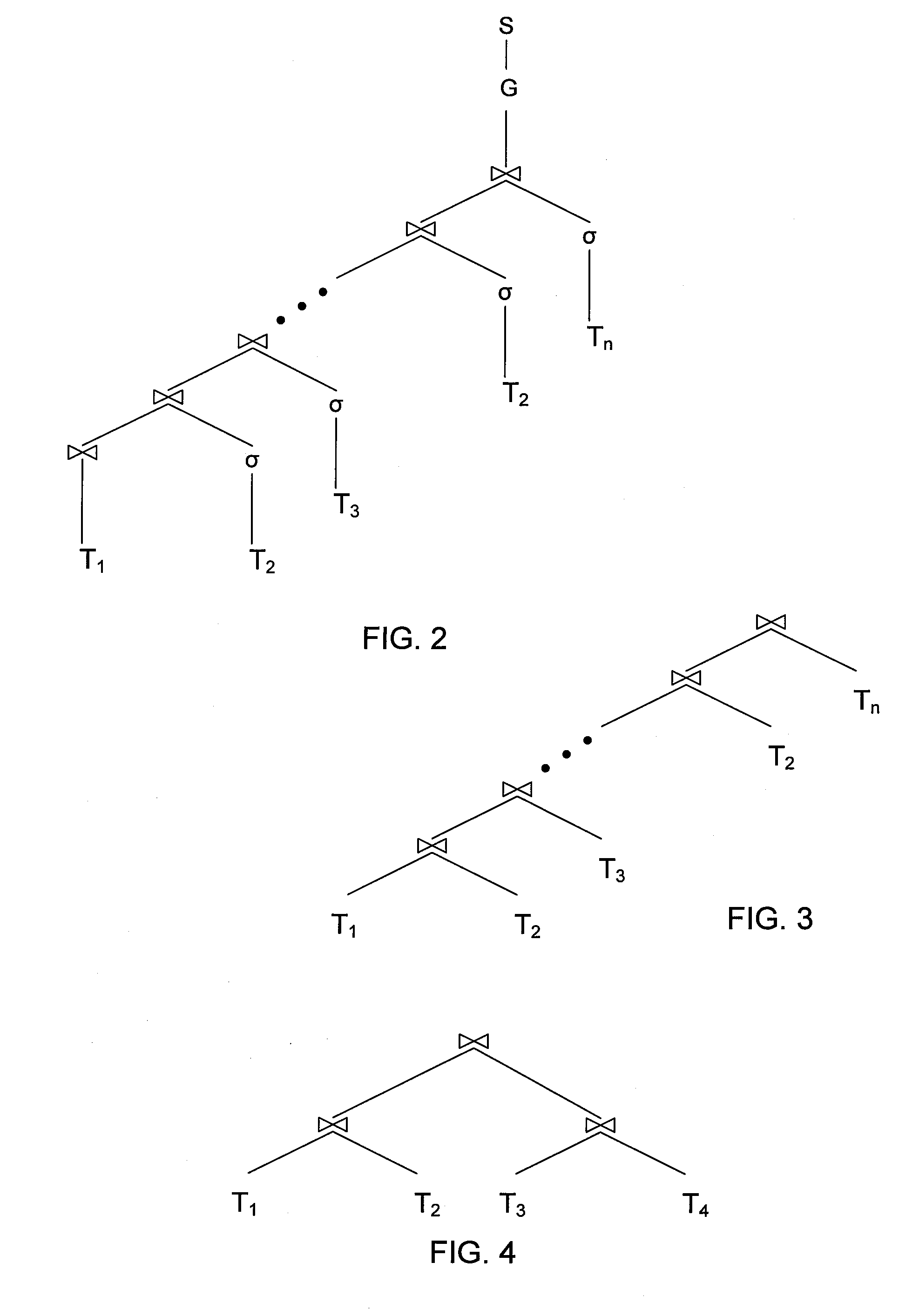

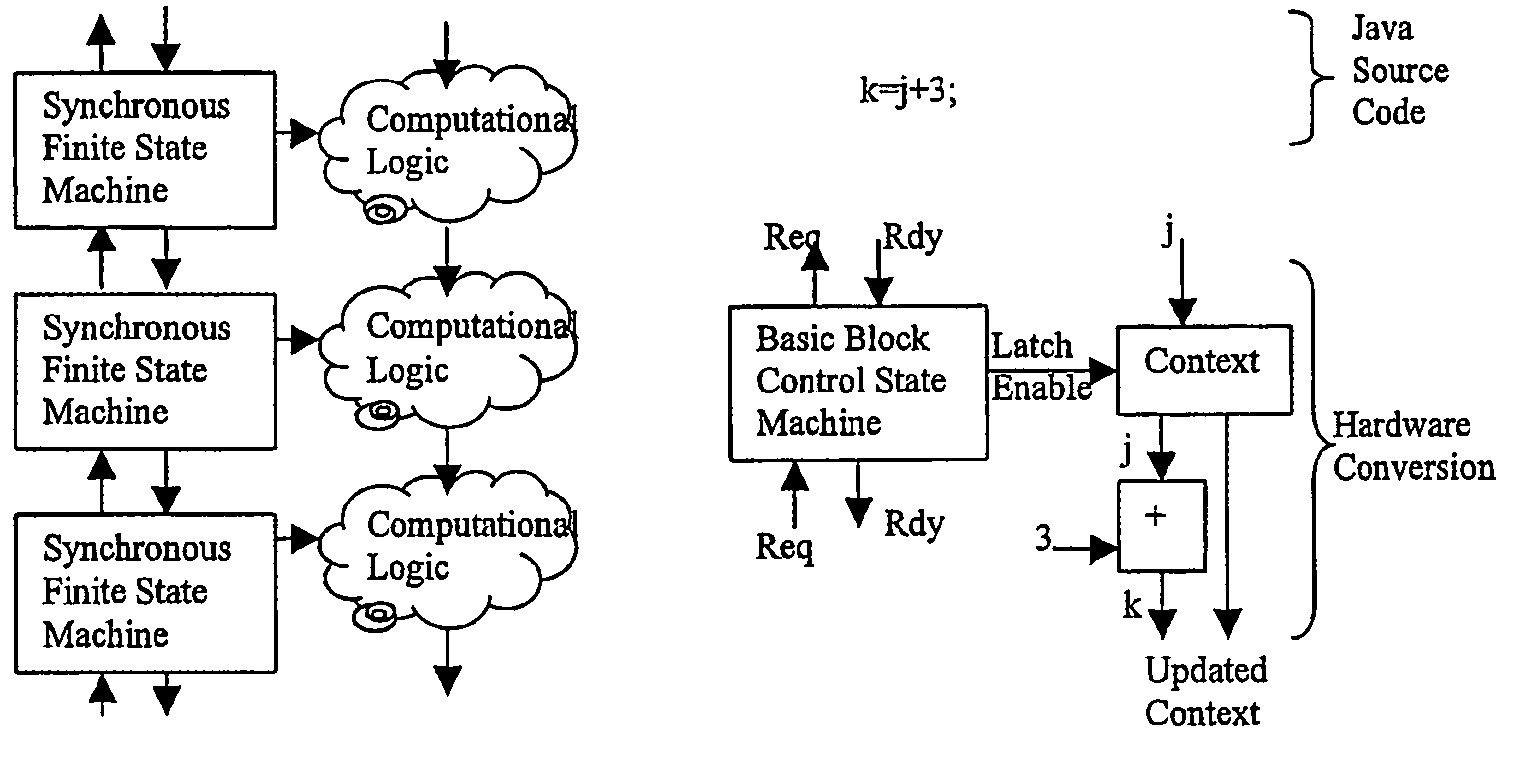

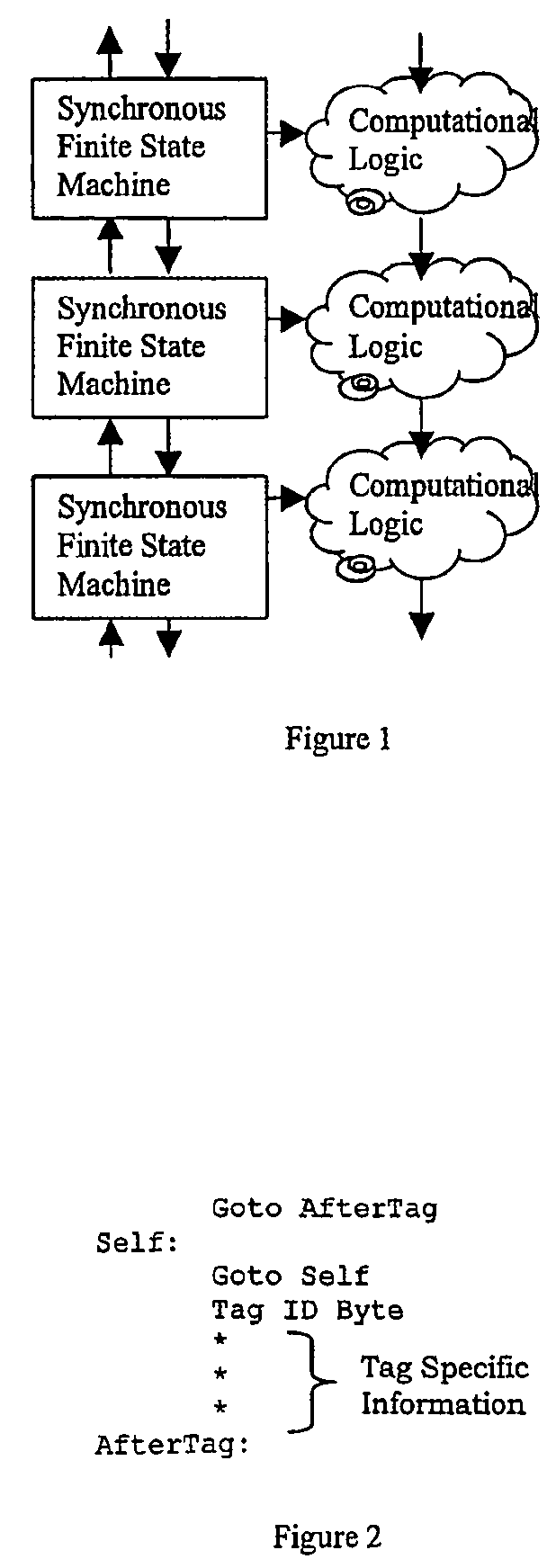

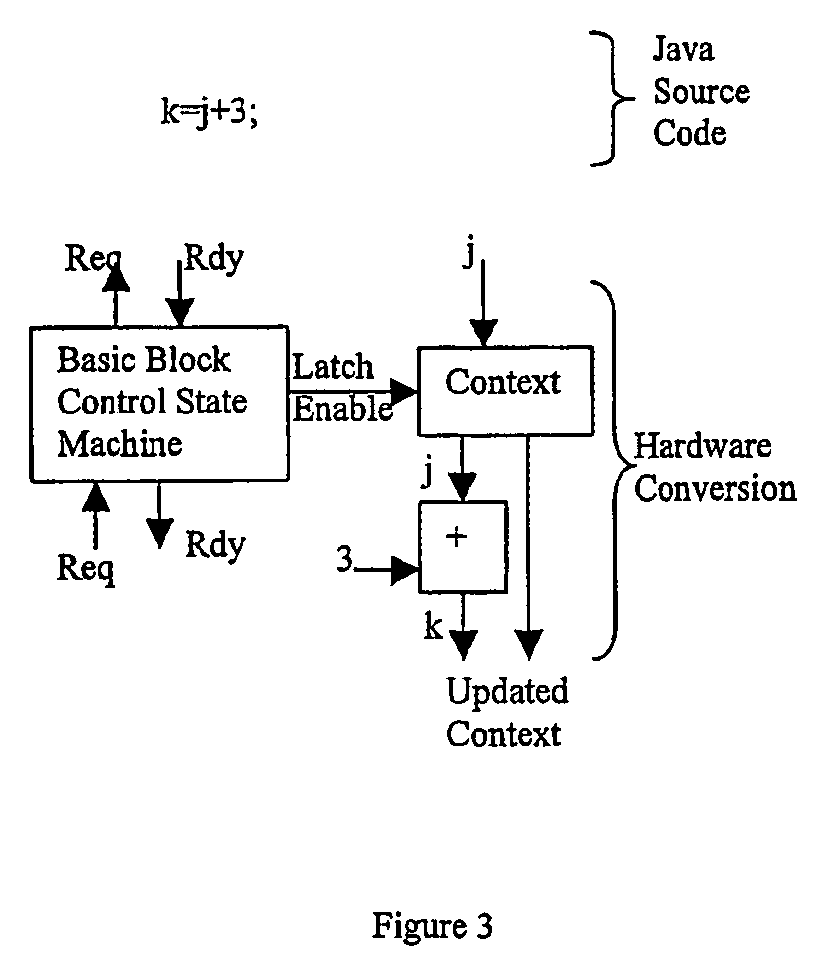

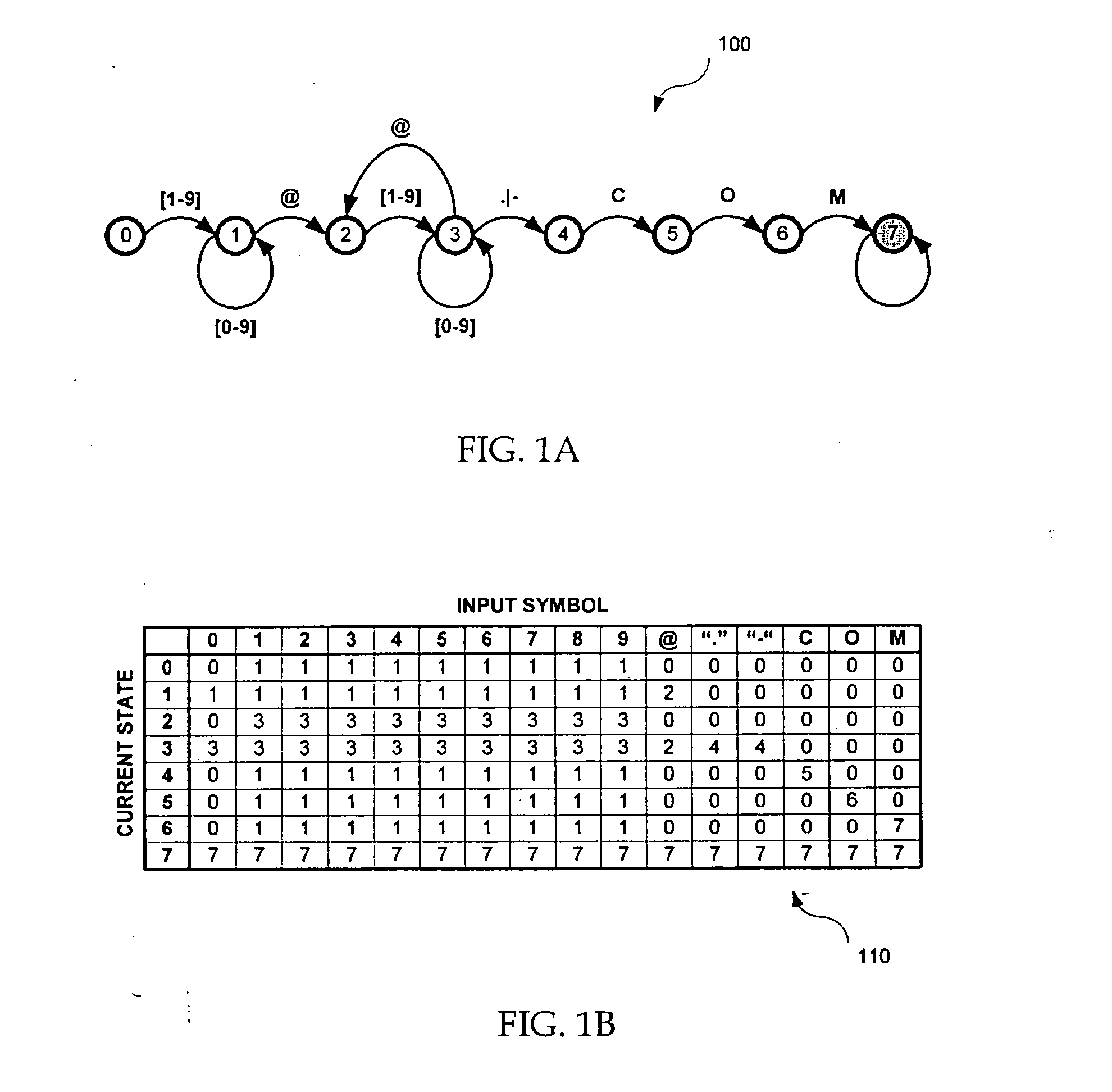

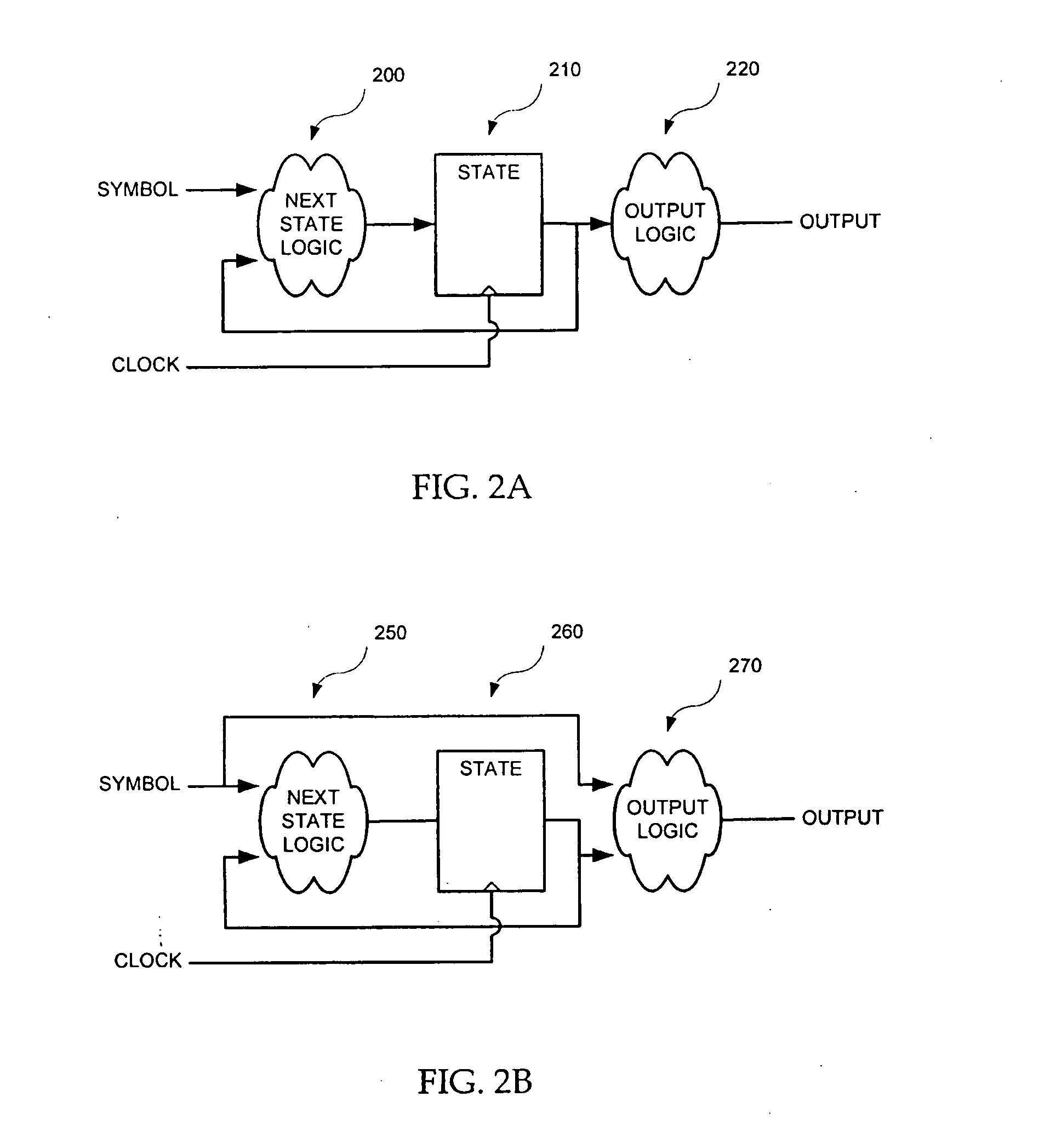

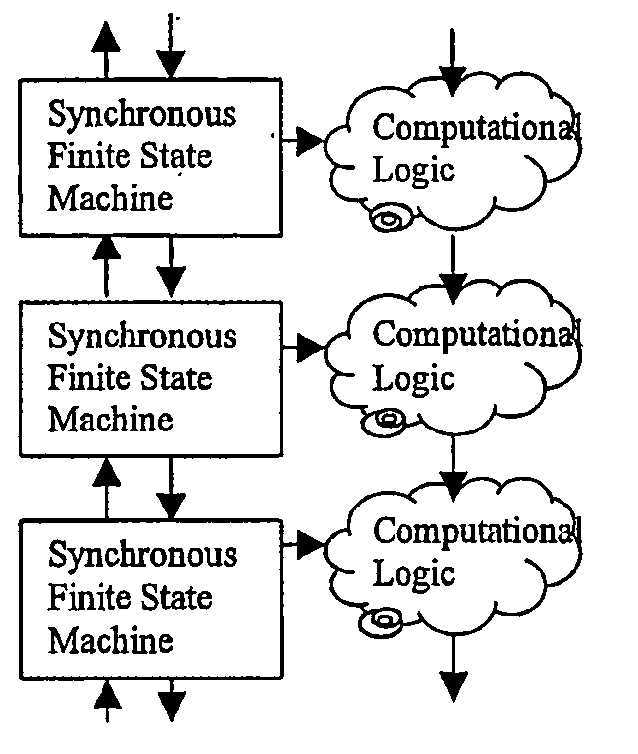

System of finite state machines

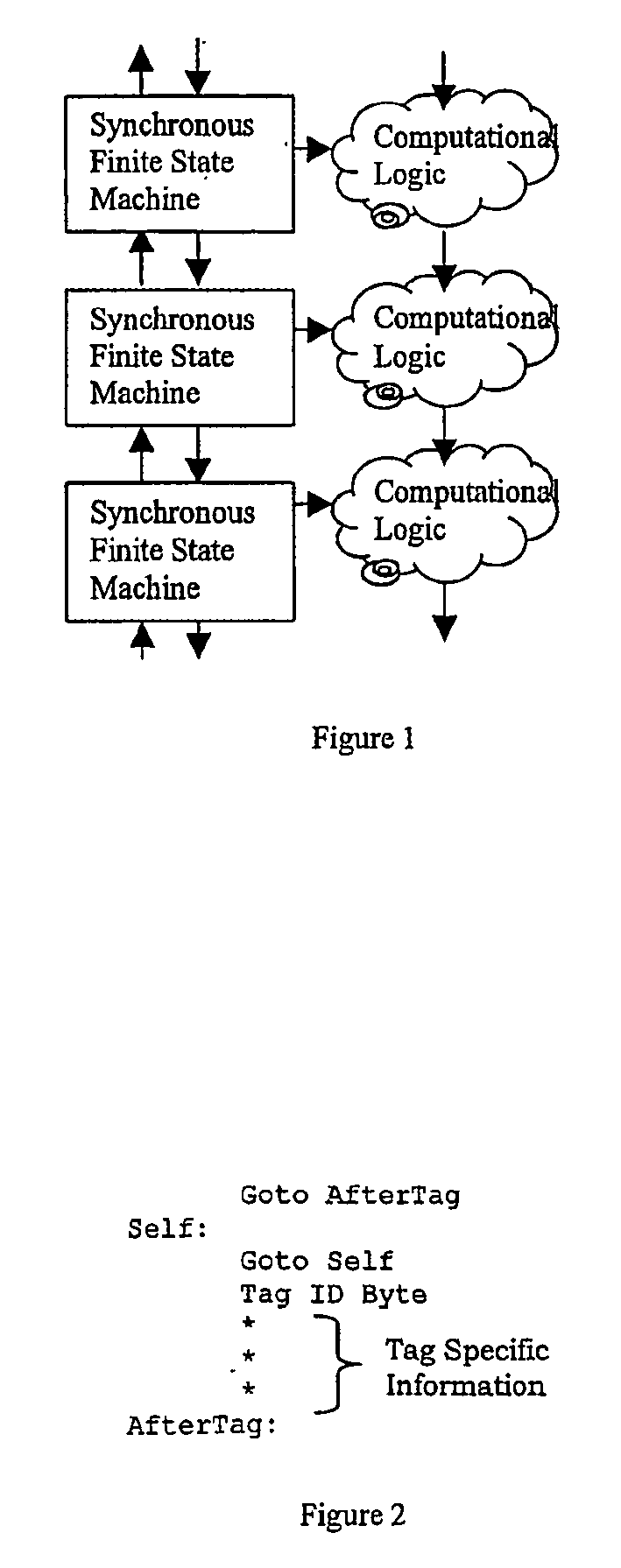

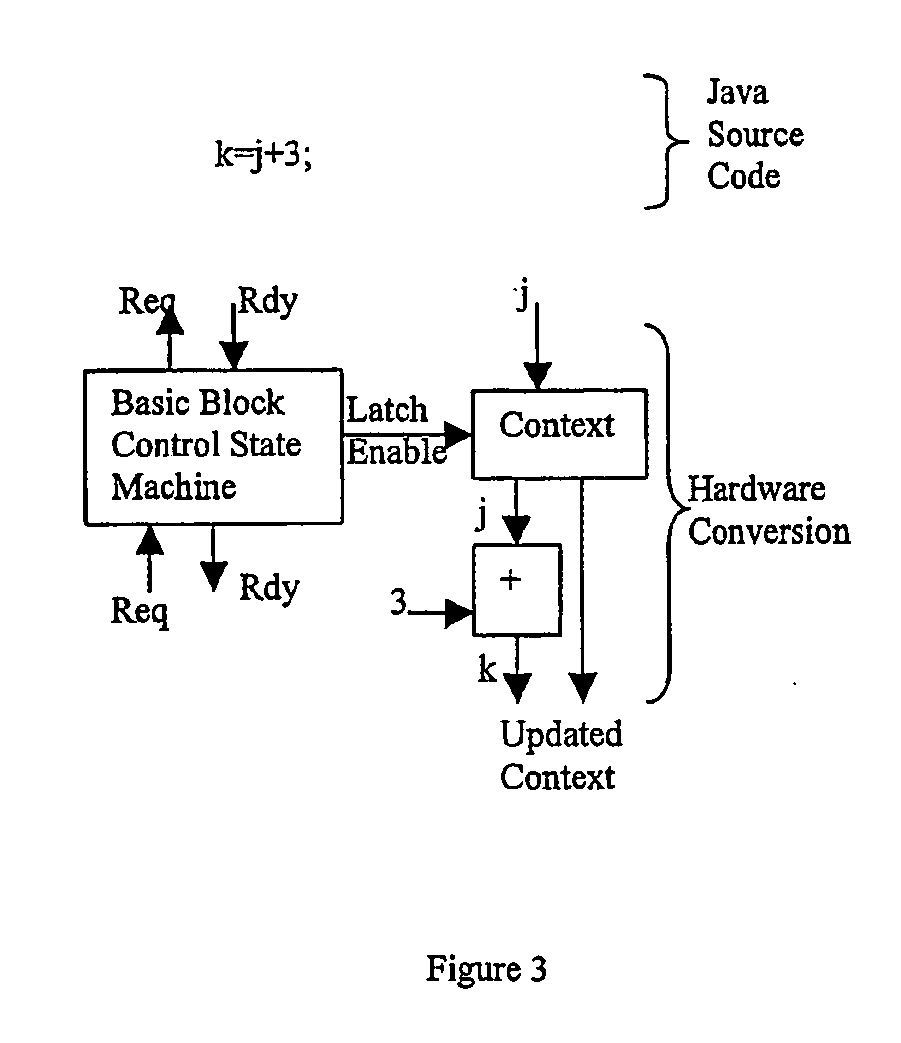

Inactive · US7224185B2 · Enough time · Concurrent instruction execution · Multiple digital computer combinations · Sufficient time · Computational logic

A system of finite state machines, built with asynchronous or synchronous logic, controls the flow of data through computational logic circuits programmed to accomplish a task specified by a user. One finite state machine is associated with each computational logic circuit. Each finite state machine accepts data from one or more predecessor finite state machines or from one or more sources outside the system, and furnishes data to one or more successor finite state machines or to a recipient outside the system. The logic paths that perform the user-specified task are excluded from consideration when determining the system's clock period, and a means is provided for ensuring that each finite state machine allows sufficient time to elapse for its associated computational logic circuit to perform its task.

Owner:CAMPBELL JOHN +1
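
To make the handshake concrete, here is a minimal discrete-time sketch. It assumes several things the abstract leaves open: each computational logic circuit is modeled as a plain function, the time it needs is a hypothetical `latency` in ticks, and each finite state machine simply counts ticks before treating its result as valid. Real implementations may instead use asynchronous completion signaling.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Stage:
    func: Callable[[int], int]  # the computational logic circuit (hypothetical model)
    latency: int                # ticks the circuit needs to settle (hypothetical, >= 1)
    state: str = "IDLE"         # FSM state: IDLE, BUSY, or FULL
    timer: int = 0
    data: Optional[int] = None

def accept(stage: Stage, value: int) -> None:
    """Latch an input; the result is treated as valid only after `latency` ticks."""
    stage.data = stage.func(value)
    stage.timer = stage.latency
    stage.state = "BUSY"

def run_pipeline(stages: List[Stage], inputs: List[int]) -> List[int]:
    pending, outputs = list(inputs), []
    while pending or any(s.state != "IDLE" for s in stages):
        # Visit stages back to front so a successor can empty before its predecessor hands off.
        for i in reversed(range(len(stages))):
            s = stages[i]
            if s.state == "BUSY":
                s.timer -= 1
                if s.timer == 0:
                    s.state = "FULL"              # sufficient time has elapsed
            if s.state == "FULL":
                if i == len(stages) - 1:
                    outputs.append(s.data)        # deliver to the recipient outside the system
                    s.state, s.data = "IDLE", None
                elif stages[i + 1].state == "IDLE":
                    accept(stages[i + 1], s.data)  # hand off to the successor FSM
                    s.state, s.data = "IDLE", None
        if pending and stages[0].state == "IDLE":
            accept(stages[0], pending.pop(0))     # take data from the outside source
    return outputs
```

Because each machine waits only as long as its own circuit needs, a slow stage delays just the items passing through it, which mirrors the abstract's point that the user's logic paths do not set the clock period.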

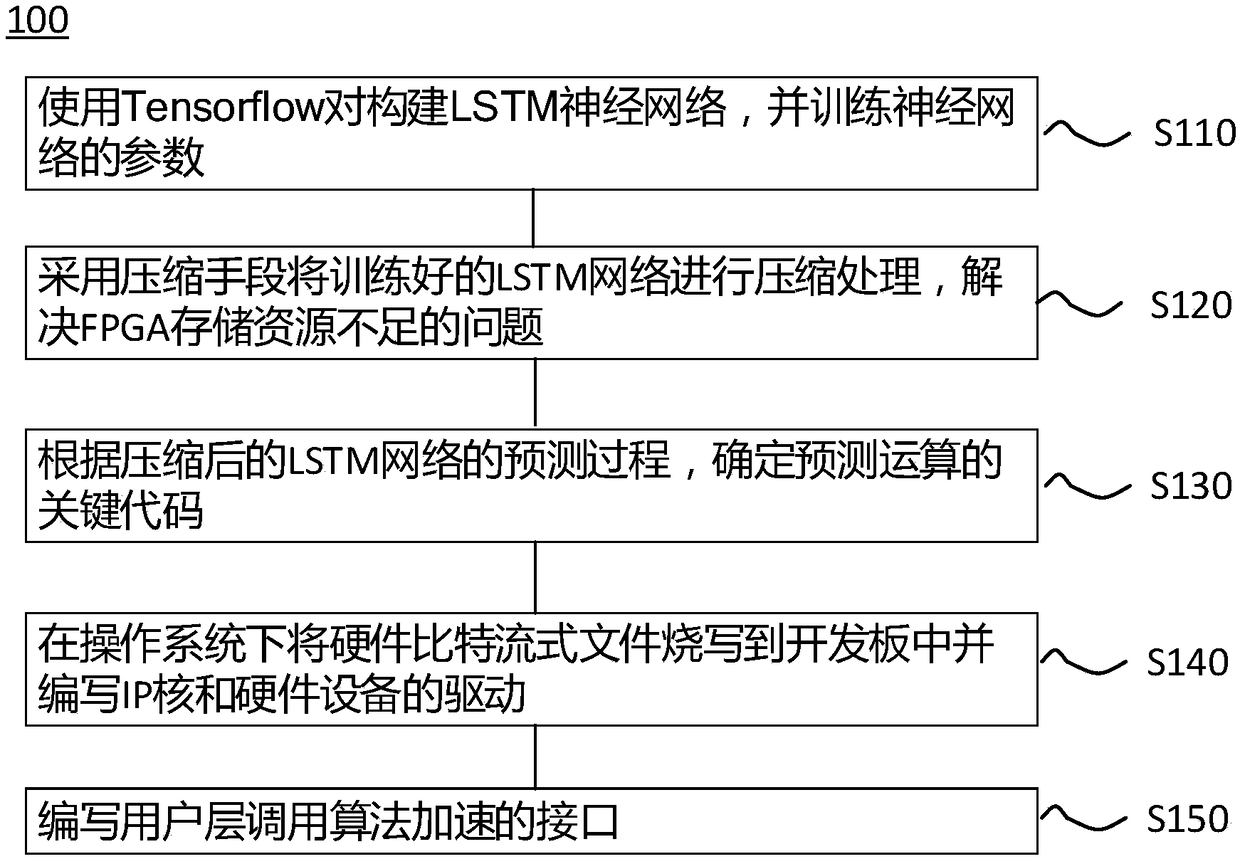

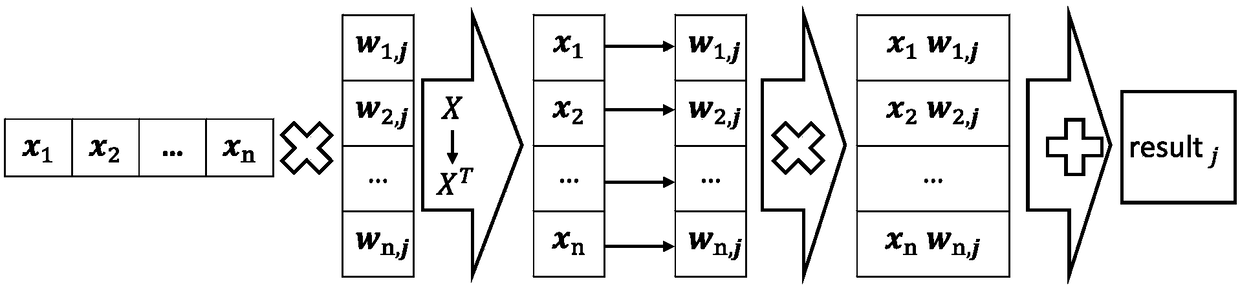

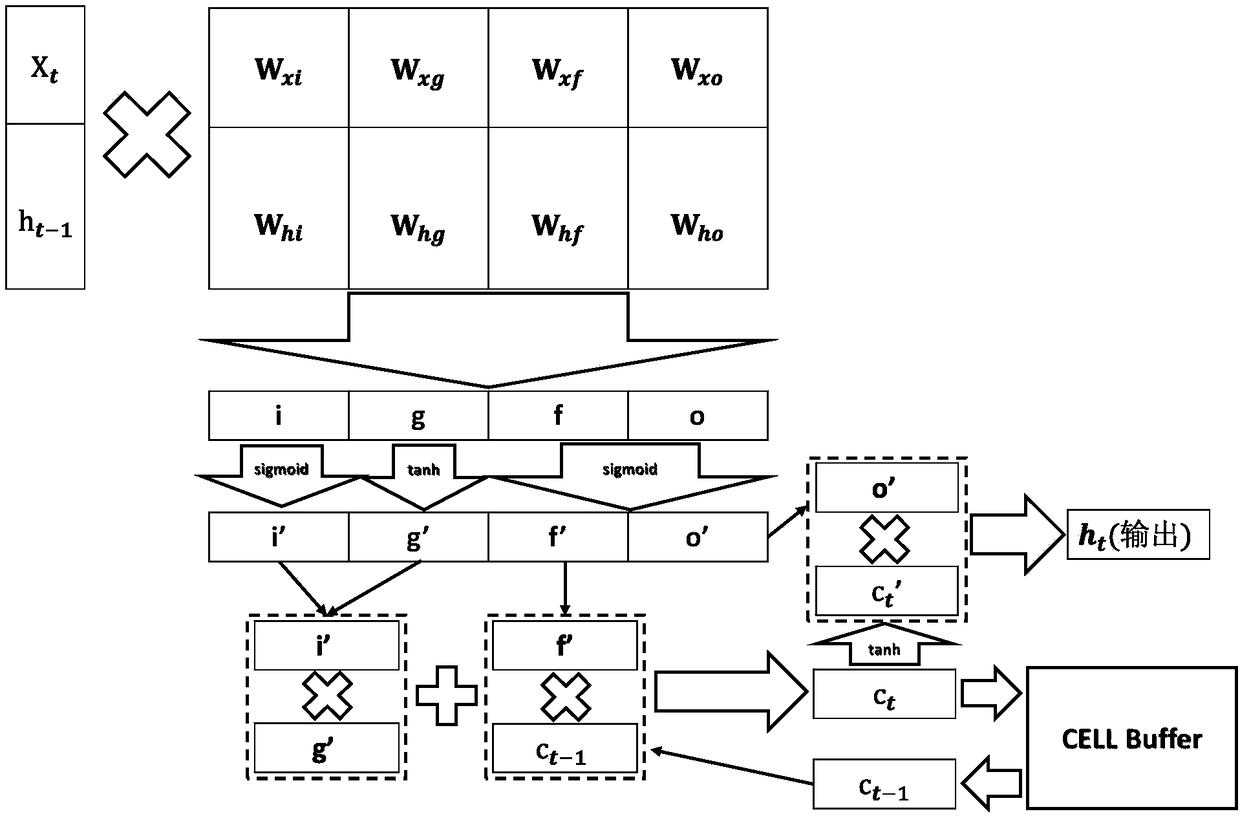

Design method of hardware accelerator based on LSTM recurrent neural network algorithm on FPGA platform

Inactive · CN108090560A · Improve forecast · Improve performance · Neural architectures · Physical realisation · Neural network hardware · Low resource

The invention discloses a method for accelerating an LSTM neural network algorithm on an FPGA platform. The platform comprises a general-purpose processor, a field-programmable gate array (FPGA), and a storage module. The method comprises the following steps: an LSTM neural network is constructed using TensorFlow and its parameters are trained; the parameters of the LSTM network are compressed, which addresses the FPGA's limited storage resources; based on the prediction process of the compressed LSTM network, the part of the computation suitable for running on the FPGA is identified; based on that part, a hardware/software co-computation scheme is determined; and based on the FPGA's computational logic resources and bandwidth, the number and type of IP cores are determined, and acceleration is carried out on the FPGA using hardware processing units. A hardware processing unit for accelerating the LSTM neural network can thus be designed quickly according to the available hardware resources, and it offers higher performance and lower power consumption than the general-purpose processor.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

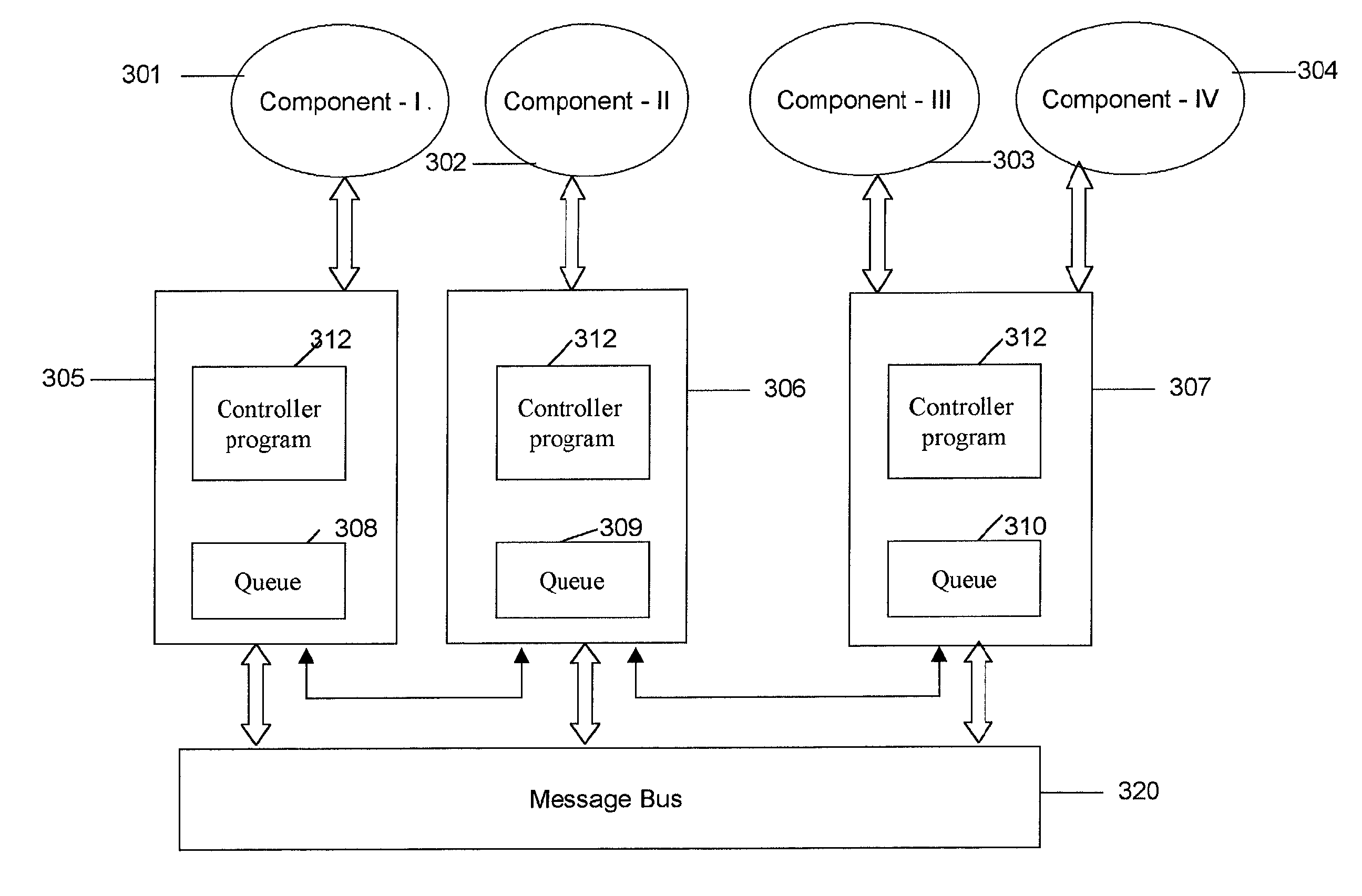

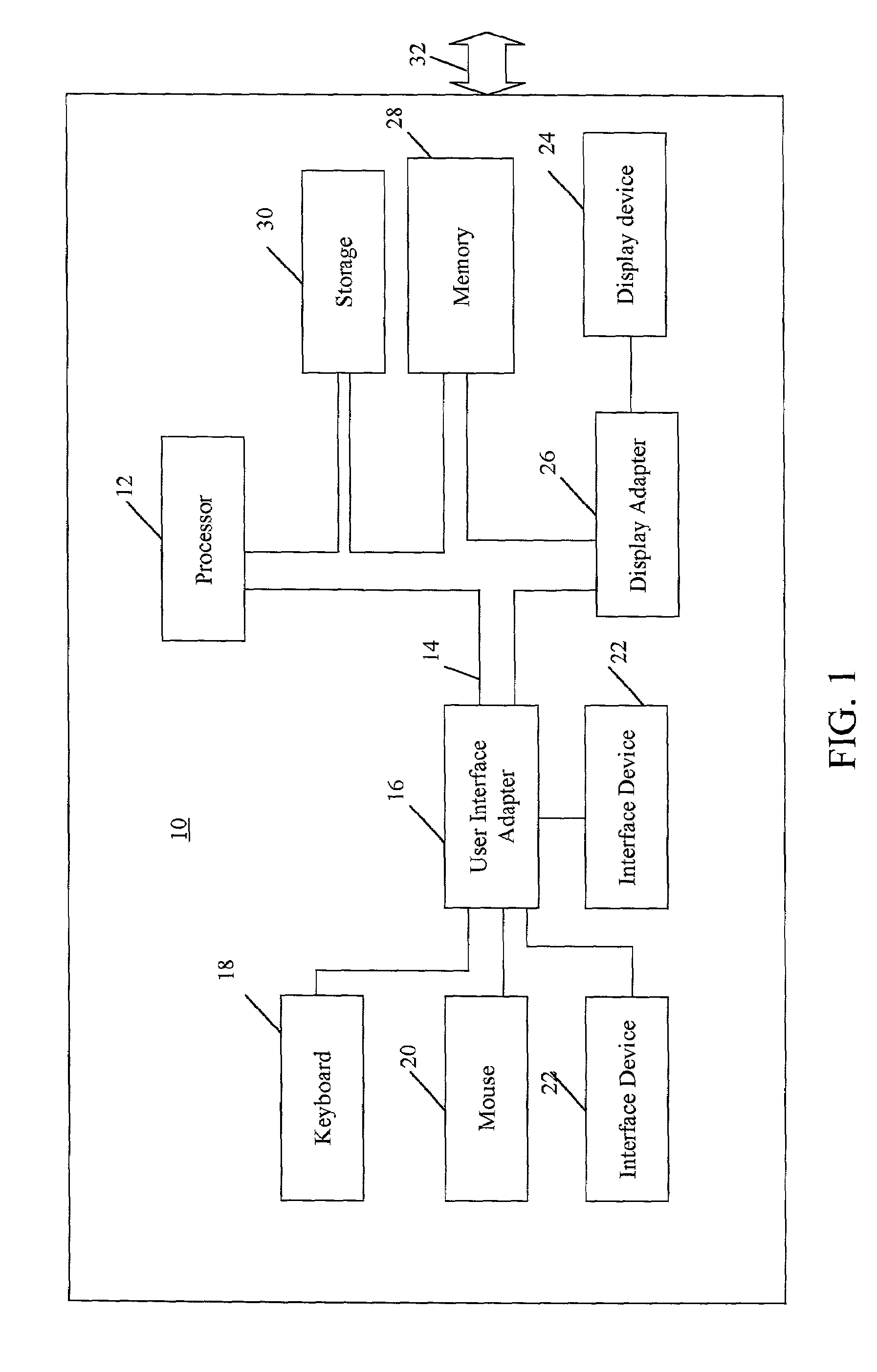

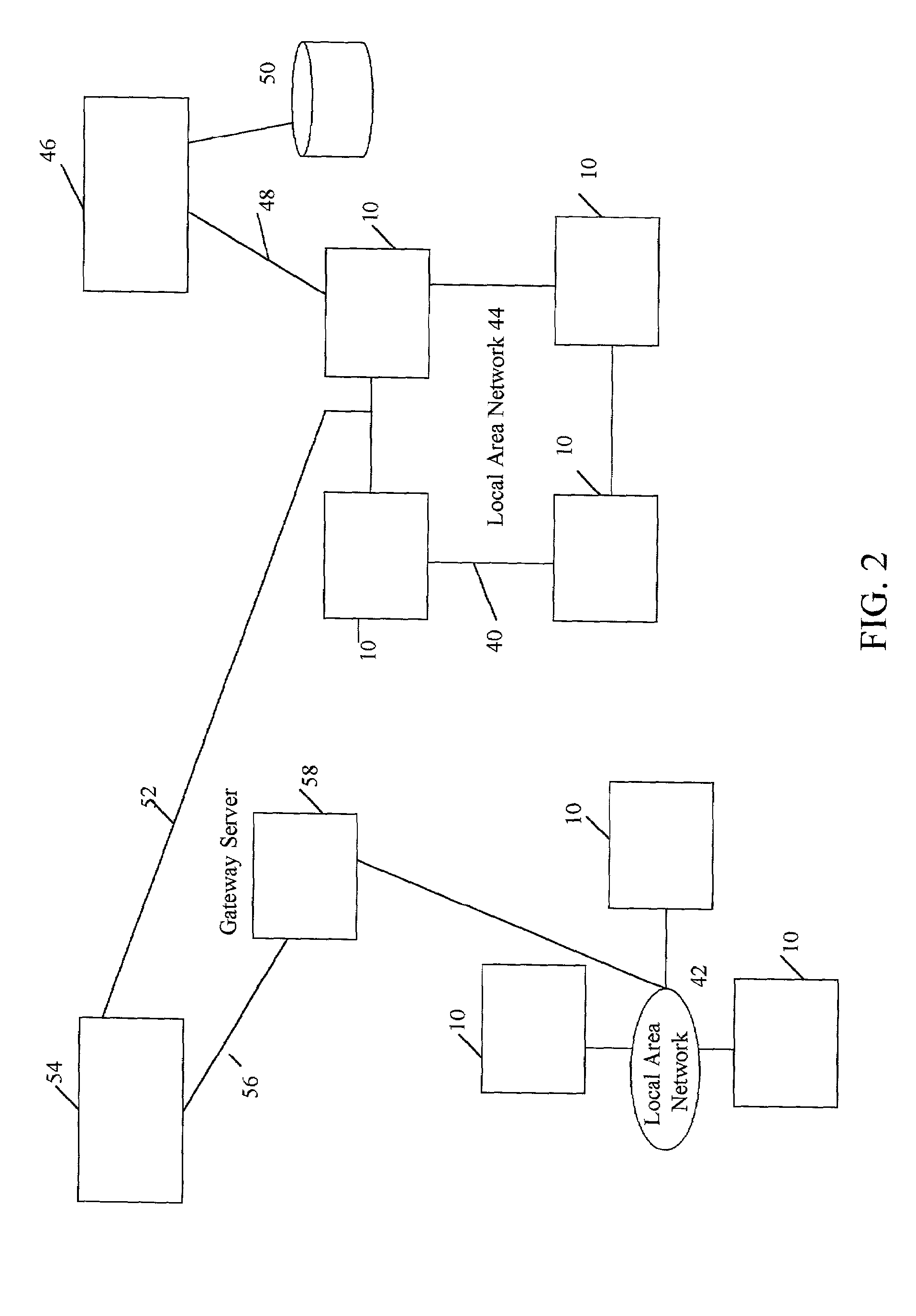

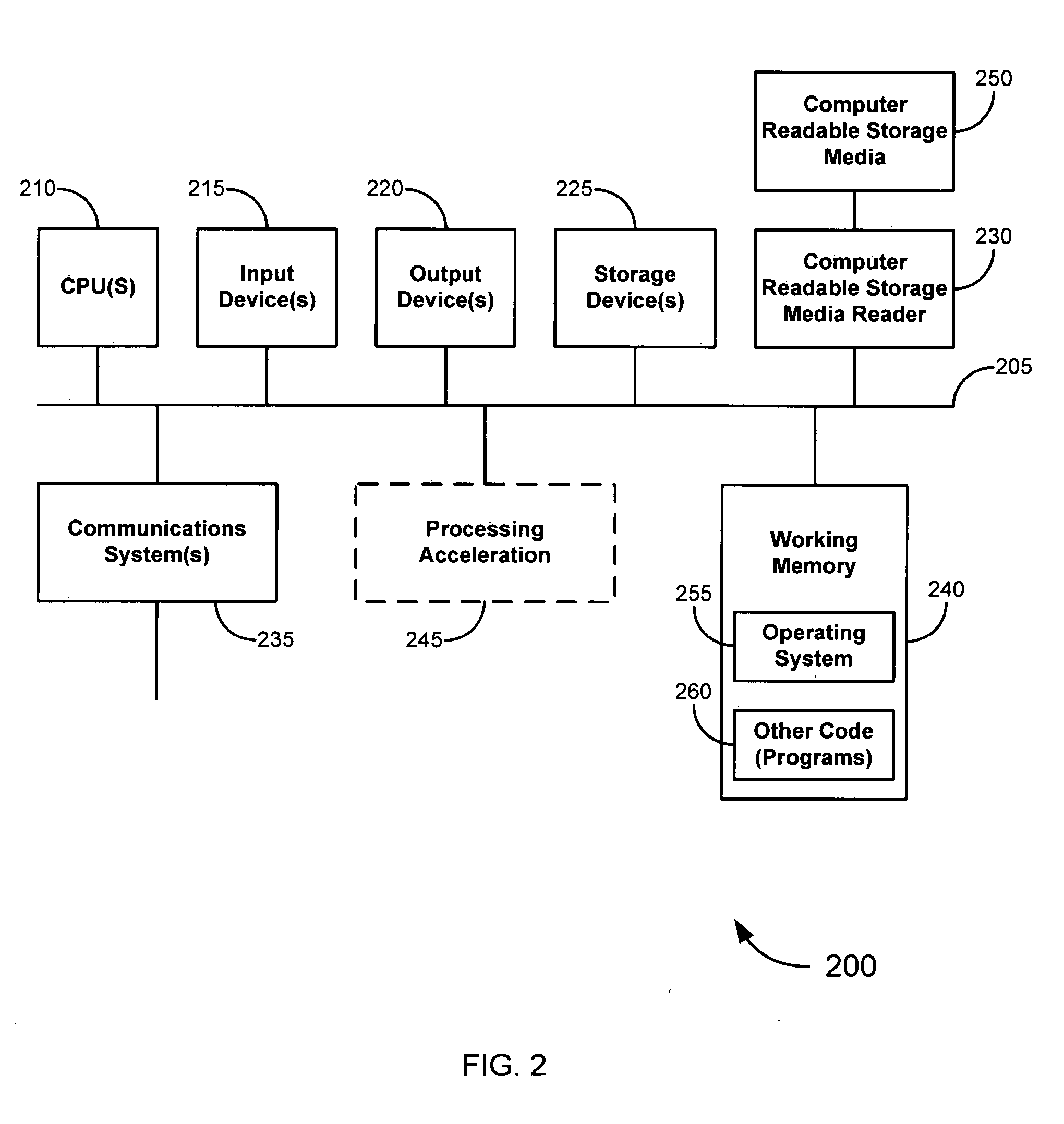

System and methodology for developing, integrating and monitoring computer applications and programs

Active · US7174370B1 · Facilitates composing · Digital computer details · Transmission · Computational logic · Application software

The present invention provides a system, method, and computer program product for developing distributed applications, integrating component programs, integrating enterprise applications, and managing change. It provides an infrastructure in which component programs, which form the computational logic of the distributed application, are installed over a network of computing units, each running a controller program. The invention separates the concerns of computation, installation, execution, and monitoring of the distributed application in terms of time, space, and the people involved. This is achieved because the component programs only perform the computation task, while communication between the component programs, and their monitoring, are handled by the controller programs.

Owner:FIORANO TECH INC

Methods and apparatus for centralized global tax computation, management, and compliance reporting

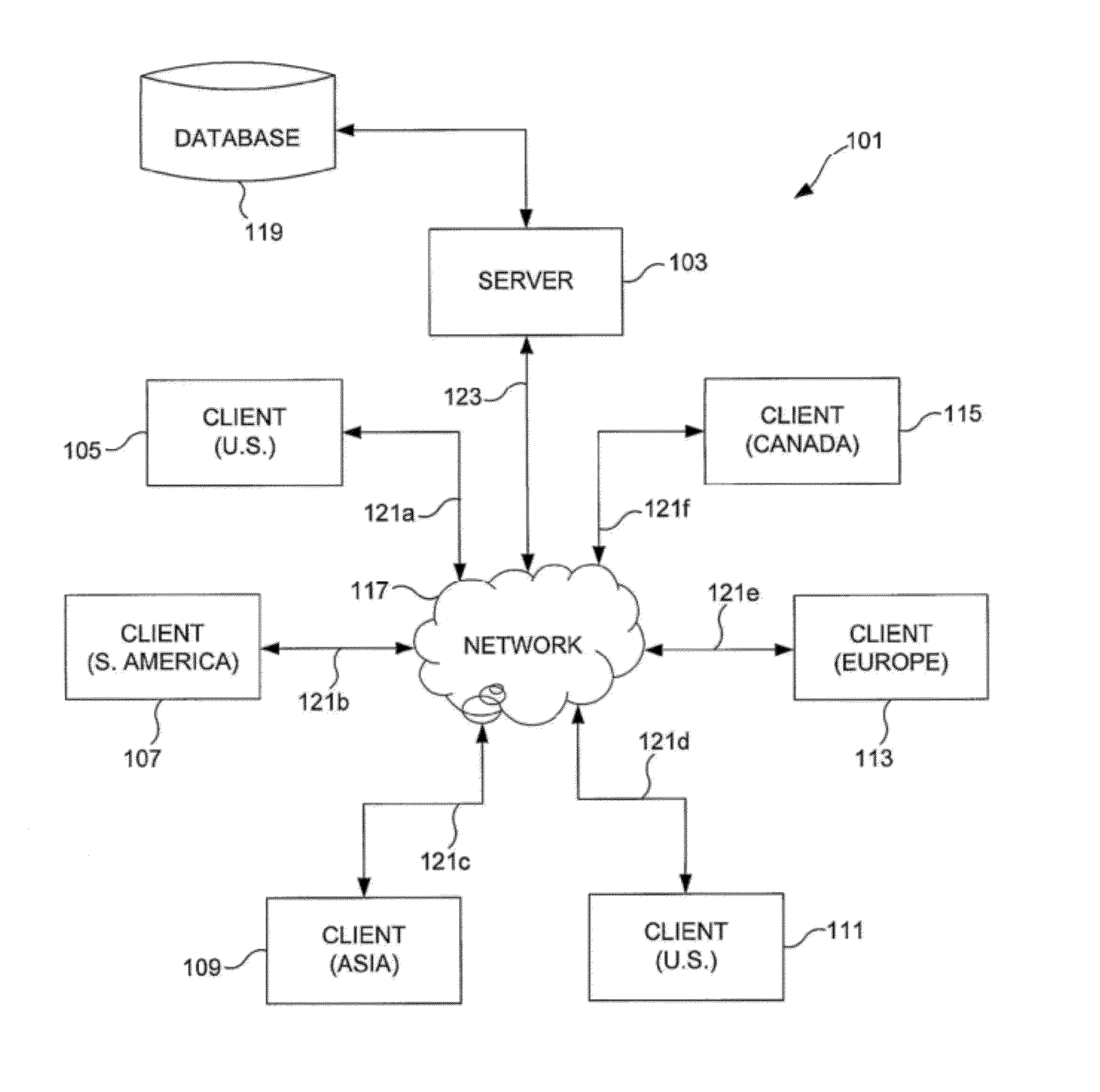

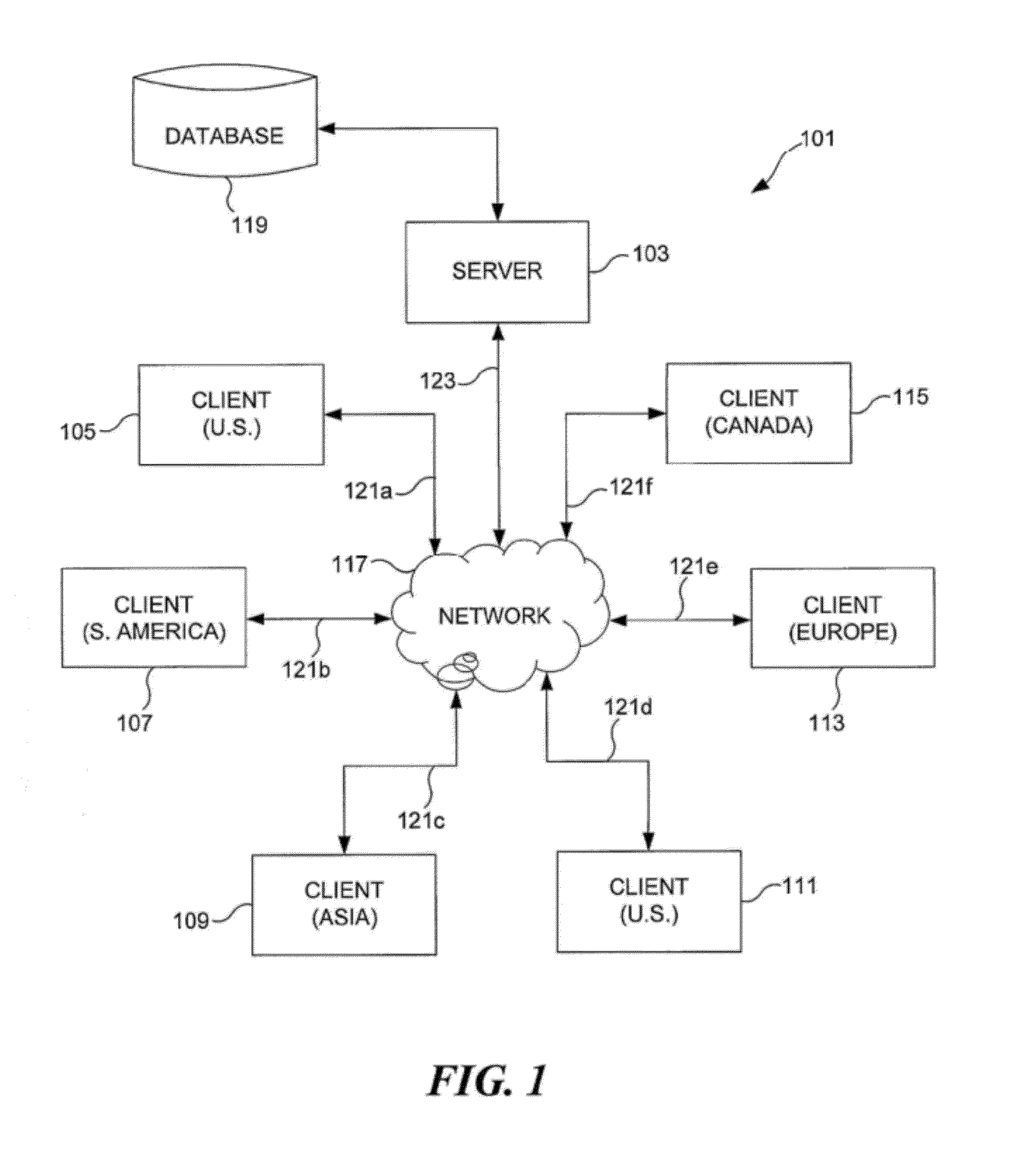

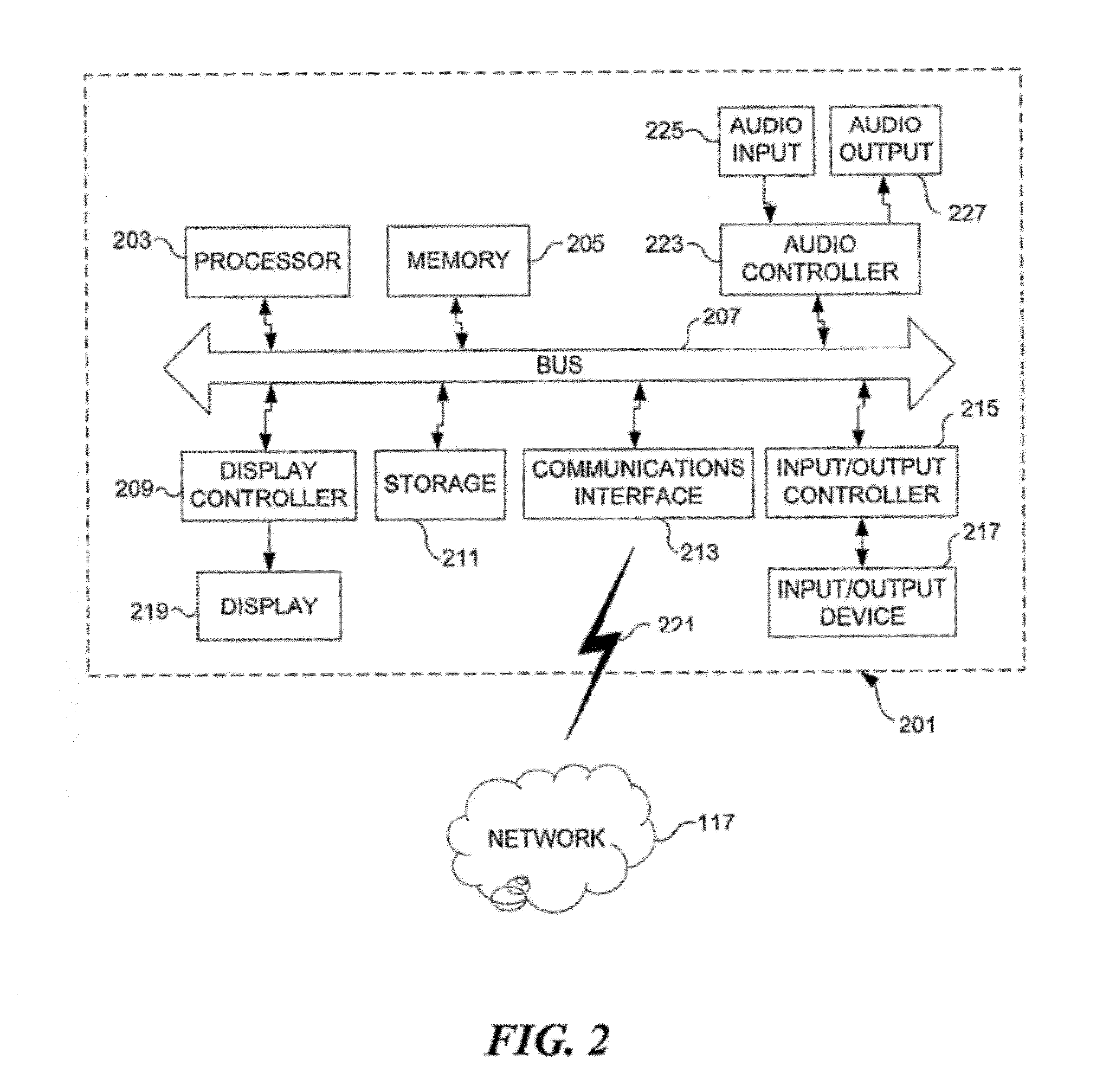

Methods, apparatus, and articles of manufacture for tax computation, management, and compliance reporting via a centralized transactional tax platform capable of incorporating transaction data and/or tax information from multiple locations and/or multiple business applications via a network architecture are disclosed herein. In one embodiment, a central server may be configured to execute an application to generate a user interface to enable configuration of tax compliance data via a network link, to receive transaction data from one or more client systems via the network, to calculate transaction taxes corresponding to the transaction data, and to store tax information, including outputs and computational logic generated by tax calculation engines executed by the server. In another embodiment, the tax calculations may be executed client-side, while administration of tax compliance data and reporting are facilitated by the central server.

Owner:THOMSON REUTERS ENTERPRISE CENT GMBH +1

Apparatus and method for large hardware finite state machine with embedded equivalence classes

Inactive · US20050035784A1 · Improve throughput · Data efficient · Specific program execution arrangements · Special data processing applications · Computational logic · Parallel computing

Owner:INTEL CORP +1

Division unit in a processor using a piece-wise quadratic approximation technique

Inactive · US6351760B1 · Computations using contact-making devices · Computation using non-contact making devices · Computational logic · Rounding

A computation unit computes a division operation Y/X by determining the value of the divisor reciprocal 1/X and multiplying the reciprocal by the numerator Y. The reciprocal 1/X is determined using a quadratic approximation of the form $Ax^2 + Bx + C$, where coefficients A, B, and C are constants stored in a storage or memory such as a read-only memory (ROM). The bit length of the coefficients determines the error in the final result. Storage size is reduced by using "least mean square error" techniques to determine the coefficients held in the coefficient storage. Rounding is eliminated during generation of the partial products $x^2$, $Ax^2$, and $Bx$, which reduces the computational logic needed to implement the division functionality.

Owner:ORACLE INT CORP
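
The piecewise-quadratic idea can be sketched in software. This is an illustrative model, not the patented datapath: it assumes the divisor is normalized into [1, 2), splits that range into a hypothetical 16 segments, and fits A, B, C per segment with an ordinary least-squares fit standing in for the "least mean square error" step. Coefficient bit widths and the rounding elimination are not modeled.

```python
import math

SEGMENTS = 16   # hypothetical number of coefficient-table entries covering [1, 2)
SAMPLES = 64    # hypothetical number of fit points per segment

def fit_quadratic(ts, ys):
    """Least-squares fit ys ~ A*t^2 + B*t + C by solving the 3x3 normal equations."""
    s = [sum(t ** k for t in ts) for k in range(5)]                  # s[k] = sum of t^k
    r = [sum(t ** k * y for t, y in zip(ts, ys)) for k in range(3)]  # r[k] = sum of t^k * y
    m = [[s[4], s[3], s[2], r[2]],
         [s[3], s[2], s[1], r[1]],
         [s[2], s[1], s[0], r[0]]]
    for col in range(3):                                   # Gaussian elimination, partial pivoting
        piv = max(range(col, 3), key=lambda i: abs(m[i][col]))
        m[col], m[piv] = m[piv], m[col]
        for i in range(col + 1, 3):
            f = m[i][col] / m[col][col]
            for j in range(col, 4):
                m[i][j] -= f * m[col][j]
    coef = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):                                    # back substitution
        coef[i] = (m[i][3] - sum(m[i][j] * coef[j] for j in range(i + 1, 3))) / m[i][i]
    return tuple(coef)                                     # (A, B, C)

def _build_table():
    table = []
    for k in range(SEGMENTS):
        ts = [i / (SAMPLES - 1) for i in range(SAMPLES)]          # position t in [0, 1]
        ys = [1.0 / (1.0 + (k + t) / SEGMENTS) for t in ts]       # 1/X across segment k
        table.append(fit_quadratic(ts, ys))
    return table

TABLE = _build_table()   # stands in for the coefficient ROM

def reciprocal(x):
    """Approximate 1/x for 1 <= x < 2: leading bits pick the segment, the rest form t."""
    pos = (x - 1.0) * SEGMENTS
    k = int(pos)
    t = pos - k
    a, b, c = TABLE[k]
    return a * t * t + b * t + c        # partial products t^2, A*t^2, B*t, then sum

def divide(y, x):
    """Y / X = Y * (1/X), after normalizing a positive X into [1, 2)."""
    m, e = math.frexp(x)                # x = m * 2**e with 0.5 <= m < 1
    return math.ldexp(y * reciprocal(2.0 * m), 1 - e)
```

With 16 segments the relative error of this sketch stays on the order of 1e-5; more segments or wider coefficients trade table size for accuracy, which is the trade-off the abstract describes.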

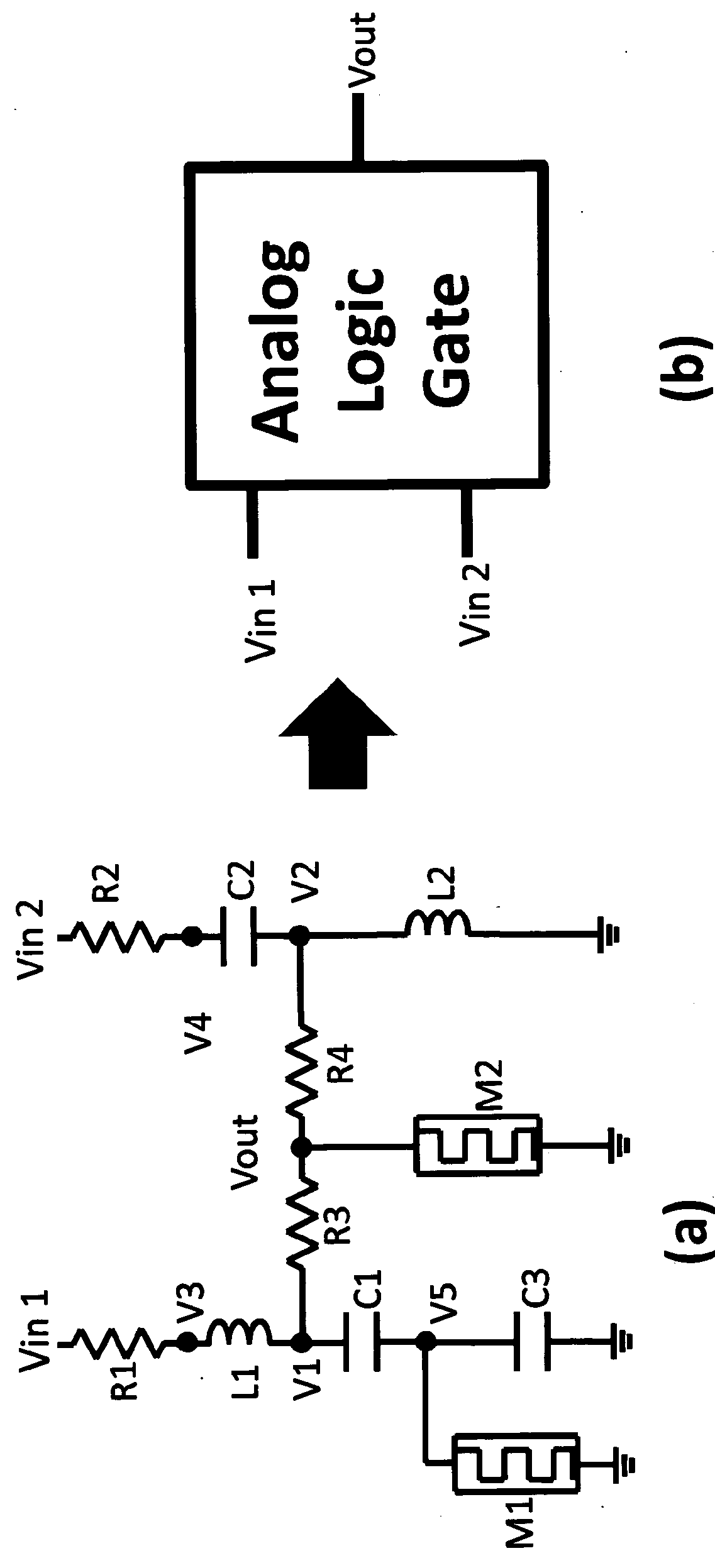

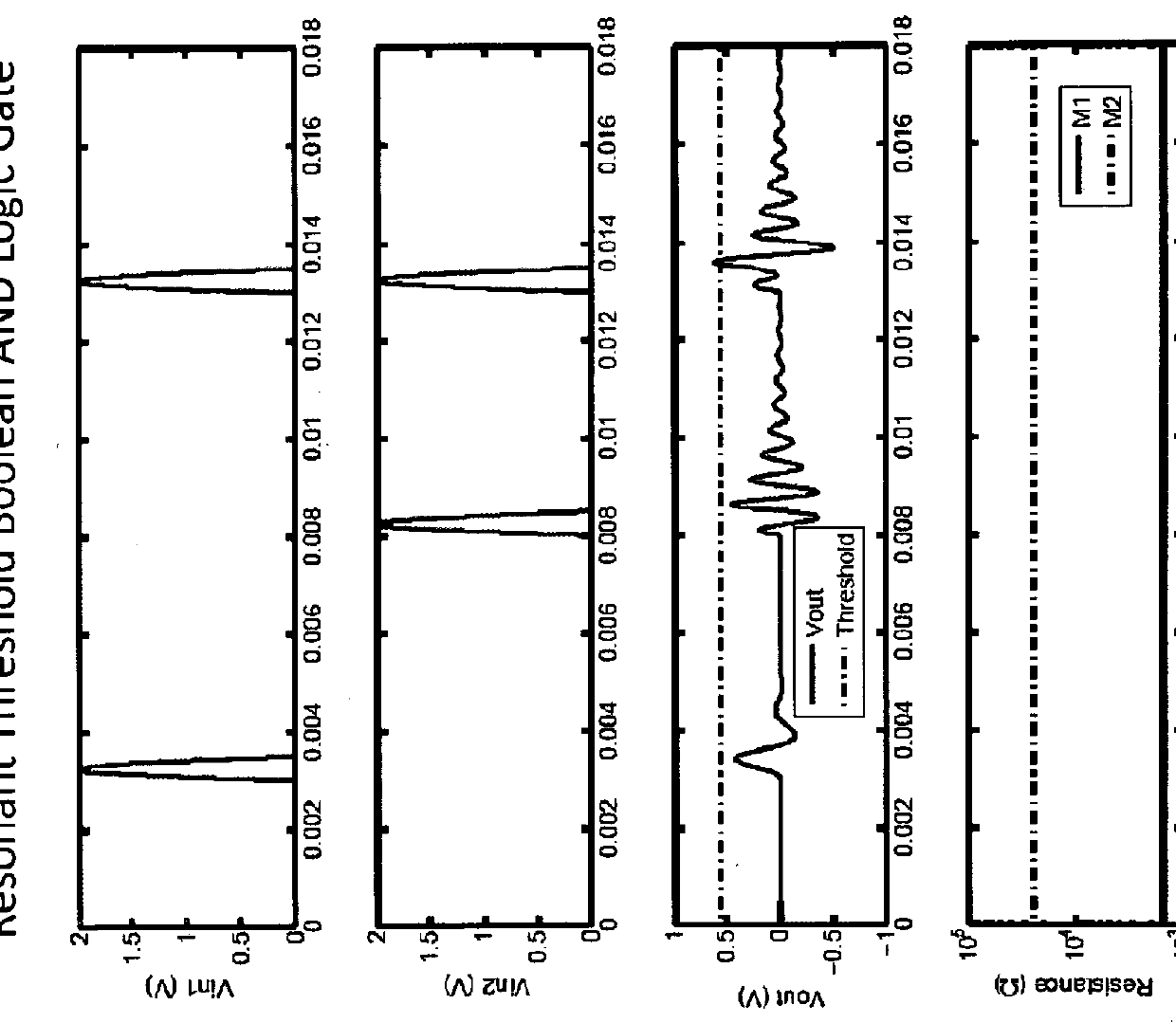

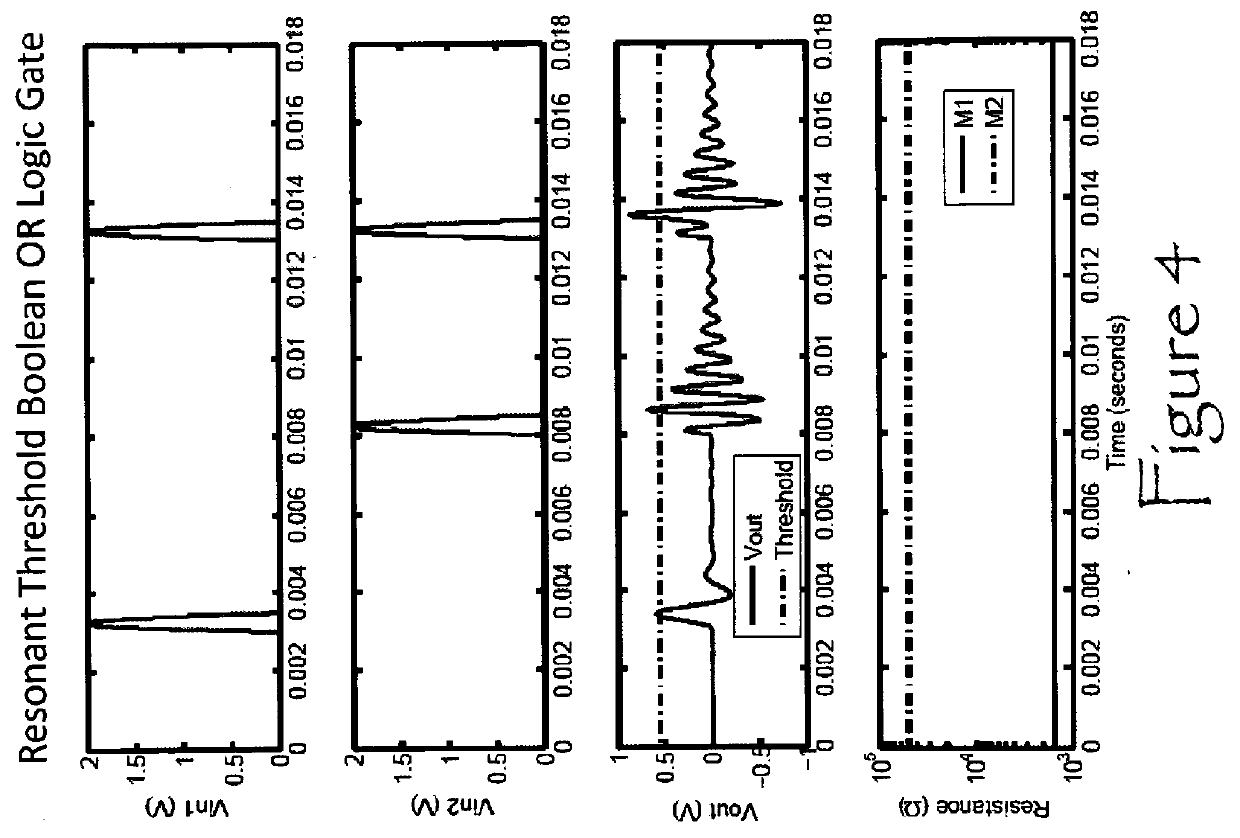

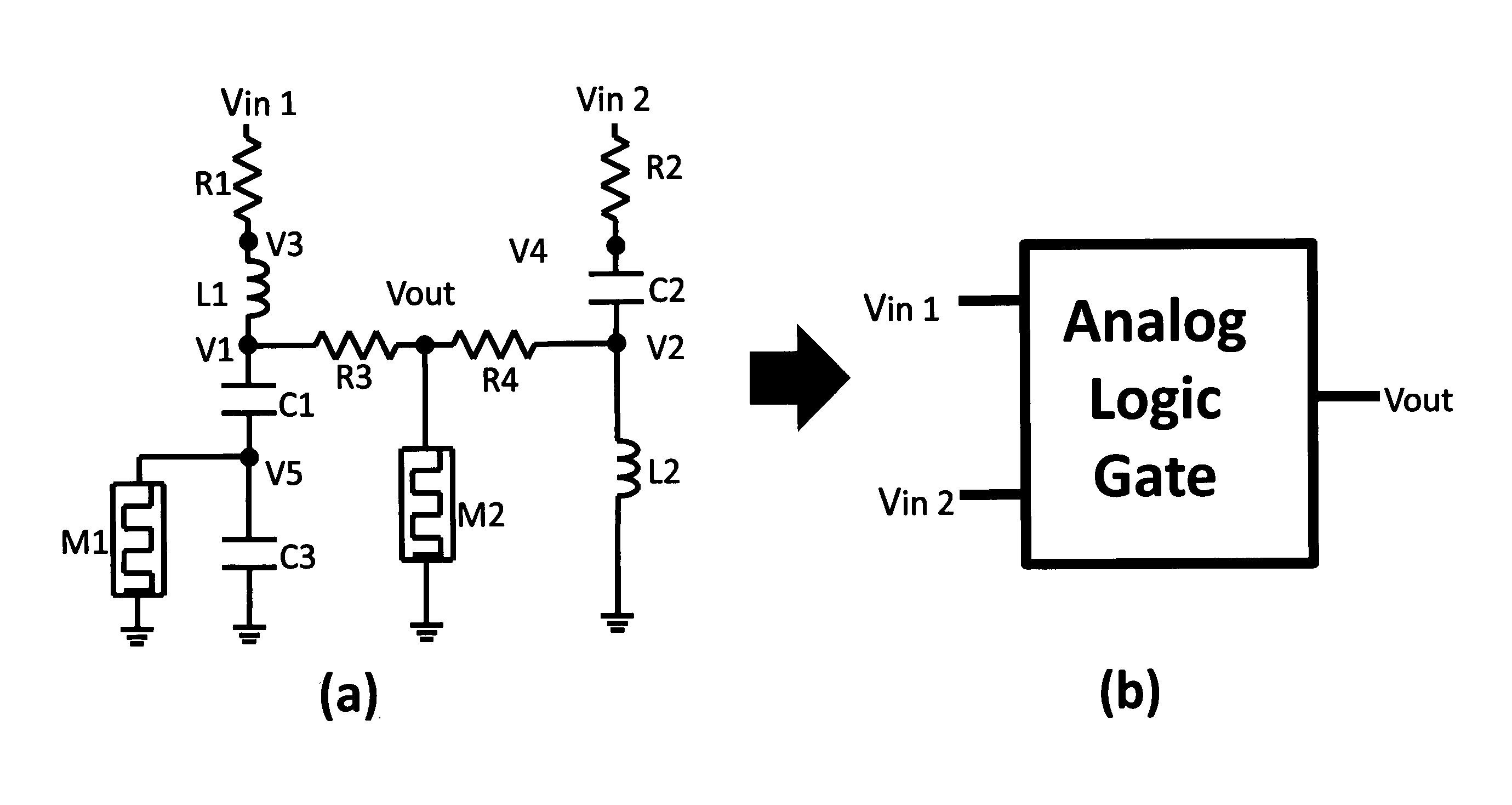

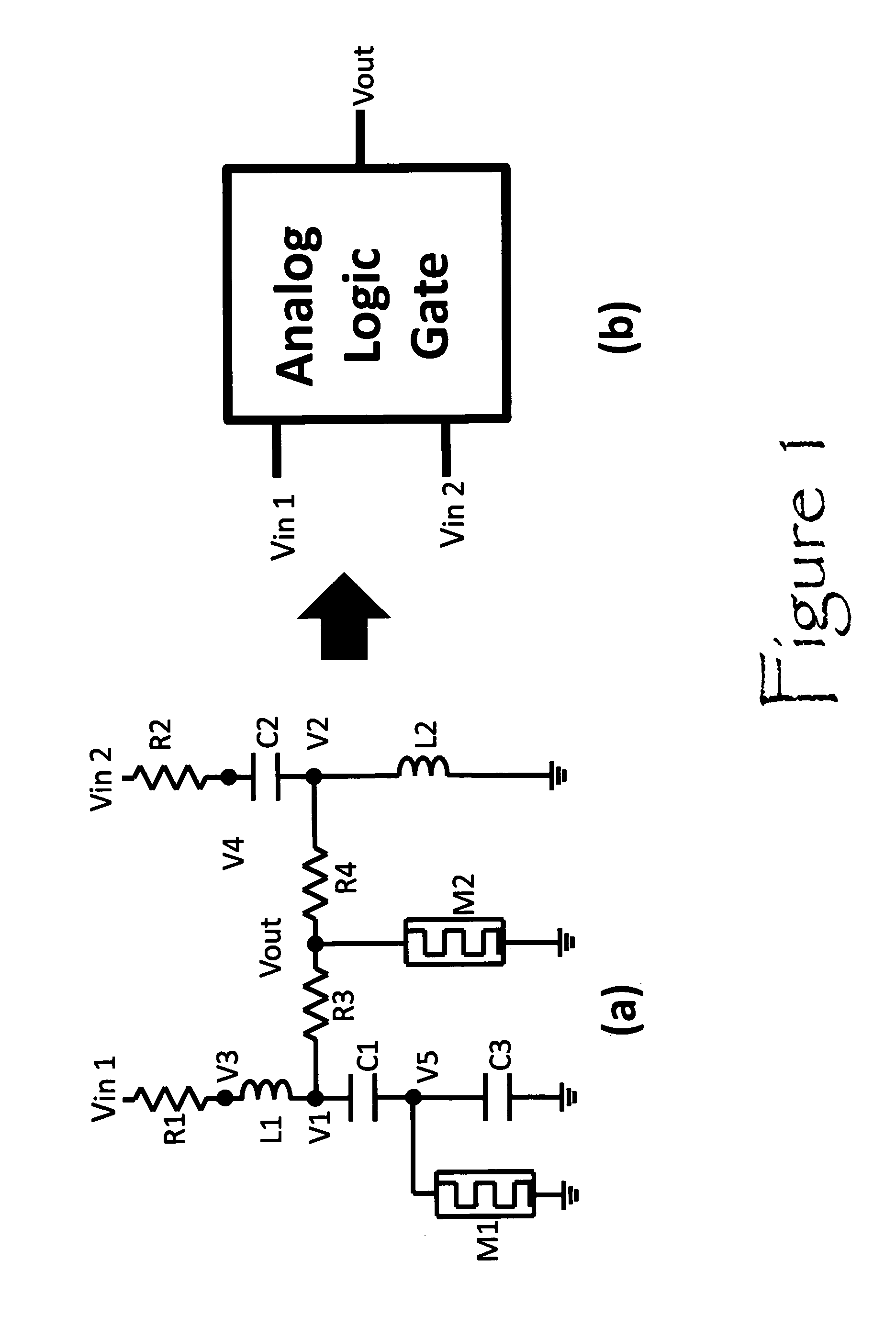

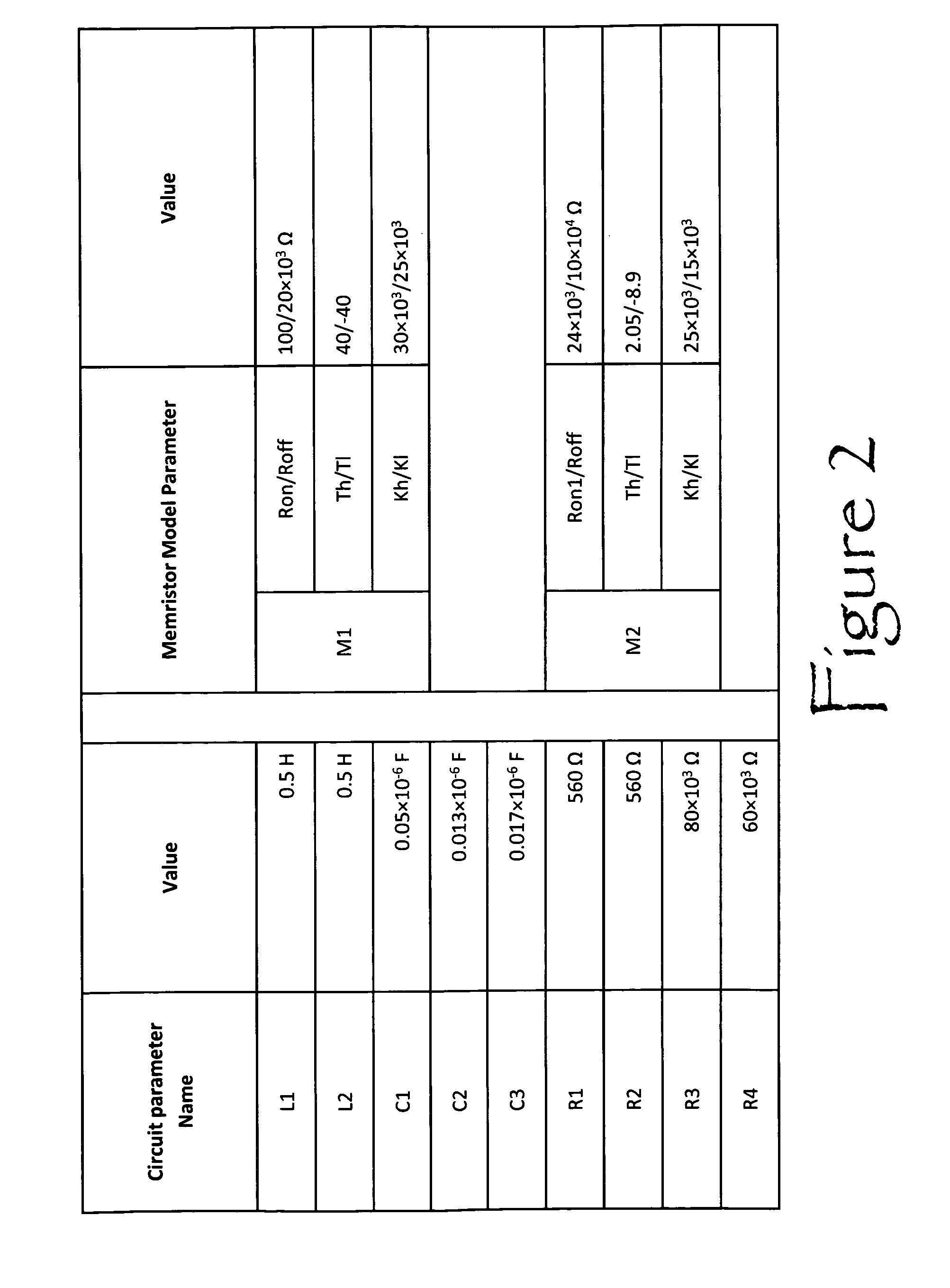

Self-reconfigurable memristor-based analog resonant computer

Active · US20120217994A1 · Logic circuits characterised by logic function · Digital storage · Computational logic · Inductor

An apparatus provides a self-reconfigurable analog resonant computer employing a fixed electronic circuit schematic that performs computing logic operations (for example, OR, AND, NOR, and XOR Boolean logic) without physical rewiring, and whose components include only passive circuit elements such as resistors, capacitors, inductors, and memristor devices. The computational logic self-reconfigures as training input signals are applied to the circuit, causing the impedance state of the memristor device to change. Once the training process is complete, the circuit is probed to determine whether the desired logic operation has been programmed.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SEC OF THE AIR FORCE

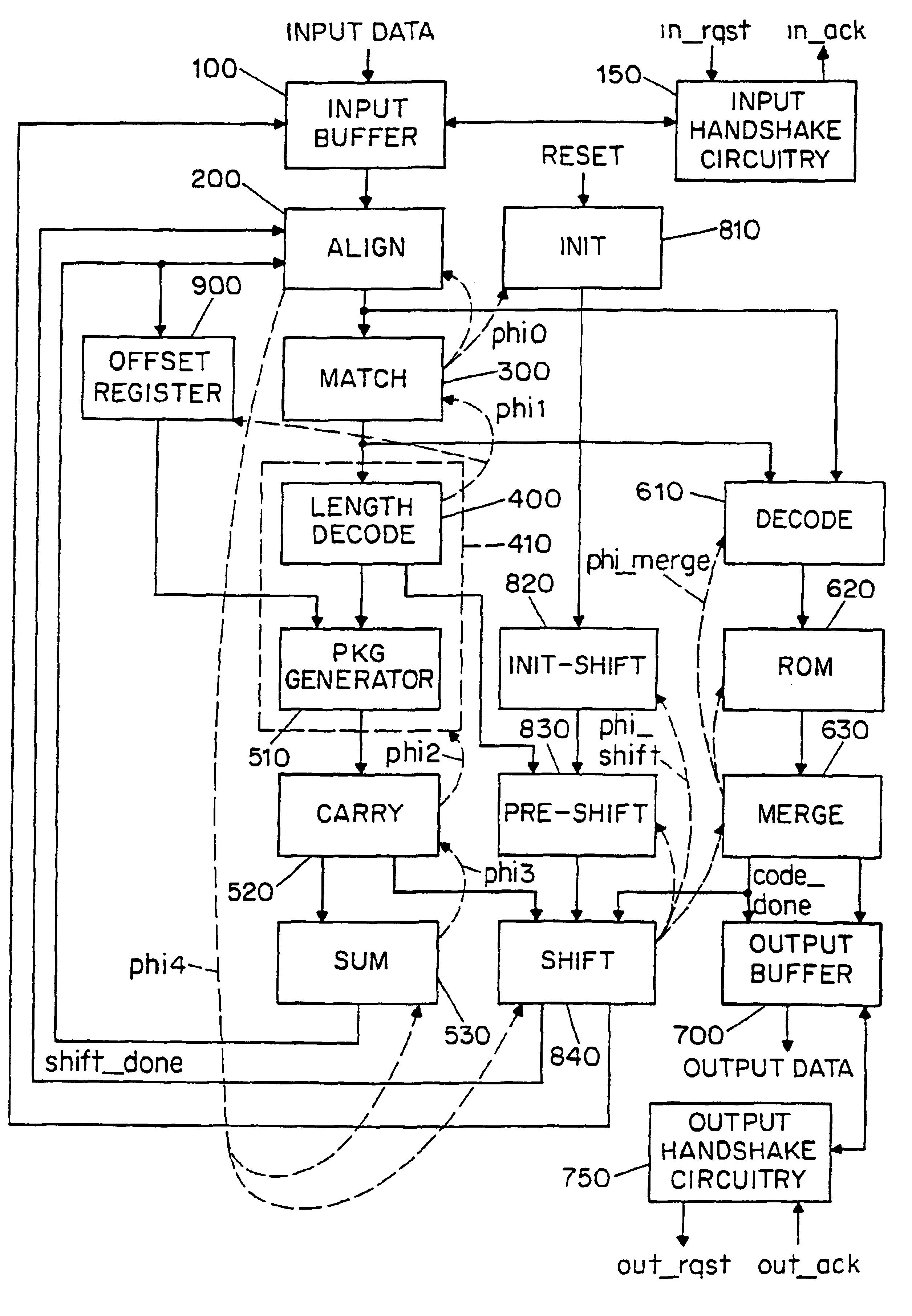

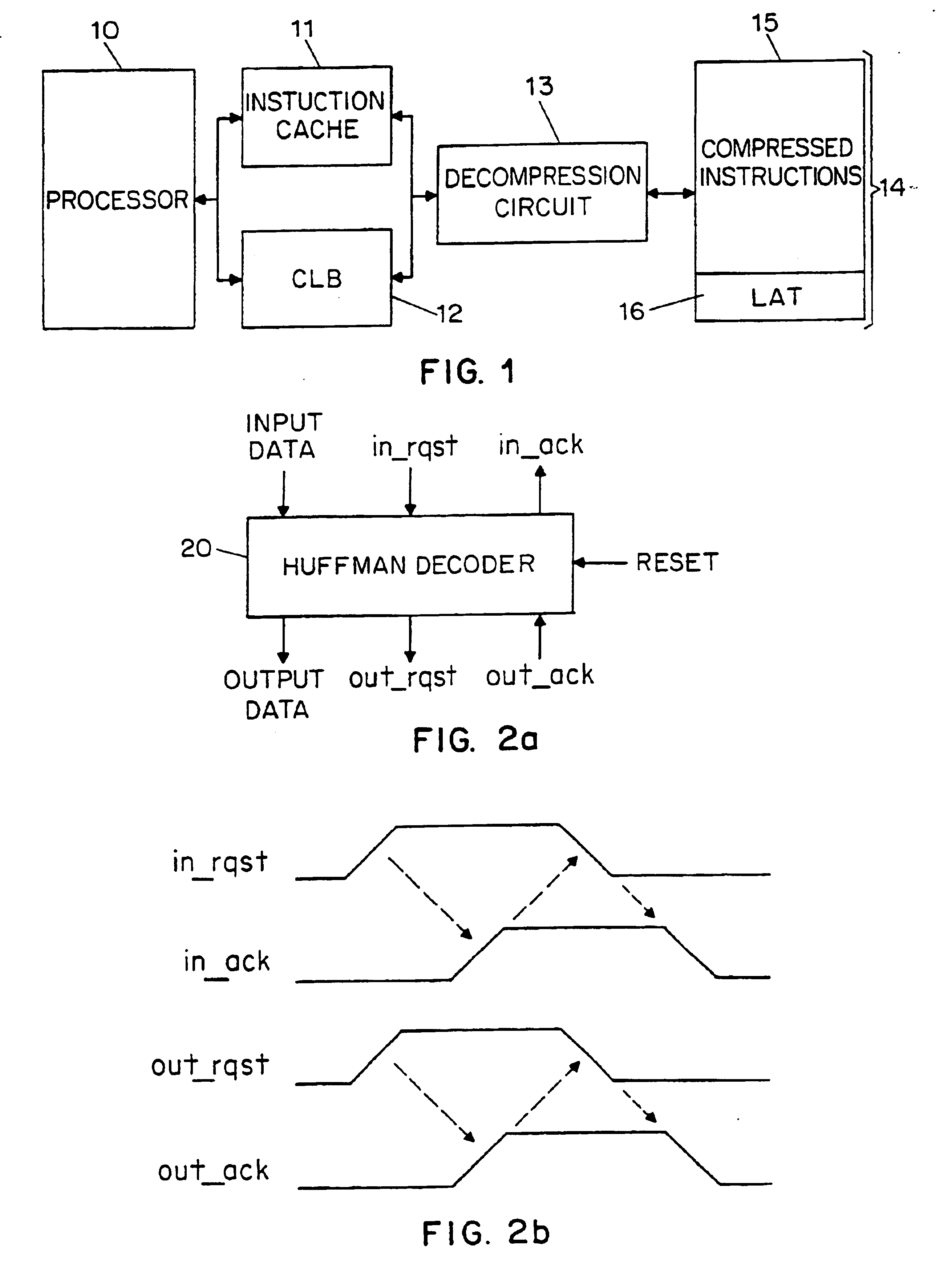

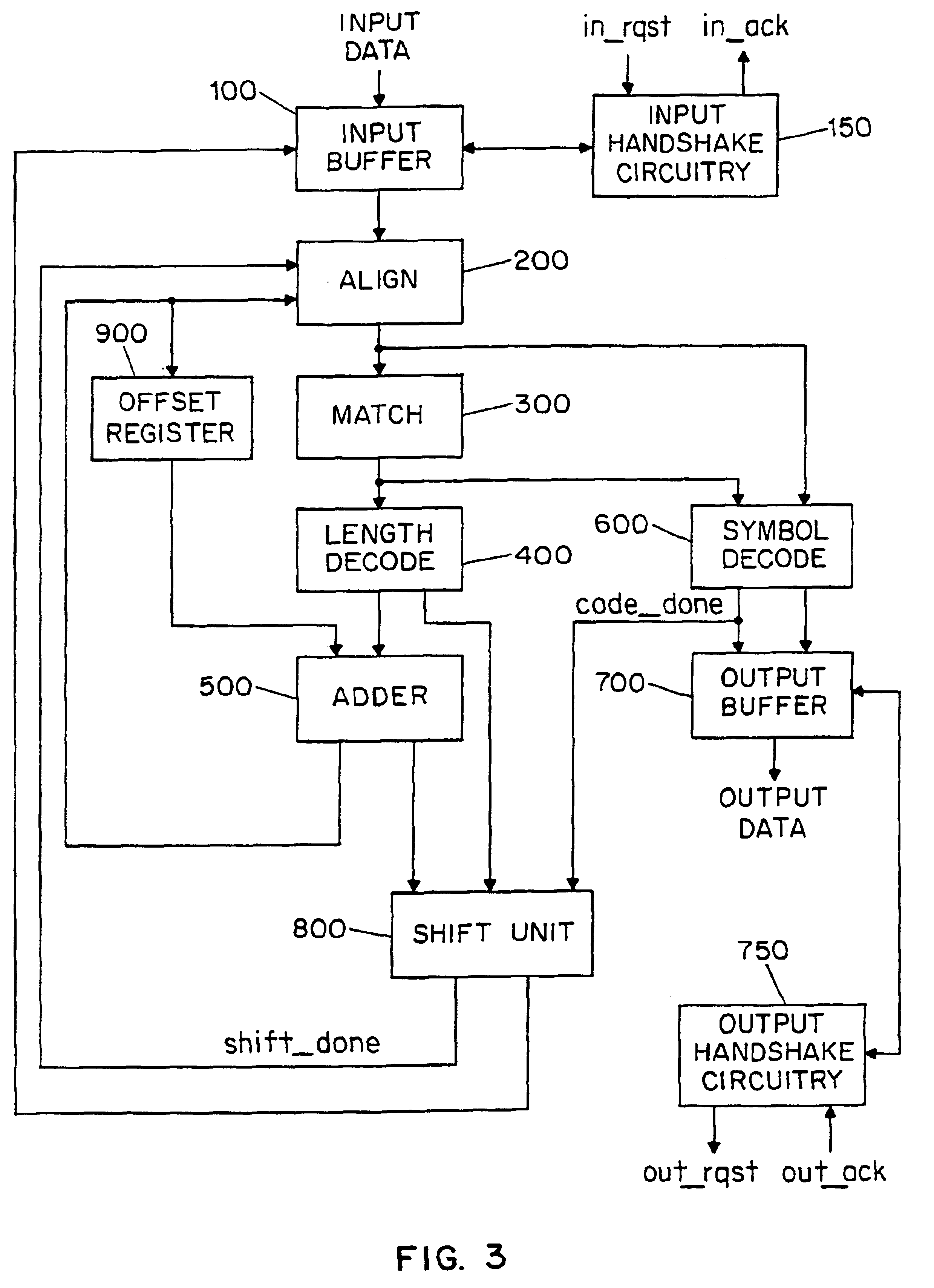

Variable-length, high-speed asynchronous decoder circuit

Inactive · US6865668B1 · Improve throughput · Digital data processing details · Digital computer details · Variable-length code · Computational logic

There is disclosed a decoder circuit (20) for decoding input data coded using a variable length coding technique, such as Huffman coding. The decoder circuit (20) comprises an input buffer (100), a logic circuit (150) coupled to the input buffer (100), and an output buffer (700) coupled to the logic circuit (750). The logic circuit (750) includes a plurality of computational logic stages for decoding the input data, the plurality of computational logic stages arranged in one or more computational threads. At least one of the computational threads is arranged as a self-timed ring, wherein each computational logic stage in the ring produces a completion signal indicating either completion or non-completion of the computational logic of the associated computational logic stage. Each completion signal is coupled to a previous computational logic stage in the ring. The previous computational logic stage performs control operations when the completion signal indicates completion and performs evaluation of its inputs when the completion signal indicates non-completion.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK
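
The core operation these decoding stages perform can be shown sequentially: bits are consumed until they match a complete codeword of a prefix-free code. This sketch uses a hypothetical four-symbol code table and says nothing about the self-timed ring structure, which is the patent's actual contribution.

```python
def huffman_decode(bits, code_table):
    """Decode a string of '0'/'1' characters using a prefix-free code table."""
    inverse = {code: symbol for symbol, code in code_table.items()}
    symbols, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:          # a complete codeword has been recognized
            symbols.append(inverse[current])
            current = ""
    if current:
        raise ValueError(f"trailing bits do not form a codeword: {current!r}")
    return symbols

CODES = {"a": "0", "b": "10", "c": "110", "d": "111"}   # hypothetical prefix code
```

Because codeword lengths vary, the position of the next codeword is not known until the current one is decoded; that serial dependency is what makes high-speed variable-length decoders hard to build.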

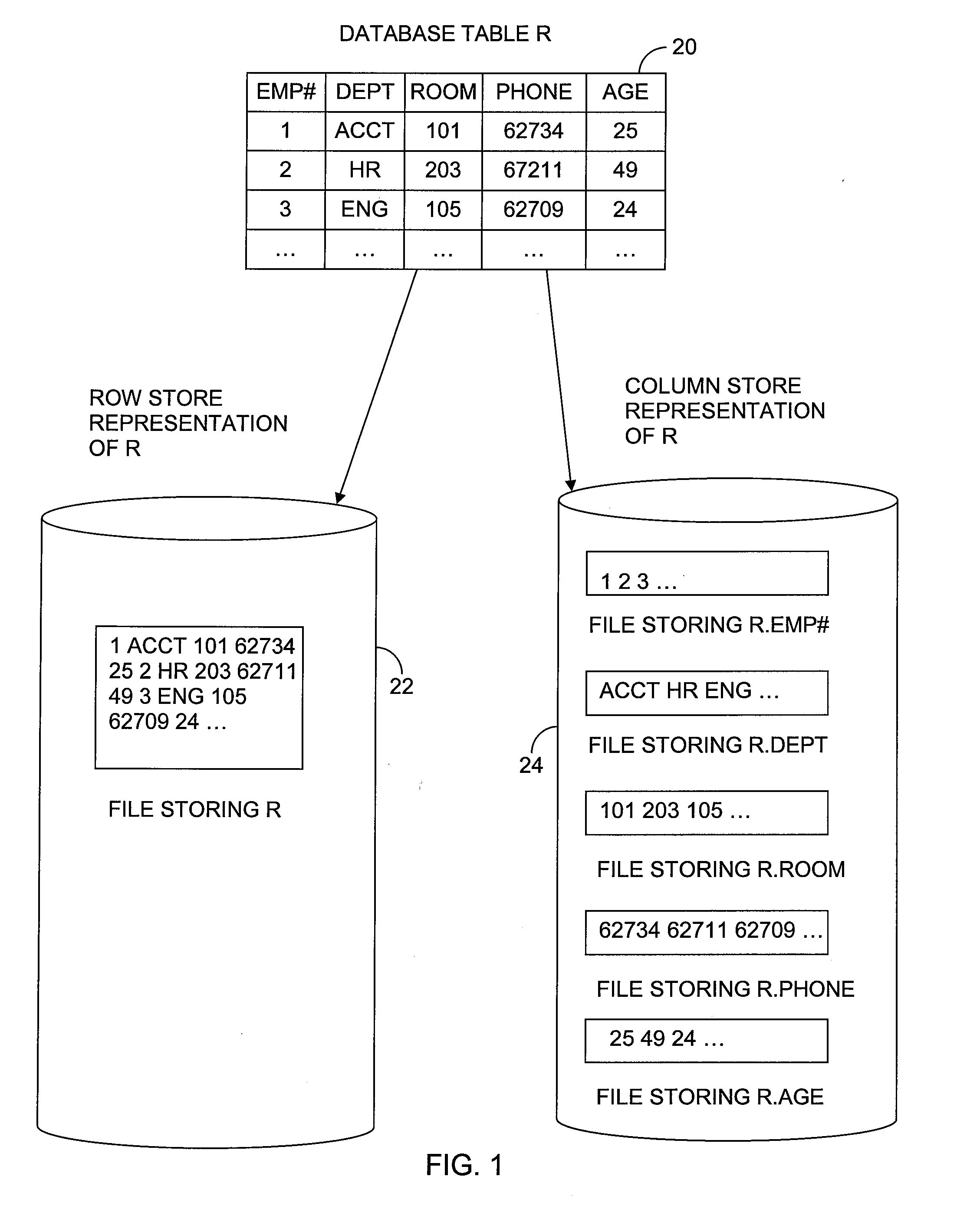

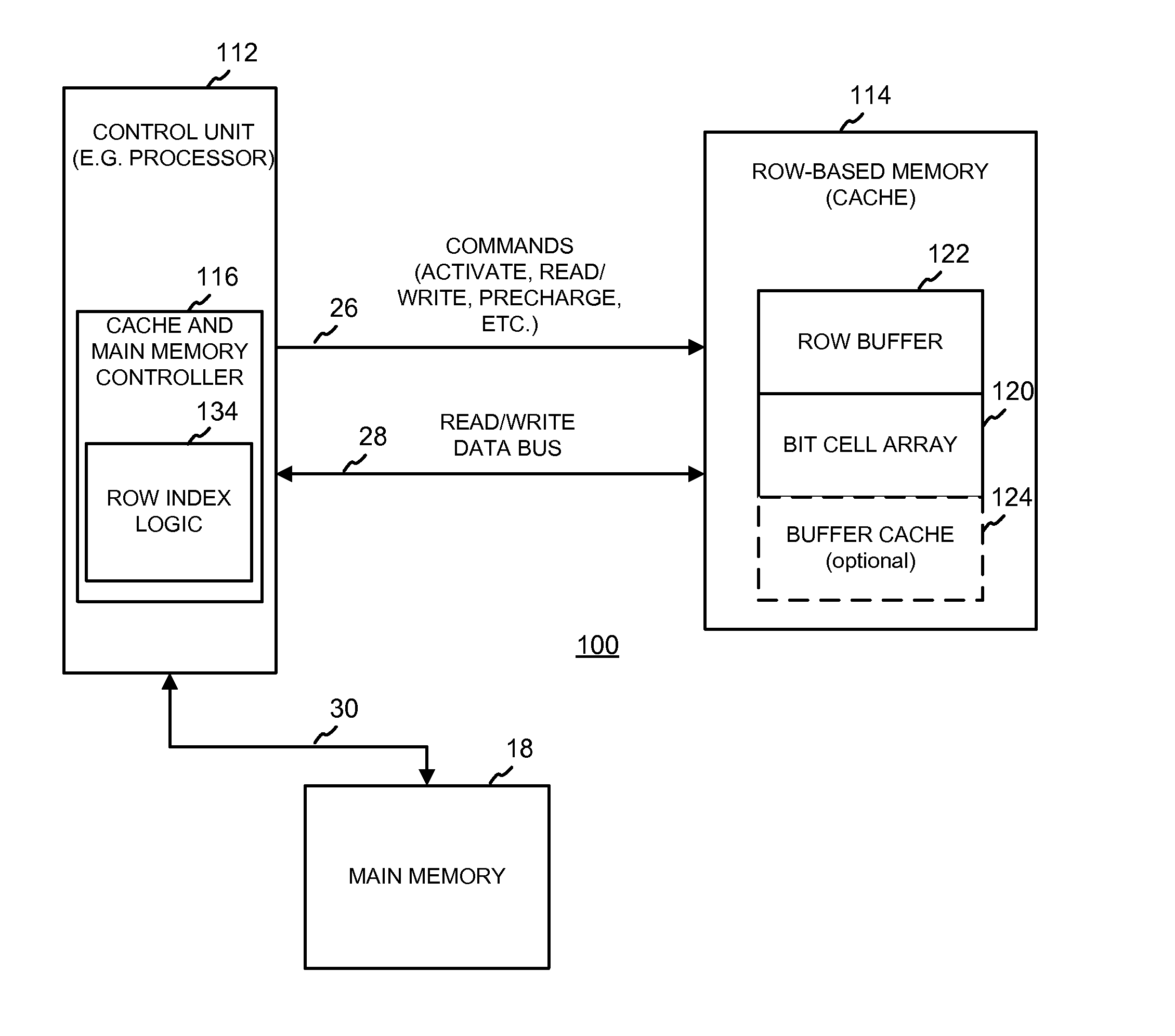

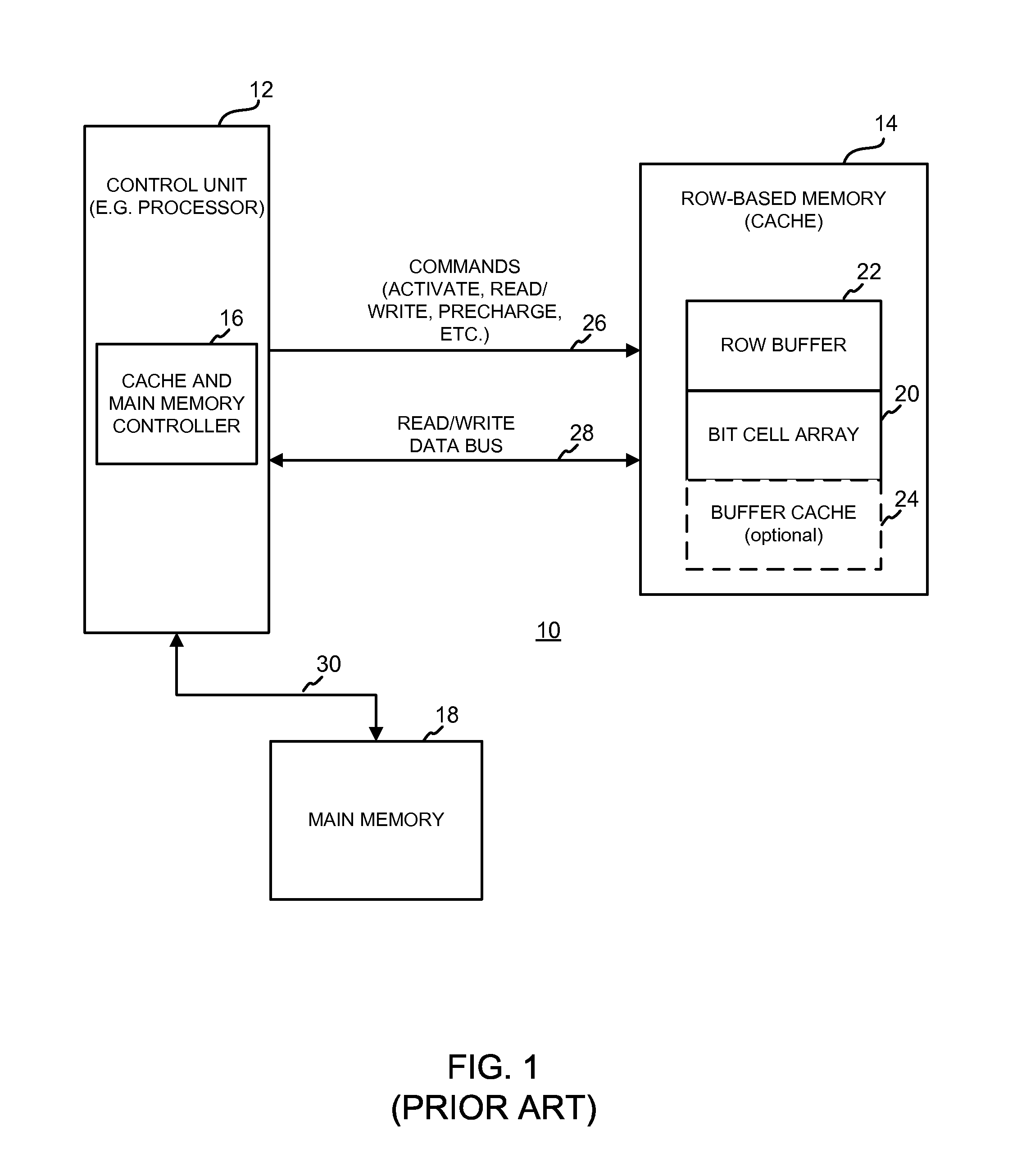

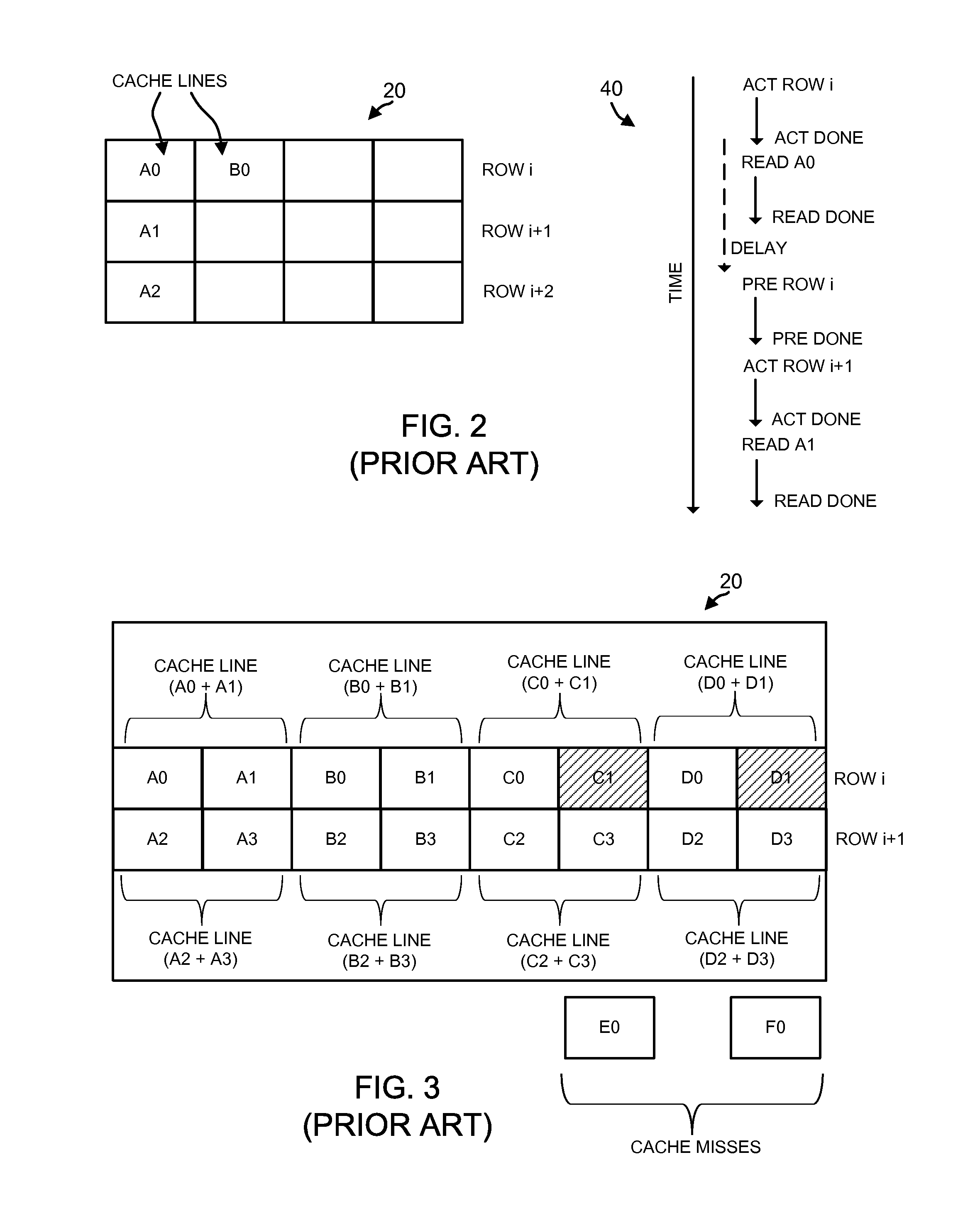

System and Method for Cache Organization in Row-Based Memories

Active · US20130238856A1 · Reduce power consumption · Improve caching capacity · Memory addressing/allocation/relocation · Computational logic · Parallel computing

The present disclosure relates to a method and system for mapping cache lines to a row-based cache. In particular, a method includes, in response to a plurality of memory access requests each including an address associated with a cache line of a main memory, mapping sequentially addressed cache lines of the main memory to a row of the row-based cache. A disclosed system includes row index computation logic operative to map sequentially addressed cache lines of a main memory to a row of a row-based cache in response to a plurality of memory access requests each including an address associated with a cache line of the main memory.

Owner:ADVANCED MICRO DEVICES INC
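
The abstract does not give the mapping formula. One plausible reading is sketched below: consecutive line numbers fill one row before moving to the next. The line size, lines per row, and row count are assumed values, and tag storage and replacement are omitted.

```python
LINE_SIZE = 64       # bytes per cache line (assumed)
LINES_PER_ROW = 32   # cache lines held by one row of the row-based cache (assumed)
NUM_ROWS = 1024      # rows in the row-based cache (assumed)

def row_index(address: int) -> int:
    """Sequentially addressed cache lines share a row until that row is full."""
    line_number = address // LINE_SIZE
    return (line_number // LINES_PER_ROW) % NUM_ROWS

def column_index(address: int) -> int:
    """Position of the line within its row."""
    return (address // LINE_SIZE) % LINES_PER_ROW
```

Under this mapping a sequential scan touches one row for 32 consecutive lines before moving on, instead of scattering neighbouring lines across rows as a conventional set-index mapping would.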

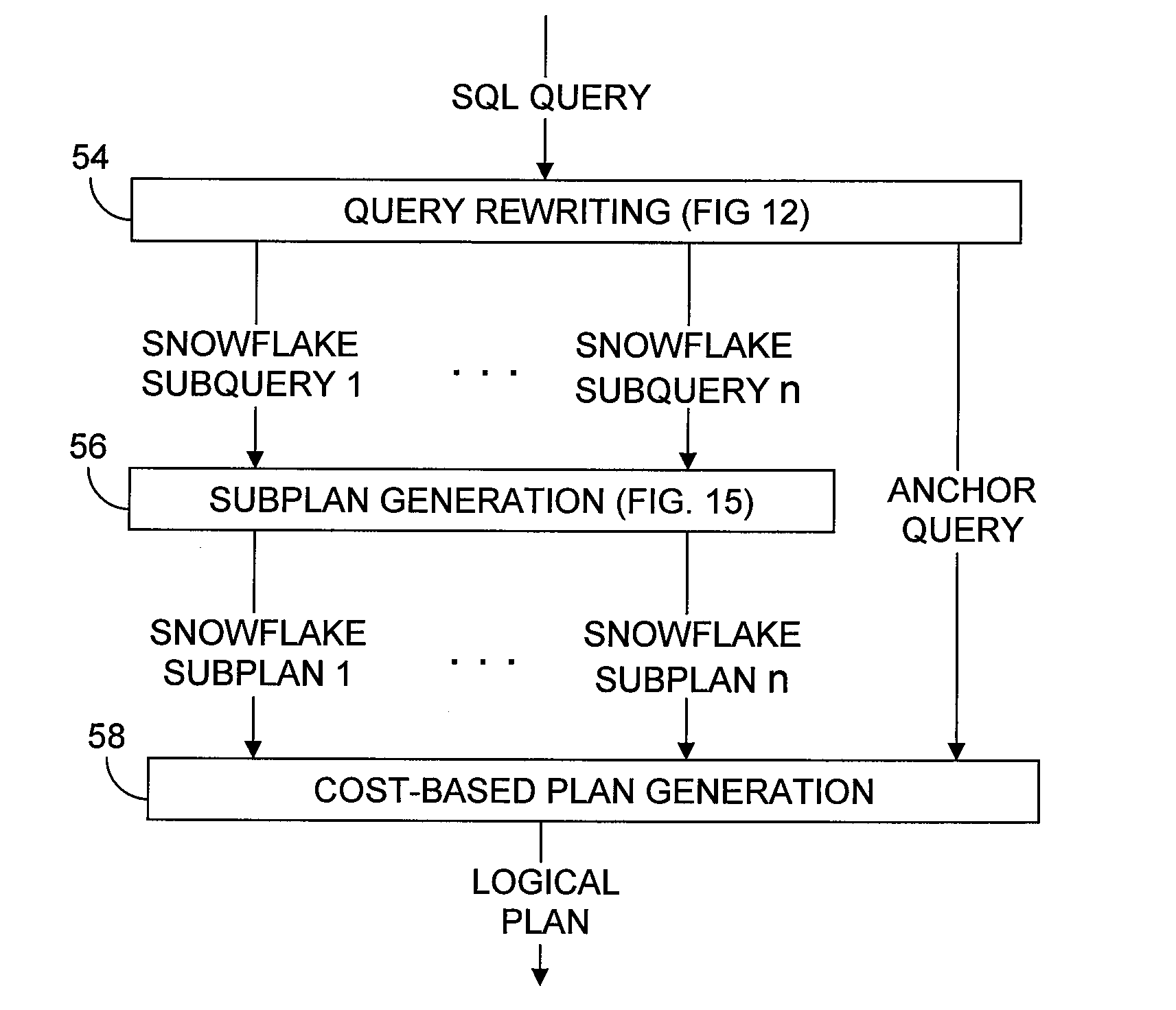

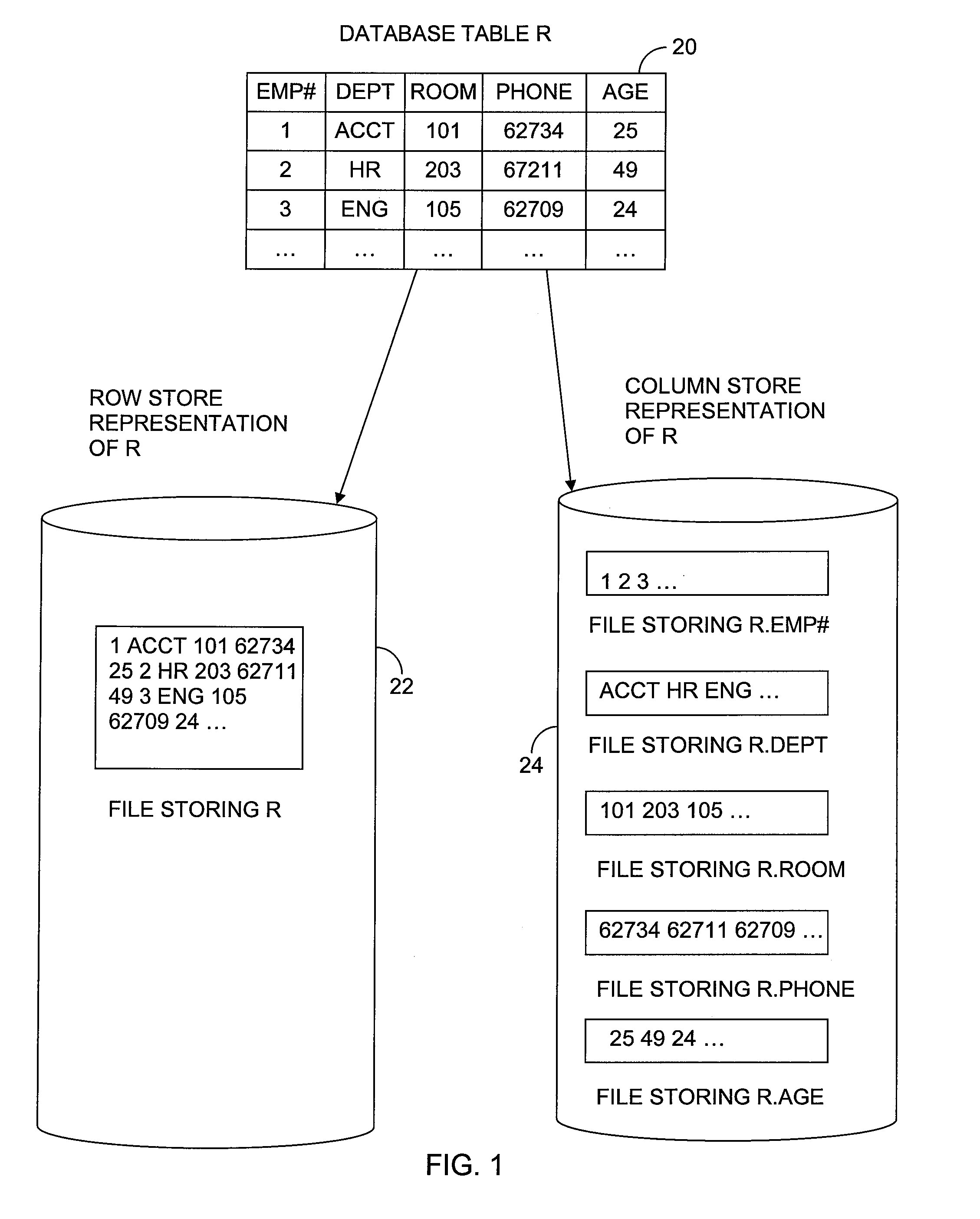

Optimizing snowflake schema queries

Active · US8671091B2 · Digital data processing details · Multi-dimensional databases · Snowflake schema · Computational logic

For a database query that defines a plurality of separate snowflake schemas, a query optimizer computes, separately for each snowflake schema, a logical access plan for obtaining from that schema's tables a record set that includes the data the query requests from those tables. The query optimizer also computes a logical access plan for obtaining the query's results from the record sets that executing those per-schema plans will produce.

Owner:MICRO FOCUS LLC

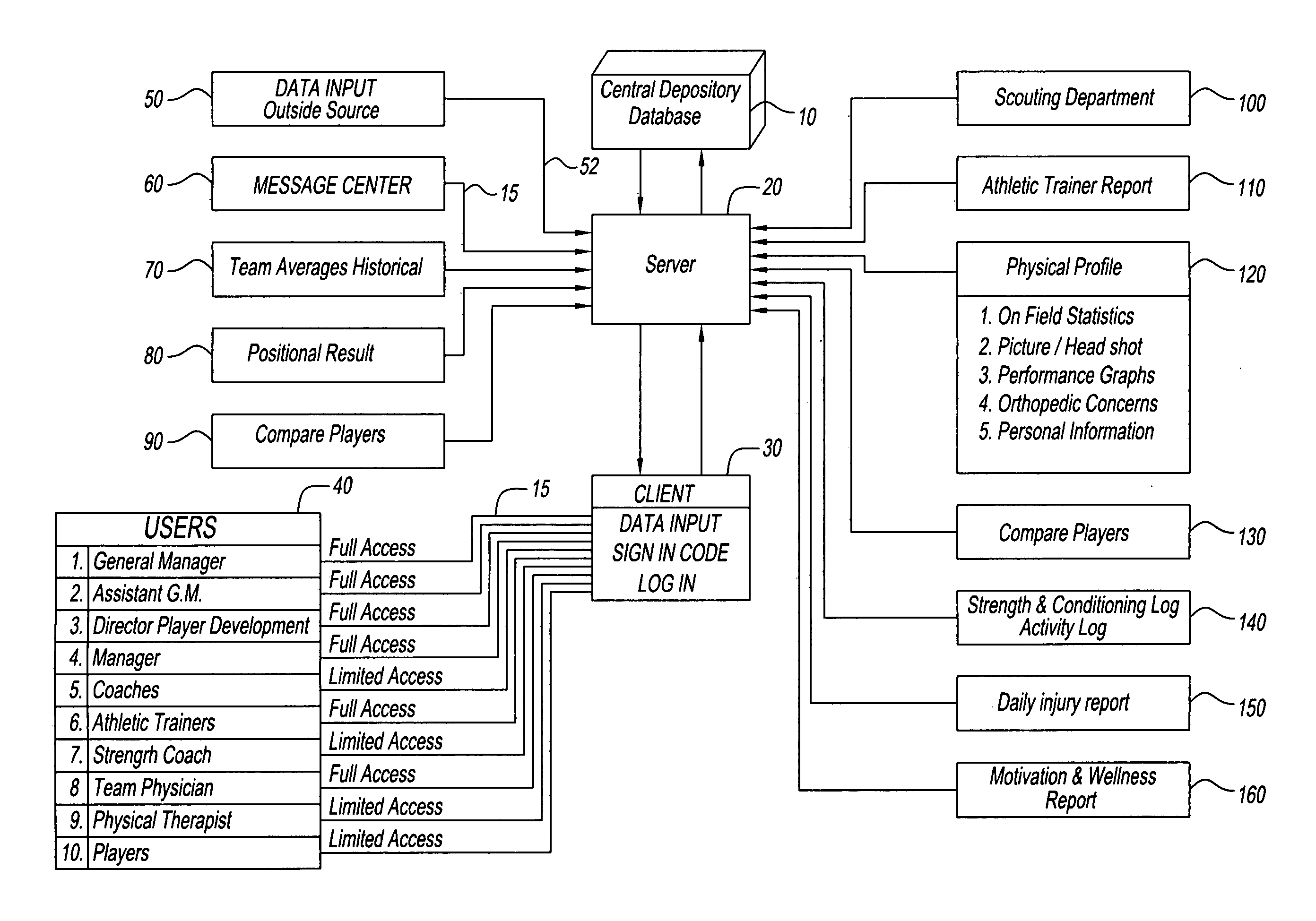

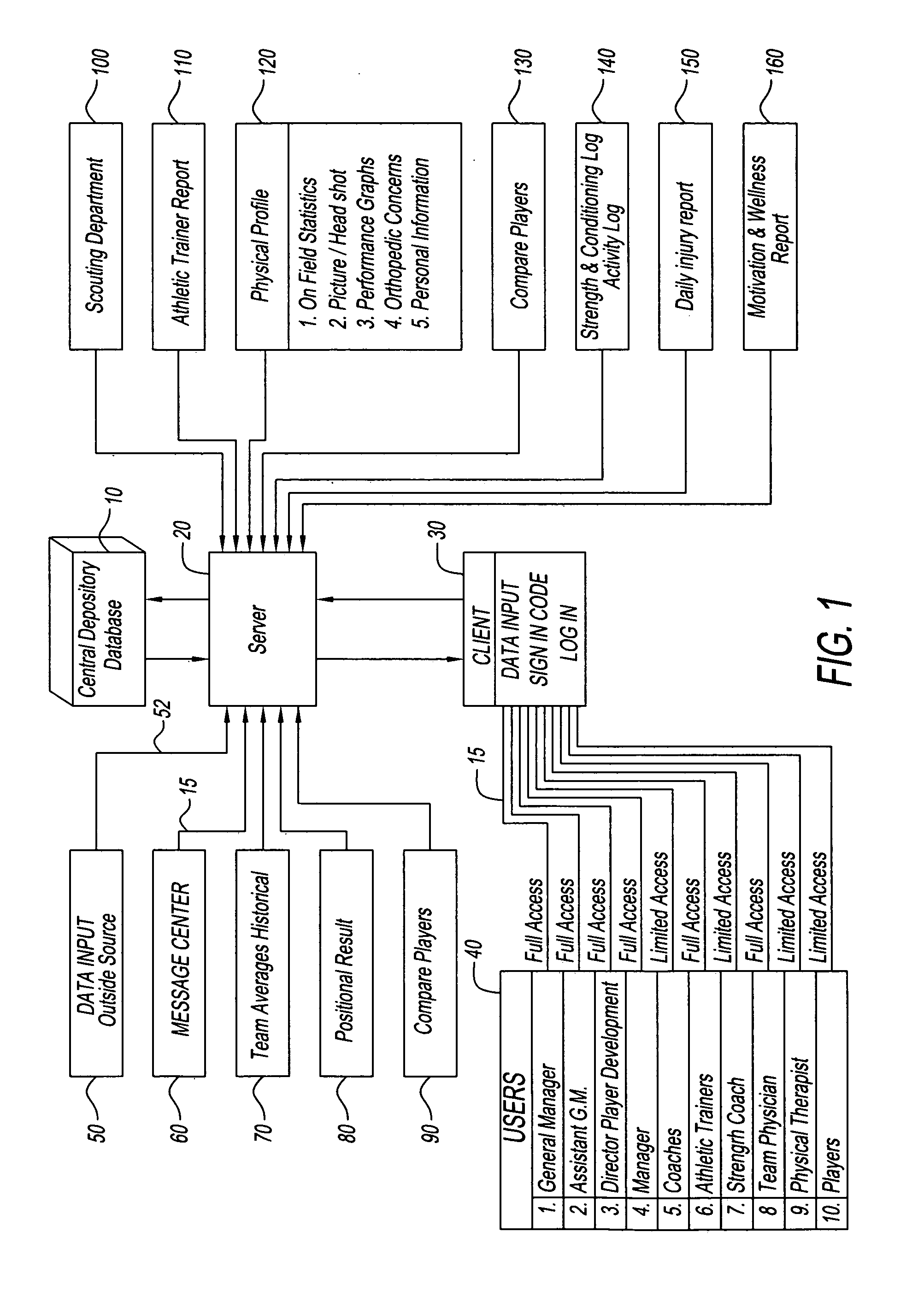

System and method for optimizing the physical development of athletes

Inactive · US20100057848A1 · Easy to use · Facilitate communication · Physical therapies and activities · Gymnastic exercising · Dashboard · Data store

The present invention discloses a computer system for providing athlete metrics, having a data storage tier, a server tier, a client tier, and a connectivity tier. The data storage tier may store player and team metrics data or performance data, along with external data. All data collection and storage may occur in real time, that is, contemporaneously with the occurrence of the event the data represents. The client tier may collect and display the player and team metrics data from a plurality of input sources. The server tier may facilitate communication between the client tier and the data storage tier, and may contain computer logic for parsing and organizing conditioning data that is recorded and reviewed at the client tier. The computational logic may be written in any of the computer languages disclosed herein, or in other languages, and enables the invention to establish a correlating relationship between player testing and evaluation metrics data, performance data, and team metrics. That relationship is then used to create forecasts, make decisions about the future, and provide feedback and direction for the day-to-day operation of a sports industry enterprise, such as a sports team. Use of the client is facilitated by a dashboard-style interface that standardizes the various implementations and applications of the invention.

Owner:MANGOLD JEFFREY E

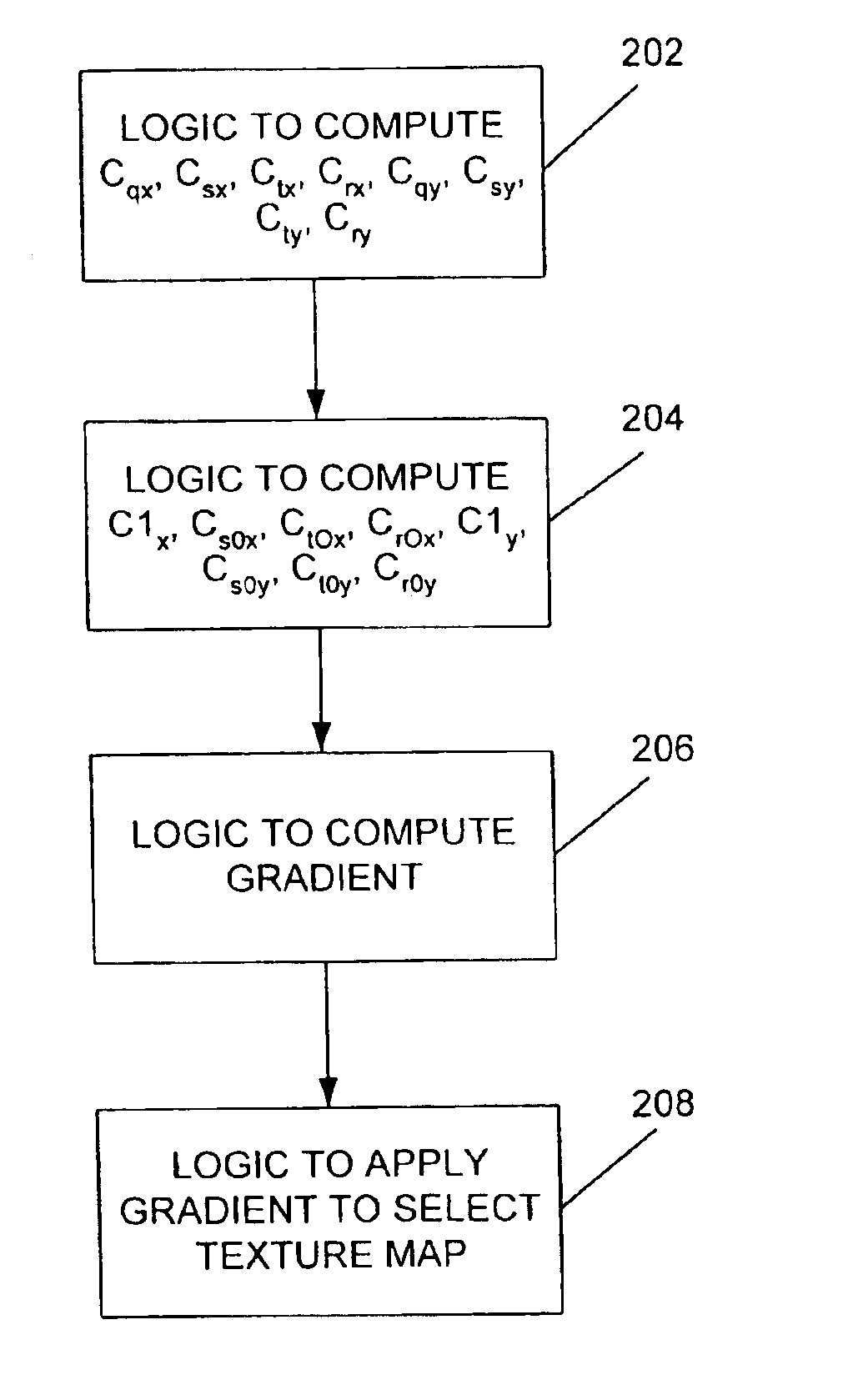

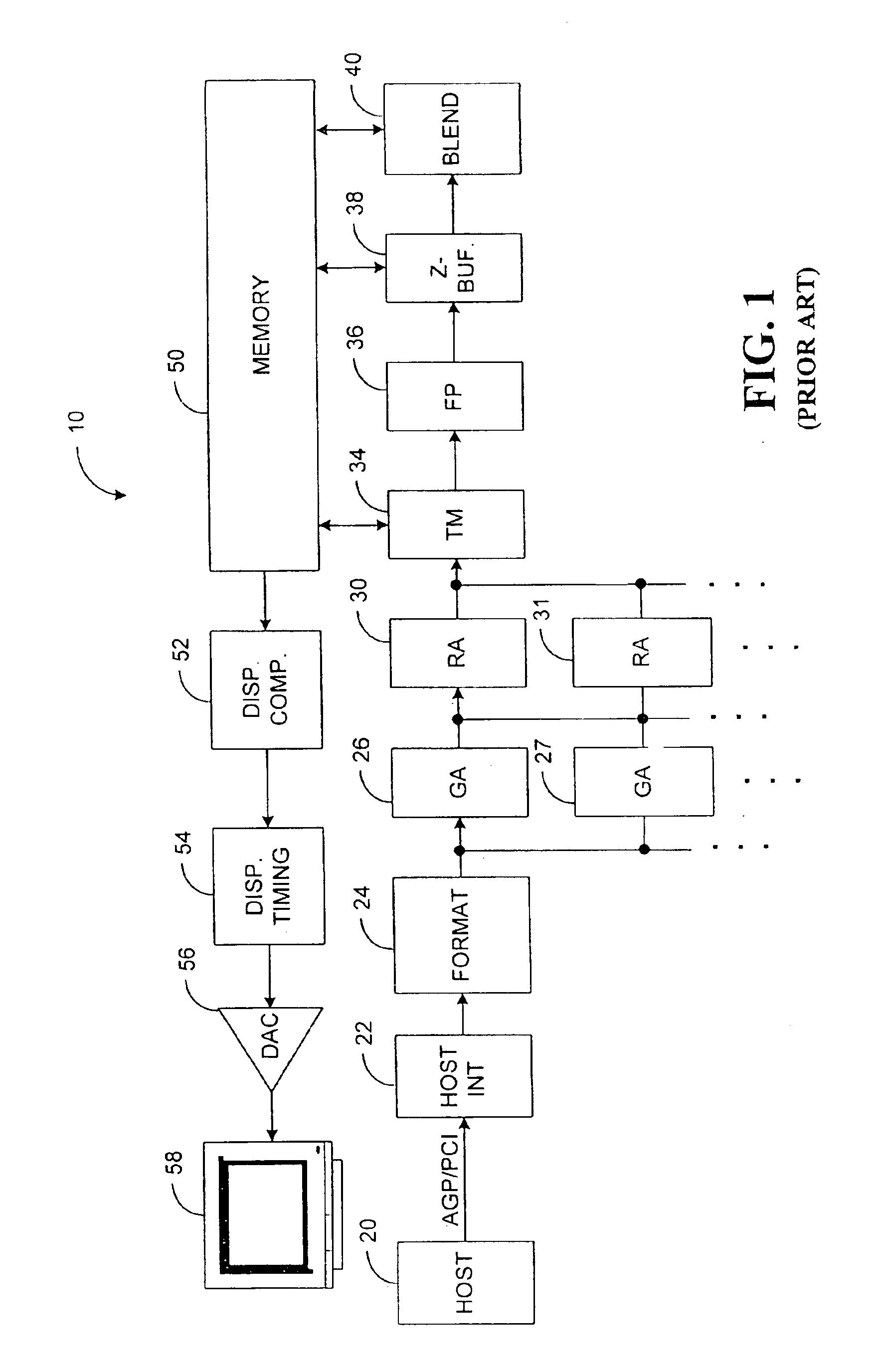

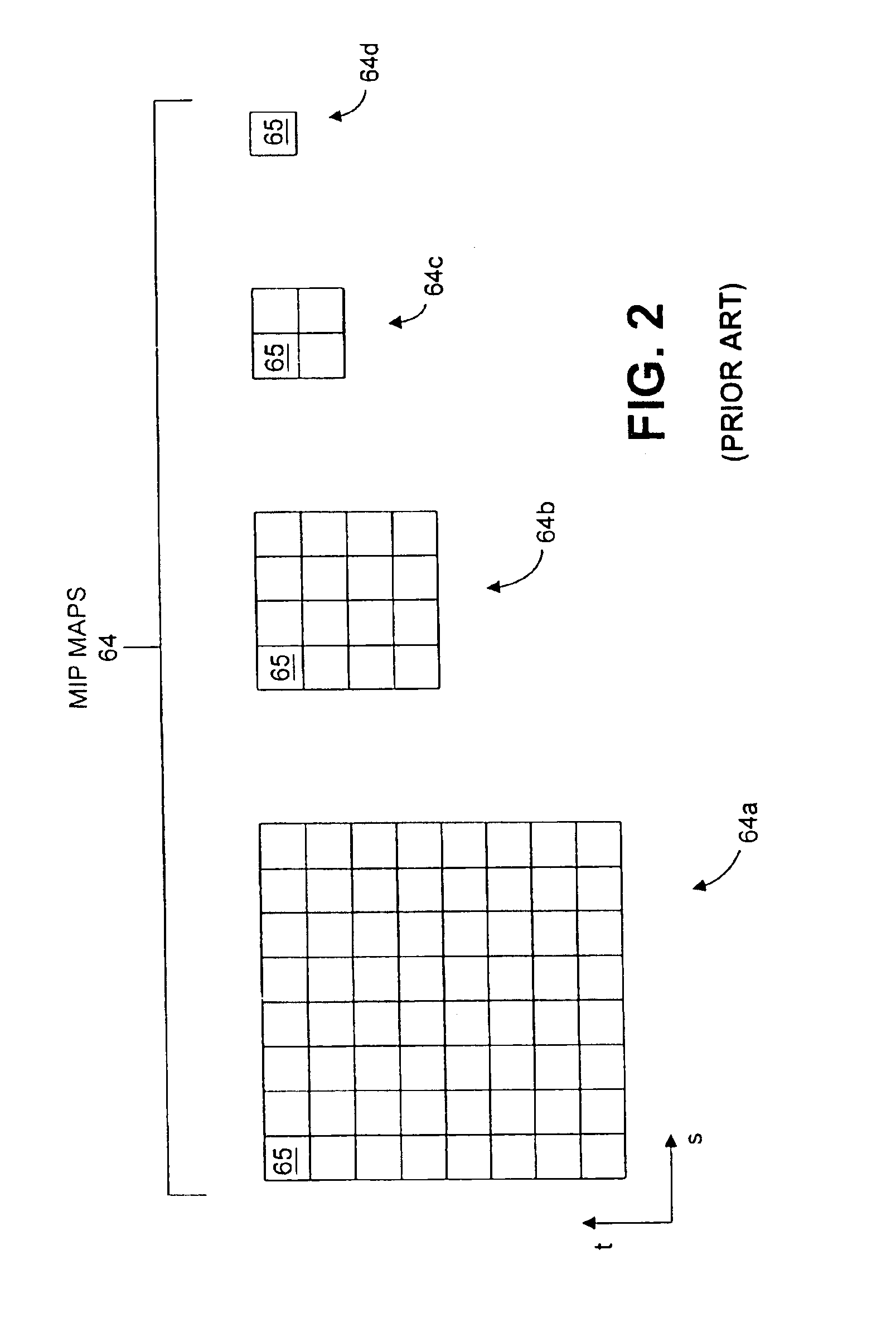

System and method for calculating a texture-mapping gradient

Systems and methods provide a more efficient and effective gradient computation. Specifically, in one embodiment, a method is provided for calculating a texture-mapping gradient, which comprises calculating constant values for use in a gradient-calculating equation, passing the constant values to logic configured to calculate the gradient, and computing the gradient using barycentric coordinates and the calculated constant values. In accordance with another embodiment, an apparatus is provided for calculating a texture-mapping gradient, which comprises logic for calculating constant values for use in a gradient-calculating equation, and logic for computing the gradient-calculating equation using barycentric coordinates and the calculated constant values. In accordance with another embodiment, a computer-readable medium is also provided that contains code (e.g., RTL logic) for generating the computational logic mentioned above.

Owner:HEWLETT PACKARD DEV CO LP
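
Under the simplifying assumption of affine (non-perspective) texture mapping, the gradient is constant over a triangle, so the "constant values" can be the partial derivatives of the barycentric weights, computed once per triangle. The sketch below shows that split; the function names are hypothetical, and perspective-correct mapping, which needs a per-pixel division, is not covered.

```python
def barycentric_constants(p0, p1, p2):
    """Per-triangle constants: partial derivatives of barycentric weights l1 and l2."""
    (x0, y0), (x1, y1), (x2, y2) = p0, p1, p2
    area2 = (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)   # twice the signed area
    if area2 == 0:
        raise ValueError("degenerate triangle")
    dl1 = ((y2 - y0) / area2, -(x2 - x0) / area2)            # (dl1/dx, dl1/dy)
    dl2 = (-(y1 - y0) / area2, (x1 - x0) / area2)            # (dl2/dx, dl2/dy)
    return dl1, dl2

def texture_gradient(consts, t0, t1, t2):
    """(dt/dx, dt/dy) of an attribute interpolated as l0*t0 + l1*t1 + l2*t2, l0 = 1 - l1 - l2."""
    dl1, dl2 = consts
    return tuple((t1 - t0) * a + (t2 - t0) * b for a, b in zip(dl1, dl2))
```

Calling `texture_gradient` once for u and once for v gives the four derivatives a texture unit typically uses to pick a mipmap level.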

Self-reconfigurable memristor-based analog resonant computer

Active · US8274312B2 · Logic circuits characterised by logic function · Digital storage · Computational logic · Inductor

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SEC OF THE AIR FORCE

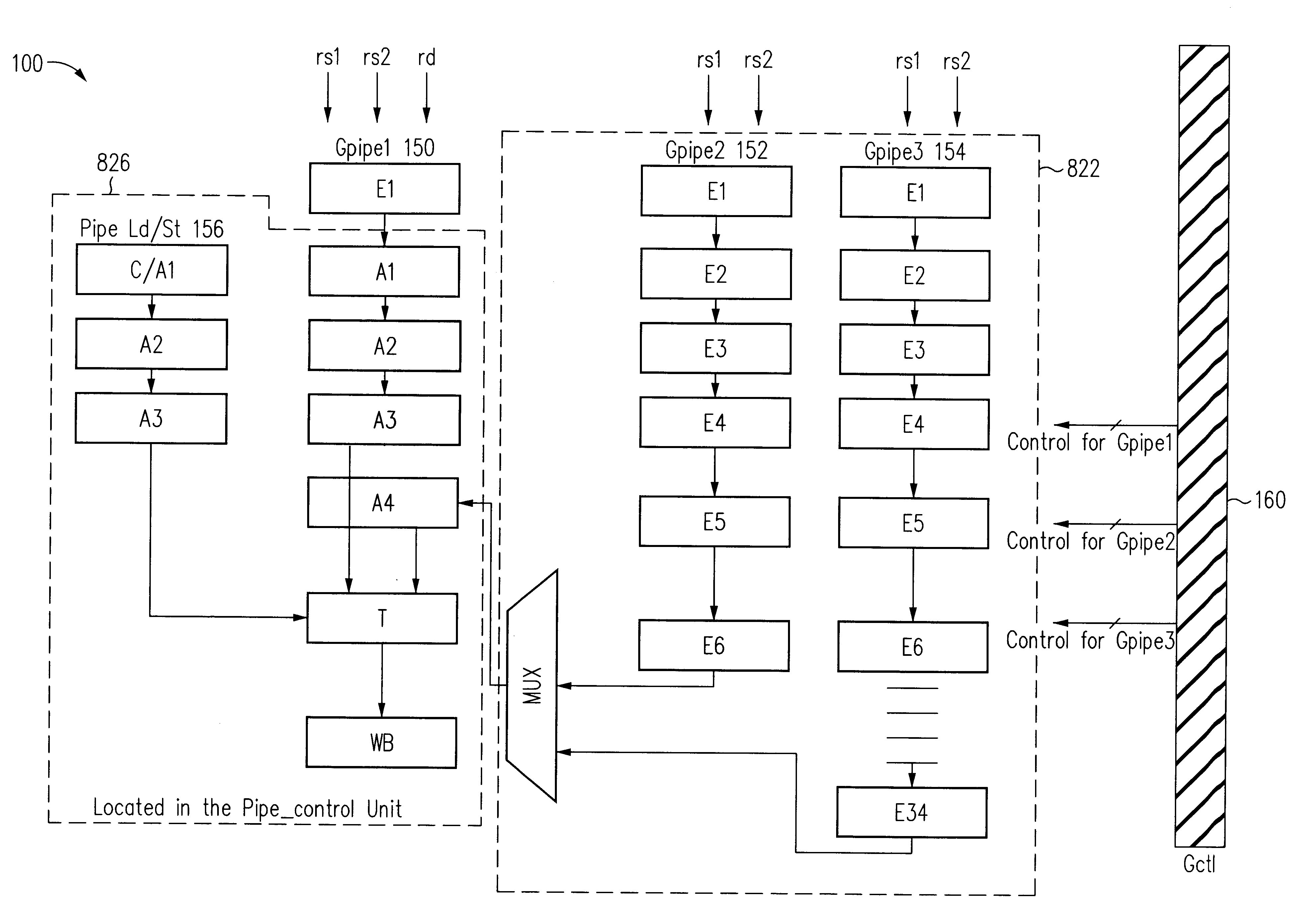

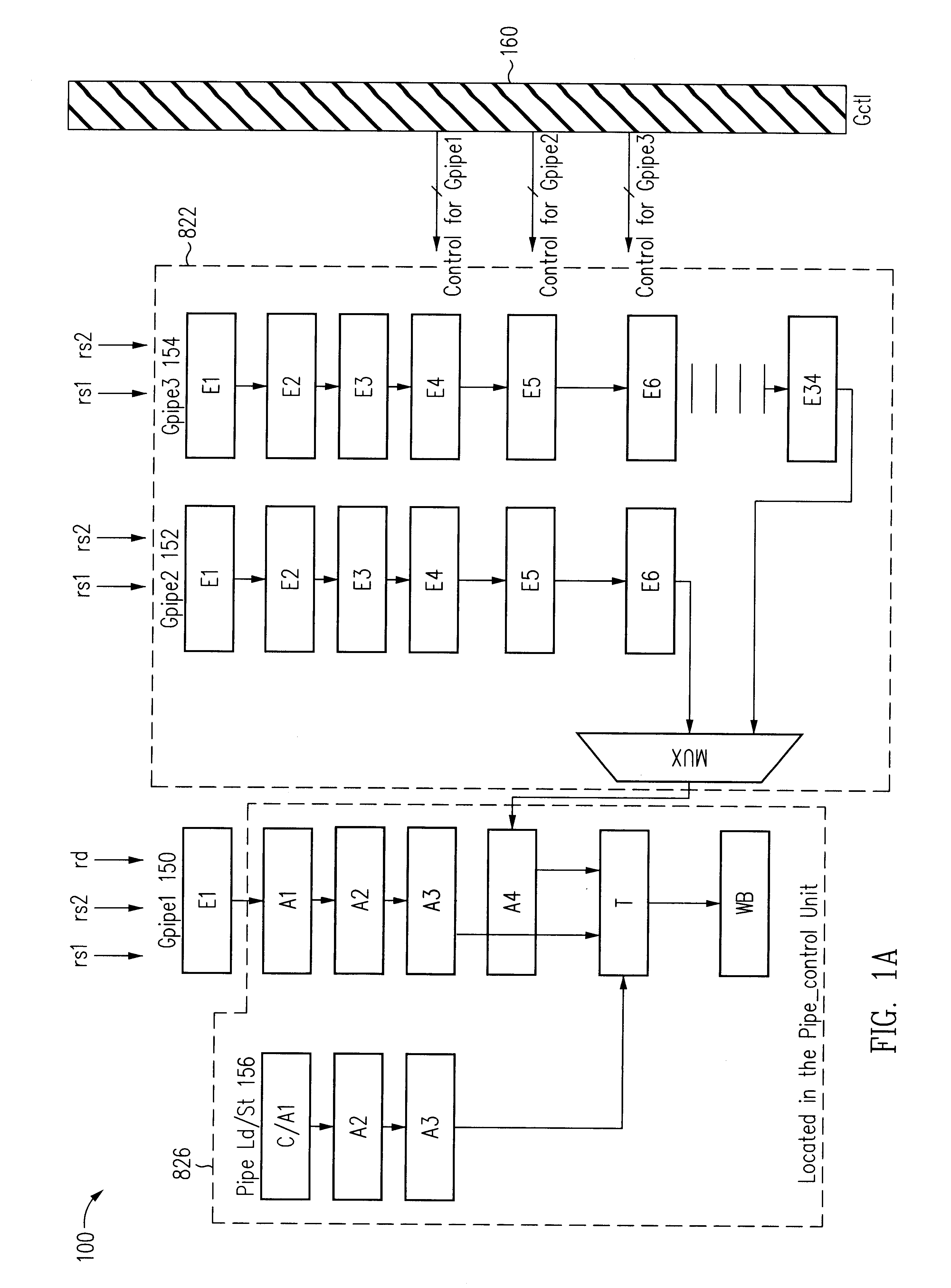

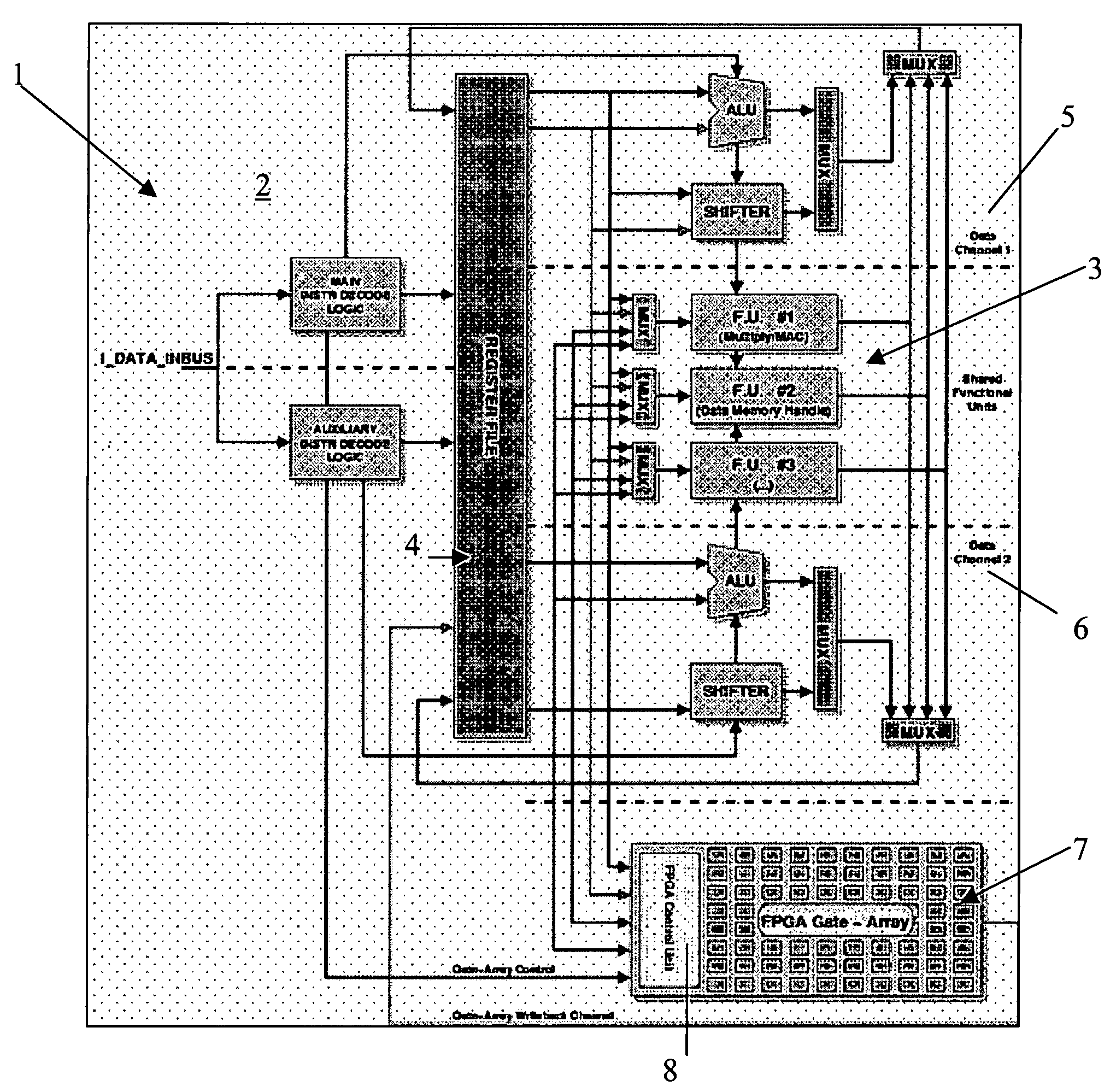

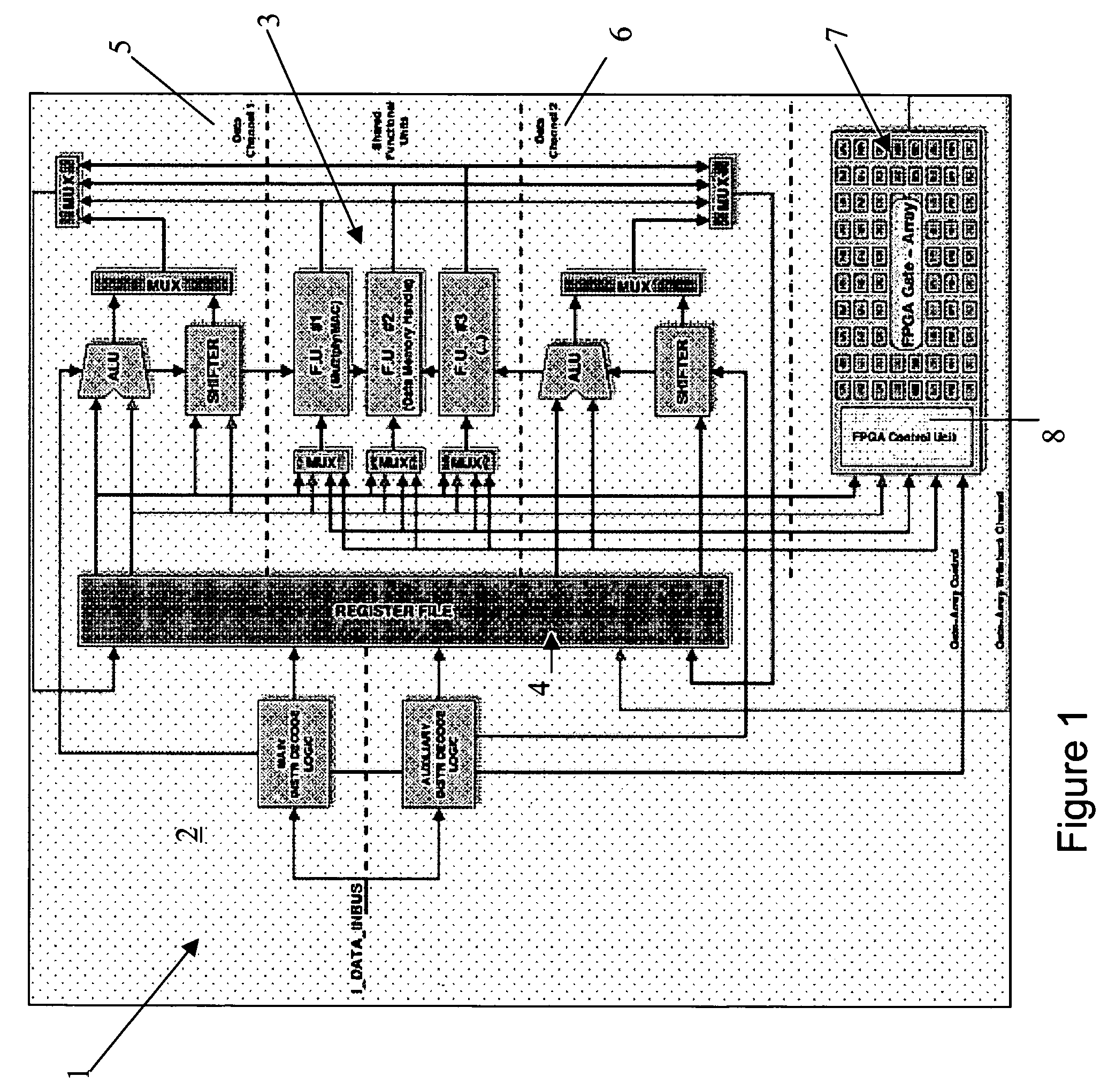

Digital architecture for reconfigurable computing in digital signal processing

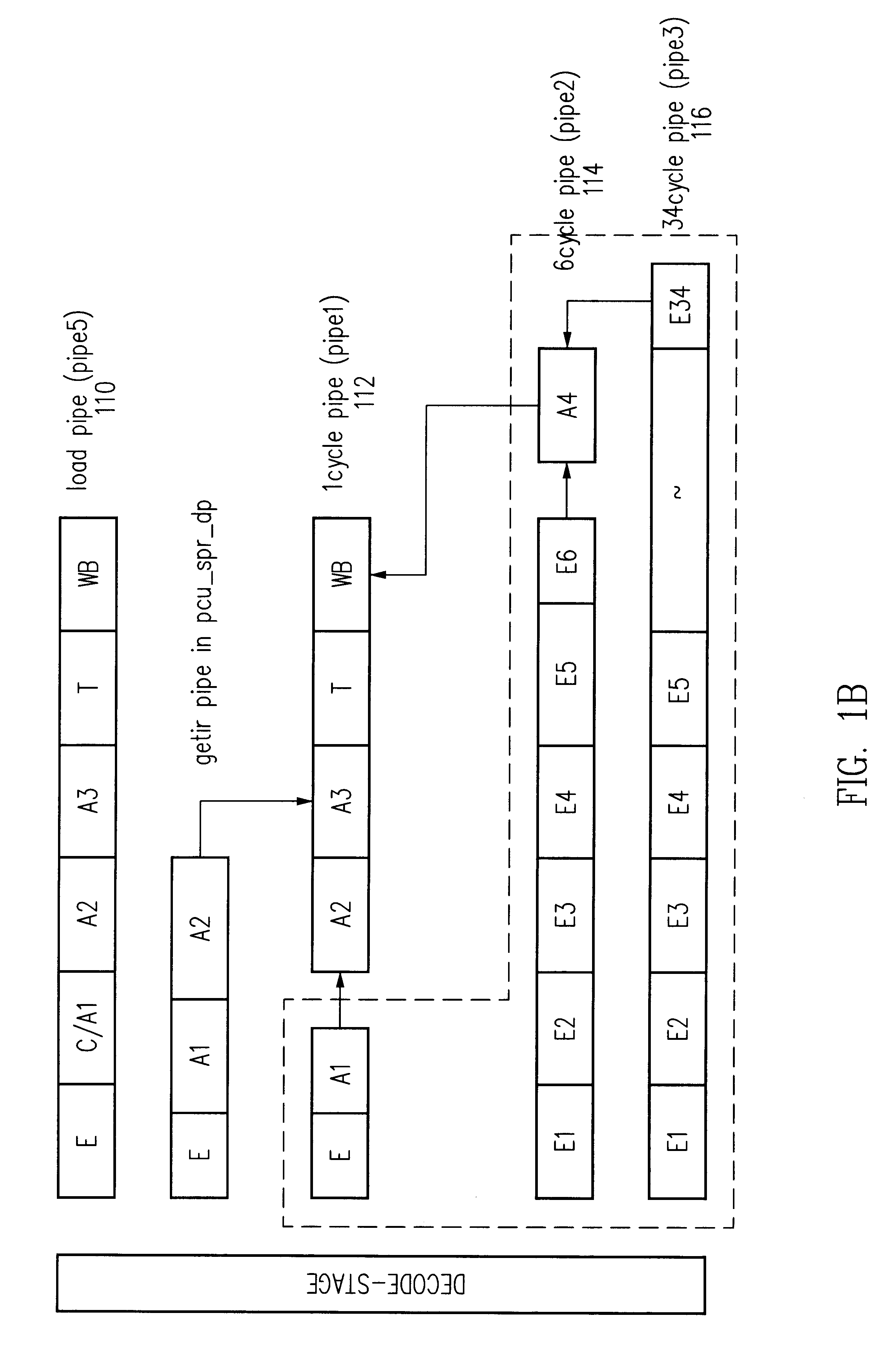

Active · US7225319B2 · Significant energy · Significant performance · Concurrent instruction execution · Architecture with single central processing unit · Microcontroller · Digital signal processing

A digital embedded architecture, including a microcontroller and a memory device and suitable for reconfigurable computing in digital signal processing, comprises: a processor structured to implement a Very Long Instruction Word (VLIW) processing mode by means of general-purpose hardwired computational logic; and an additional data-processing channel comprising a reconfigurable function unit based on a pipelined array of configurable look-up-table-based cells controlled by a special-purpose control unit, thus easing the processing of critical kernel algorithms.

Owner:STMICROELECTRONICS SRL

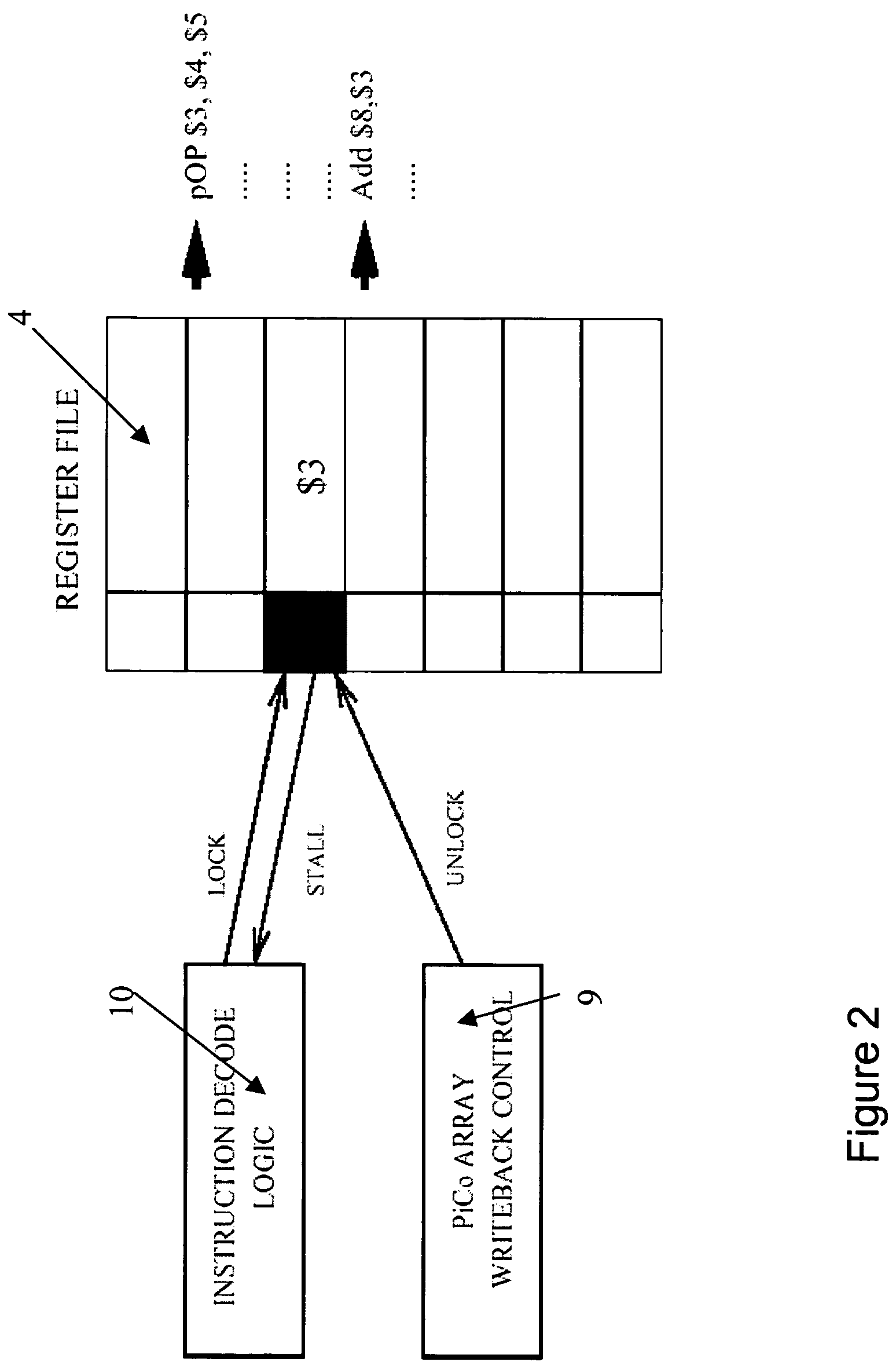

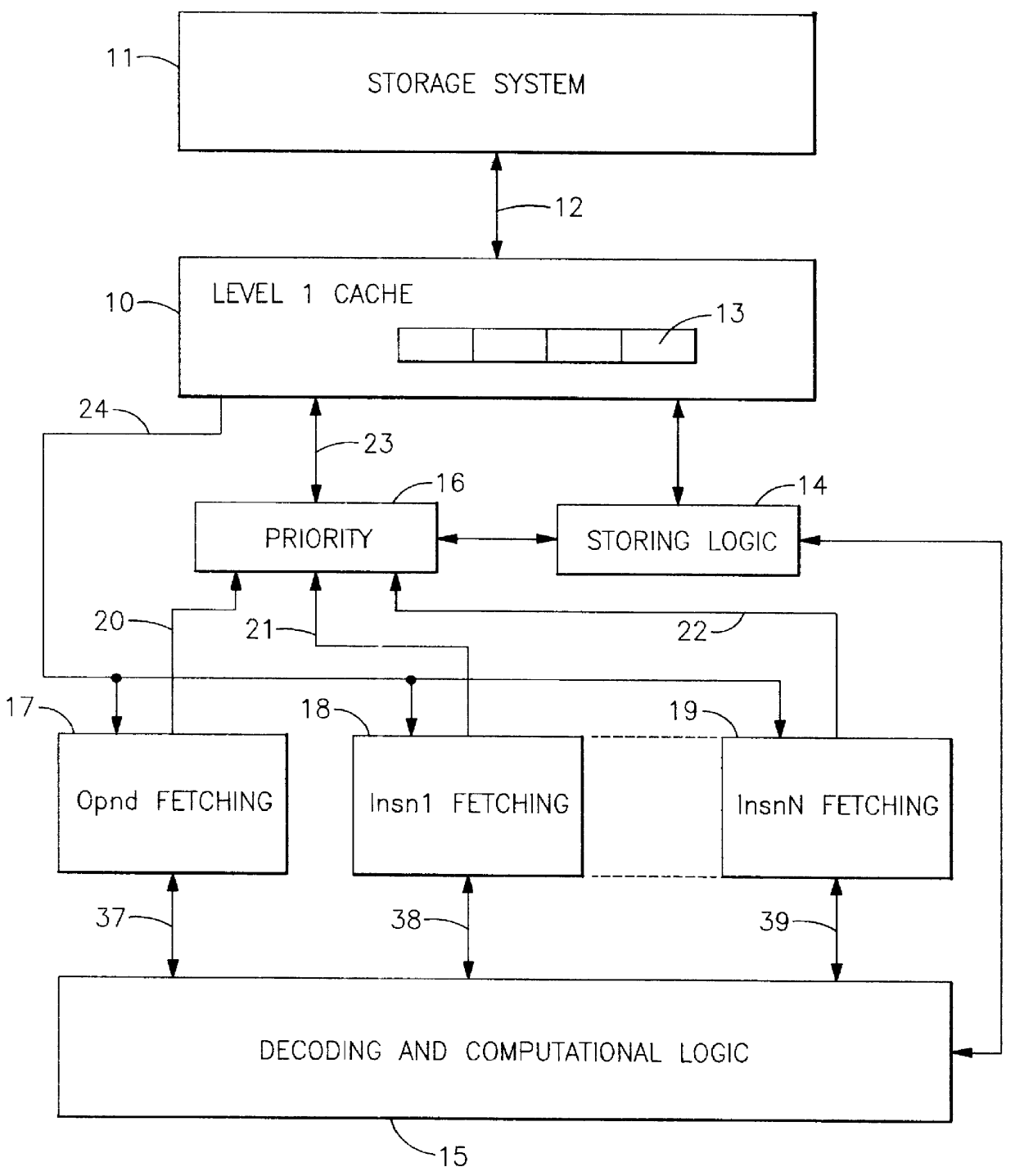

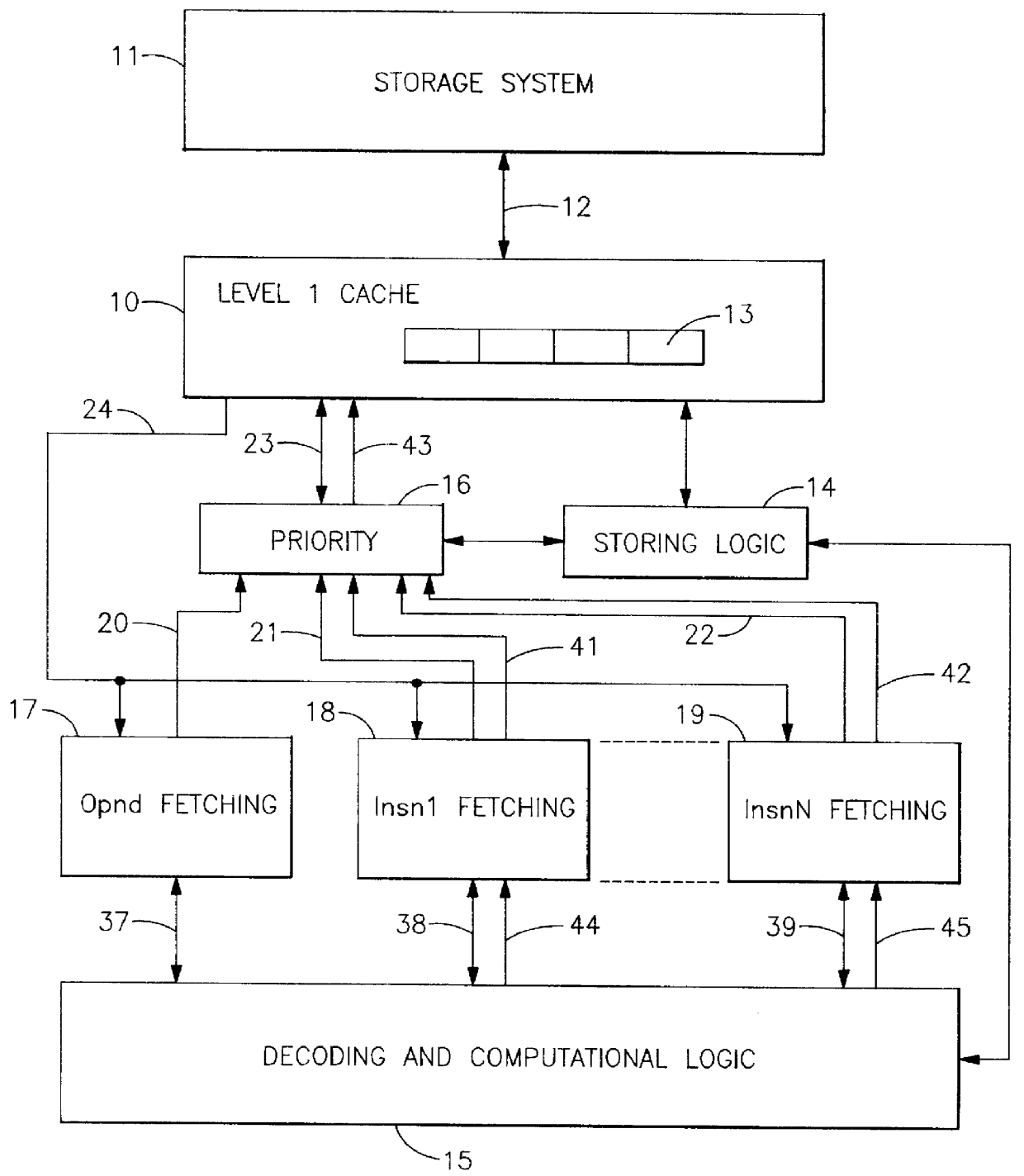

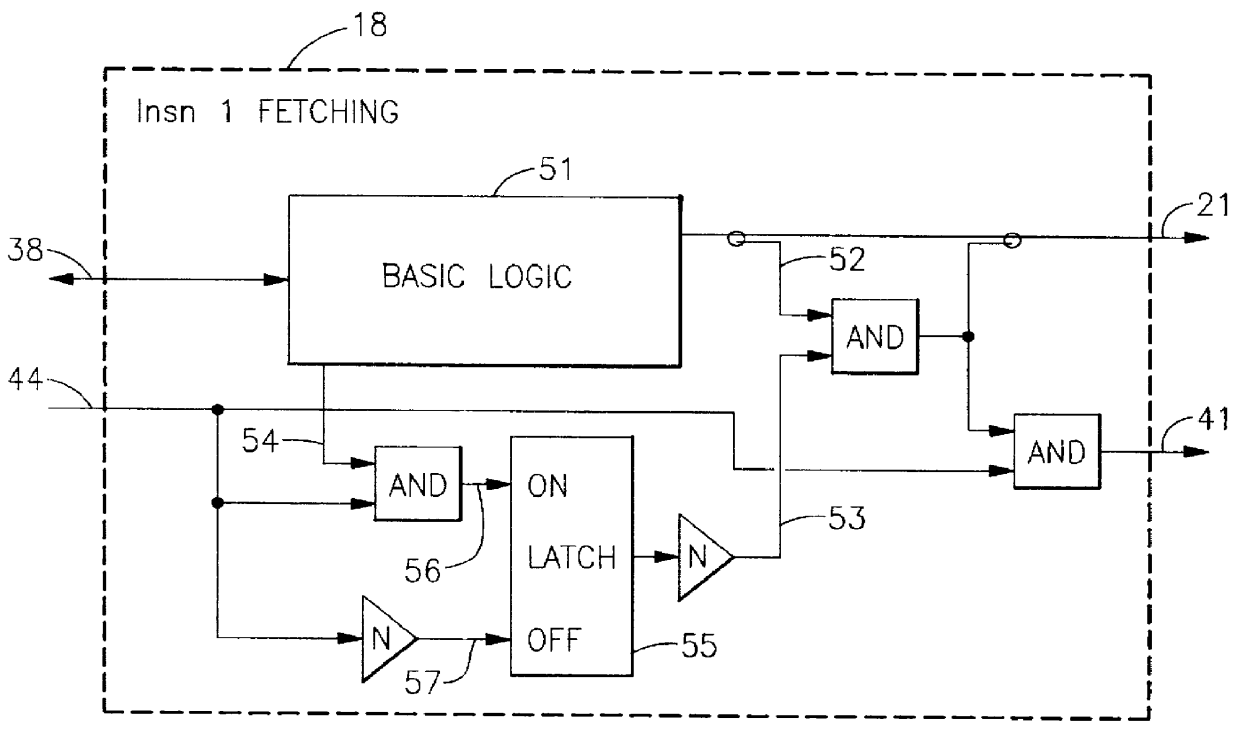

Computer with optimizing hardware for conditional hedge fetching into cache storage

Inactive · US6035392A · Digital computer details · Concurrent instruction execution · Computational logic · Parallel computing

A computer executes programs and has a structure for fetching instructions and/or operands along a path that may not be taken by the process being executed. The processor has a hierarchical memory structure in which data is loaded into the lines of a cache, and it includes block line fetch signal selection logic and computational logic with hedge selection logic that generate line fetch block signals for controlling hedging, that is, fetching instructions and/or operands along a path that may not be taken. To gain the best performance advantage, selected hedge fetches are made sensitive to whether the data is already in the cache: a selected hedge fetch signal accompanies each fetch request to the cache and, when ON, marks the request as a selected hedge fetch for which a line should not be loaded if it misses the cache. If the signal is ON and the data is not in the cache, the cache rejects the fetch. The rejected request is then repeated later, when it is more certain that the executing process wants the data, or never repeated if it is determined that the process does not need the data to be fetched.

Owner:IBM CORP
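
The fetch policy reduces to a small rule: a hedge fetch is served only on a hit, a rejected hedge fetch loads nothing, and the requester reissues it as a normal fetch only once the path is known to be taken. This Python model is a simplification with hypothetical names; it ignores cache capacity, replacement, and timing.

```python
class HedgeAwareCache:
    """Toy cache model: tracks which lines are resident; no capacity or replacement."""

    def __init__(self):
        self.lines = set()

    def fetch(self, line, hedge):
        """Return True if the request is satisfied, False if a hedge fetch is rejected."""
        if line in self.lines:
            return True              # hit: data is supplied whether or not the fetch is hedged
        if hedge:
            return False             # miss with the hedge signal ON: reject and load nothing
        self.lines.add(line)         # ordinary miss: load the line
        return True

def hedged_request(cache, line, path_taken):
    """Issue a hedge fetch; after a rejection, repeat only if the path turns out to be taken."""
    if cache.fetch(line, hedge=True):
        return "hit"
    if path_taken:
        cache.fetch(line, hedge=False)   # repeat later, now as a normal fetch
        return "refetched"
    return "dropped"                     # the process does not need the data: never repeat
```

The payoff is that a mispredicted path never pollutes the cache or ties up the memory hierarchy with a miss it did not need.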

Distributed Storage and Distributed Processing Query Statement Reconstruction in Accordance with a Policy

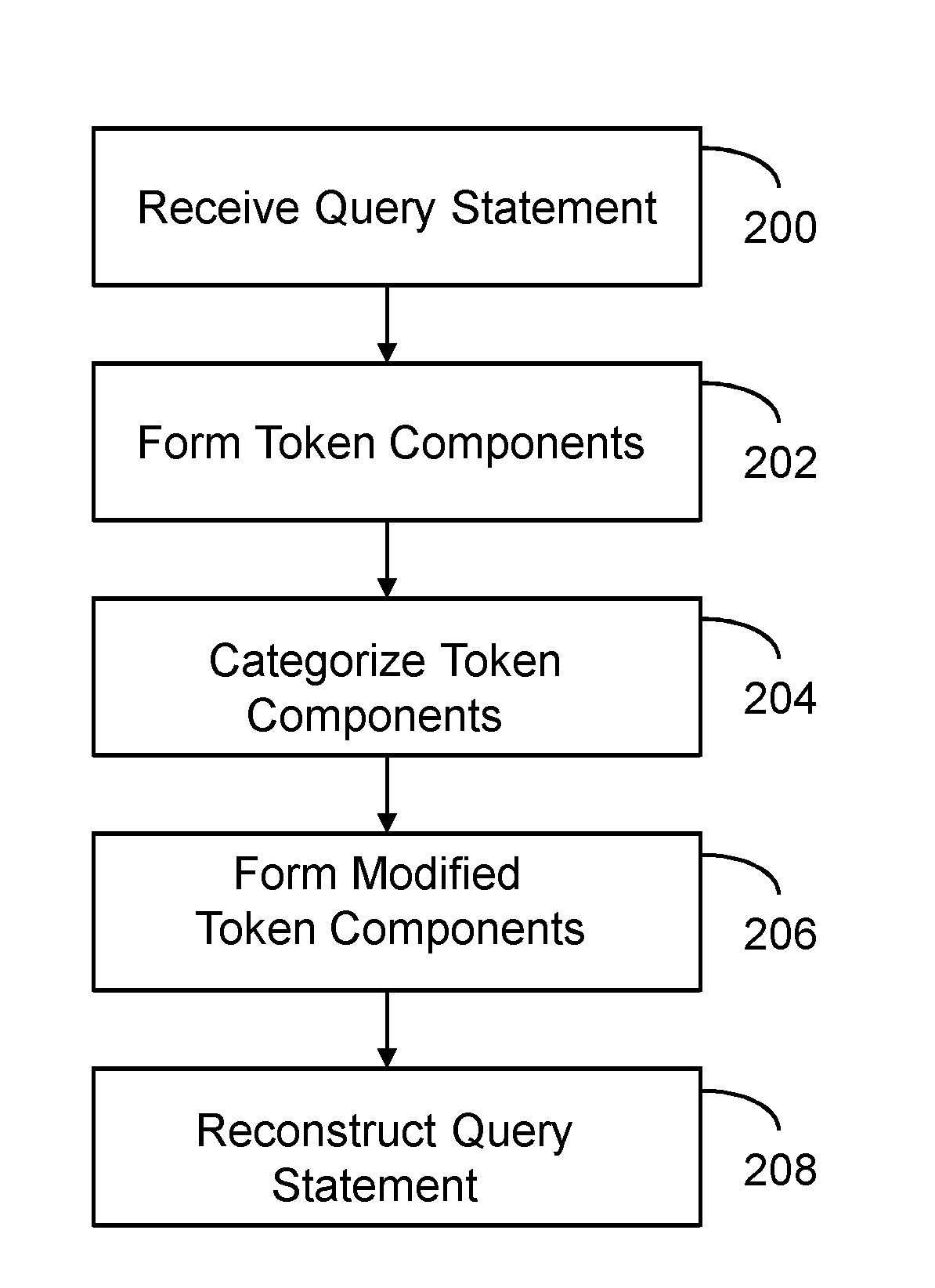

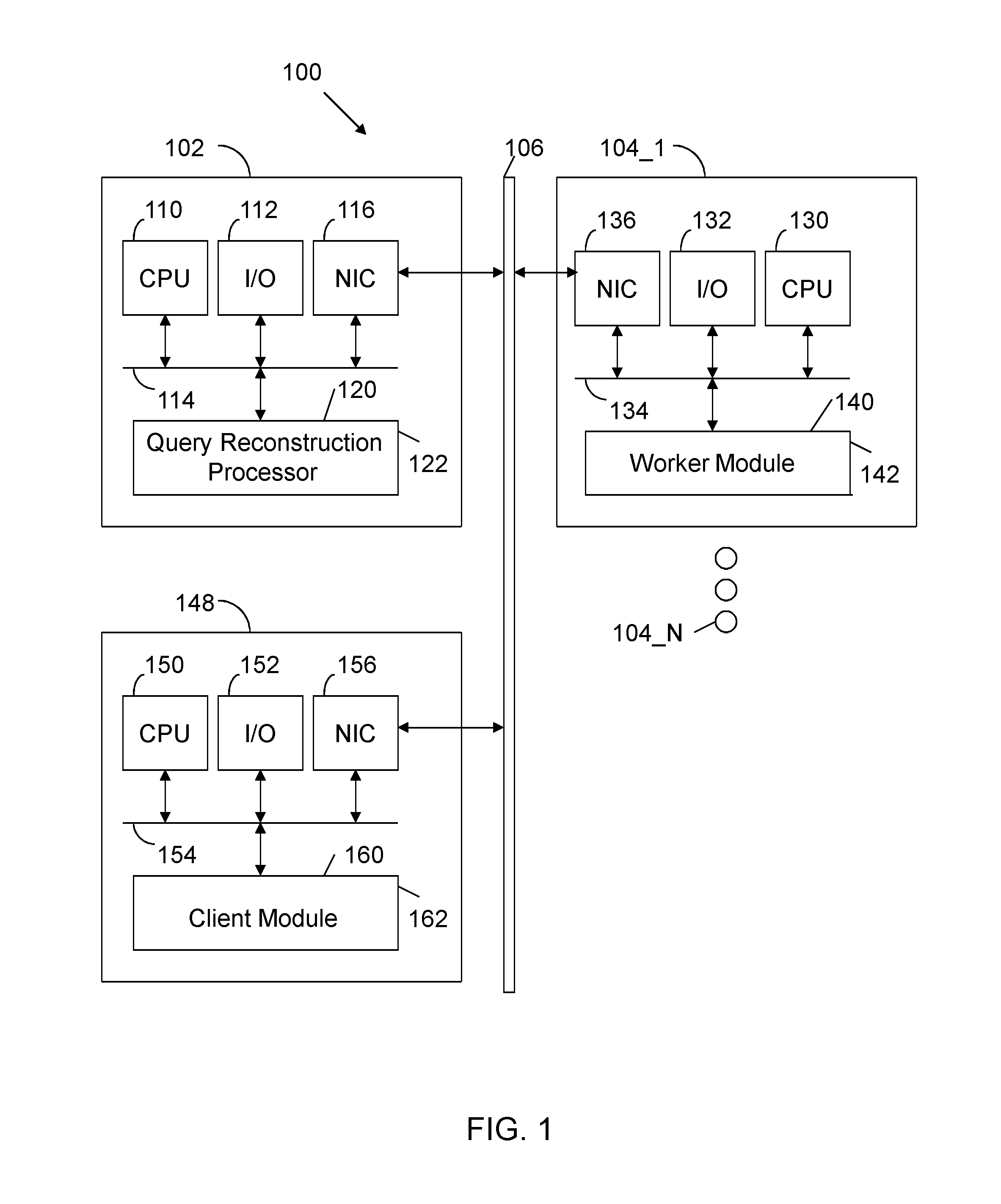

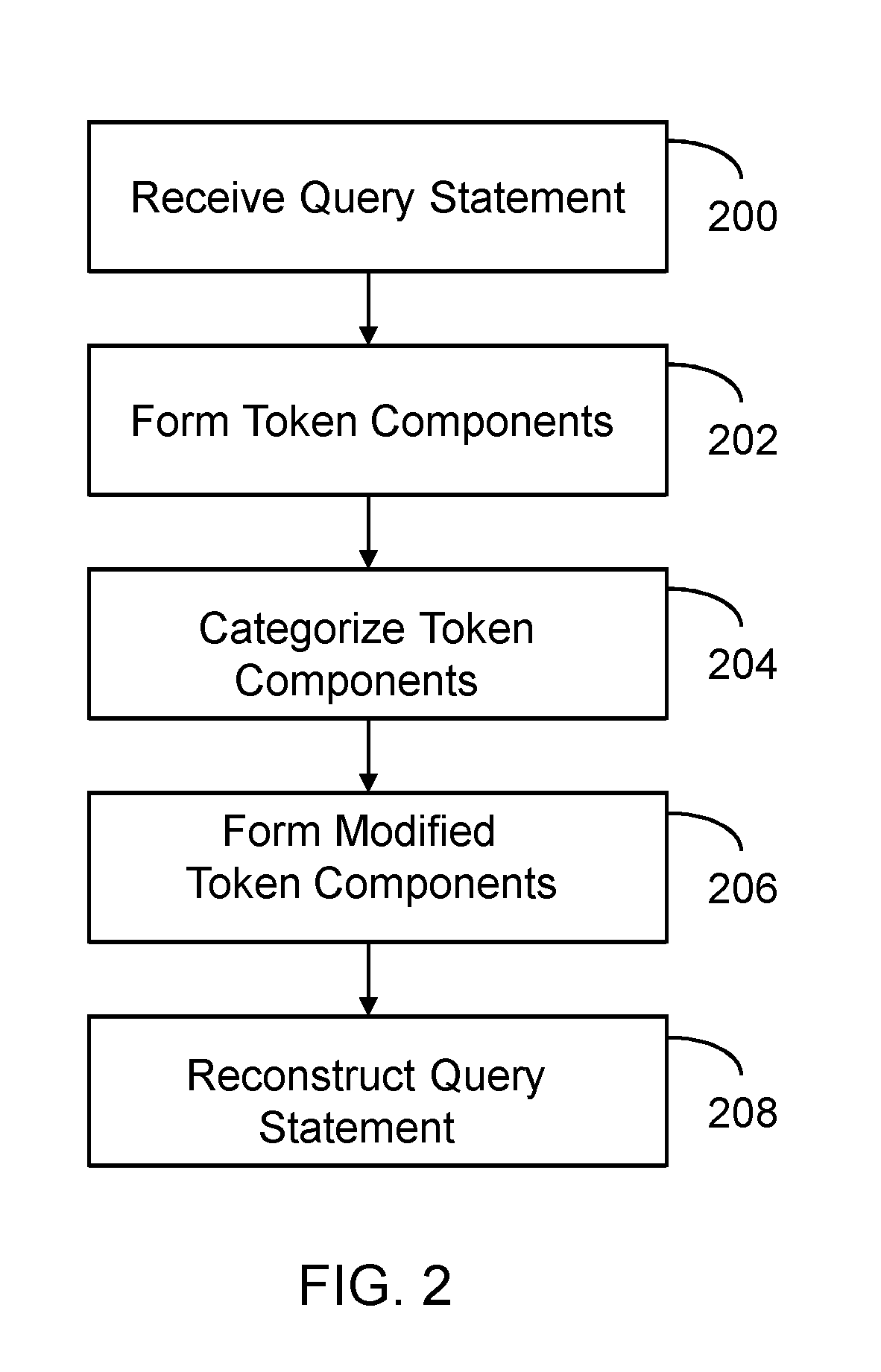

A non-transitory computer readable storage medium has instructions executed by a processor to receive a query statement. The query statement is one of many distributed storage and distributed processing query statements with unique data access methods. Token components are formed from the query statement. The token components are categorized as data components or logic components. Modified token components are formed from the token components in accordance with a policy. The query statement is reconstructed with the modified token components and original computational logic and control logic associated with the query statement.

Owner:MICROSOFT TECH LICENSING LLC
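
The token pipeline can be sketched as follows. The assumptions are ours, not the patent's: a regular-expression tokenizer rather than a real query parser, literals treated as the "data components", everything else treated as logic, and an example policy that replaces each literal with a `?` placeholder. Whitespace is kept as its own token so the reconstructed statement preserves the original layout.

```python
import re

# Hypothetical tokenizer: whitespace, string literals ('' escapes a quote), numbers, words, symbols.
TOKEN_PATTERN = re.compile(
    r"(?P<ws>\s+)|(?P<string>'(?:[^']|'')*')|(?P<number>\d+(?:\.\d+)?)"
    r"|(?P<word>\w+)|(?P<op>[^\s\w])"
)
DATA_KINDS = {"string", "number"}   # assumption: literals are the data components

def tokenize(query):
    """Return (kind, text) pairs; kind is the name of the group that matched."""
    return [(m.lastgroup, m.group()) for m in TOKEN_PATTERN.finditer(query)]

def reconstruct(query, policy):
    """Apply the policy to data tokens; keep logic tokens (keywords, names, operators) verbatim."""
    return "".join(policy(kind, text) if kind in DATA_KINDS else text
                   for kind, text in tokenize(query))

def parameterize(kind, text):
    """Example policy: replace every literal with a positional placeholder."""
    return "?"
```

Because only data tokens pass through the policy, the statement's computational and control logic come back unchanged, which is the property the abstract emphasizes.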

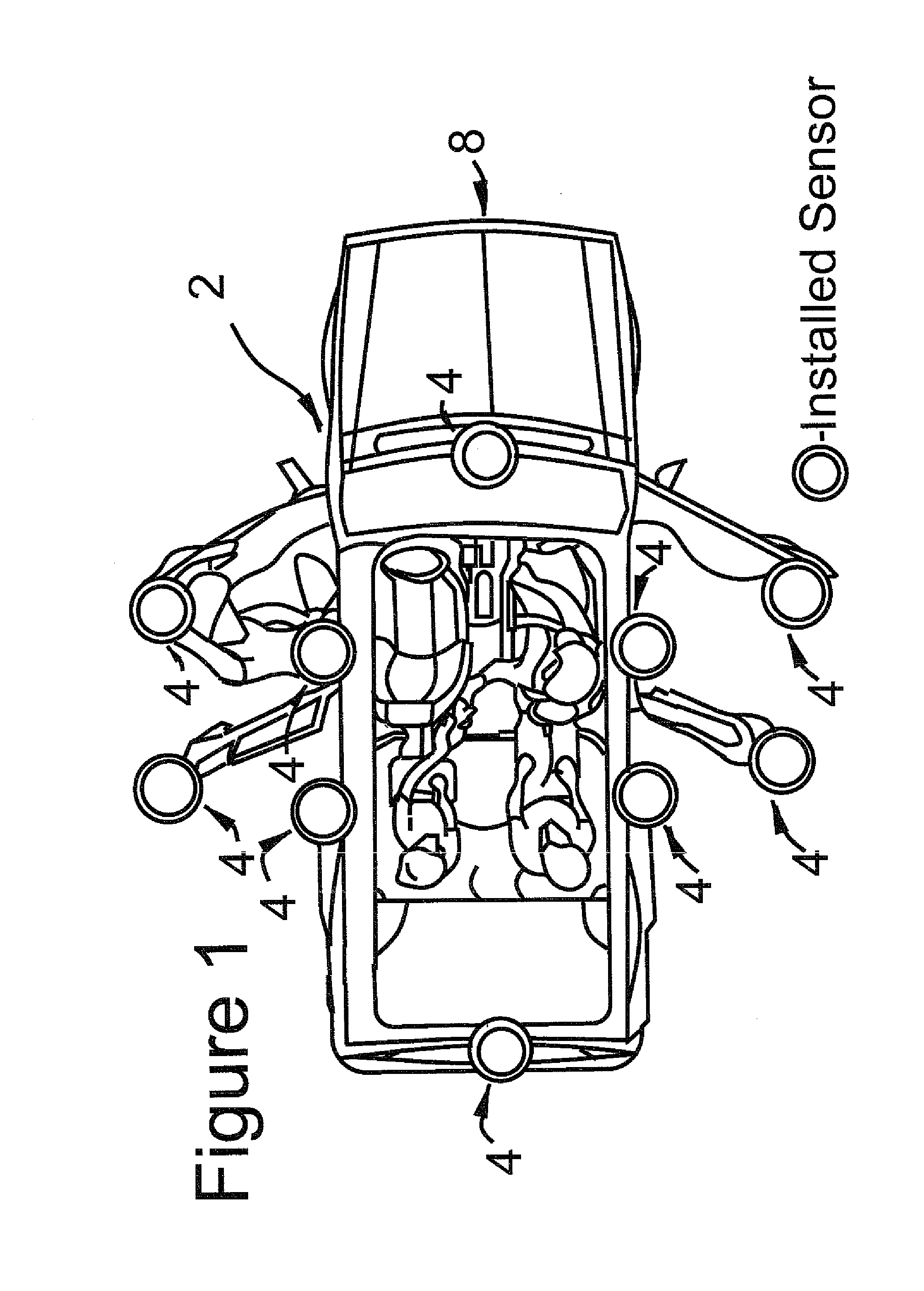

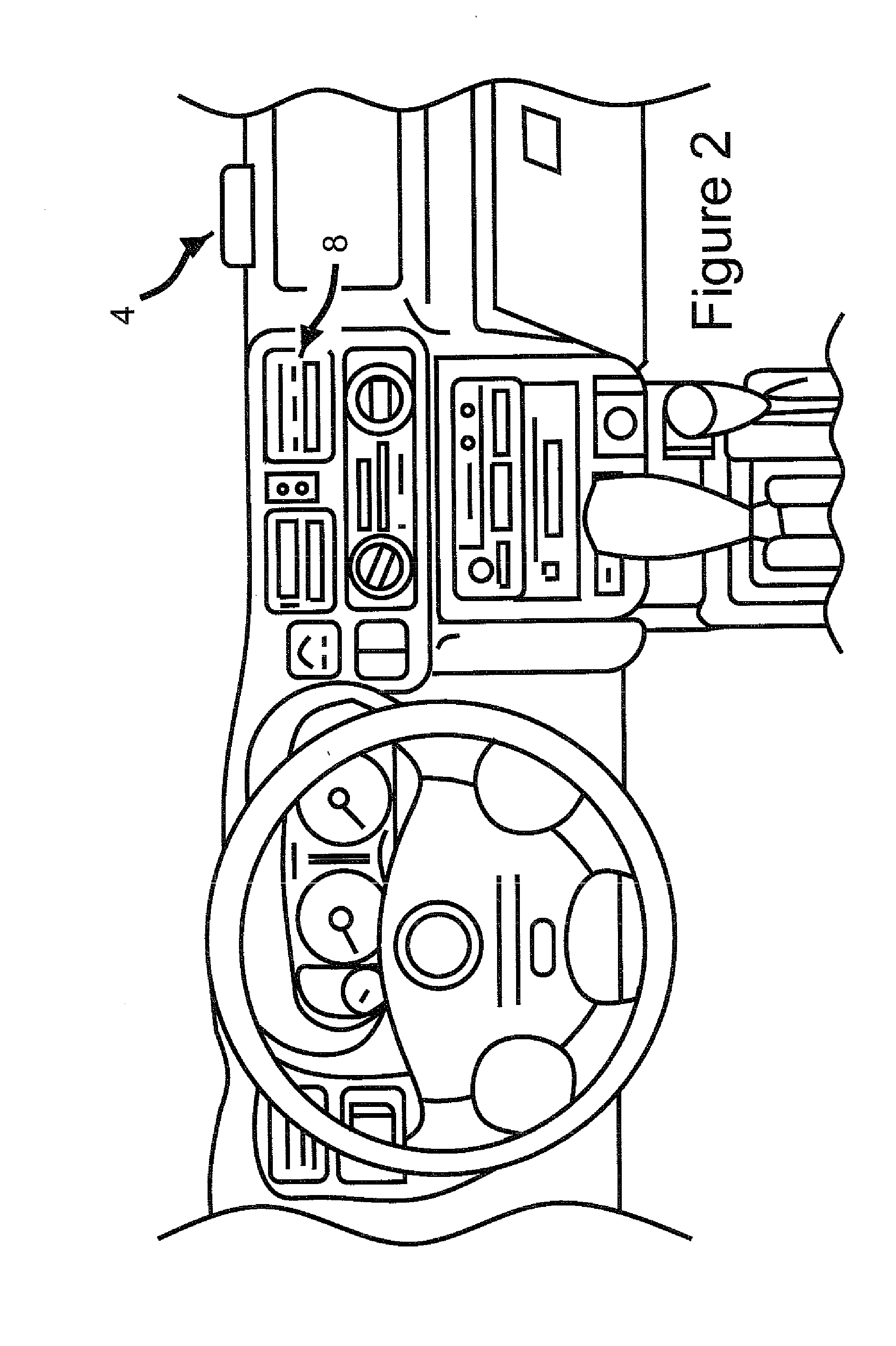

Safety enhancing cellphone functionality limitation system

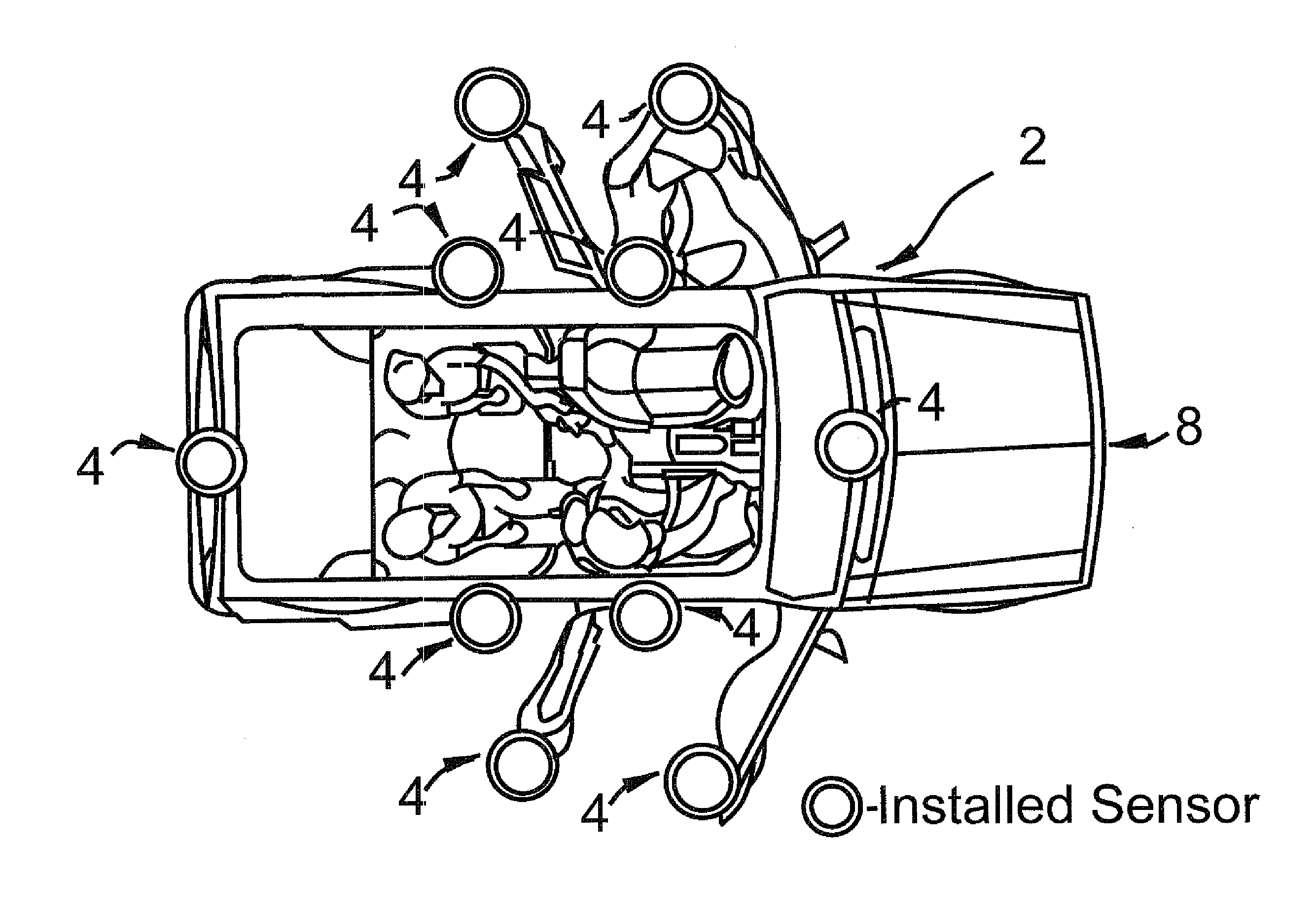

Inactive · US20140274020A1 · Service provisioning · Particular environment based services · Computational logic · Engineering

Apparatus for managing mobile telephone device functionality while the device is located within a vehicle is disclosed, comprising a plurality of wireless sensors. Each sensor receives position-indicating signals emitted by the mobile telephone device and emits signals indicating the position of that sensor relative to the device; the wireless sensors are positioned at different locations in the vehicle. A transmitter transmits a signal to the mobile telephone device, causing the device to transmit the position-indicating signals to the sensors. A processor is coupled to receive the signals indicating the position of each sensor relative to the mobile telephone device. The processor comprises a computational logic unit and a data storage device storing software for computing the position of the mobile telephone device.

Owner:MILLER ALAN
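
The abstract does not say how the sensors' signals encode position. If each sensor can report its distance to the phone (an assumption, for example from signal timing), three sensors at known cabin positions determine the phone's 2-D position. Subtracting the circle equations pairwise cancels the quadratic terms and leaves a linear 2×2 system, solved here with Cramer's rule.

```python
def trilaterate(sensors, distances):
    """2-D position from three known sensor positions and measured distances (assumed inputs)."""
    (x1, y1), (x2, y2), (x3, y3) = sensors
    d1, d2, d3 = distances
    # Subtract circle 1 from circles 2 and 3: the x^2 and y^2 terms cancel.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("sensors are collinear; position is ambiguous")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det
```

With the phone's position known, deciding whether it sits in the driver's area becomes a simple region check; the abstract does not specify that rule.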

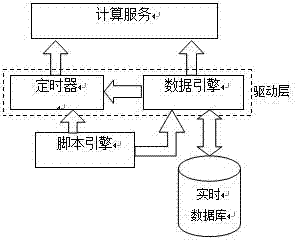

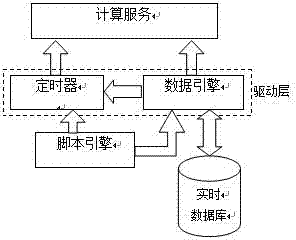

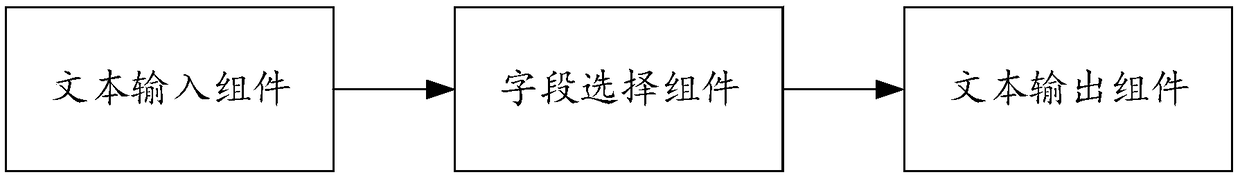

Calculation method based on an extensible script language

Active · CN102346671A · Achieve decoupling · Improve reliability · Specific program execution arrangements · System call · Logisim

The invention provides a calculation method based on an extensible script language, which lets a user supply multiple lines of script code in each calculation service to perform complex calculations, logic processing, and system calls (by executing an application program on the host system). The method is deeply integrated with an object-oriented ChRDB (Chec Real-Time Database) system and supports three ways of triggering calculation scripts: (1) timer triggers, (2) triggers on a change of, or write to, a specific data point, and (3) triggers on a change of, or write to, a given data object. More complex real-time execution logic based on real-time data can thus be built through configuration, so that when changing user requirements arise during the engineering implementation of an automated monitoring project, the corresponding work can be completed through configuration, minimizing secondary development work on the system.

Owner:南京国电南自轨道交通工程有限公司
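
The three trigger kinds can be illustrated with a toy engine. Python callables stand in for the script language, and the `object.point` naming scheme and all class and method names are hypothetical rather than ChRDB's interface.

```python
from collections import defaultdict

class ScriptEngine:
    """Toy real-time point store with three script trigger kinds (hypothetical names)."""

    def __init__(self):
        self.points = {}                          # "object.point" -> latest value
        self.point_scripts = defaultdict(list)    # (2) data-point triggers
        self.object_scripts = defaultdict(list)   # (3) data-object triggers
        self.timer_scripts = []                   # (1) timer triggers: (period, script)
        self.now = 0

    def on_point(self, name, script):
        self.point_scripts[name].append(script)

    def on_object(self, obj, script):
        self.object_scripts[obj].append(script)

    def every(self, period, script):
        self.timer_scripts.append((period, script))

    def write(self, name, value):
        """Every write fires point and object triggers, whether or not the value changed."""
        old = self.points.get(name)
        self.points[name] = value
        obj = name.split(".", 1)[0]
        for script in self.point_scripts[name]:
            script(self, name, old, value)
        for script in self.object_scripts[obj]:
            script(self, name, old, value)

    def tick(self):
        """Advance one time unit and run timer scripts whose period divides the clock."""
        self.now += 1
        for period, script in self.timer_scripts:
            if self.now % period == 0:
                script(self)
```

New behaviour is added by registering scripts rather than changing the engine, which is the configuration-over-development point the abstract makes.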

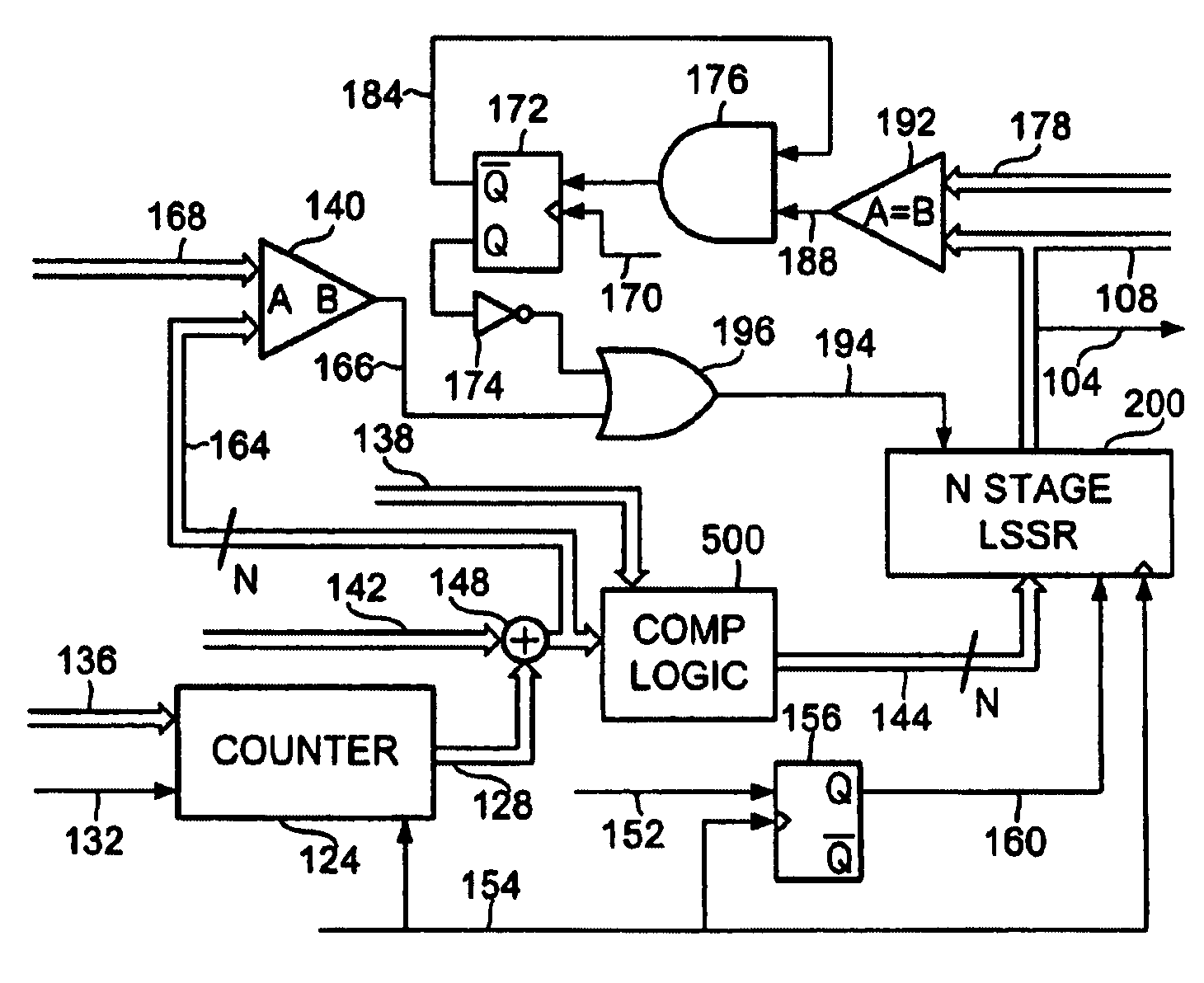

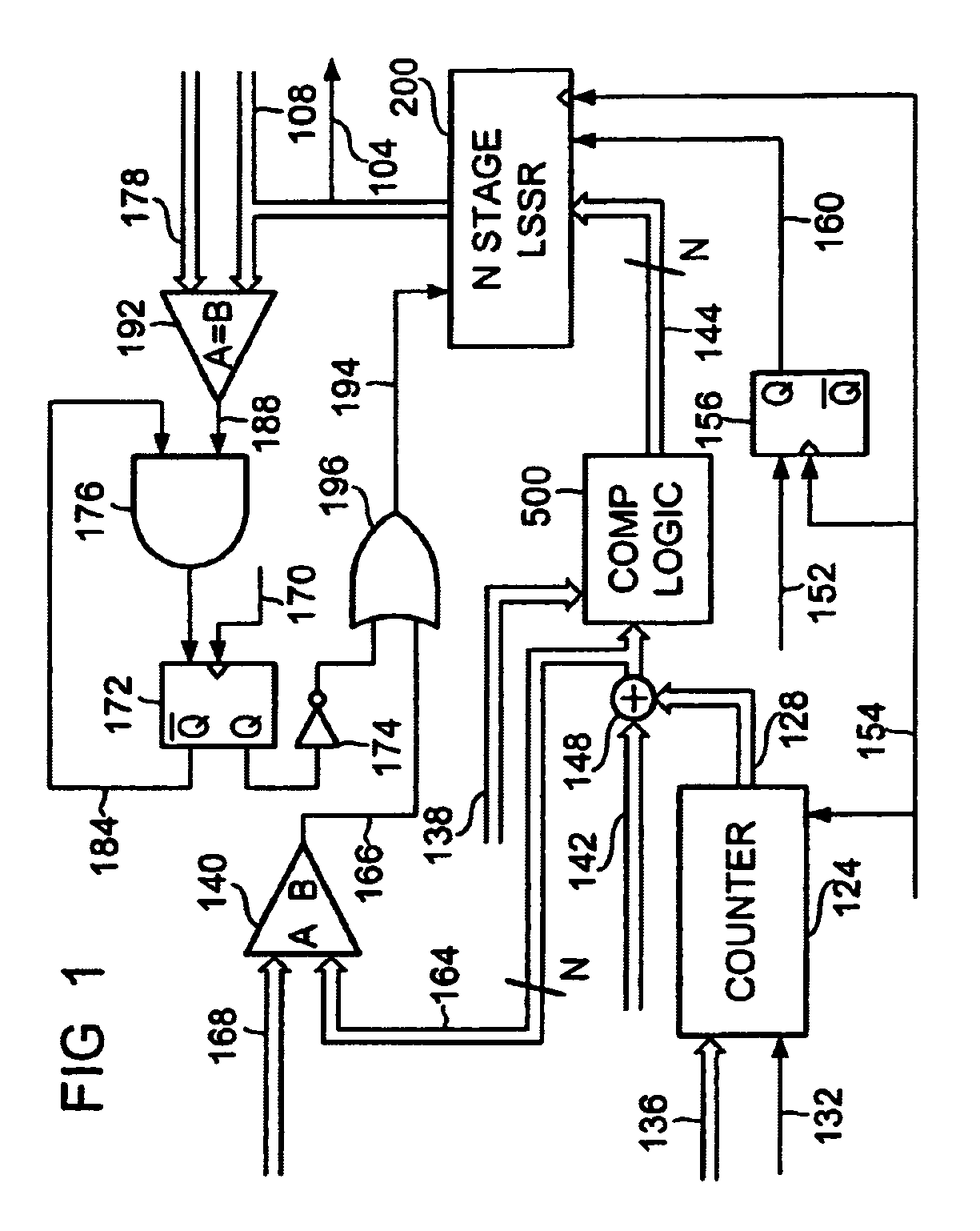

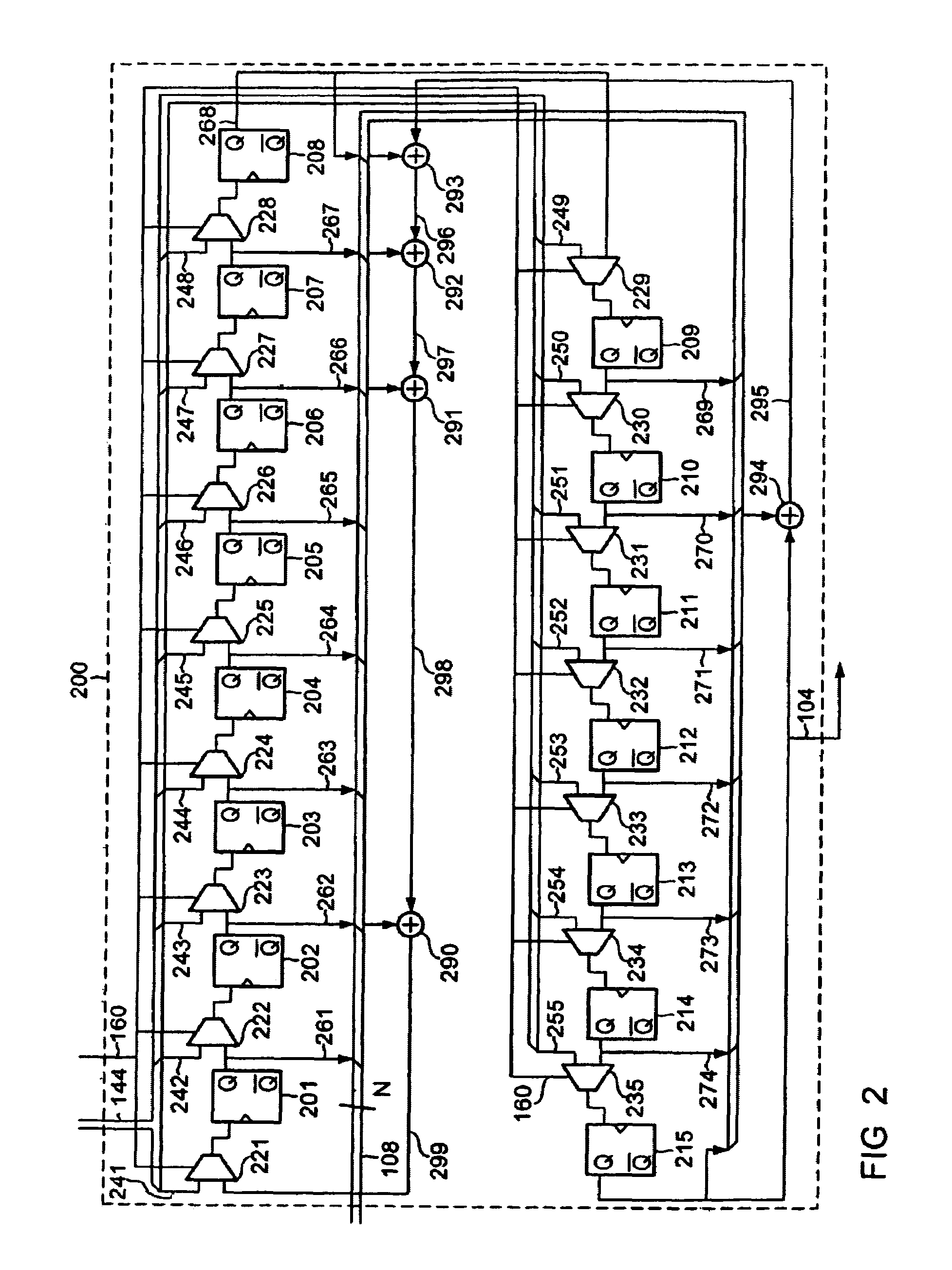

Apparatus and method for immediate non-sequential state transition in a PN code generator

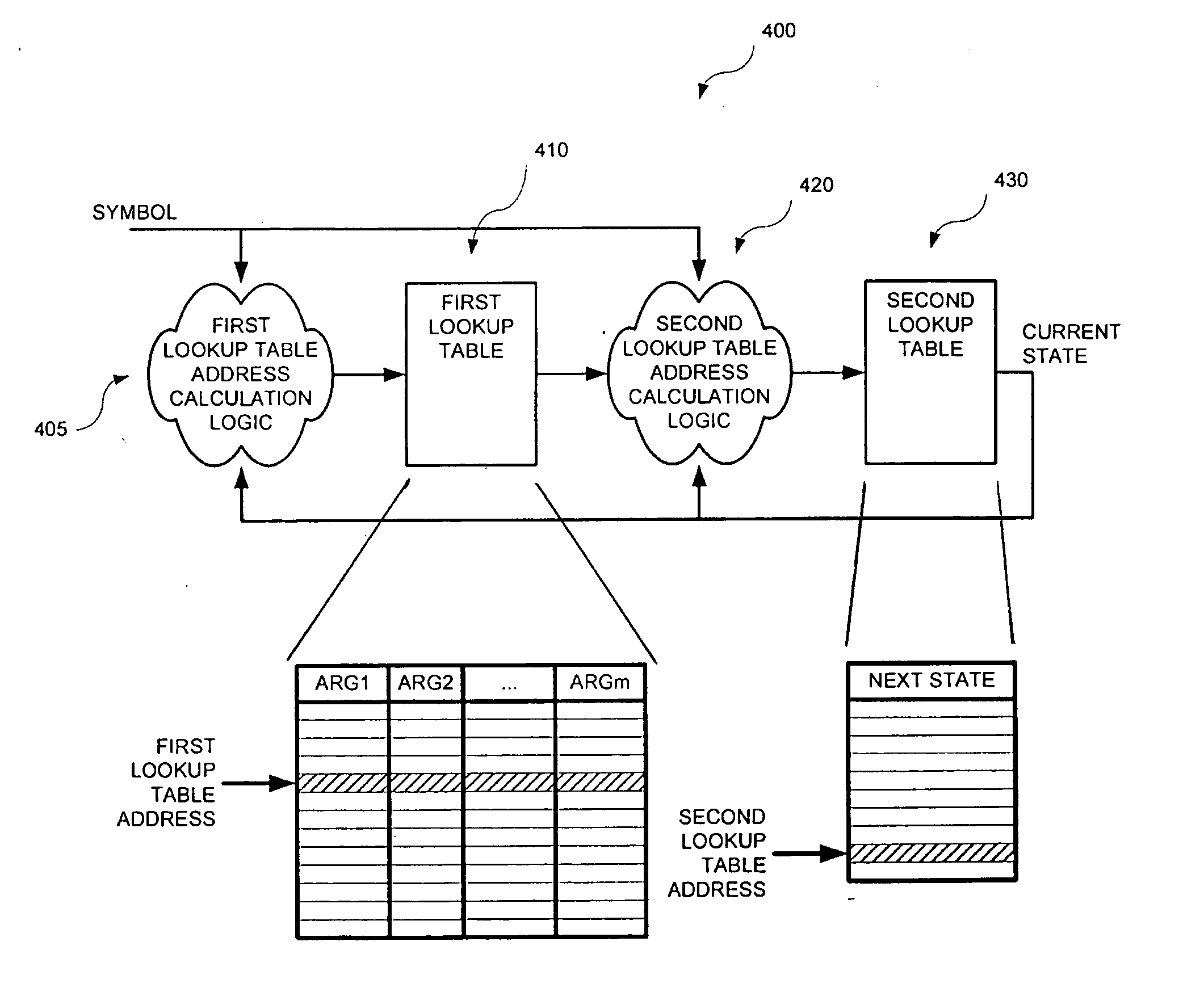

Inactive · US7124156B2 · Reduce complexity · Reduce computing time · Random number generators · Computation using non-contact making devices · Shift register · Phase correlation

A power of a square matrix is determined in a time approximately proportional to the upper integer of the base-2 logarithm of the order of the matrix. A preferred embodiment uses two types of look-up tables and two multipliers for a matrix of 15×15, and is applied to a pseudorandom noise (PN) sequence phase correlation or state jumping circuit. An exact state of a PN code can be determined or calculated from applying an appropriate offset value into a control circuit. The control circuit can produce a PN sequence state from the offset value and typically does so within one system clock period regardless of the amount of the offset. Once the exact state is determined, it is loaded into a state generator or linear sequence shift register (LSSR) for generating a subsequent stream of bits or symbols of the PN code. The PN generator system may include state computing logic, a maximum length PN generator, a zero insertion circuit and a zero insertion skipping circuit.

Owner:NEC AMERICA
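
A shift-register PN generator is linear over GF(2), so advancing it by k clocks equals multiplying the state by the k-th power of its transition matrix, and square-and-multiply needs only about ⌈log₂ k⌉ squarings. The sketch shows that idea in software; it is not the patent's look-up-table and two-multiplier circuit. The 15-bit register matches the 15×15 matrix size in the abstract, but the feedback taps are an arbitrary illustrative choice.

```python
N = 15                          # register length, giving a 15x15 transition matrix
TAPS = (1 << 14) | (1 << 13)    # hypothetical feedback taps, for illustration only
MASK = (1 << N) - 1

def parity(v):
    return bin(v).count("1") & 1

def step(state):
    """One clock: shift left and feed back the XOR (parity) of the tapped bits."""
    return ((state << 1) | parity(state & TAPS)) & MASK

# GF(2) matrices stored as N row bitmasks: row i lists the input bits XORed into output bit i.
STEP_MATRIX = [TAPS] + [1 << (i - 1) for i in range(1, N)]
IDENTITY = [1 << i for i in range(N)]

def mat_vec(m, v):
    return sum(parity(row & v) << i for i, row in enumerate(m))

def mat_mul(a, b):
    """Row i of a*b is the XOR of the rows of b selected by the set bits of a[i]."""
    product = []
    for row in a:
        acc = 0
        for j in range(N):
            if (row >> j) & 1:
                acc ^= b[j]
        product.append(acc)
    return product

def mat_pow(m, k):
    """Square-and-multiply: about ceil(log2 k) squarings instead of k single steps."""
    result, base = IDENTITY, m
    while k:
        if k & 1:
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        k >>= 1
    return result

def jump(state, k):
    """State of the generator k clocks after `state`, without clocking it k times."""
    return mat_vec(mat_pow(STEP_MATRIX, k), state)
```

Precomputing the powers M, M², M⁴, … once would leave at most ⌈log₂ k⌉ multiplications per jump.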

System of finite state machines

Inactive · US20060036413A1 · Enough time · Multiple digital computer combinations · Concurrent instruction execution · Sufficient time · Computational logic

A system of finite state machines, built with asynchronous or synchronous logic, controls the flow of data through computational logic circuits programmed to accomplish a task specified by a user. One finite state machine is associated with each computational logic circuit. Each finite state machine accepts data from one or more predecessor finite state machines or from one or more sources outside the system, and furnishes data to one or more successor finite state machines or to a recipient outside the system. The logic paths that perform the user-specified task are excluded from consideration when determining the system's clock period, and a means is provided for ensuring that each finite state machine allows sufficient time to elapse for its associated computational logic circuit to perform its task.

Owner:CAMPBELL JOHN +1

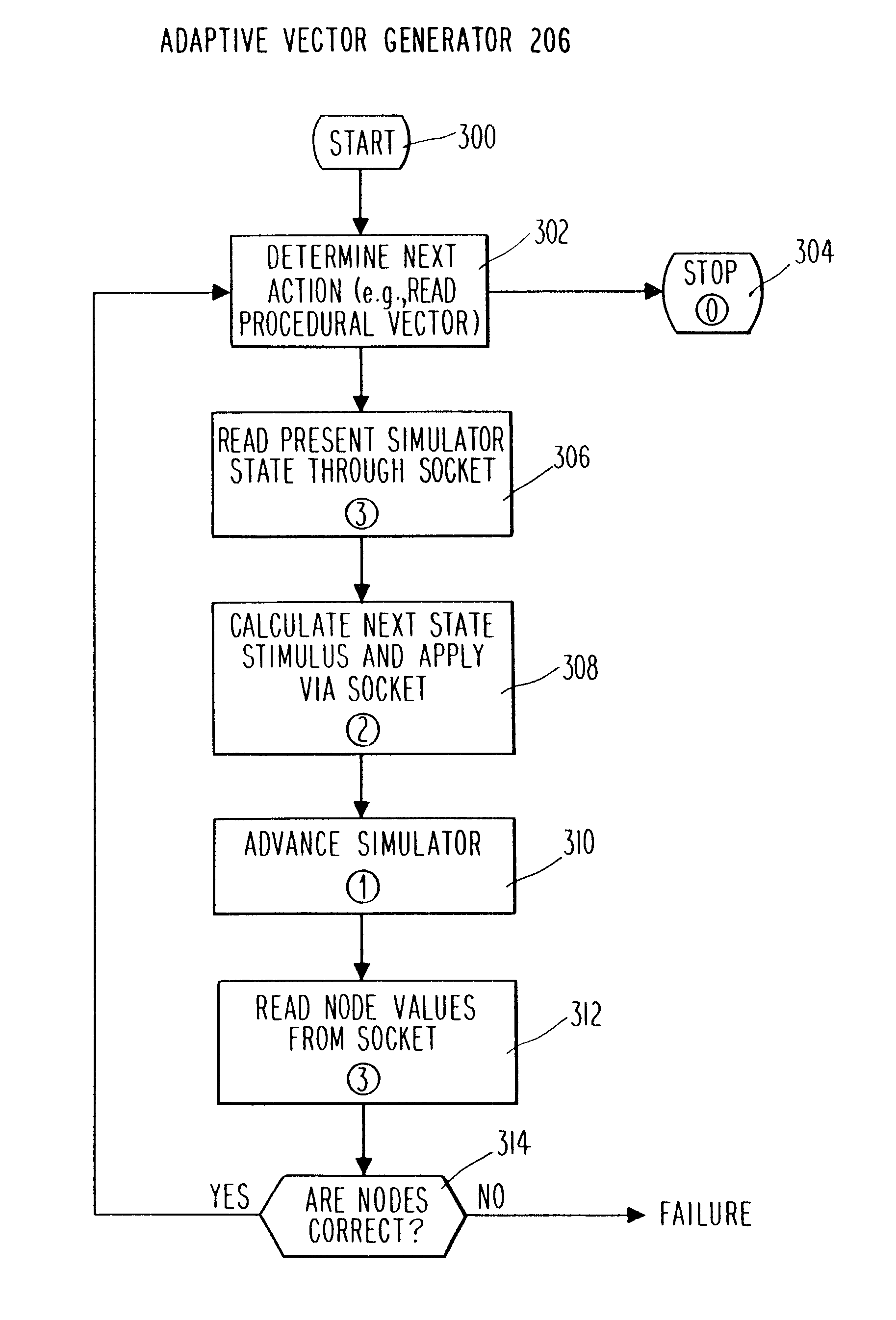

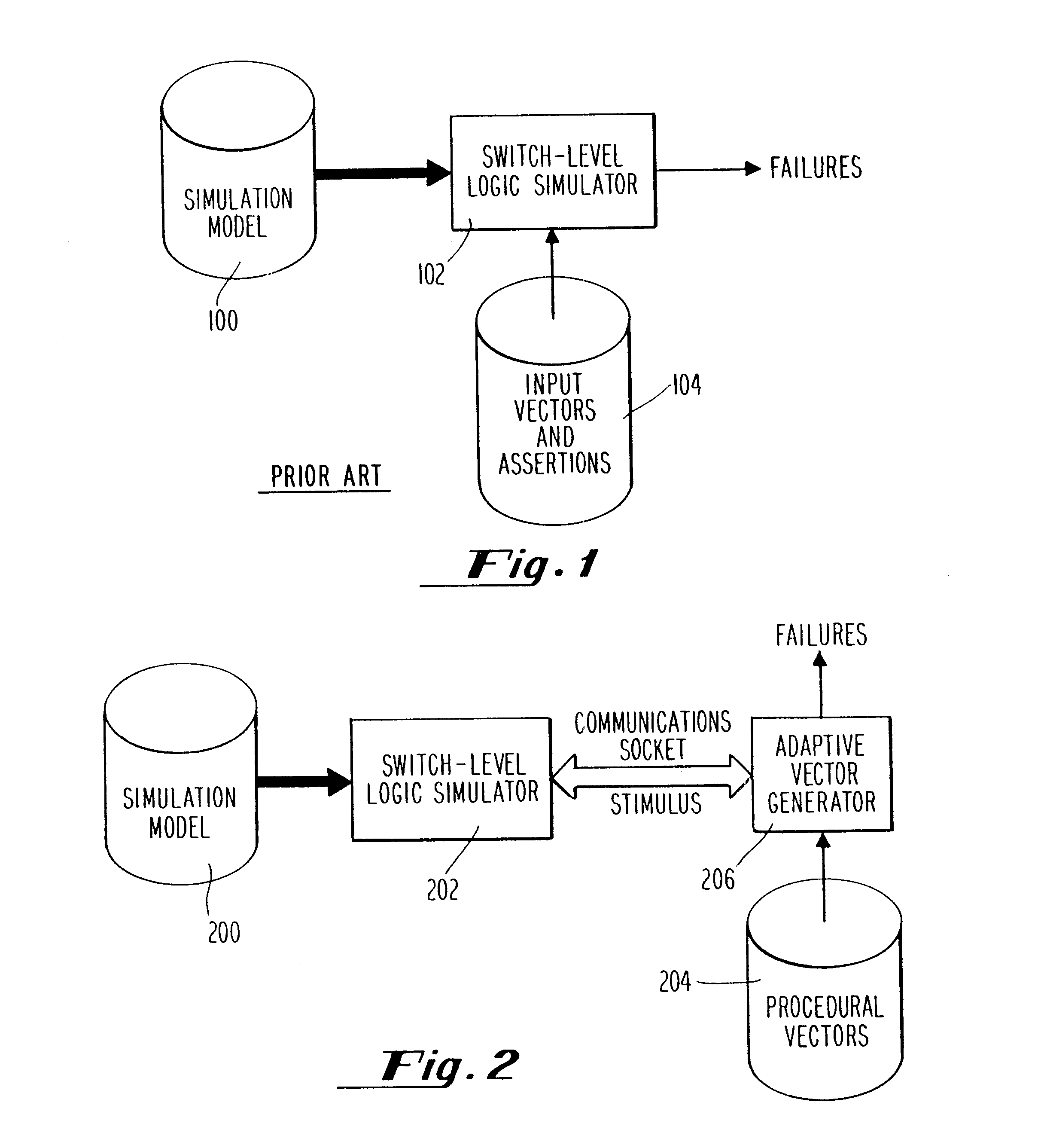

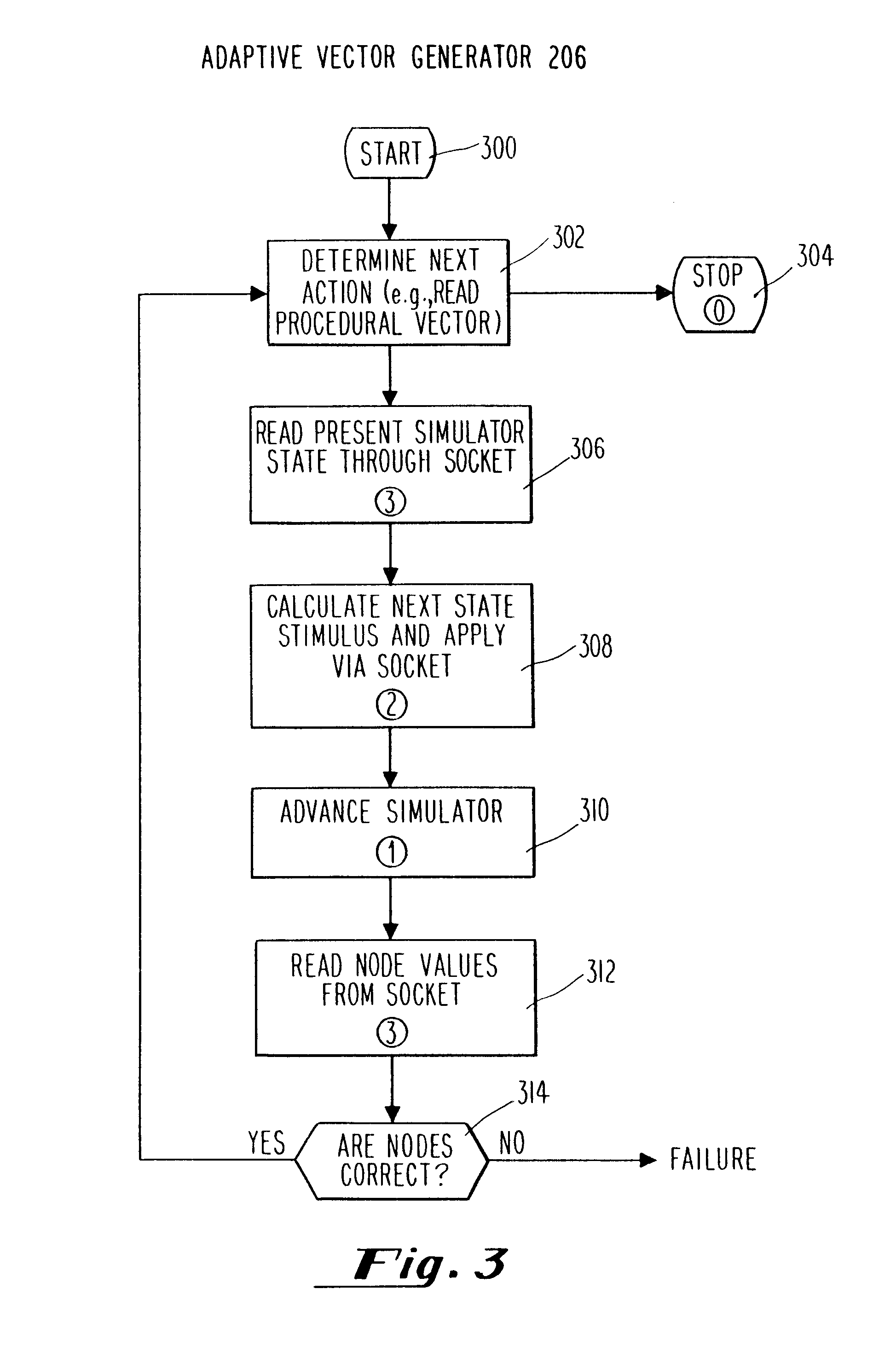

Bidirectional socket stimulus interface for a logic simulator

Inactive · US6421823B1 · Logical operation testing · General purpose stored program computer · Stimulus pattern · Computational logic

A communications socket is provided between a logic simulator and a system that generates input stimuli based on the current state of the logic simulator. Input stimuli for a particular circuit-design simulation are calculated by interfacing the logic simulator with an input program that models the function of the circuit being designed. An adaptive vector generator converts the lines of this input program into communications signals that the logic simulator understands, so that the desired simulation can take place. The input program thus enables the adaptive vector generator to behaviorally model complex logical systems of which the logic simulator model is only one part, allowing more accurate and detailed simulation. The adaptive vector generator does this by determining the next input vector state from the present state of the logic simulator model, as received over the communications socket. In other words, based on the state data received from the logic simulator, the adaptive vector generator automatically calculates the next stimulus pattern for the logic simulator model and provides these stimuli to the logic simulator through the communications socket. This removes the tedium of prior-art techniques, since the design engineer no longer needs to specify every input step and anticipate the output state of the circuit.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
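
The closed loop, in which the simulator reports its state and the generator answers with the next stimulus, can be sketched with a connected socket pair in a single Python process. The design under test is a hypothetical 4-bit counter with an enable input, the message format is one integer per line, and the generator's rule (count up to a target, then hold) is made up for illustration.

```python
import socket

TARGET = 5   # hypothetical goal the stimulus generator drives the model toward

def simulate(cycles=20):
    """Toy co-simulation: a counter model and an adaptive stimulus generator
    exchange newline-terminated integers over a connected socket pair."""
    sim_sock, gen_sock = socket.socketpair()
    count, trace = 0, []
    with sim_sock, gen_sock, sim_sock.makefile("rw") as sim_io, gen_sock.makefile("rw") as gen_io:
        for _ in range(cycles):
            sim_io.write(f"{count}\n")           # simulator reports its present state
            sim_io.flush()
            state = int(gen_io.readline())       # generator reads that state...
            enable = 1 if state < TARGET else 0  # ...and computes the next stimulus from it
            gen_io.write(f"{enable}\n")
            gen_io.flush()
            applied = int(sim_io.readline())     # simulator receives and applies the stimulus
            count = (count + applied) % 16
            trace.append((state, applied))
    return count, trace
```

In a real flow the simulator would be a separate program reached over TCP or a Unix-domain socket; the point of the sketch is that each stimulus depends on the state just reported, so nobody has to script every input step in advance.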

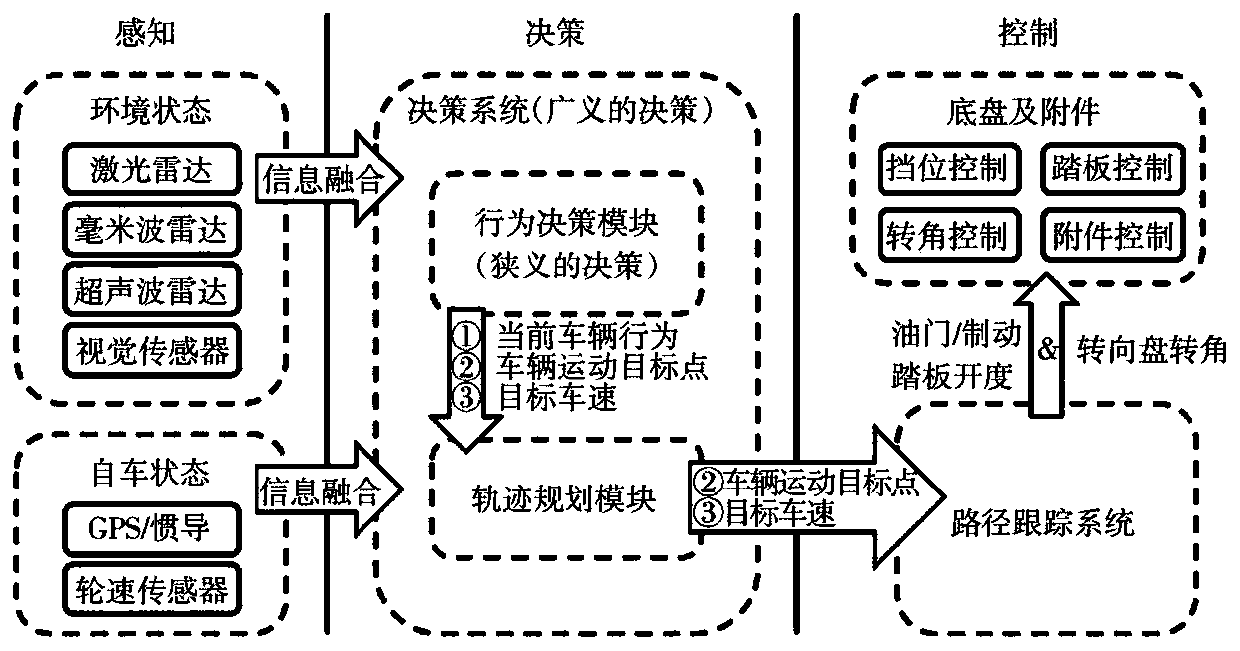

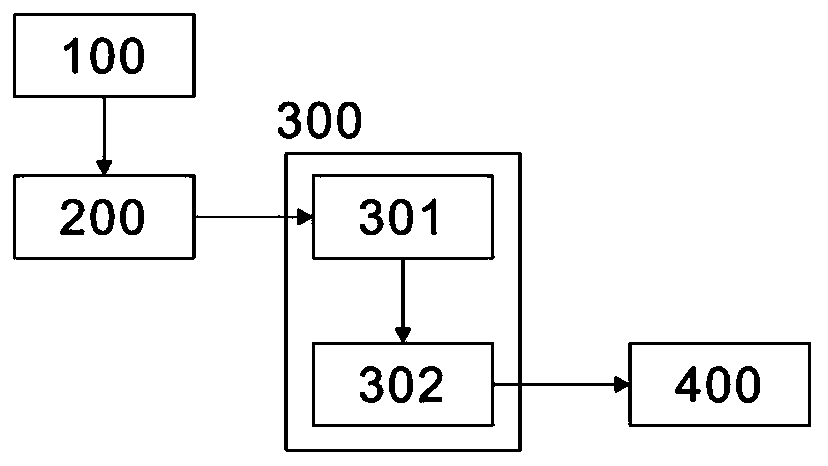

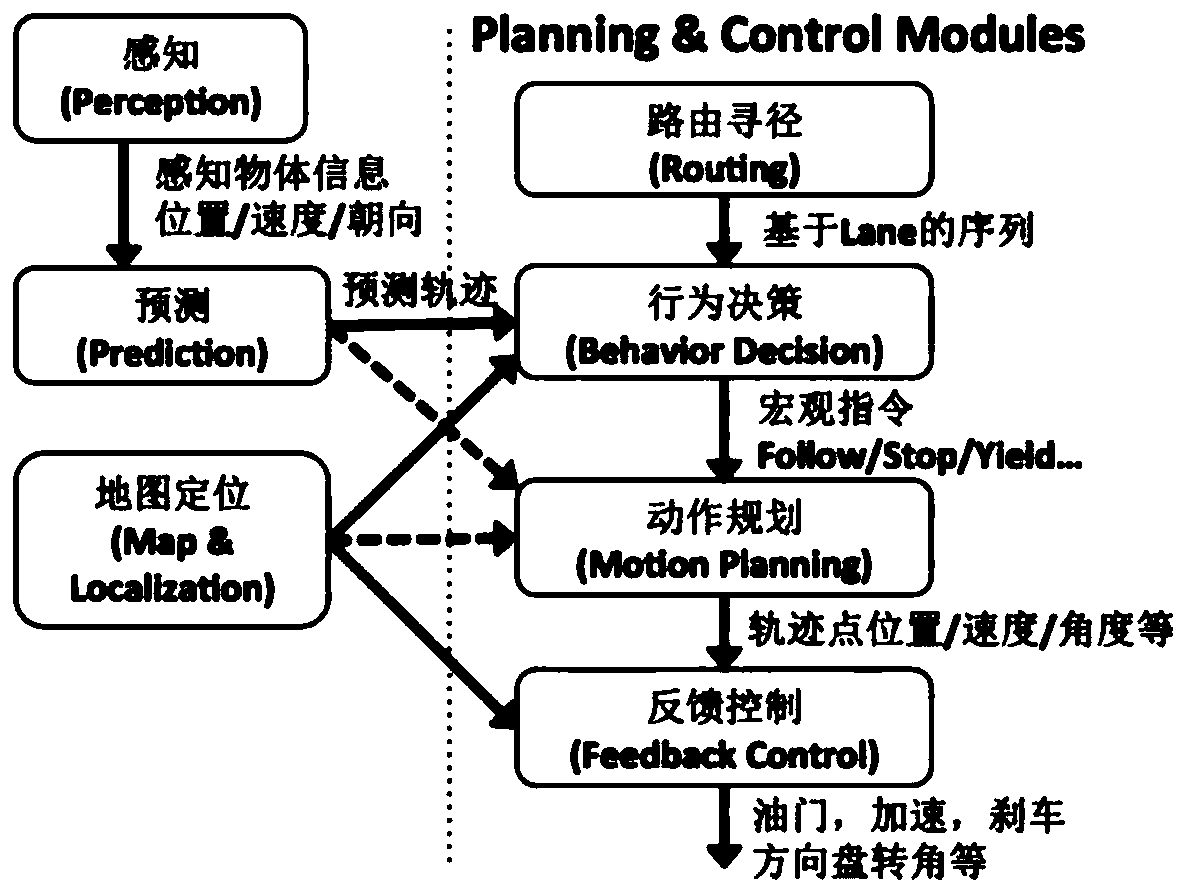

Automatic driving decision-making method and system

Inactive · CN110568841A · Improve development efficiency · Position/course control in two dimensions · Vehicles · Decision control · Computational logic

The invention discloses an automatic driving decision-making method and system. The system includes: a sensing module, which acquires information about objects in the vehicle's surroundings and state information about the vehicle itself; a prediction module, connected to the sensing module, which receives the sensed information and performs information fusion and computation to generate a predicted trajectory; a decision-making module, connected to the prediction module, which receives the predicted trajectory and outputs decision trajectory points; and a control module, connected to the upper-layer decision-making module, which consumes the trajectory points and controls the vehicle to execute them. By dividing the system into modules, the complex problem of decision making, control, and planning for an unmanned vehicle is split effectively and reasonably, from the abstract to the concrete, according to its computational logic. This division lets each module focus on solving the problems at its own level, improving the development efficiency of the entire complex software system.

Owner:西藏宁算科技集团有限公司

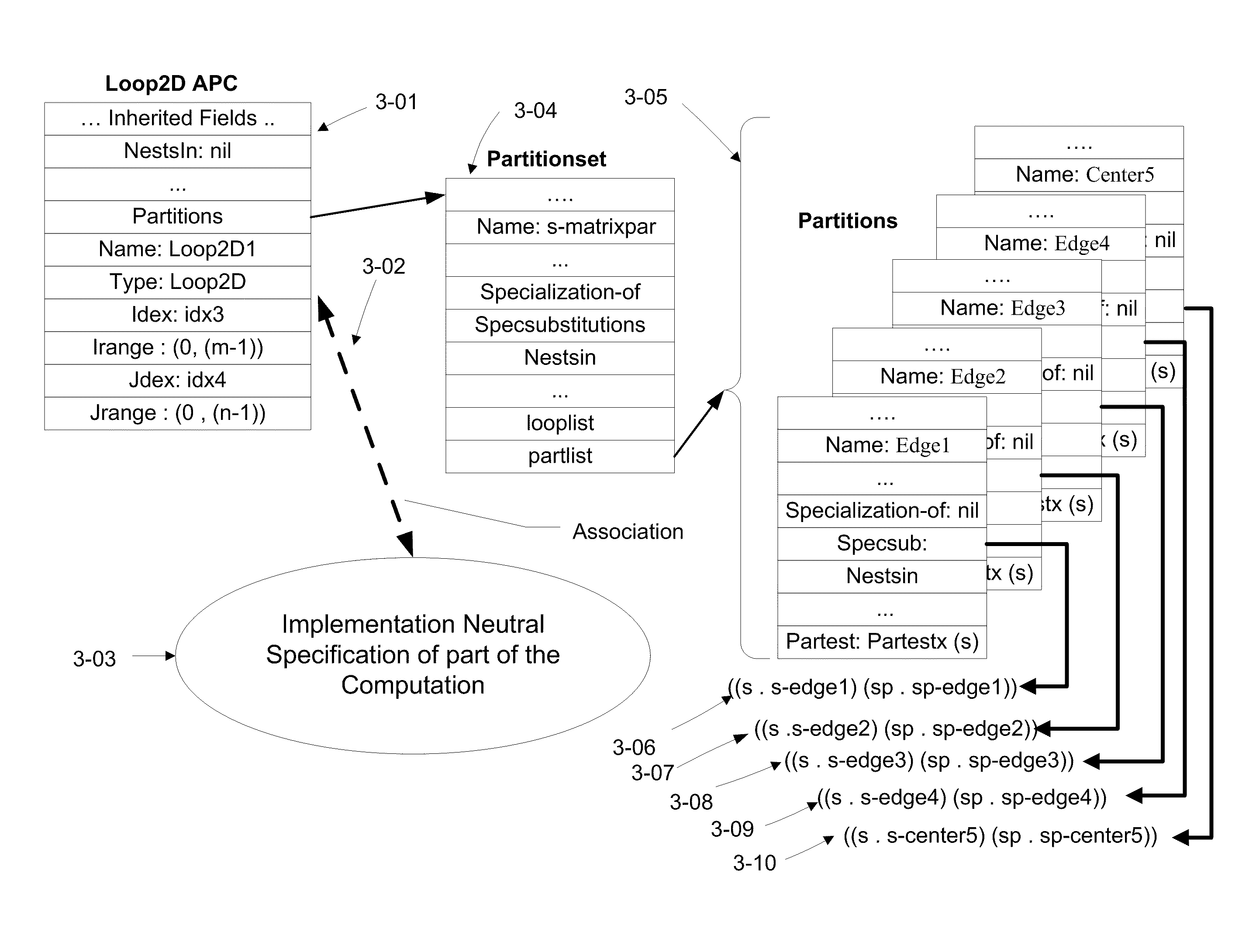

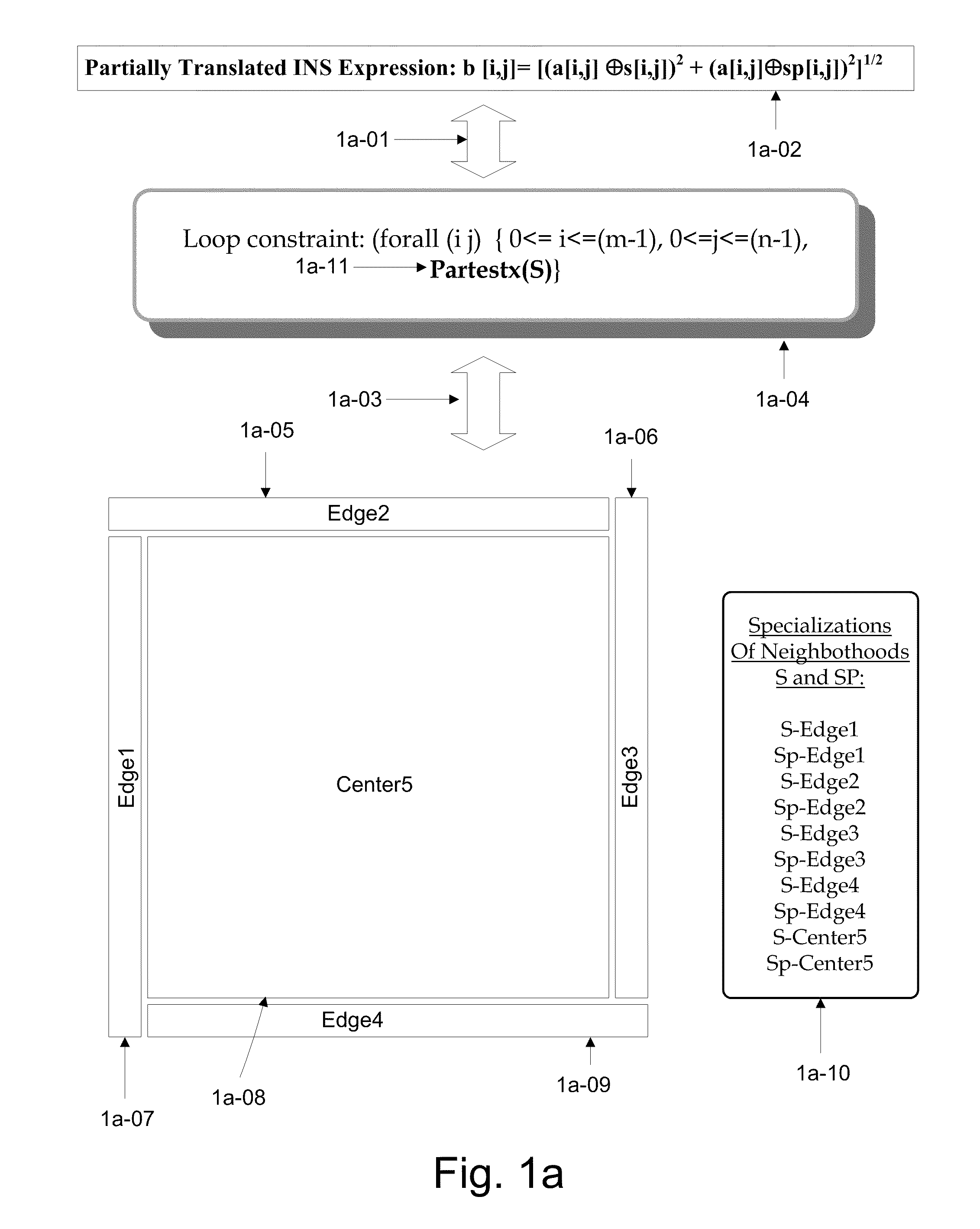

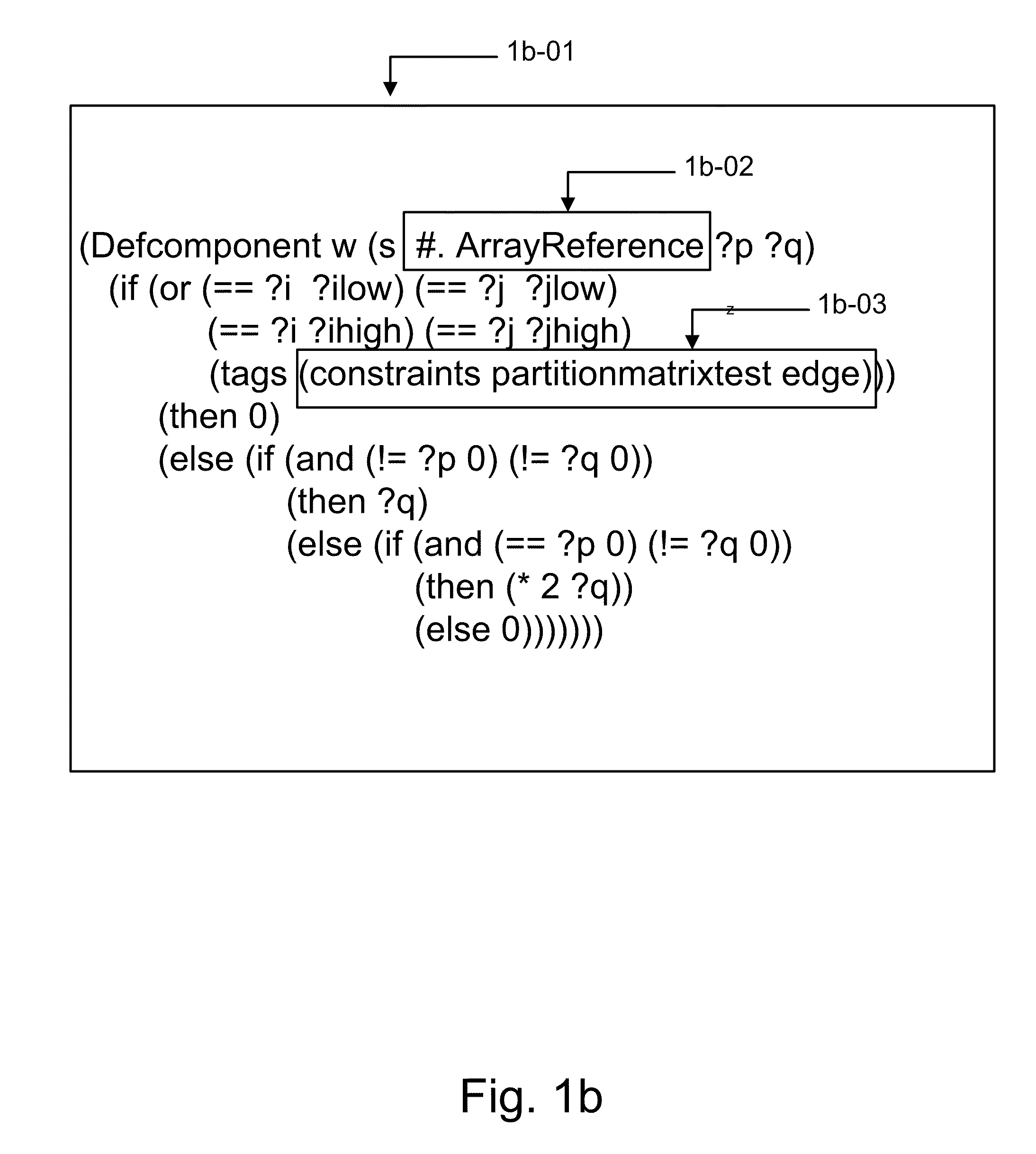

Synthetic Partitioning for Imposing Implementation Design Patterns onto Logical Architectures of Computations

Active · US20110314448A1 · Balance computing load · Program code adaption · Specific program execution arrangements · Language construct · Computer architecture

A method and a system for using synthetic partitioning constraints to impose design patterns containing desired design features (e.g., distributed logic for a threaded, multicore based computation) onto logical architectures (LA) specifying an implementation neutral computation. The LA comprises computational specifications and related logical constraints (i.e., defined by logical assertions) that specify provisional loops and provisional partitionings of those loops. The LA contains virtually no programming language constructs. Synthetic partitioning constraints add implementation specific design patterns. They define how to find frameworks with desired design features, how to reorganize the LA to accommodate the frameworks, and how to map the computational payload from the LA into the frameworks. The advantage of synthetic partitioning constraints is they allow implementation neutral computations to be transformed into custom implementations that exploit the high capability features of arbitrary execution platform architectures such as multicore, vector, GPU, FPGA, virtual, API-based and others.

Owner:BIGGERSTAFF TED J

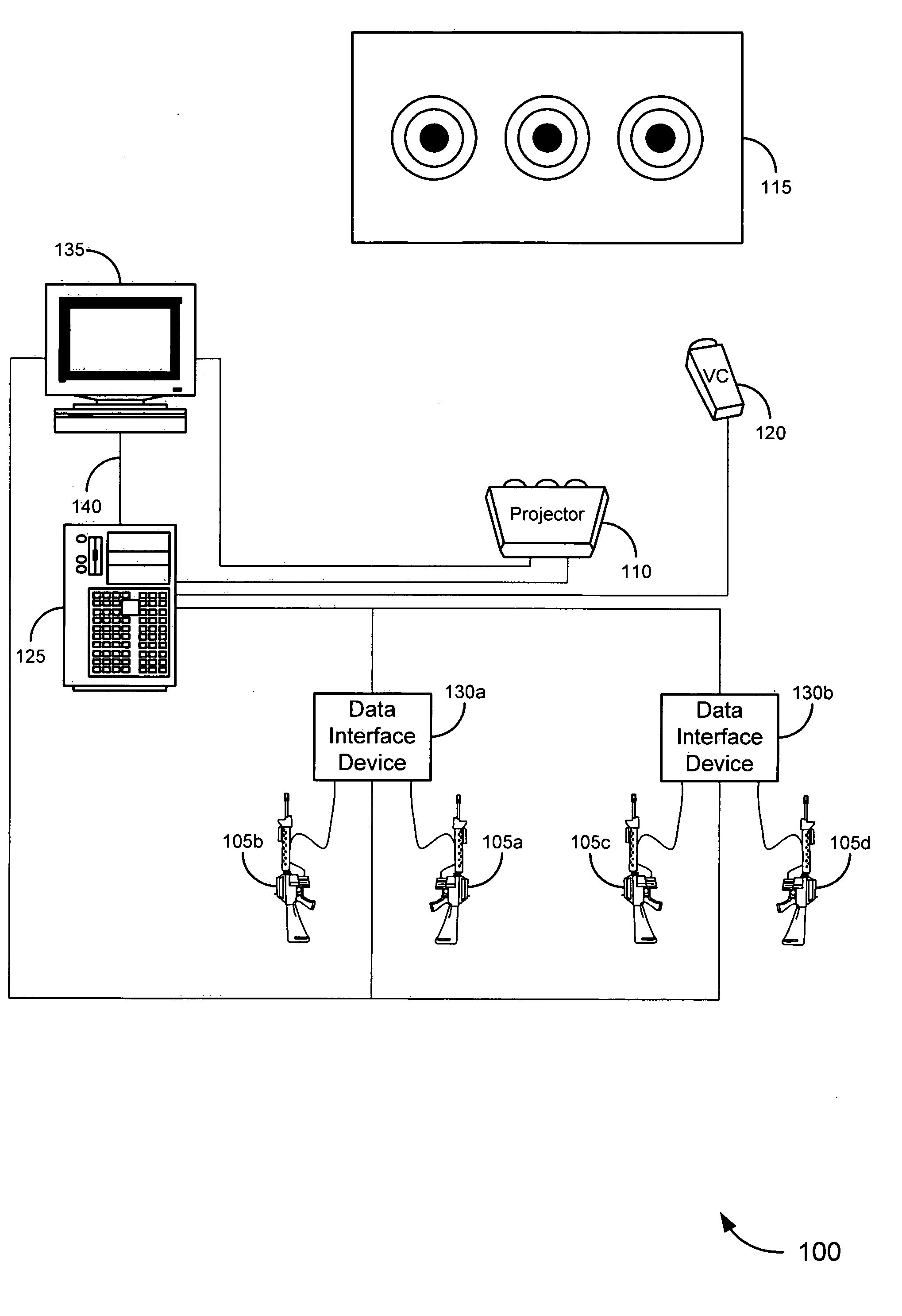

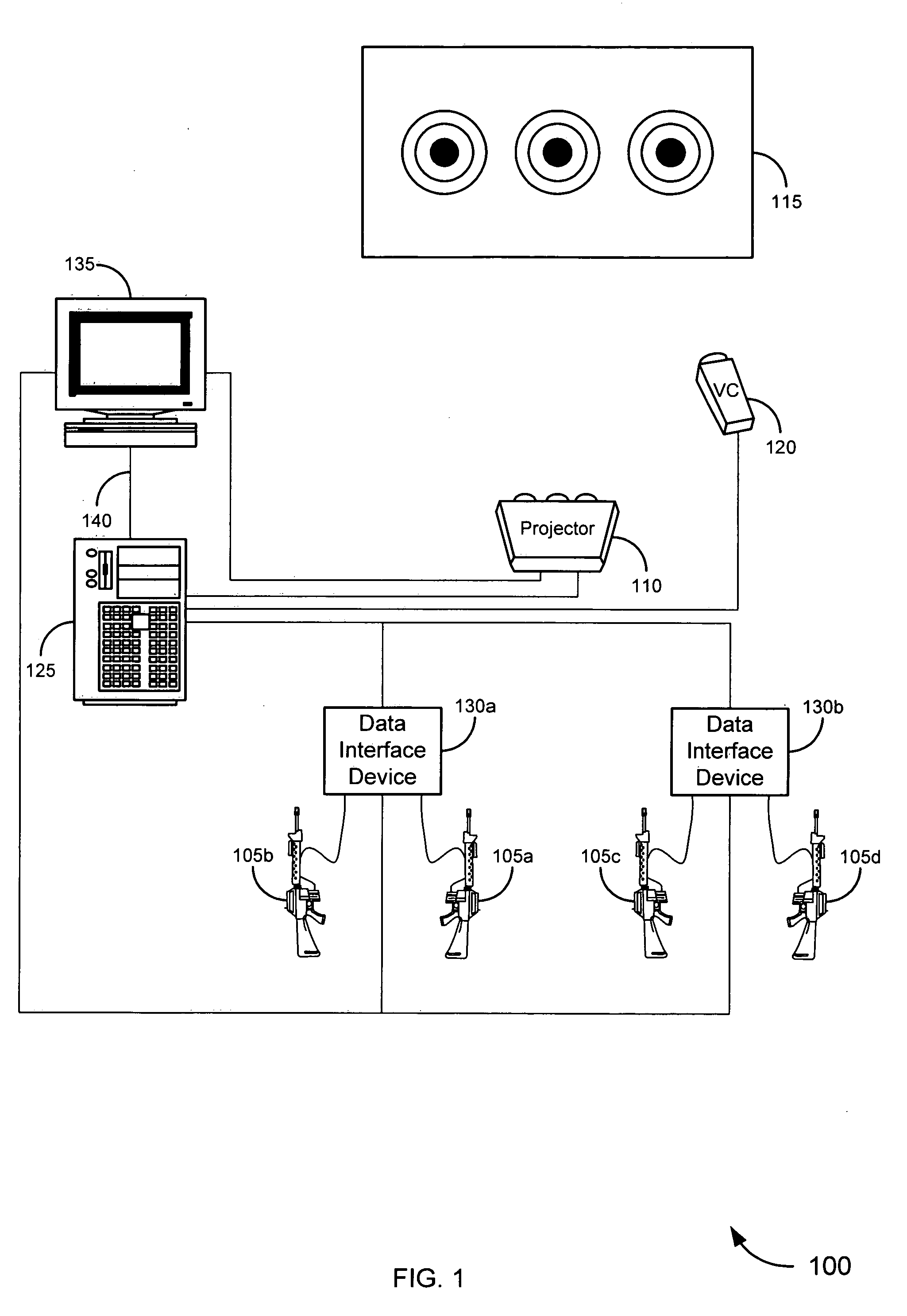

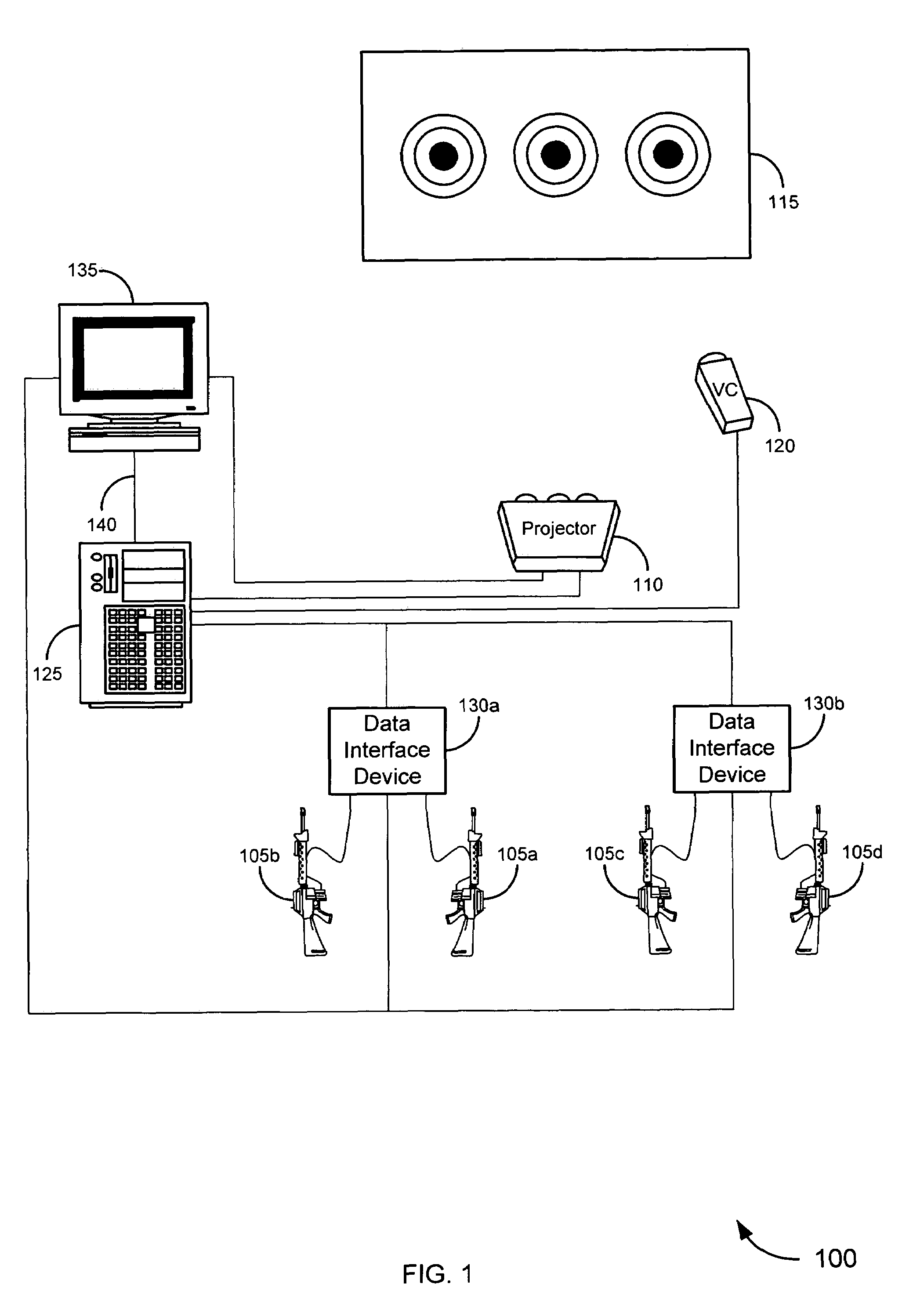

Enhancement of aimpoint in simulated training systems

Active · US20060073438A1 · Inherent delay · Cosmonautic condition simulations · Direction controllers · Computational logic · Laser target

Embodiments of the invention, therefore, provide improved systems and methods for tracking targets in a simulation environment. Merely by way of example, an exemplary embodiment provides a reflected laser target tracking system that tracks a target with a video camera and associated computational logic. In certain embodiments, a closed loop algorithm may be used to predict future positions of targets based on formulas derived from prior tracking points. Hence, the target's next position may be predicted. In some cases, targets may be filtered and / or sorted based on predicted positions. In certain embodiments, equations (including without limitation, first order equations and second order equations) may be derived from one or more video frames. Such equations may also be applied to one or more successive frames of video received and / or produced by the system. In certain embodiments, these formulas also may be used to compute predicted positions for targets; this prediction may, in some cases, compensate for inherent delays in the processing pipeline.

Owner:CUBIC CORPORATION
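
The first- and second-order prediction the abstract mentions can be illustrated with finite-difference extrapolation over equally spaced frames. This is one simple choice, not the patent's formulas: order 1 assumes constant velocity, order 2 constant acceleration (Newton backward differences), and `h` is a hypothetical look-ahead in frames, for example chosen to cover a processing delay.

```python
def predict(points, h=1.0, order=2):
    """Extrapolate a target's (x, y) position h frames past the last of equally spaced samples."""
    if order == 1:
        (x1, y1), (x2, y2) = points[-2:]
        return (x2 + h * (x2 - x1), y2 + h * (y2 - y1))      # constant velocity
    if order == 2:
        (x0, y0), (x1, y1), (x2, y2) = points[-3:]
        w = h * (h + 1) / 2                                  # weight of the second difference
        return (x2 + h * (x2 - x1) + w * (x2 - 2 * x1 + x0),
                y2 + h * (y2 - y1) + w * (y2 - 2 * y1 + y0))  # constant acceleration
    raise ValueError("order must be 1 or 2")
```

The second-order form is exact for any target whose coordinates are quadratic in time, at the cost of amplifying noise in the tracked points more than the first-order form.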

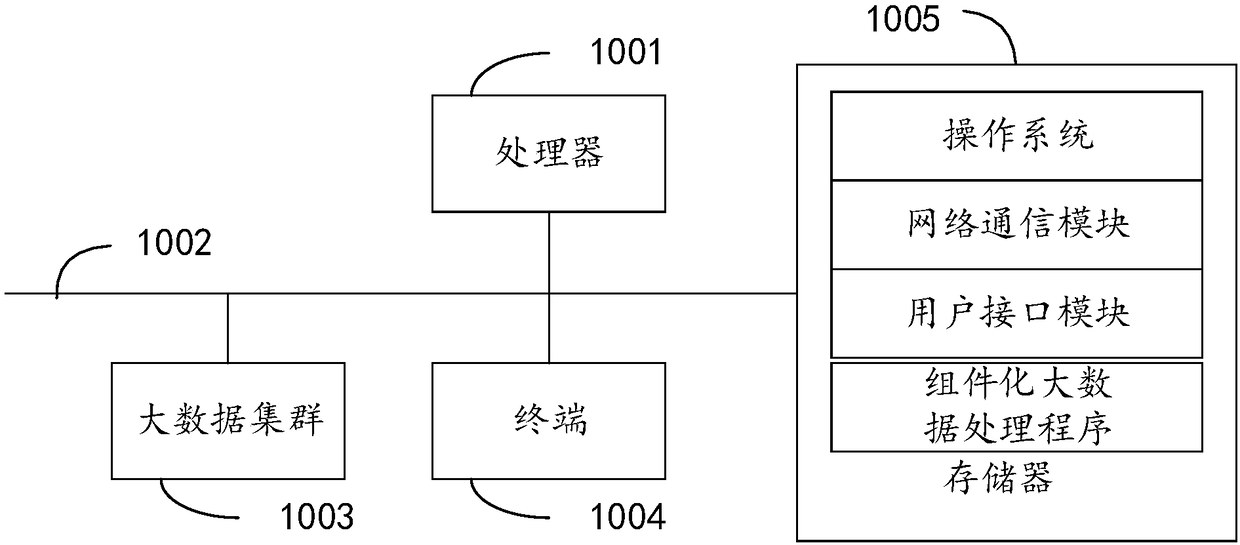

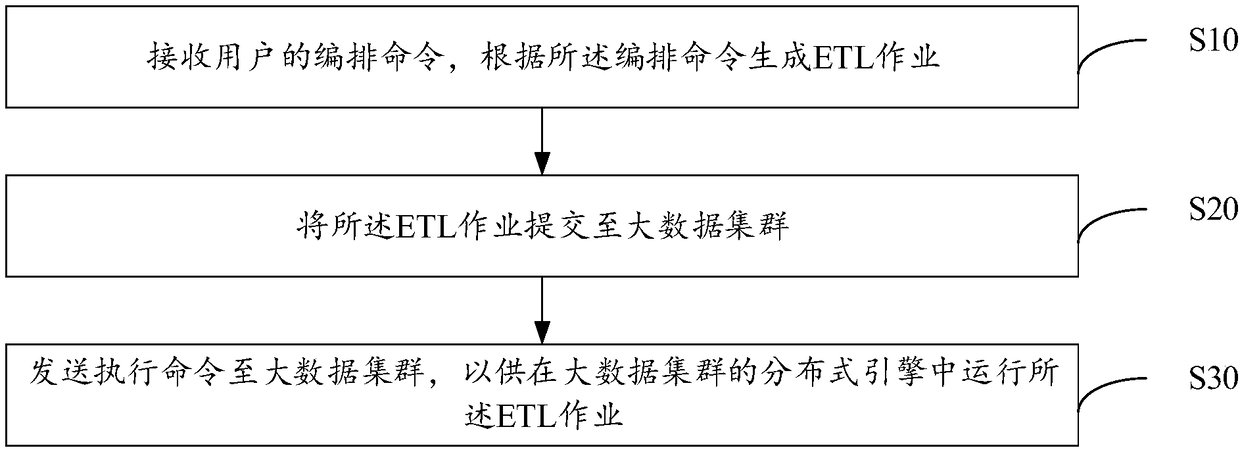

Component big data processing method, system, and computer readable storage medium

Pending · CN109388667A · Solve the efficiency bottleneck · Solve the problem that requires users to hard-code the implementation of calculation logic · Database management systems · Hard coding · Computational logic

The invention discloses a component-based big data processing method, a system, and a computer-readable storage medium. The method comprises the following steps: receiving an orchestration command from a user and generating an ETL job according to the command; submitting the ETL job to a big data cluster; and sending an execution command to the big data cluster to run the ETL job in a distributed engine of the cluster. With the invention, the user can arrange ETL operations through simple operations, which greatly reduces technical difficulty. It solves two problems of prior non-component big data ETL technology: users must hard-code the calculation logic, and that calculation logic cannot be reused.

Owner:ZTE CORP

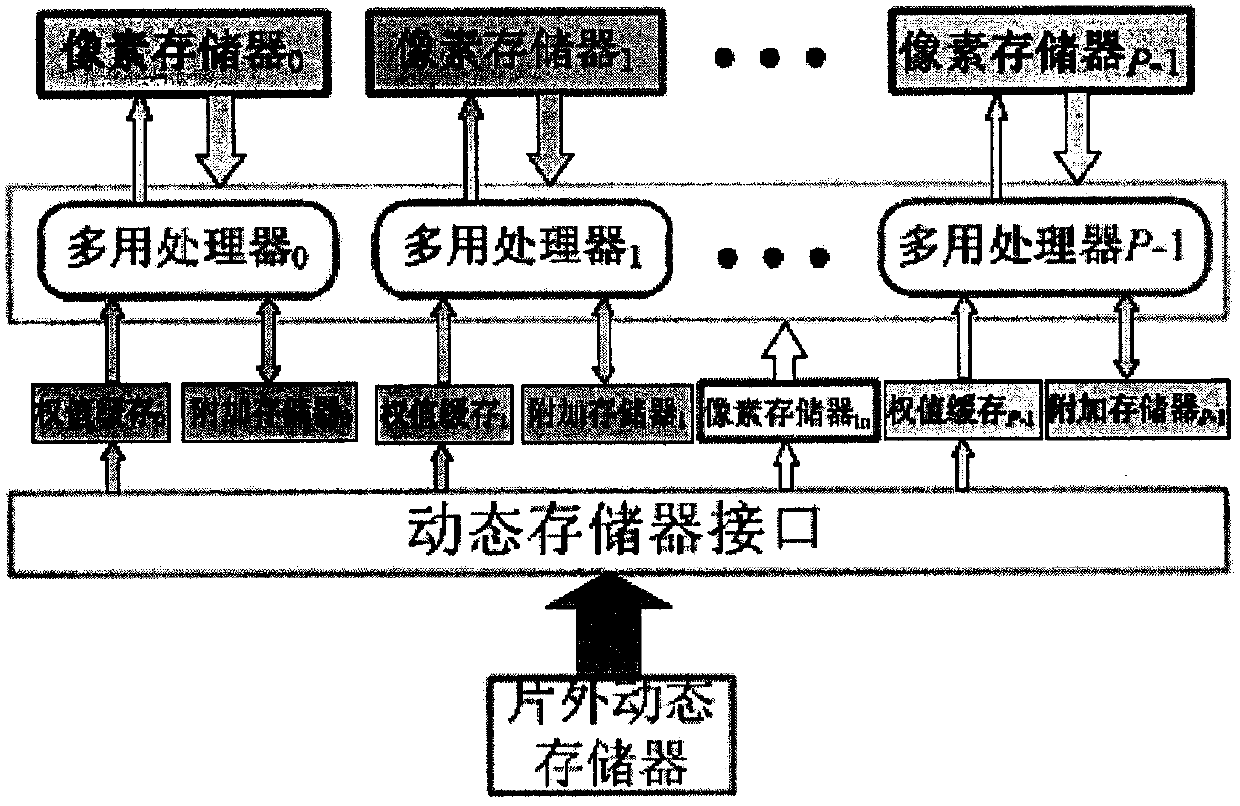

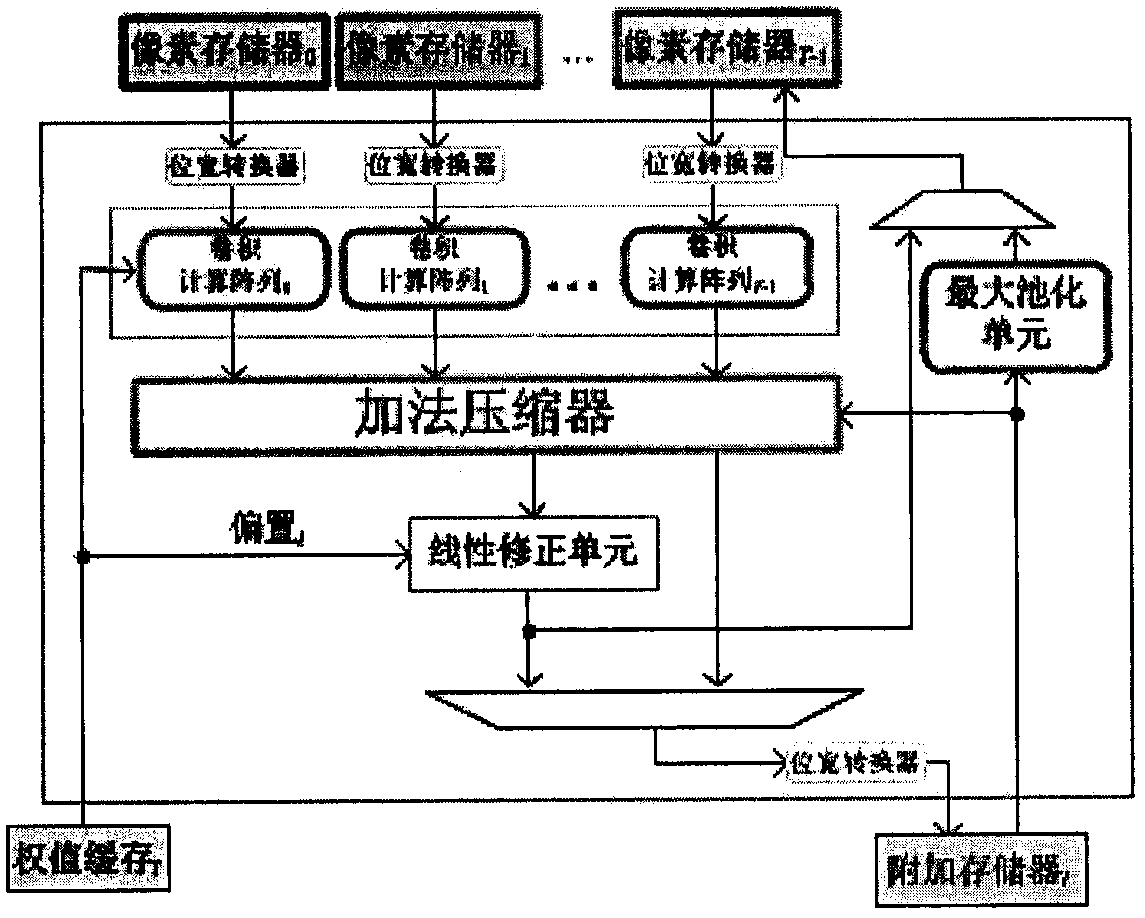

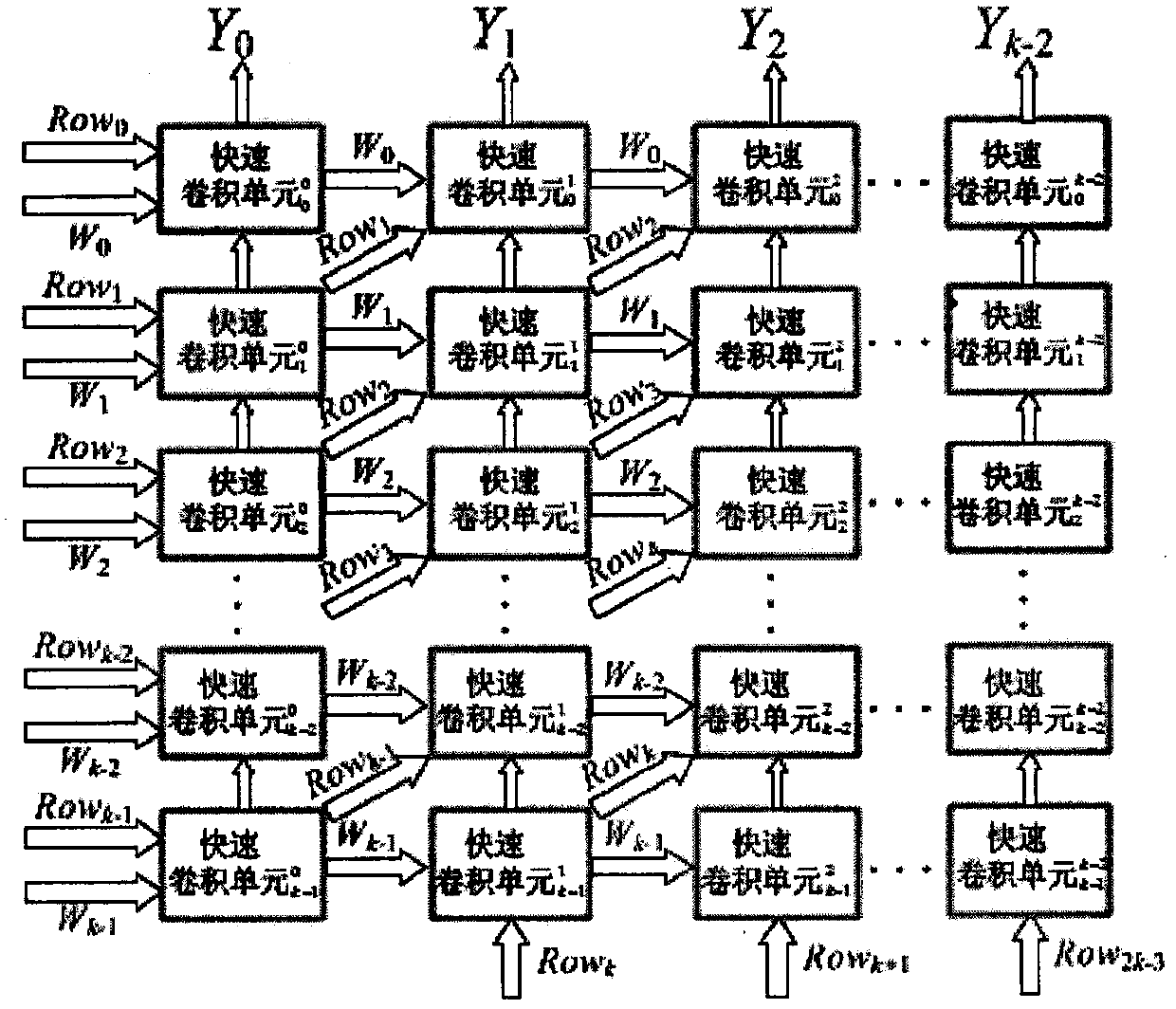

Parallel rapid FIR filter algorithm-based convolutional neural network hardware accelerator

The invention discloses a convolutional neural network hardware accelerator based on a parallel fast FIR filter algorithm. The accelerator consists mainly of computation logic and a storage unit. The computation logic comprises a multifunctional processor, fast convolution units, and a convolution computation array built from those units; the storage unit comprises a pixel memory, a weight cache, an additional memory, and an off-chip dynamic memory. The accelerator can process convolutional neural network computation at three levels of parallelism: row parallelism, intra-layer parallelism, and inter-layer parallelism. It is therefore suited to settings that require different degrees of parallelism, processing convolutional neural network computation efficiently and achieving considerable data throughput.

Owner:南京风兴科技有限公司
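
The fast FIR algorithm (FFA) trick behind such accelerators is easiest to see in its 2-parallel form: after splitting input and filter into even and odd phases, three half-length convolutions replace four, because the cross terms H0·X1 + H1·X0 equal (H0+H1)(X0+X1) - H0·X0 - H1·X1. The abstract does not state which FFA variant or degree of parallelism is used, so this 1-D sketch is illustrative only; it can be checked against direct convolution.

```python
def conv(a, b):
    """Direct linear convolution, used as the reference."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def _add(a, b, sign=1):
    """Element-wise a + sign*b, zero-padding the shorter list."""
    n = max(len(a), len(b))
    a = a + [0] * (n - len(a))
    b = b + [0] * (n - len(b))
    return [u + sign * v for u, v in zip(a, b)]

def ffa2(x, h):
    """2-parallel fast FIR: three half-length sub-convolutions instead of four."""
    n_out = len(x) + len(h) - 1
    x = x + [0] * (len(x) % 2)                           # pad to even length
    h = h + [0] * (len(h) % 2)
    x0, x1, h0, h1 = x[0::2], x[1::2], h[0::2], h[1::2]  # even/odd polyphase components
    p0 = conv(h0, x0)                                    # H0*X0
    p2 = conv(h1, x1)                                    # H1*X1
    p1 = conv(_add(h0, h1), _add(x0, x1))                # (H0+H1)*(X0+X1)
    y0 = _add(p0, [0] + p2)         # even outputs: H0X0 + H1X1 delayed one half-rate sample
    y1 = _add(_add(p1, p0, -1), p2, -1)                  # odd outputs: H0X1 + H1X0
    y = []
    for k in range(len(y0)):                             # interleave even and odd outputs
        y.append(y0[k])
        y.append(y1[k] if k < len(y1) else 0)
    return y[:n_out]
```

Trading one multiplication-heavy sub-filter for a few extra additions is what makes the FFA attractive in hardware, where multipliers dominate area and power.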

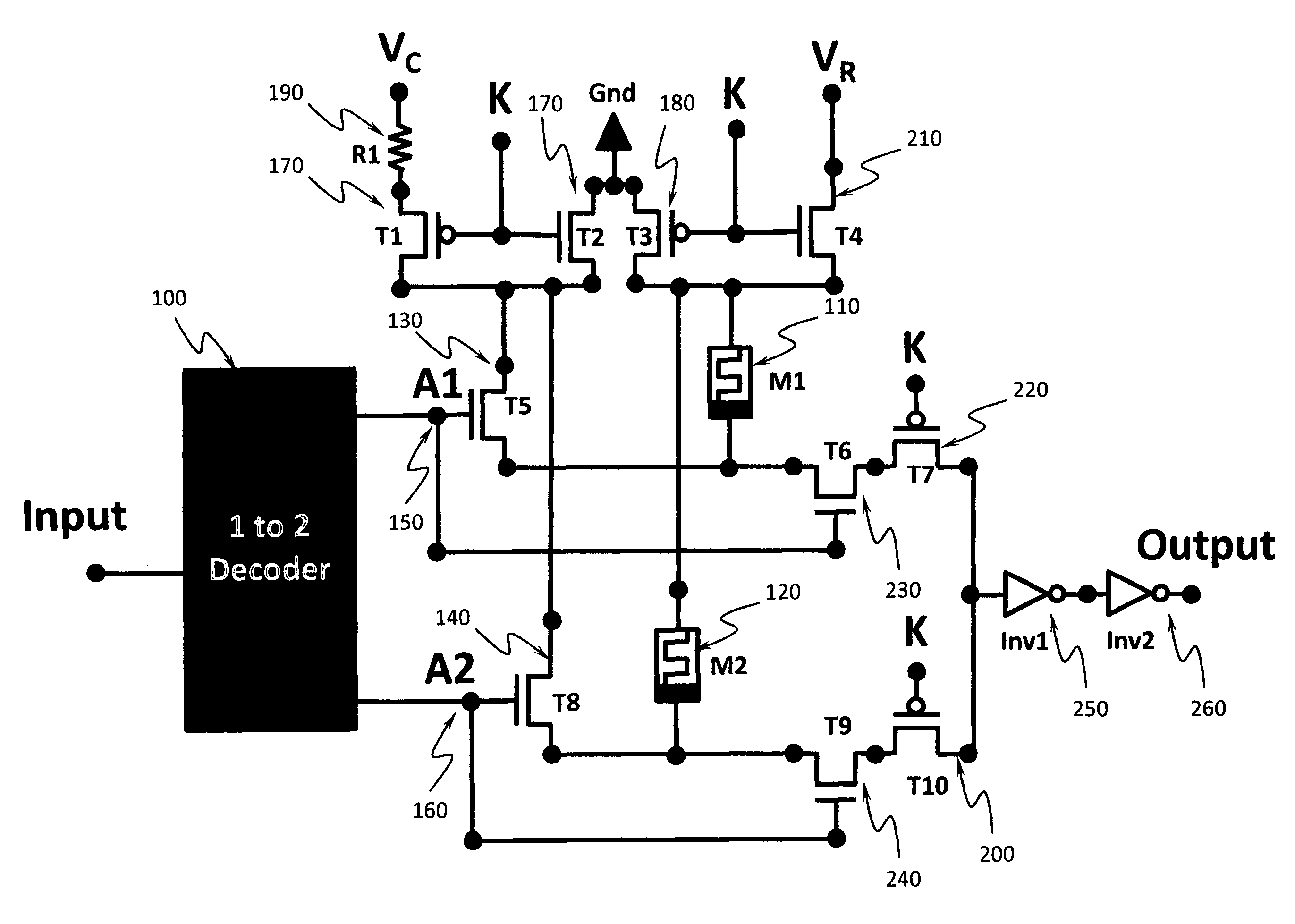

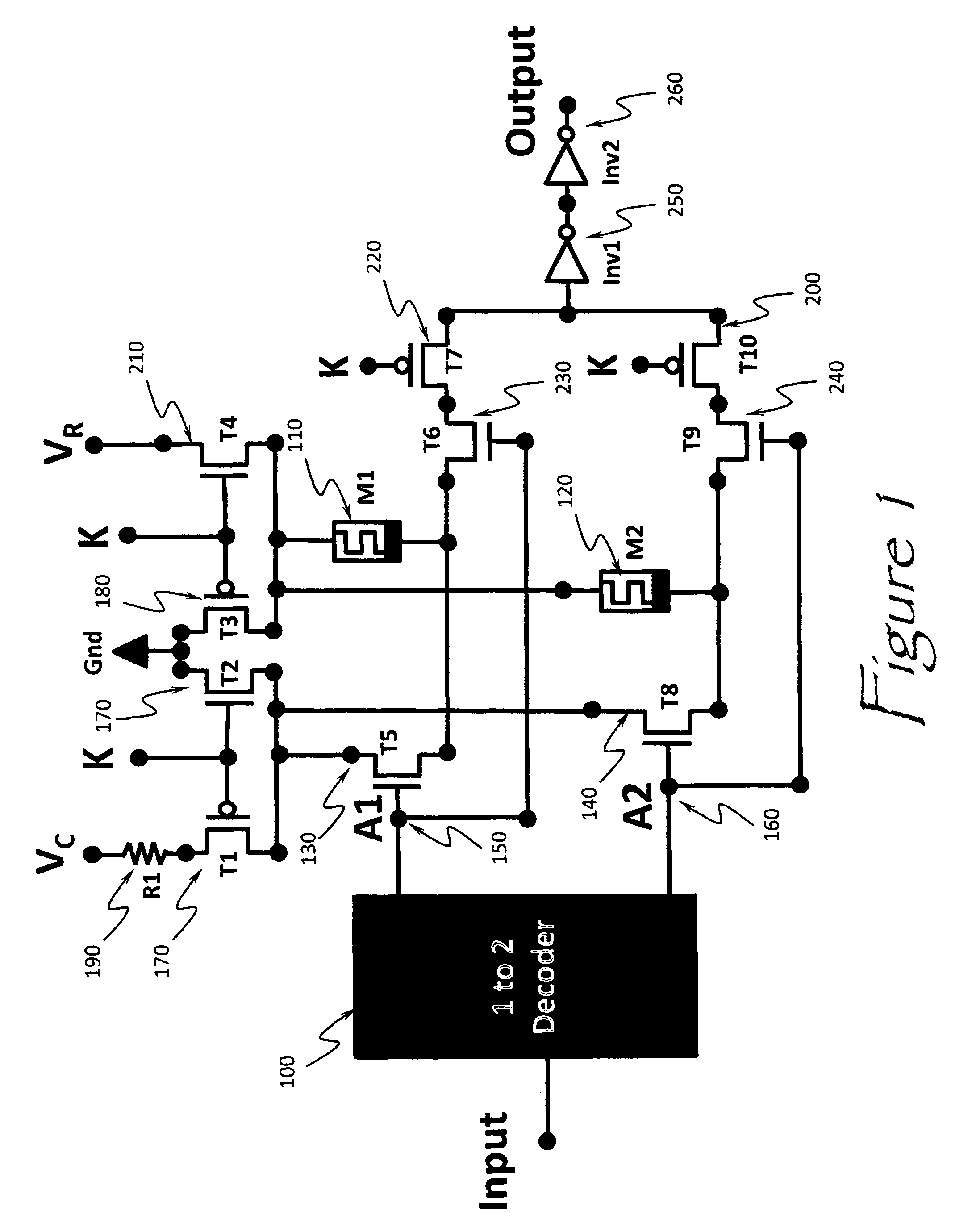

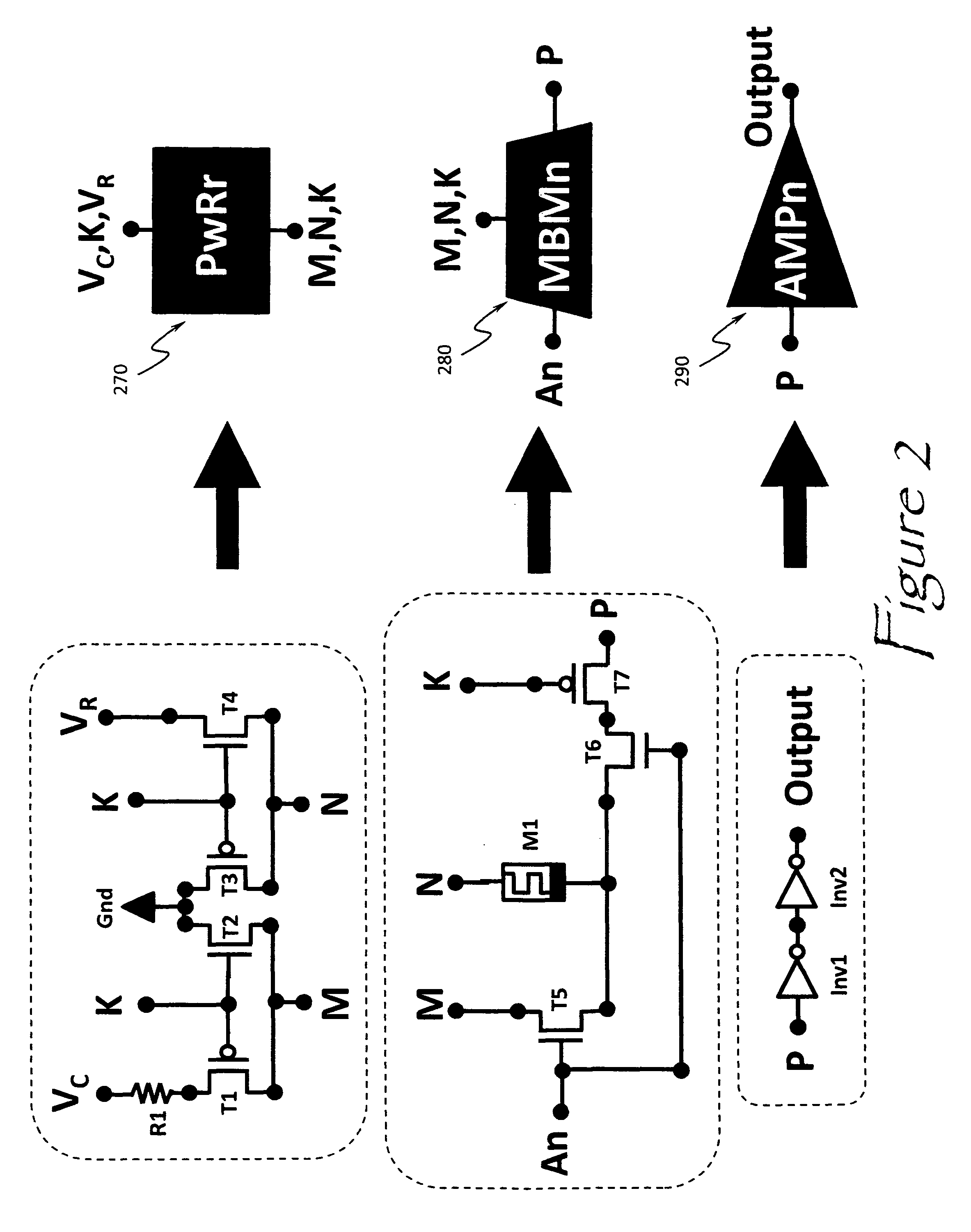

Reconfigurable memristor-based computing logic

An apparatus for reconfigurable computing logic implemented by an innovative memristor based computing architecture. The invention employs a decoder to select memristor devices whose ON / OFF impedance state will determine the reconfigurable logic output. Thus, the resulting circuit design can be electronically configured and re-configured to implement any multi-input / output Boolean logic computing functionality. Moreover, the invention retains its configured logic state without the application of a current or voltage source.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SEC OF THE AIR FORCE
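
Functionally, a decoder that selects one nonvolatile ON/OFF cell per input combination is a look-up table, so its reconfigurability can be modeled behaviorally. This sketch uses booleans in place of memristor impedance states, and the class and method names are hypothetical; one table per output bit would extend it to multi-output functions.

```python
class MemristorLUT:
    """Behavioral model: one nonvolatile cell per input combination (hypothetical names)."""

    def __init__(self, n_inputs):
        self.n = n_inputs
        self.cells = [False] * (2 ** n_inputs)   # True = ON (logic 1), False = OFF (logic 0)

    def configure(self, func):
        """Program every cell so the table implements func(*bits)."""
        for index in range(len(self.cells)):
            bits = [(index >> (self.n - 1 - k)) & 1 for k in range(self.n)]  # MSB first
            self.cells[index] = bool(func(*bits))

    def evaluate(self, *bits):
        """Decoder: turn the input bits into one cell address and read that cell's state."""
        if len(bits) != self.n:
            raise ValueError(f"expected {self.n} inputs")
        index = 0
        for b in bits:                           # MSB first, matching configure()
            index = (index << 1) | (b & 1)
        return int(self.cells[index])
```

Reprogramming the cells changes the function without touching the wiring, and in the physical device the stored states persist without power.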

Enhancement of aimpoint in simulated training systems

Owner:CUBIC CORP

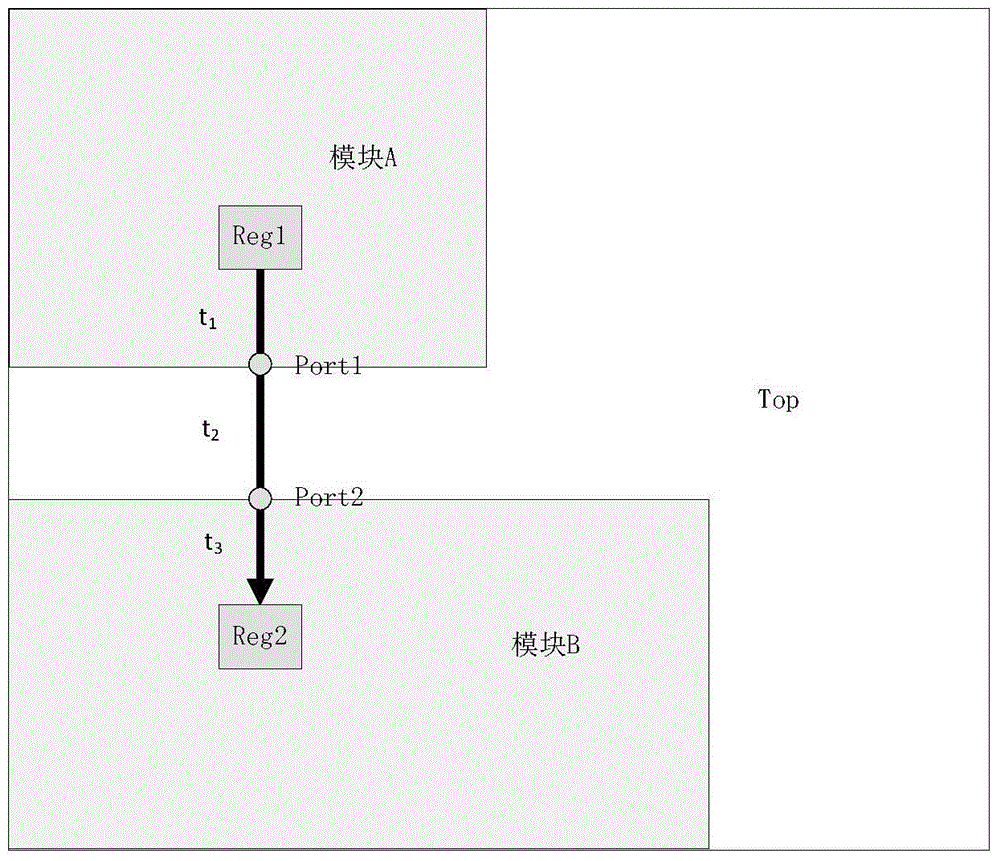

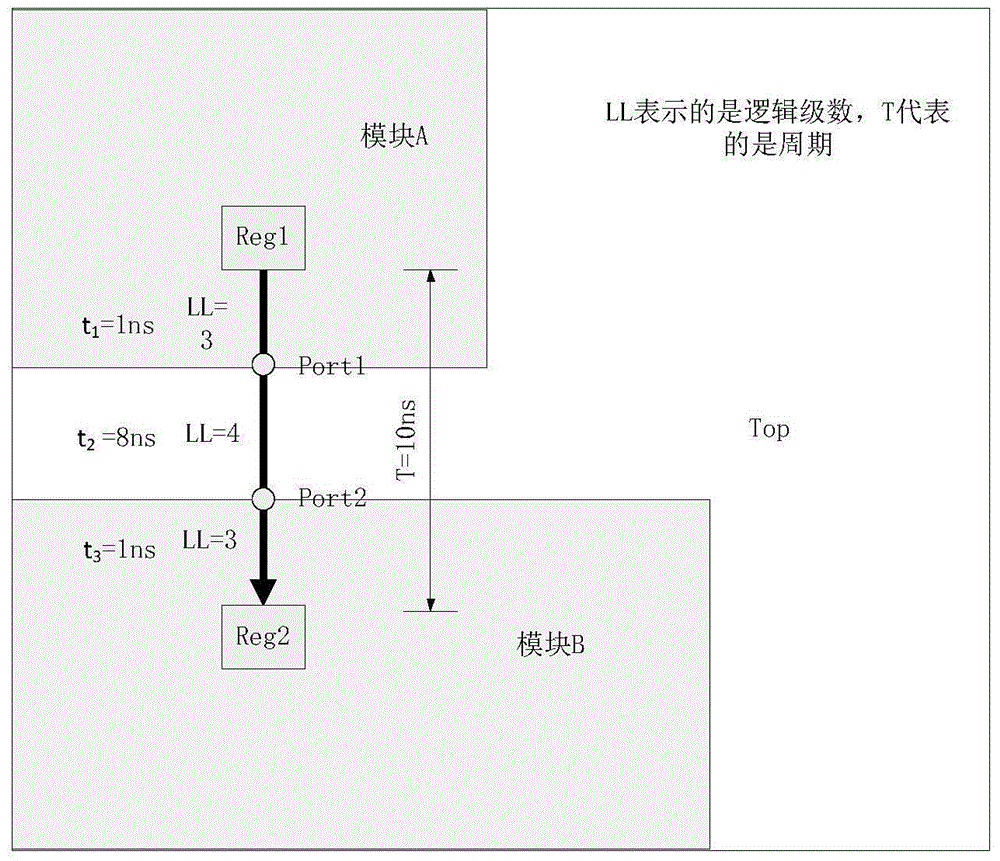

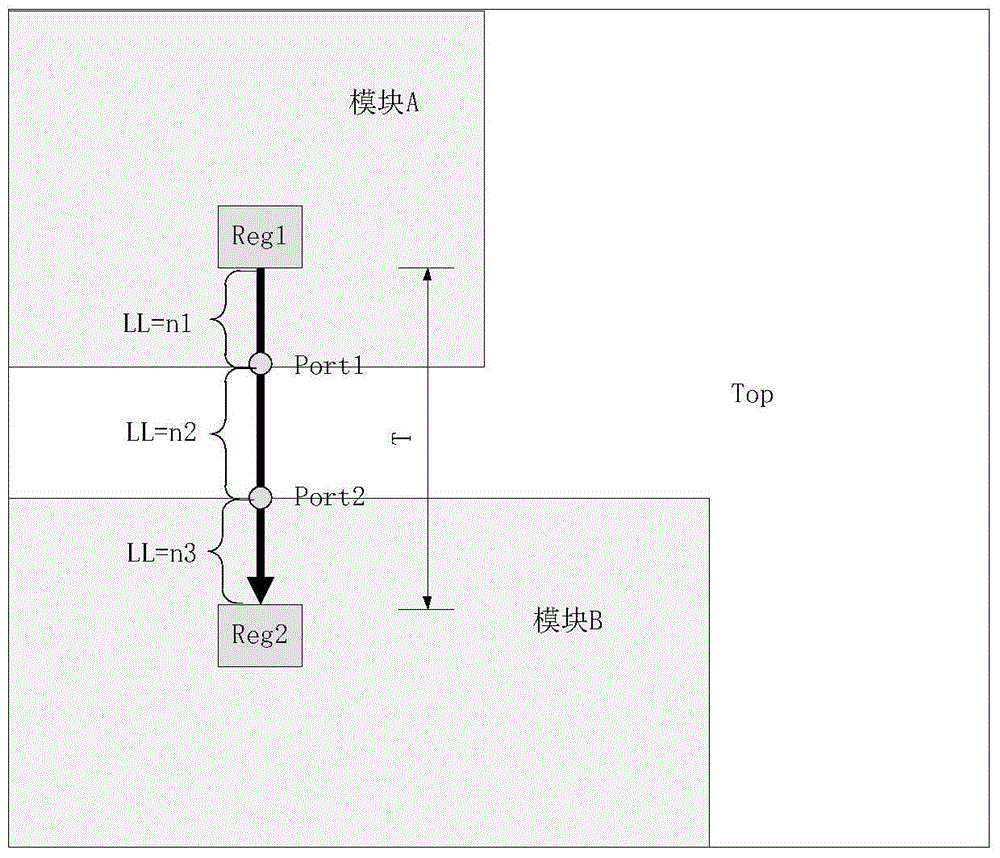

Timing budgeting method that considers distance and clock

Active · CN105095604A · Guaranteed reasonableness · Accurate Timing Budget Values · Special data processing applications · Logical depth · Processor register

The invention provides a timing budgeting method that considers physical distance and clock, addressing the coarseness and limitations of both the shortest-boundary timing budgeting method used in hierarchical physical design and the logic-depth-based timing budgeting method. The method fully accounts for the effect that the physical distance and the clock skew between two modules have on the timing of cross-module paths. It obtains a more accurate and reasonable timing budget value for each module port through the following steps: carefully analyzing the physical positions of the module port and the related boundary register, together with the logic depth of the cross-module path; calculating interconnect delay; calculating the logic-depth ratio; and estimating clock skew. This reduces the number of timing optimization iterations on cross-module paths and speeds up timing closure in chip design.

Owner:NAT UNIV OF DEFENSE TECH
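
The abstract gives no formulas, so the following is only a plausible linear model of the idea, with hypothetical parameter names: the time available to a cross-module path is the clock period, adjusted by the clock skew between launch and capture registers, minus setup time and the interconnect delay implied by the physical distance; what remains is split between the two modules in proportion to their logic depth.

```python
def port_budgets(period, launch_clock, capture_clock, setup, wire_delay,
                 depth_src, depth_dst):
    """Split a cross-module path's logic time between its source and destination modules.

    Returns (budget for logic in the source module, budget for logic in the destination).
    """
    skew = capture_clock - launch_clock          # a later capture clock gives the path more time
    logic_time = period + skew - setup - wire_delay
    if logic_time <= 0:
        raise ValueError("path fails timing even with zero logic delay")
    if depth_src + depth_dst <= 0:
        raise ValueError("logic depths must be positive")
    src = logic_time * depth_src / (depth_src + depth_dst)   # share by logic-depth ratio
    return src, logic_time - src
```

Compared with splitting the period evenly at the boundary, this gives the deeper side of the path more of the budget and charges the wire and skew terms up front, which is the kind of imbalance the abstract says cruder methods miss.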

© 2025 PatSnap. All rights reserved.