Convolutional neural network hardware accelerator based on a parallel fast FIR filter algorithm

A convolutional neural network hardware accelerator, applied in the field of biological neural network models and their physical realization, that addresses the low parallelism and high computational complexity of deep convolutional neural networks.

Embodiment Construction

[0023] Embodiments of the invention are described in detail below, and examples of them are illustrated in the accompanying drawings, where the same name is used throughout to refer to modules with the same or similar functionality. The embodiments are described with reference to the accompanying drawings.

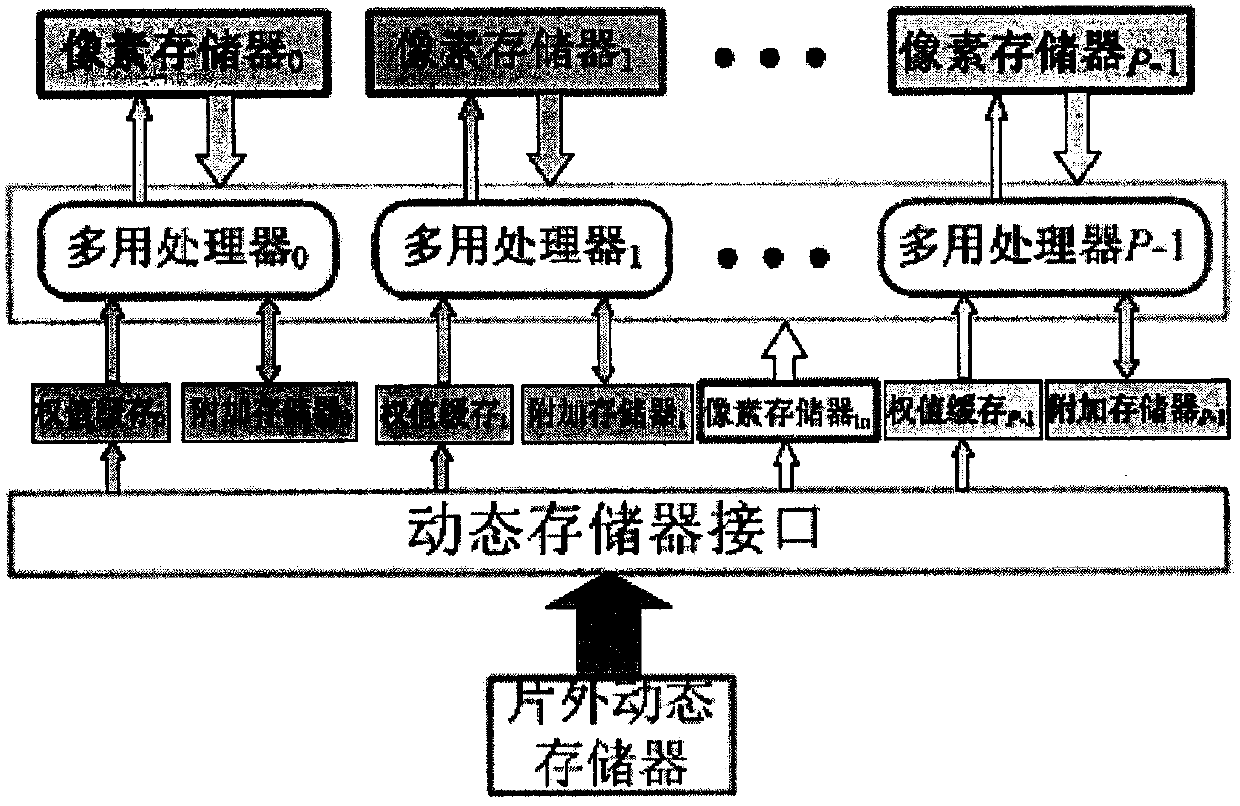

[0024] Figure 1 shows the overall structure of the present invention. The convolutional neural network hardware accelerator based on the parallel fast FIR filter algorithm includes:

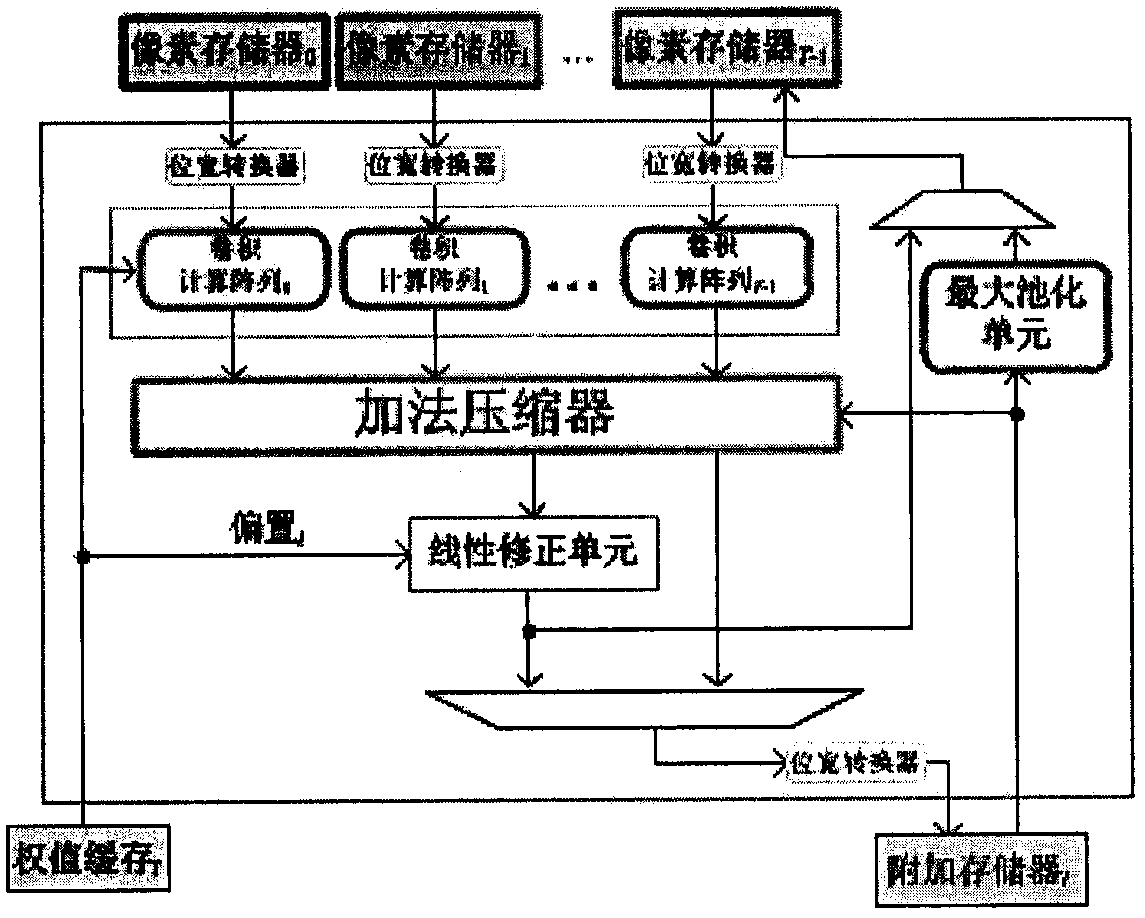

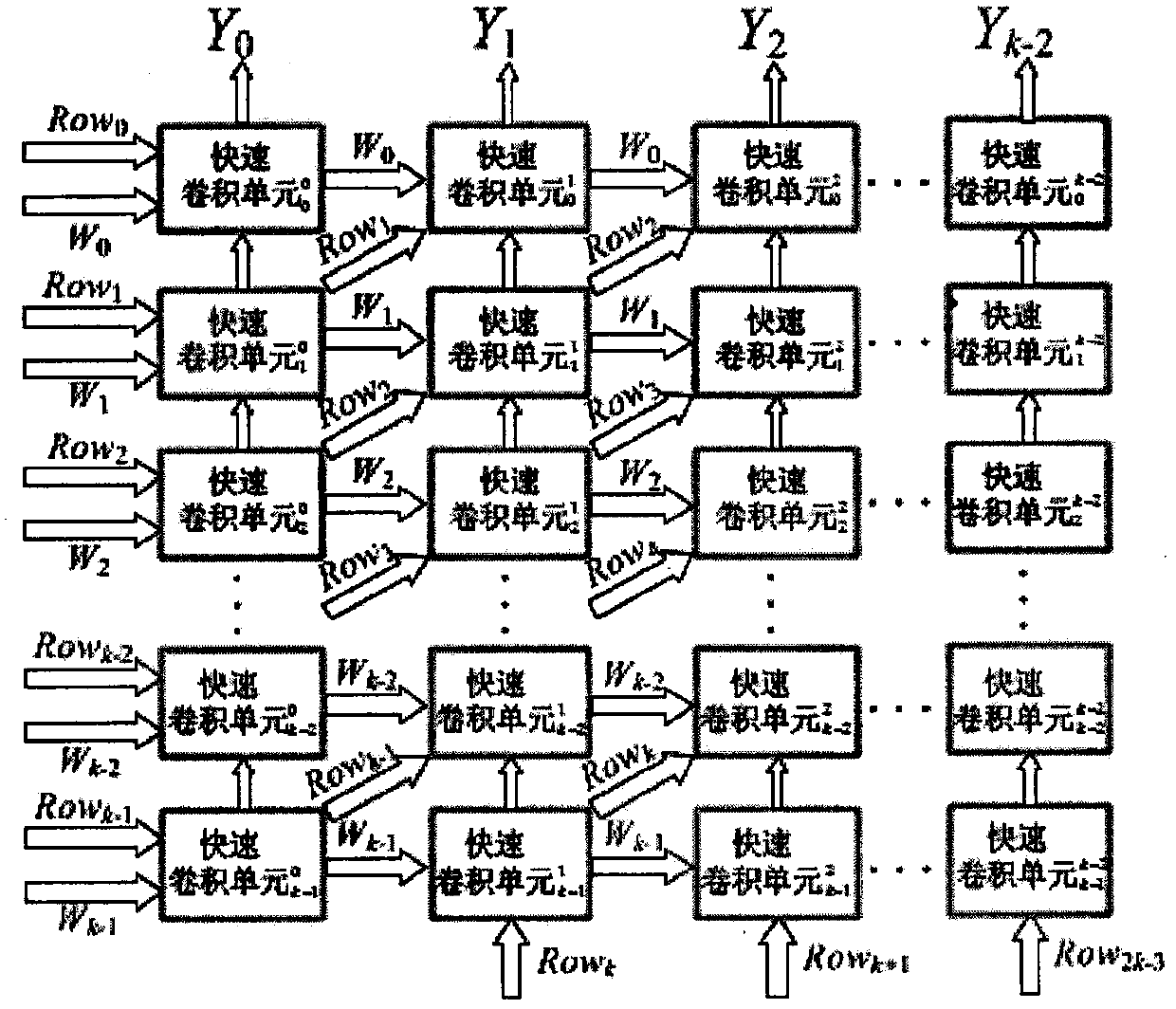

[0025] 1. P multi-purpose processors (with the convolution kernel size taken to be k×k), which receive input pixel neurons, perform operations such as bit-width conversion, convolution, adder-tree accumulation, linear rectification (ReLU), and max pooling, and store the results in the corresponding storage unit.

[0026] 2. Pixel memory, used to store portions of the input images and feature maps.

[0027] 3. Wei...
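The processing stages listed for the multi-purpose processors in [0025] can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented hardware design: the function names (`adder_tree`, `conv2d_valid`, `relu`, `max_pool_2x2`, `process`) are hypothetical, the 2×2 pooling window with stride 2 is assumed, the convolution is computed directly rather than with the parallel fast FIR algorithm, and the bit-width conversion stage is omitted because the source does not give word lengths.

```python
def adder_tree(values):
    """Sum values pairwise, level by level, the way a hardware adder tree reduces them."""
    level = list(values)
    if not level:
        return 0
    while len(level) > 1:
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])  # odd element passes through to the next level
        level = nxt
    return level[0]


def conv2d_valid(image, kernel):
    """Direct k x k sliding-window convolution (no kernel flip, as is usual in CNNs)."""
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # k*k multiplications, then reduction through the adder tree
            products = [image[r + i][c + j] * kernel[i][j]
                        for i in range(k) for j in range(k)]
            row.append(adder_tree(products))
        out.append(row)
    return out


def relu(fmap):
    """Linear rectification: negative activations are clamped to zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool_2x2(fmap):
    """Max pooling over non-overlapping 2 x 2 windows (assumed window size)."""
    return [[max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
             for c in range(0, len(fmap[0]) - 1, 2)]
            for r in range(0, len(fmap) - 1, 2)]


def process(image, kernel):
    """Chain the stages: convolution with adder tree, then ReLU, then max pooling."""
    return max_pool_2x2(relu(conv2d_valid(image, kernel)))
```

Calling `process(image, kernel)` on a 4×4 image with a 3×3 kernel yields a single pooled value, since the 2×2 convolution output is reduced by one pooling window.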
