Convolutional neural network back propagation mapping method based on cyclic recombination and blocking

A technology concerning convolutional neural networks and the backpropagation algorithm, applied in the field of on-chip retraining. It addresses problems such as large computation and storage requirements, scarcity, and the increased difficulty of designing supporting software.


Embodiment Construction

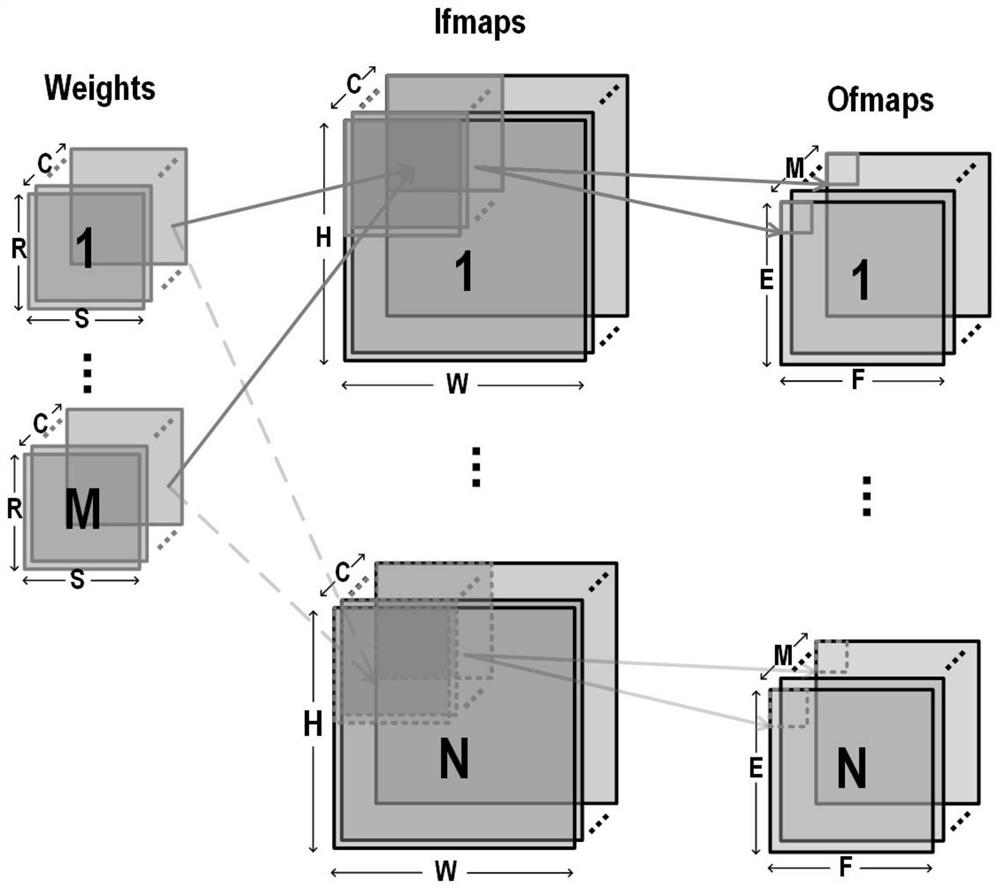

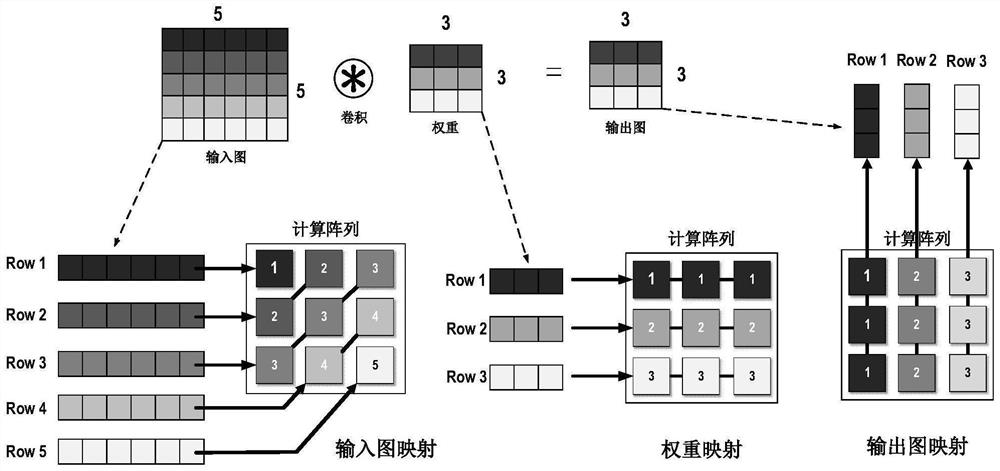

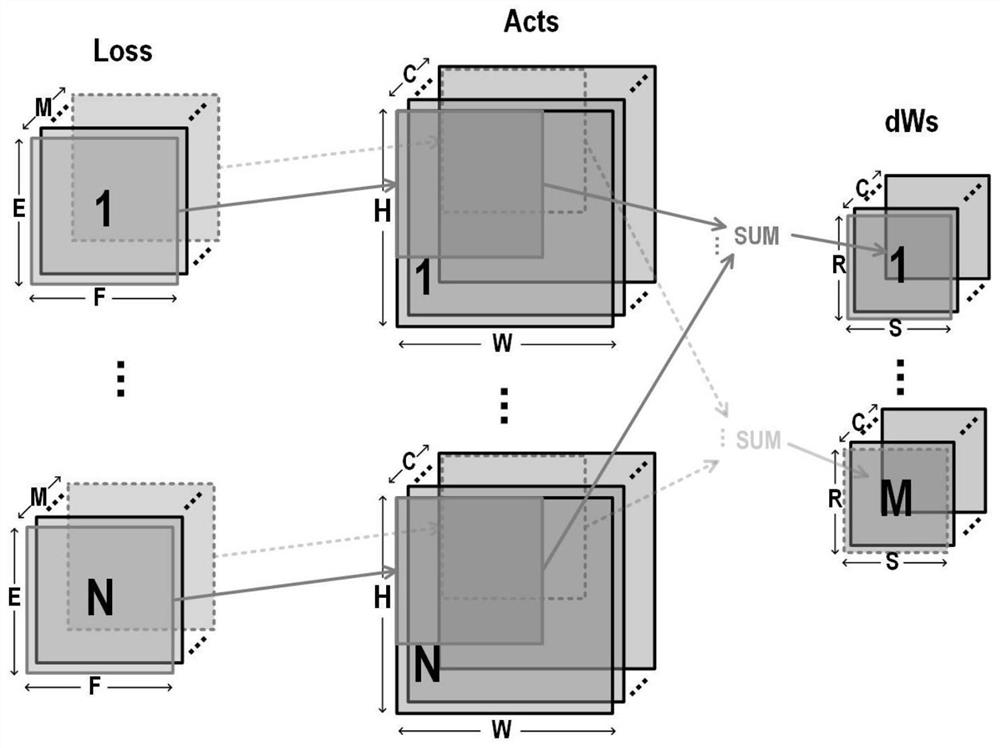

[0029] Backpropagation in a convolutional neural network is usually divided into two steps: error propagation and weight update. Error propagation is essentially similar to forward propagation, except that the input map and weights are different; the scale of the convolution is the same as in forward propagation. In the weight-update step, however, the input map becomes the activation tensor of the previous layer, and the weight becomes the error tensor of the current layer. These two tensors may be far larger than those used in forward propagation. For example, the error tensor of the current layer, acting as the weight, may reach 100 pixels × 100 pixels, whereas forward-propagation kernels are usually only 3×3 or 5×5. Consequently, accelerators that support only forward propagation are usually poorly suited to such large convolutions.
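The weight-update step described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the patent's method: it assumes a single channel, stride 1, no padding, and forward propagation as a valid cross-correlation. `weight_grad` shows the current layer's error tensor acting as the convolution kernel, so that kernel is as large as the forward output map. `weight_grad_blocked` and its `max_k` parameter are hypothetical: they illustrate one generic tiling approach that splits the large error "kernel" into pieces no larger than a small-kernel engine might accept, then sums the partial results. The source's own recombination-and-blocking scheme is not described in this excerpt.

```python
"""Sketch: weight-gradient convolution and a tiled (blocked) evaluation of it.

Assumptions (not from the source): single channel, stride 1, no padding,
valid cross-correlation as the forward operator, and a hypothetical
accelerator limit max_k on the kernel side length it accepts.
"""
import random
from typing import List

Matrix = List[List[float]]


def correlate_valid(x: Matrix, k: Matrix) -> Matrix:
    """Valid 2-D cross-correlation: out[i][j] = sum_{u,v} k[u][v] * x[i+u][j+v]."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(k[u][v] * x[i + u][j + v] for u in range(kh) for v in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]


def weight_grad(x: Matrix, dy: Matrix) -> Matrix:
    """dW = correlate(previous-layer activations x, current-layer error dy).

    dy plays the role of the kernel, so this 'kernel' is as large as the
    forward output map (e.g. 100x100) rather than 3x3 or 5x5.
    """
    return correlate_valid(x, dy)


def weight_grad_blocked(x: Matrix, dy: Matrix, max_k: int) -> Matrix:
    """Same result, but dy is cut into tiles no larger than max_k x max_k.

    Each tile is correlated with the matching window of x (a small
    convolution), and the kh x kw partial gradients are accumulated.
    """
    kh = len(x) - len(dy) + 1  # forward kernel size recovered from shapes
    kw = len(x[0]) - len(dy[0]) + 1
    dw = [[0.0] * kw for _ in range(kh)]
    for p in range(0, len(dy), max_k):
        for q in range(0, len(dy[0]), max_k):
            tile = [row[q:q + max_k] for row in dy[p:p + max_k]]
            th, tw = len(tile), len(tile[0])
            # Window of x touched by this tile: (th + kh - 1) x (tw + kw - 1).
            window = [row[q:q + tw + kw - 1] for row in x[p:p + th + kh - 1]]
            part = correlate_valid(window, tile)
            for u in range(kh):
                for v in range(kw):
                    dw[u][v] += part[u][v]
    return dw


if __name__ == "__main__":
    random.seed(0)
    K = 3   # forward kernel side (small, as in the text)
    H = 12  # input side; output and error side is H - K + 1 = 10
    x = [[random.uniform(-1, 1) for _ in range(H)] for _ in range(H)]
    n = H - K + 1
    dy = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(n)]

    full = weight_grad(x, dy)
    tiled = weight_grad_blocked(x, dy, max_k=4)  # 10 is not a multiple of 4
    print(len(dy), "x", len(dy[0]), "error tensor ->",
          len(full), "x", len(full[0]), "gradient")
    print(max(abs(a - b) for ra, rb in zip(full, tiled)
              for a, b in zip(ra, rb)) < 1e-9)
```

Running the script prints `10 x 10 error tensor -> 3 x 3 gradient` followed by `True`, showing that the tiled sum matches the direct computation. Tiling keeps each partial convolution small, at the cost of reading overlapping windows of the activation map (adjacent windows share `kh - 1` rows or `kw - 1` columns).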

[0030] The technical solution provided b...
