Voice interaction method and system, storage medium and electronic equipment

A voice interaction and voice information technology, applied in voice analysis, voice recognition, instruments, etc. It addresses the problems that users have different expression styles, that semantic understanding processing is insufficient, and that user intentions cannot be accurately understood, achieving the effect of accurate recognition.

Examples

Embodiment 1

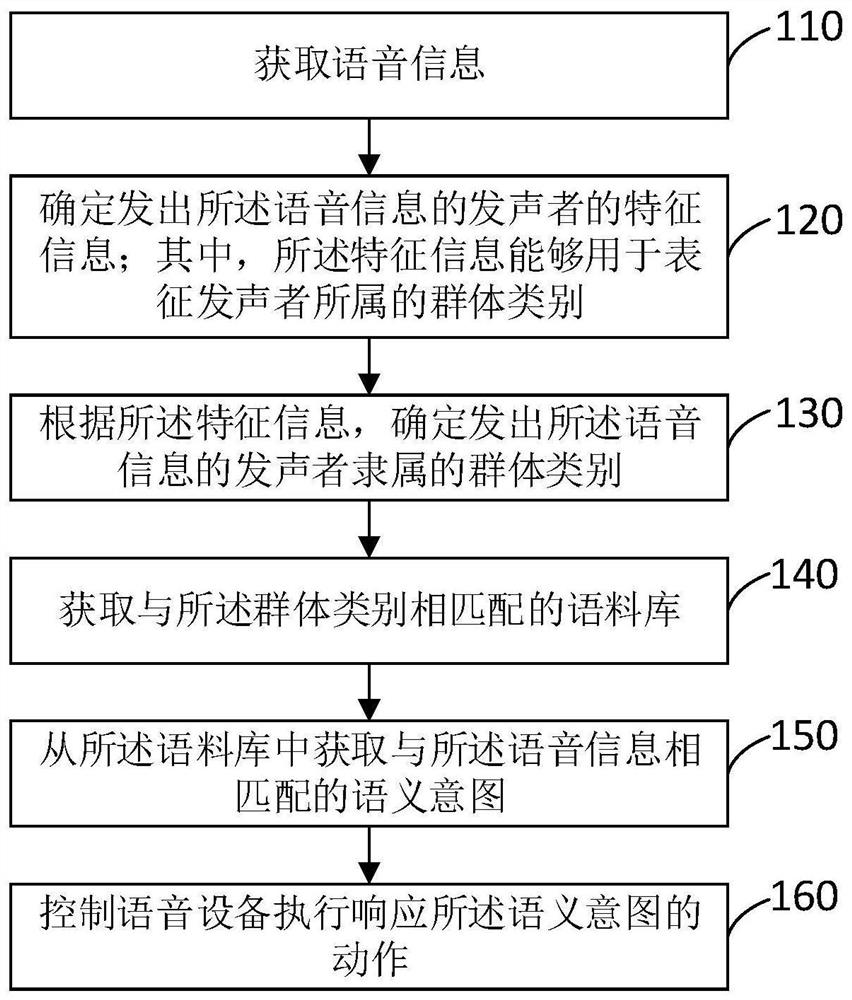

[0049] According to an embodiment of the present invention, a voice interaction method is provided. Figure 1 shows a schematic flowchart of the voice interaction method proposed in Embodiment 1 of the present invention. As shown in Figure 1, the voice interaction method may include Steps 110 to 160.

[0050] In step 110, voice information is acquired.

[0051] Here, the voice information refers to the voice dialogue between the user and the smart device. For example, if the user interacts with an air conditioner and says "help me check tomorrow's weather", then "help me check tomorrow's weather" is taken as the voice information. The smart device may be an air conditioner, a refrigerator, a TV, a range hood, or another smart device with a voice function.

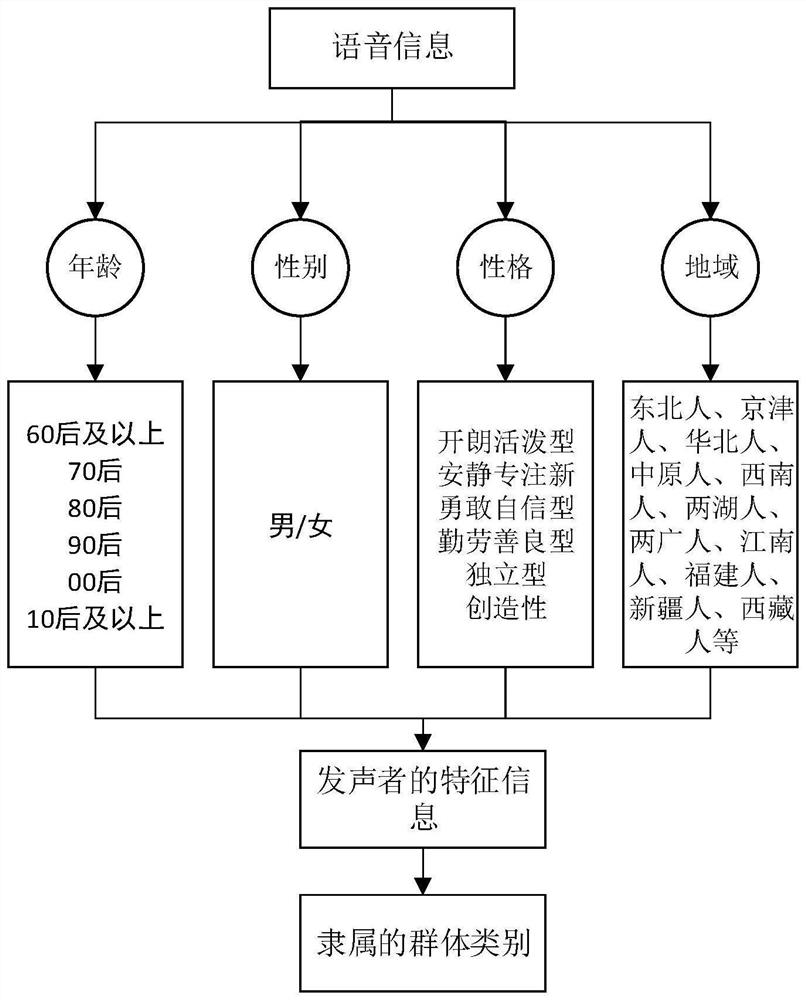

[0052] In step 120, the characteristic information of the speaker who uttered the voice information is determined, wherein the characteristic information can be used to c...

Embodiment 2

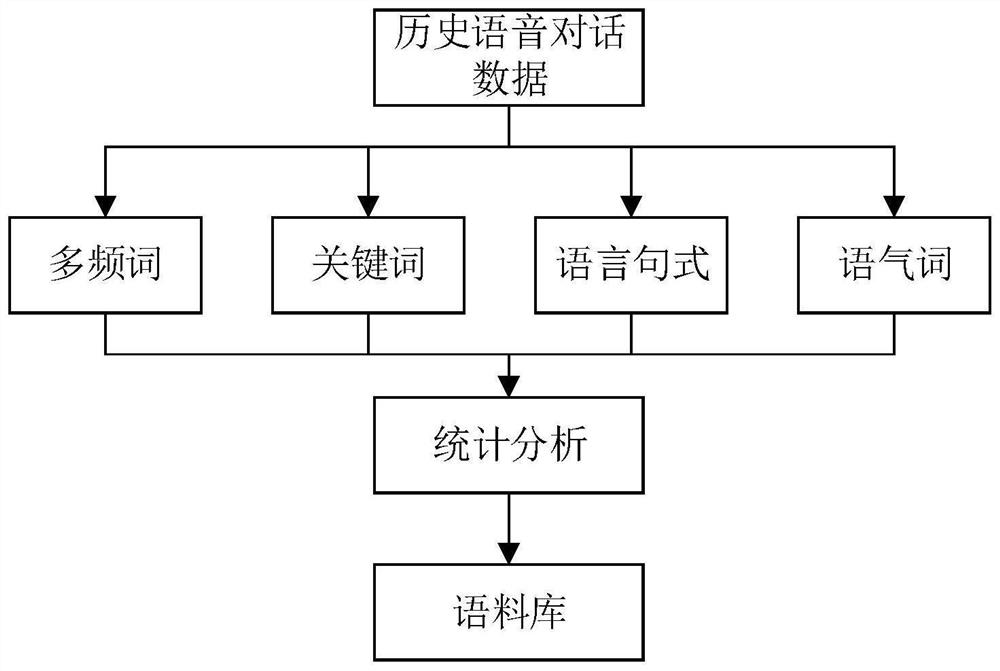

[0069] On the basis of the foregoing embodiments, Embodiment 2 of the present invention may further provide a voice interaction method. The voice interaction method may include Steps 210 to 260.

[0070] In step 210, voice information is acquired.

[0071] Here, the voice information refers to the voice dialogue between the user and the smart device. For example, if the user interacts with an air conditioner and says "help me check tomorrow's weather", then "help me check tomorrow's weather" is taken as the voice information. The smart device may be an air conditioner, a refrigerator, a TV, a range hood, or another smart device with a voice function.

[0072] In step 220, the characteristic information of the speaker who uttered the voice information is determined, wherein the characteristic information can be used to characterize the group category to which the speaker belongs.

[0073] The feature information includes at ...
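The idea in step 220, that characteristic information of the speaker identifies a group category, can be illustrated with a minimal rule-based sketch. The actual feature list in [0073] is truncated here, so the fields `estimated_age` and `dialect`, the category names, and the age thresholds below are all hypothetical; a practical system would more likely obtain such features from a trained age or accent classifier rather than hand-written rules.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class GroupCategory(Enum):
    # Hypothetical group categories; the source does not enumerate them.
    CHILD = "child"
    ADULT = "adult"
    ELDERLY = "elderly"
    DIALECT_ADULT = "dialect_adult"


@dataclass(frozen=True)
class SpeakerFeatures:
    # Hypothetical characteristic information extracted from the voice.
    estimated_age: int
    dialect: Optional[str] = None  # None stands for standard (non-dialect) speech


def determine_group_category(features: SpeakerFeatures) -> GroupCategory:
    """Map speaker features to a group category using simple, assumed rules."""
    if features.estimated_age < 12:       # assumed child threshold
        return GroupCategory.CHILD
    if features.estimated_age >= 65:      # assumed elderly threshold
        return GroupCategory.ELDERLY
    if features.dialect is not None:      # adults speaking a dialect
        return GroupCategory.DIALECT_ADULT
    return GroupCategory.ADULT
```

Rule order matters in this sketch: age is checked before dialect, so an elderly dialect speaker falls into `ELDERLY`. Whether the method gives one feature priority over another is not stated in the visible text.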

Embodiment 3

[0121] According to an embodiment of the present invention, a voice interaction system is also provided, including:

[0122] A voice acquisition module, configured to acquire voice information;

[0123] A feature determination module, configured to determine the feature information of the speaker who uttered the voice information;

[0124] A group category determination module, configured to determine, according to the feature information, the group category to which the speaker who uttered the voice information belongs;

[0125] A corpus acquisition module, configured to acquire a corpus matching the group category;

[0126] A semantic intent determination module, configured to obtain a semantic intent that matches the voice information from the corpus;

[0127] A control module, configured to control the smart device to perform an action in response to the semantic intent.
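How the six modules above hand data to one another can be sketched as a small pipeline. This is an illustrative sketch only, under several assumptions not in the source: the utterance is assumed to be already transcribed to text, the feature determination output is reduced to a single `estimated_age` value, the two corpora and their phrases are invented, and intent matching is plain substring lookup. `SmartDevice.perform` is a hypothetical control hook, not a real device API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical corpora: for each group category, each semantic intent is
# paired with phrasings assumed typical of that group (invented examples).
CORPORA: Dict[str, Dict[str, List[str]]] = {
    "adult": {
        "query_weather": ["check tomorrow's weather", "weather forecast"],
        "set_temperature": ["set the temperature to"],
    },
    "elderly": {
        "query_weather": ["will it rain tomorrow", "is it cold out tomorrow"],
        "set_temperature": ["make it warmer", "too cold in here"],
    },
}


@dataclass
class VoiceInfo:
    # Voice acquisition + feature determination output (both assumed upstream).
    text: str           # assumed: already transcribed by a speech recognizer
    estimated_age: int  # assumed: the only speaker feature used here


def determine_group_category(age: int) -> str:
    # Assumed rule: one age threshold separates the two hypothetical groups.
    return "elderly" if age >= 65 else "adult"


def acquire_corpus(group: str) -> Dict[str, List[str]]:
    # Corpus acquisition: pick the corpus matching the group category.
    return CORPORA[group]


def determine_intent(text: str, corpus: Dict[str, List[str]]) -> Optional[str]:
    # Semantic intent determination: first intent with a phrase in the utterance.
    lowered = text.lower()
    for intent, phrases in corpus.items():
        if any(phrase in lowered for phrase in phrases):
            return intent
    return None


@dataclass
class SmartDevice:
    name: str
    log: List[str] = field(default_factory=list)

    def perform(self, intent: str) -> None:
        # Hypothetical control hook; a real device would act on hardware here.
        self.log.append(f"{self.name}: {intent}")


def handle(voice: VoiceInfo, device: SmartDevice) -> Optional[str]:
    # Control module: run the pipeline and act only if an intent was found.
    group = determine_group_category(voice.estimated_age)
    corpus = acquire_corpus(group)
    intent = determine_intent(voice.text, corpus)
    if intent is not None:
        device.perform(intent)
    return intent
```

For example, `handle(VoiceInfo("Will it rain tomorrow?", 70), SmartDevice("air conditioner"))` resolves to `"query_weather"` through the elderly corpus, while the same sentence from a 30-year-old speaker finds no match in the adult corpus and returns `None`. This illustrates why selecting a group-specific corpus before intent matching can matter for differently phrased requests.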
