Neural network quantization method, device, storage medium and electronic equipment

A neural network quantization technology, applied in the fields of neural network quantization methods, devices, storage media and electronic equipment. It addresses problems such as the reduced running speed of neural network processor chips and improves data transmission efficiency.


Embodiment Construction

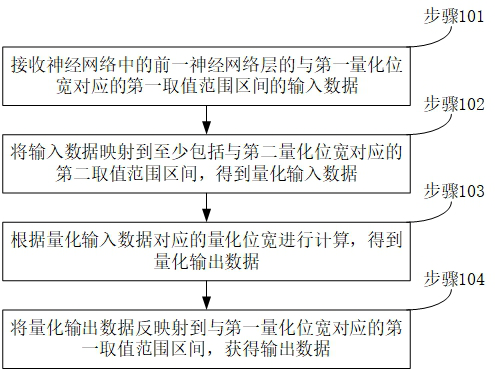

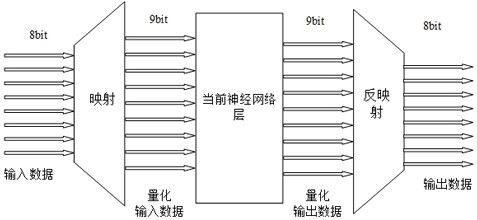

[0040] In order to make the objectives, technical solutions and advantages of the present disclosure clearer, the present disclosure is described in further detail below with reference to the accompanying drawings and embodiments.

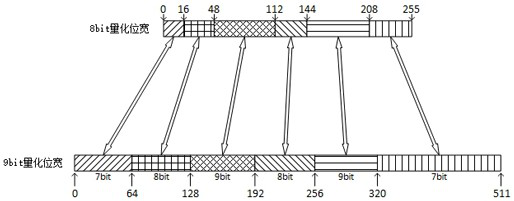

[0041] In commonly used quantization methods, the quantization bit width is generally an aligned bit width such as 16 bit ($2^4$), 8 bit ($2^3$), 4 bit ($2^2$), 2 bit ($2^1$) or 1 bit ($2^0$). A bit width that is not of the form $2^n$ (i.e. an unaligned bit width) is generally not chosen, because it reduces the efficiency of bus access. The computing units inside the NPU, however, can ignore this difference: for example, 8-bit data can be converted into 9-bit data for computation, and 9-bit computation obviously has higher precision than 8-bit computation. Based on this consideration, the quantized data of the original quantization bit width can be widened inside the NPU before the neural network layer operation is performed, so as to improve the operation accur...
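The two effects described in [0041] can be illustrated numerically. The following is a minimal sketch, not the method of the disclosure: it assumes symmetric per-tensor uniform quantization with round-to-nearest, and all function names (`quantize`, `max_abs_error`, `is_aligned_width`, `packed_bytes`) are hypothetical. It shows that one extra bit roughly halves the worst-case rounding error bound (the step shrinks from max|x|/127 to max|x|/255), and that a 9-bit width is not a power of two, so values packed back to back straddle byte boundaries, which is the bus-access cost the paragraph refers to.

```python
from typing import List, Tuple


def quantize(values: List[float], bits: int) -> Tuple[List[int], float]:
    """Assumed scheme (hypothetical): symmetric, per-tensor, round-to-nearest."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bit, 255 for 9 bit
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak > 0 else 1.0  # guard against an all-zero tensor
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    return [c * scale for c in codes]


def max_abs_error(values: List[float], bits: int) -> float:
    """Largest reconstruction error after a quantize/dequantize round trip."""
    codes, scale = quantize(values, bits)
    return max(abs(v - r) for v, r in zip(values, dequantize(codes, scale)))


def is_aligned_width(bits: int) -> bool:
    """True for widths of the form 2^n (1, 2, 4, 8, 16, ...)."""
    return bits > 0 and bits & (bits - 1) == 0


def packed_bytes(count: int, bits: int) -> int:
    """Bytes needed to transfer `count` values packed back to back at `bits` each.

    For unaligned widths such as 9 bit, individual values cross byte
    boundaries, so unpacking needs extra shifting and masking.
    """
    return (count * bits + 7) // 8


if __name__ == "__main__":
    data = [i / 1000 - 0.5 for i in range(1001)]  # synthetic test tensor
    for b in (8, 9):
        print(b, is_aligned_width(b), packed_bytes(len(data), b), max_abs_error(data, b))
```

Running the script prints, for each width, whether it is aligned, the packed transfer size of the synthetic tensor and its maximum round-trip error; the 9-bit error comes out smaller, at the price of an unaligned layout if 9-bit data were moved over the bus.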
