Skeleton-graph human behavior recognition method and system based on multi-stream fusion

A behavior recognition method and system in the field of behavior recognition. It addresses three problems: joint points alone carry limited motion information, this limits recognition accuracy, and a wide variety of behaviors is hard to distinguish. The claimed effects are improved long-range spatial perception, enhanced motion features and more accurate prediction of action categories.

Embodiment

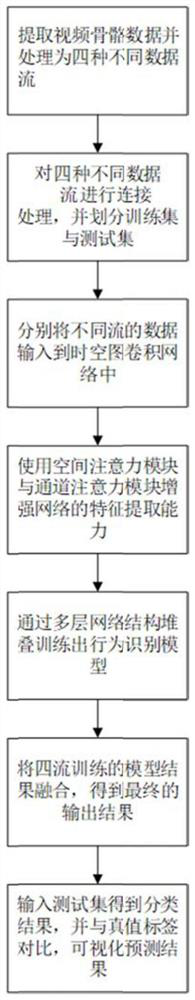

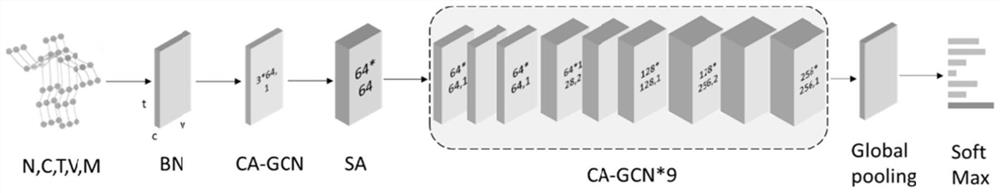

[0100] A method for human behavior recognition based on multi-stream fusion, comprising the following steps:

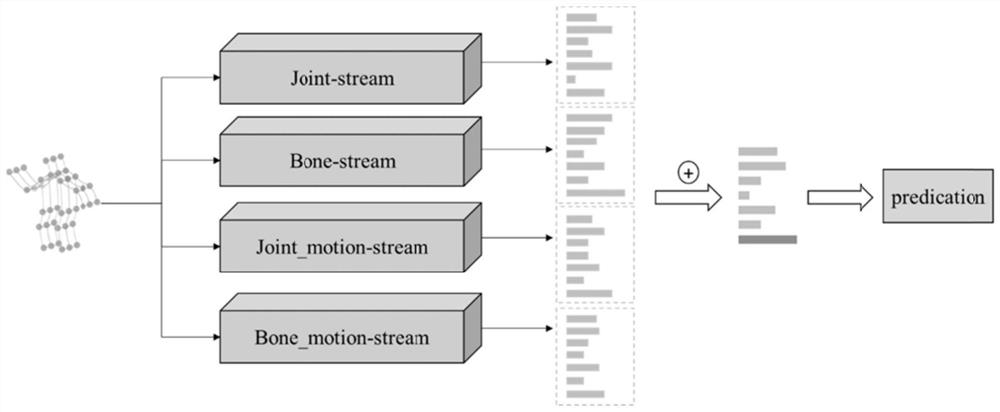

[0101] S1. Extract four data streams from the video skeleton data: the joint stream, bone stream, joint motion stream and bone motion stream. The workflow is as follows:

[0102] (1.1) Use one public skeleton (joint-point) dataset and one public RGB dataset;

[0103] (1.2) For the RGB dataset, use the public OpenPose toolbox to extract 18 human joint points from each frame. Each joint is represented by its coordinates, and the results are assembled into the data format expected by the network input;
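The excerpt does not state the exact input format. As a minimal sketch, assuming an ST-GCN-style layout (C, T, V, M) = (channels, frames, joints, persons) and OpenPose's per-joint (x, y, confidence) triples, the 18 joints of each frame could be packed as follows. The function name `frames_to_tensor` and the padding limits `max_frames=300` and `max_persons=2` are illustrative assumptions, not values from the patent.

```python
import numpy as np

NUM_JOINTS = 18   # OpenPose COCO-18 body model, matching the 18 joints in (1.2)
NUM_CHANNELS = 3  # (x, y, confidence) per joint, as OpenPose reports for 2-D keypoints


def frames_to_tensor(frames, max_frames=300, max_persons=2):
    """Pack per-frame OpenPose keypoints into a (C, T, V, M) array.

    `frames` has one entry per video frame; each entry is a list of people,
    and each person is a flat list of 18 * 3 floats [x0, y0, c0, x1, y1, c1, ...]
    (the layout of OpenPose's "pose_keypoints_2d" field). Frames beyond
    `max_frames` and people beyond `max_persons` are dropped (hypothetical
    limits); missing frames or people stay zero-padded.
    """
    data = np.zeros((NUM_CHANNELS, max_frames, NUM_JOINTS, max_persons), dtype=np.float32)
    for t, people in enumerate(frames[:max_frames]):
        for m, keypoints in enumerate(people[:max_persons]):
            joints = np.asarray(keypoints, dtype=np.float32).reshape(NUM_JOINTS, NUM_CHANNELS)
            data[:, t, :, m] = joints.T  # (V, C) -> (C, V)
    return data


# One frame with one synthetic person whose values are 0, 1, 2, ...
person = [float(i) for i in range(NUM_JOINTS * NUM_CHANNELS)]
tensor = frames_to_tensor([[person]], max_frames=4)
print(tensor.shape)  # (3, 4, 18, 2)
```

Zero-padding to a fixed T and M lets clips of different lengths and person counts be batched together, at the cost of some wasted computation on empty slots.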

[0104] (1.3) For the skeleton data obtained in (1.1) and (1.2), apply four different preprocessing methods to derive four data streams, as shown in Image 6: the joint stream, bone stream, joint motion stream and bone motion stream.
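This excerpt does not spell out the four preprocessing methods. One common formulation in multi-stream skeleton GCN work is assumed here. The joint stream is the raw coordinates. The bone stream subtracts each joint's parent joint. Each motion stream is the difference between consecutive frames of the joint or bone stream. The `PARENTS` table (taken from the ST-GCN "openpose" graph, rooted at the neck) and all function names are hypothetical. Input arrays use a (C, T, V, M) layout.

```python
import numpy as np

# Hypothetical parent index for each of the 18 OpenPose (COCO-18) joints,
# following the ST-GCN "openpose" graph rooted at the neck (joint 1).
PARENTS = [1, 1, 1, 2, 3, 1, 5, 6, 2, 8, 9, 5, 11, 12, 0, 0, 14, 15]


def joint_stream(x):
    """Joint stream: the raw joint data, shape (C, T, V, M)."""
    return x.copy()


def bone_stream(x):
    """Bone stream: vector from each joint's parent to the joint.

    Applied to every channel as a simplification. The root (neck) is its
    own parent, so its bone vector is zero.
    """
    return x - x[:, :, PARENTS, :]


def motion_stream(x):
    """Motion stream: frame-to-frame difference x[t+1] - x[t].

    The last frame has no successor and is left as zeros, so the
    temporal length T is unchanged.
    """
    motion = np.zeros_like(x)
    motion[:, :-1] = x[:, 1:] - x[:, :-1]
    return motion


def four_streams(x):
    """Return the (joint, bone, joint motion, bone motion) streams of x."""
    bone = bone_stream(x)
    return joint_stream(x), bone, motion_stream(x), motion_stream(bone)


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 5, 18, 2)).astype(np.float32)
joint, bone, joint_motion, bone_motion = four_streams(x)
print([s.shape for s in (joint, bone, joint_motion, bone_motion)])
```

All four streams keep the input shape, so one network architecture can be trained on each stream separately.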

[0105] S2. Process the different data streams acquired, and divide the training set and the ...
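This excerpt stops before the fusion step. The following sketch is therefore only an assumed illustration of what "multi-stream fusion" often means in practice: score-level (late) fusion. Each stream's network outputs class scores, and softmax turns them into probabilities. The probabilities are combined by a weighted sum, and argmax reduces the result to a predicted action category. The function `fuse_scores`, its equal default `weights`, and the example logits are hypothetical.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def fuse_scores(stream_logits, weights=None):
    """Late (score-level) fusion of per-stream classifier outputs.

    `stream_logits` is a list of arrays of shape (N, num_classes), one per
    stream. `weights` are hypothetical per-stream weights (equal by default).
    Returns the predicted class index for each of the N samples.
    """
    if weights is None:
        weights = [1.0] * len(stream_logits)
    fused = sum(w * softmax(np.asarray(logits, dtype=np.float64))
                for w, logits in zip(weights, stream_logits))
    return fused.argmax(axis=-1)


# Illustrative logits for one sample and three classes, one array per stream.
all_logits = [
    np.array([[2.0, 0.5, 0.1]]),  # joint stream
    np.array([[0.2, 1.8, 0.1]]),  # bone stream
    np.array([[1.5, 1.0, 0.0]]),  # joint motion stream
    np.array([[0.3, 1.2, 0.2]]),  # bone motion stream
]
pred = fuse_scores(all_logits)
print(pred)  # [1]
```

In this example, raising the joint stream's weight to 3 flips the prediction to class 0. This is one reason such per-stream weights are often treated as tunable.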
