Method and system for vision-based interaction in a virtual environment

A virtual environment and interaction technology, applied in the field of human-machine interfaces, can solve the problems of limited user experience in a virtual environment, lack of realism, and limited user experien...

Embodiment Construction

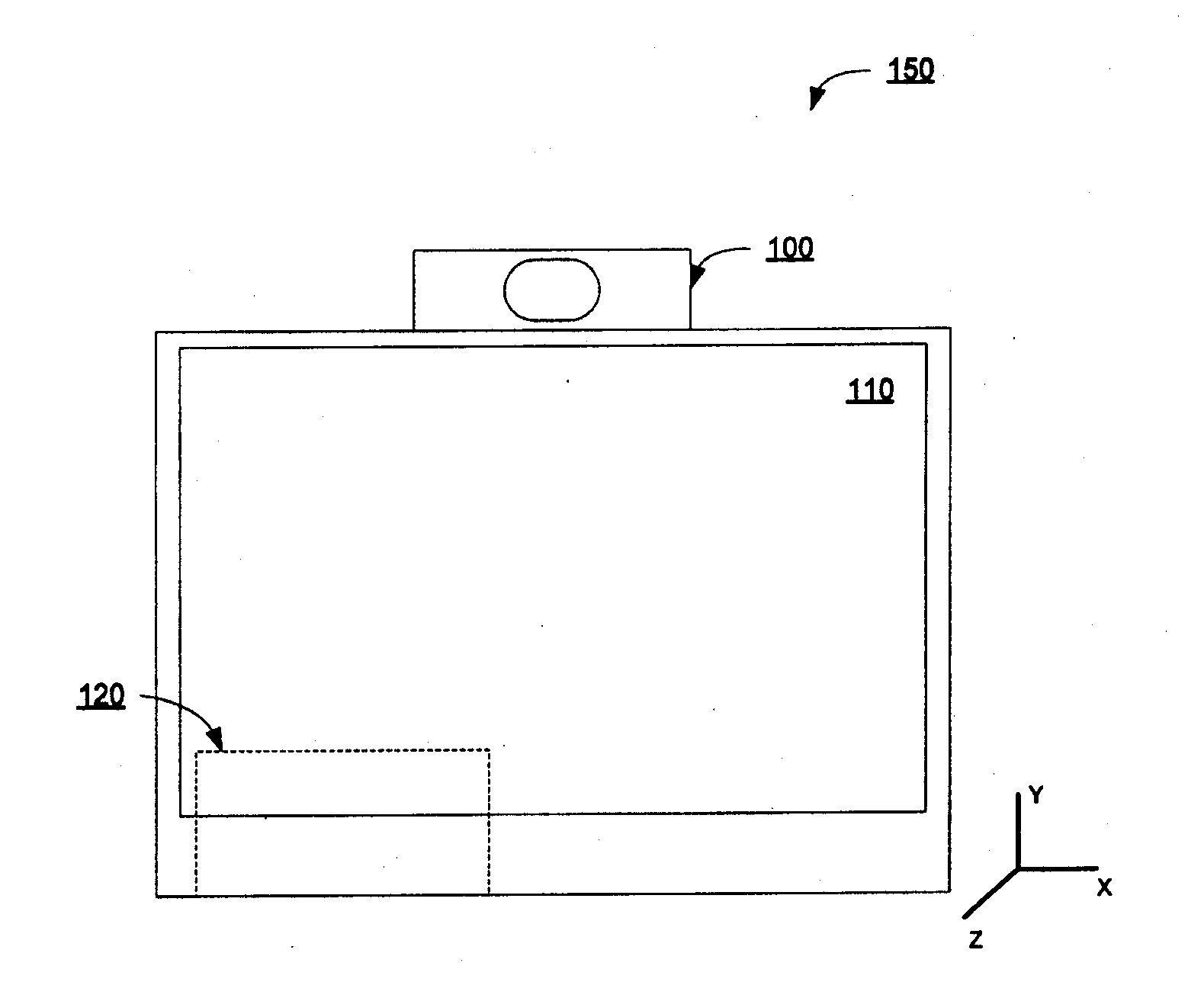

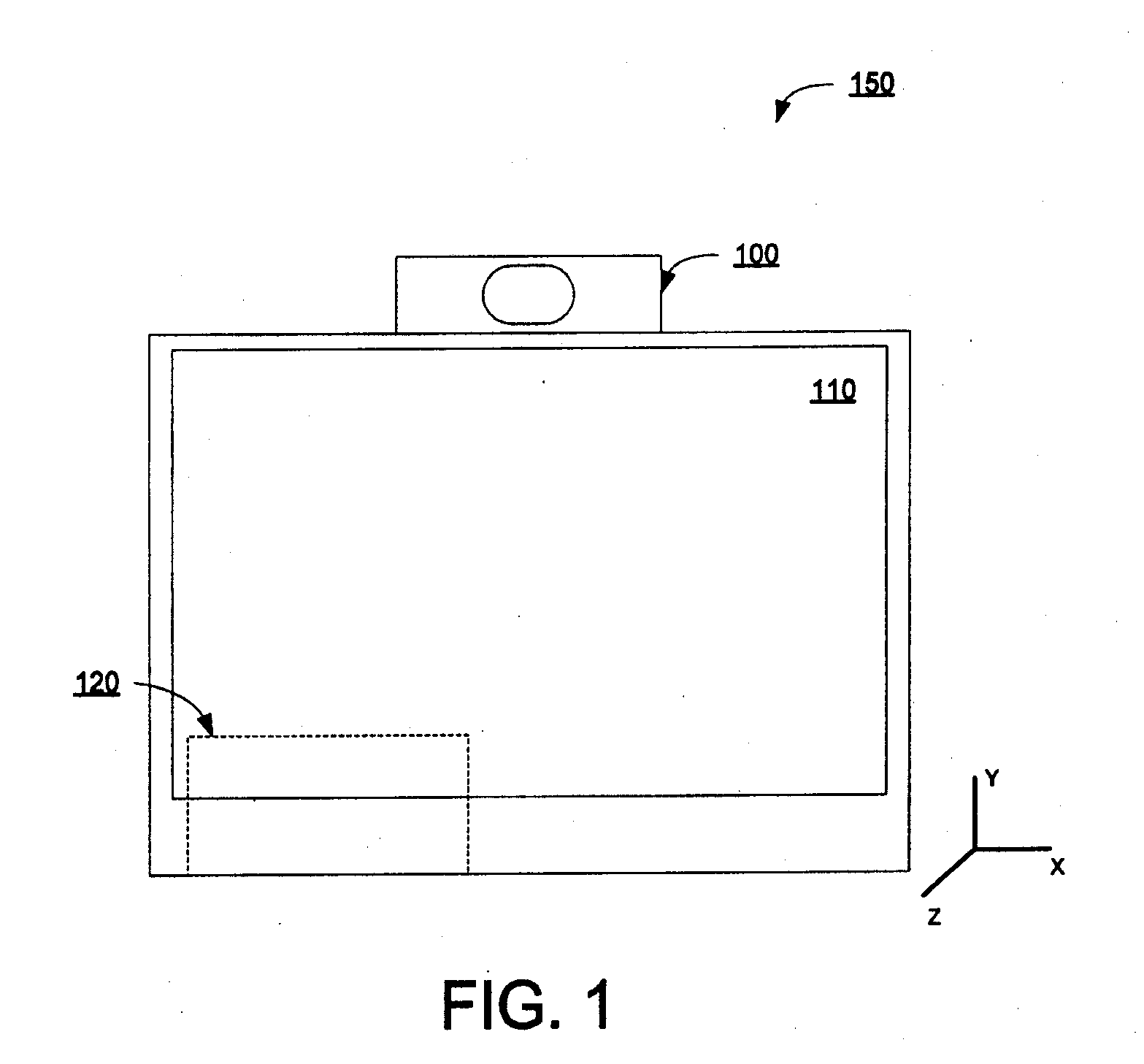

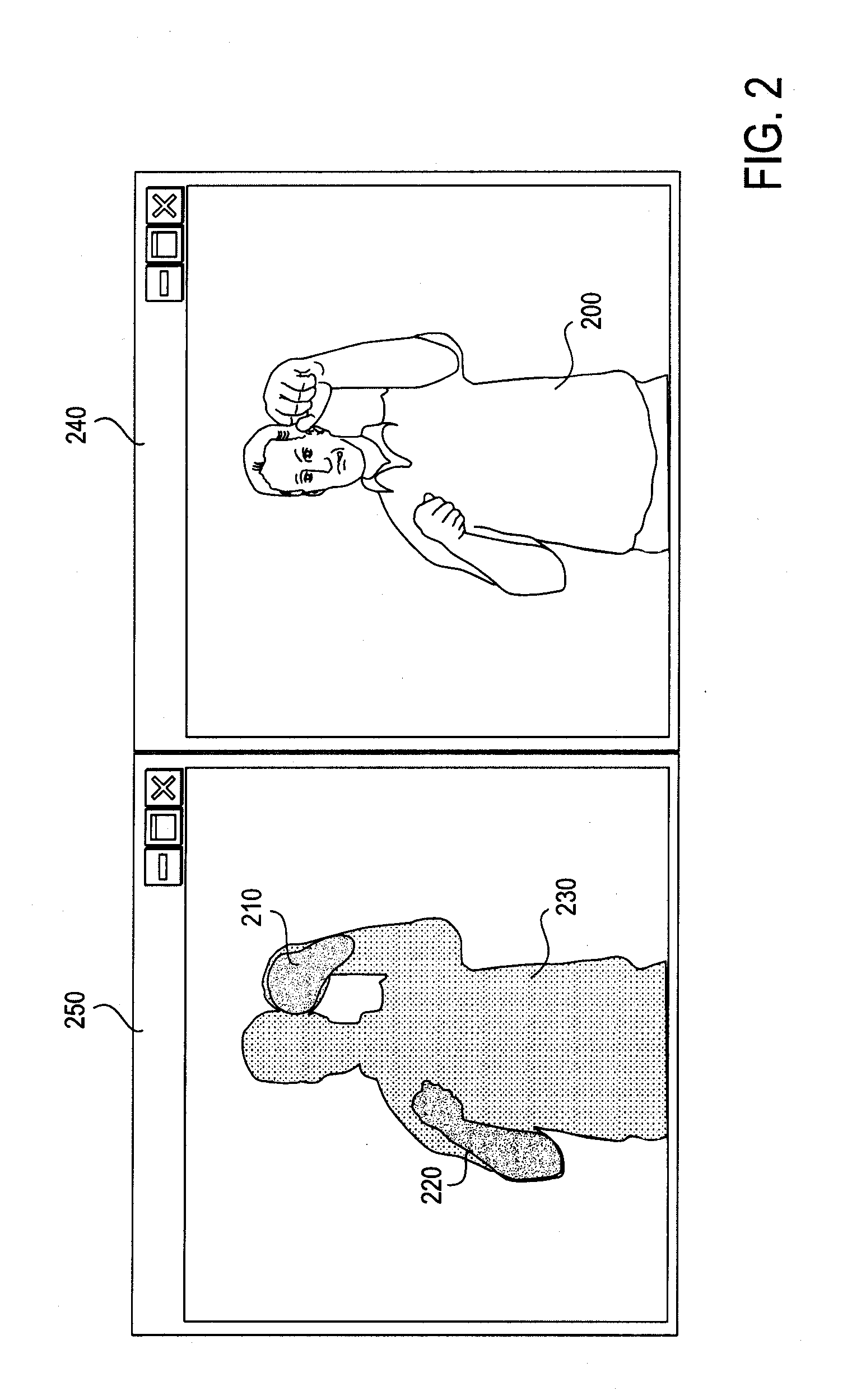

[0024] A method and system for vision-based interaction in a virtual environment is disclosed. According to one embodiment, a computer-implemented method comprises receiving data from a plurality of sensors to generate a meshed volumetric three-dimensional representation of a subject. A plurality of clusters is identified within the meshed volumetric three-dimensional representation that corresponds to motion features. The motion features include hands, feet, knees, elbows, head, and shoulders. The plurality of sensors is used to track motion of the subject and manipulate the motion features of the meshed volumetric three-dimensional representation.
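The pipeline in paragraph [0024] (merge sensor data into a volumetric representation, then identify clusters that correspond to motion features) can be illustrated with a minimal sketch. The text above does not say how the volume is built or how clusters are found, so everything here is an assumption made for illustration: the occupied-voxel grid, the seeded k-means clustering, and the names `voxelize`, `identify_features`, and `seeds` are hypothetical and are not taken from the patent. Meshing of the volume is omitted.

```python
from math import dist

Point = tuple[float, float, float]
Voxel = tuple[int, int, int]


def voxelize(sensor_points: list[list[Point]], voxel_size: float) -> set[Voxel]:
    """Merge the points reported by every sensor into one set of occupied voxels.

    Assumption: each sensor already reports points in a shared world frame.
    """
    voxels: set[Voxel] = set()
    for points in sensor_points:
        for x, y, z in points:
            voxels.add((int(x // voxel_size), int(y // voxel_size), int(z // voxel_size)))
    return voxels


def identify_features(
    voxels: set[Voxel],
    voxel_size: float,
    seeds: dict[str, Point],
    iterations: int = 10,
) -> dict[str, Point]:
    """Cluster voxel centers with seeded k-means and return one centroid per feature.

    Assumption: `seeds` holds a rough initial position for each named motion
    feature (for example "head" or "hand"); the source does not describe this step.
    """
    # Represent each occupied voxel by the coordinates of its center.
    centers = [tuple((i + 0.5) * voxel_size for i in v) for v in voxels]
    names = list(seeds)
    centroids = [seeds[n] for n in names]

    for _ in range(iterations):
        # Assignment step: attach every voxel center to its nearest centroid.
        groups: list[list[Point]] = [[] for _ in centroids]
        for p in centers:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            groups[nearest].append(p)
        # Update step: move each centroid to the mean of its group;
        # a centroid with an empty group stays where it was.
        centroids = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else centroids[i]
            for i, g in enumerate(groups)
        ]

    return dict(zip(names, centroids))


# Two hypothetical sensors, each seeing a different part of the subject.
sensor_a = [(0.0, 0.0, 1.7), (0.05, 0.0, 1.75)]
sensor_b = [(0.5, 0.0, 1.0), (0.55, 0.0, 1.05)]

voxels = voxelize([sensor_a, sensor_b], voxel_size=0.1)
features = identify_features(
    voxels,
    voxel_size=0.1,
    seeds={"head": (0.0, 0.0, 1.8), "hand": (0.6, 0.0, 1.0)},
)
```

Under the same assumptions, passing the returned centroids back in as `seeds` for the next frame gives a crude form of tracking, since each feature then starts its search from its last known position.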

[0025] Each of the features and teachings disclosed herein can be utilized separately or in conjunction with other features and teachings to provide a method and system for vision-based interaction in a virtual environment. Representative examples utilizing many of these additional features and teachings, both separately and in combination, ar...
