View-angle-independent action recognition method

An action recognition technology that is independent of the viewing perspective, applied in character and pattern recognition, instruments, computer components, and related fields. Its stated effect is a high recognition rate.

Detailed Description of Embodiments

[0018] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

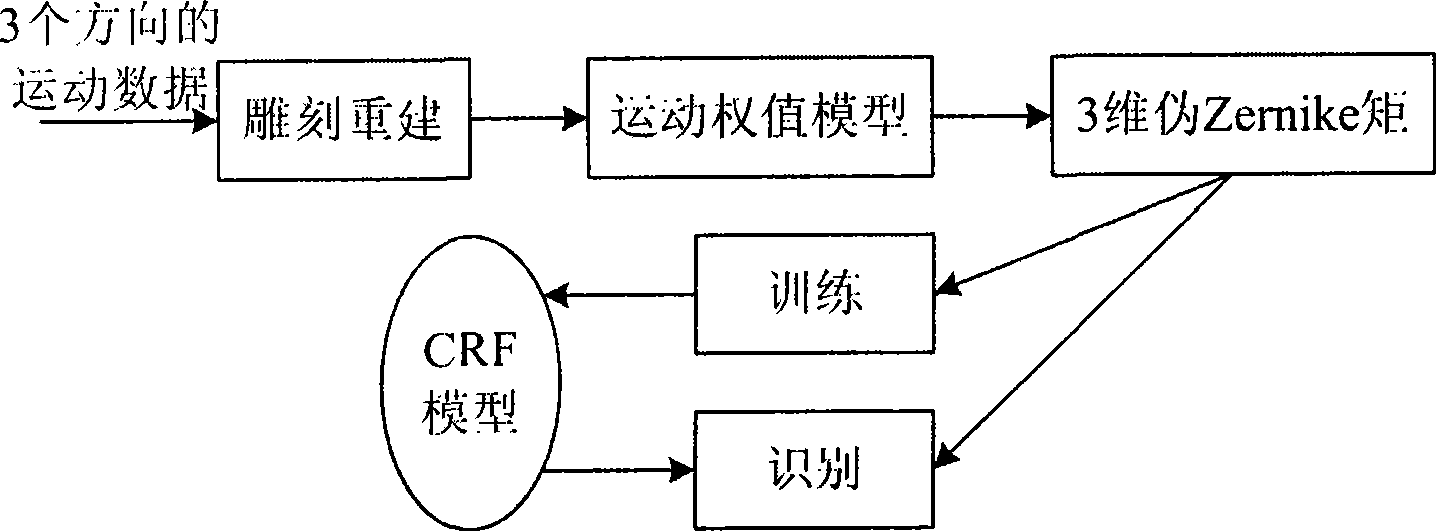

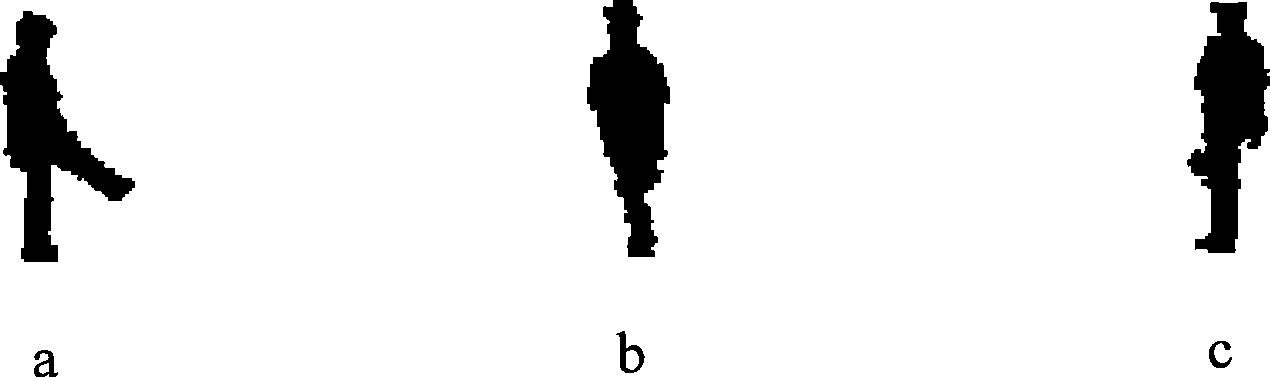

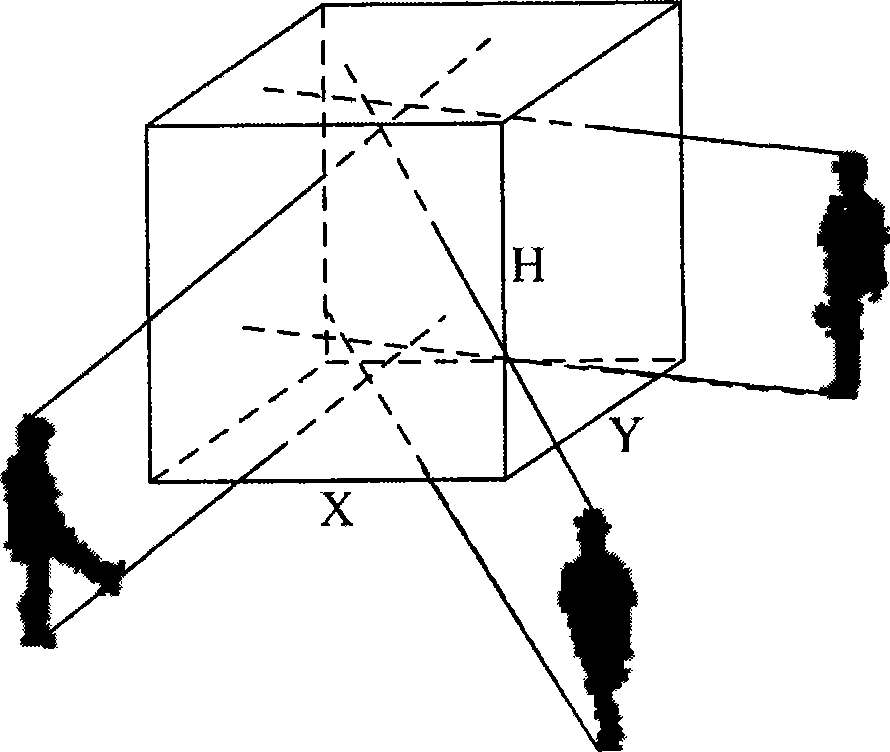

[0019] The specific steps of the action recognition method of the present invention are shown in Figure 1. Three cameras synchronously capture human motion data in the scene from the front, oblique, and side directions. A 3D human body model is then reconstructed with the 3D carving method. Next, focusing on the dynamic parts of the captured motion data, a motion weight model of the 3D body posture is extracted. 3D pseudo-Zernike moments are then used to describe view-independent human action features, and the action is modeled with a conditional random field (CRF) to perform action recognition.
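This excerpt does not detail the 3D carving procedure. The sketch below assumes that "3D carving" refers to standard silhouette-based voxel carving (a visual hull): a voxel is kept only if its projection falls inside the body silhouette in every camera view. The function name `carve_visual_hull`, the use of calibrated 3x4 projection matrices, and the grid parameters are hypothetical illustrations, not the patent's own implementation.

```python
import numpy as np


def carve_visual_hull(silhouettes, projections, grid_min, grid_max, resolution):
    """Generic shape-from-silhouette voxel carving (assumed, illustrative only).

    silhouettes: list of 2D boolean arrays (H, W), True where the body is.
    projections: list of 3x4 camera projection matrices, one per silhouette.
    grid_min, grid_max: (x, y, z) corners of the axis-aligned working volume.
    resolution: number of voxels along each axis.
    Returns a boolean occupancy grid of shape (resolution, resolution, resolution).
    """
    axes = [np.linspace(lo, hi, resolution) for lo, hi in zip(grid_min, grid_max)]
    xs, ys, zs = np.meshgrid(*axes, indexing="ij")
    # Homogeneous voxel centres, shape (4, N)
    pts = np.stack([xs.ravel(), ys.ravel(), zs.ravel(), np.ones(xs.size)])
    occupied = np.ones(xs.size, dtype=bool)

    for sil, P in zip(silhouettes, projections):
        uvw = P @ pts  # project every voxel centre into this camera
        w = uvw[2]
        in_front = w > 0  # ignore points behind the camera
        u = np.full(w.shape, -1, dtype=int)
        v = np.full(w.shape, -1, dtype=int)
        # Nearest-pixel lookup after perspective division
        u[in_front] = np.round(uvw[0, in_front] / w[in_front]).astype(int)
        v[in_front] = np.round(uvw[1, in_front] / w[in_front]).astype(int)
        height, width = sil.shape
        inside = in_front & (u >= 0) & (u < width) & (v >= 0) & (v < height)
        hit = np.zeros(xs.size, dtype=bool)
        hit[inside] = sil[v[inside], u[inside]]
        # A voxel survives only if every camera sees it inside the silhouette
        occupied &= hit

    return occupied.reshape(xs.shape)
```

For example, with a front view (u = x, v = y) and a side view (u = z, v = y), each showing a 4x4 square silhouette, the carved volume is a 4x4x4 block of 64 voxels. In the patent's setup the third (oblique) camera would add another constraint that trims the hull further; camera calibration is assumed to be known.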

[0020] The method of the present invention will be described below by taking the recognition of the "kicking" action as an example.

[0021] Step 1: Use 3 cameras to collect action se...
