An automatic discovery method for maxq task graph structure in complex systems

A complex system and automatic discovery technology, applied in instruments, electrical digital data processing, computers, etc., can solve the problems that subtasks cannot be further divided, and MAXQ has weak automatic layering ability, etc.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0044] The present invention will be described in detail below.

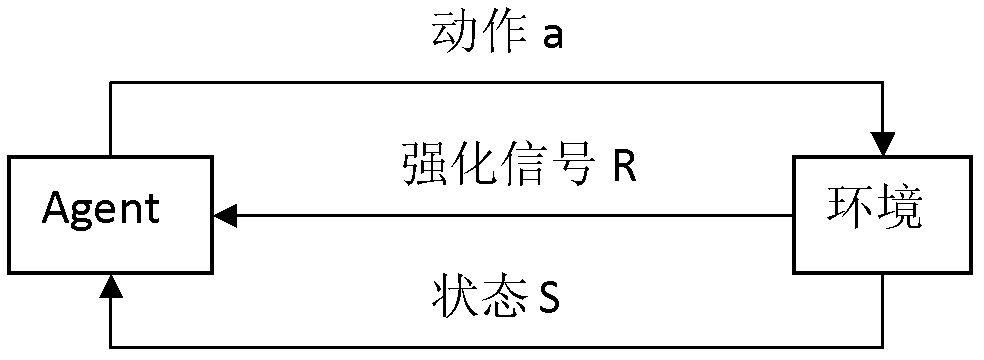

[0045] Assume that the interaction between the Agent and the environment occurs at a series of discrete moments t=0, 1, 2, . . . At each time t, the agent obtains the state s by observing the environment t ∈ S. Agent chooses exploration action a according to strategy π t ∈A and execute. At the next moment t+1, the Agent receives the reinforcement signal (reward value) r given by the environment t+1 ∈R, and reach a new state s t+1 ∈ S. According to the reinforcement signal r t+1 , Agent improves strategy π. The ultimate goal of reinforcement learning is to find an optimal strategy The state value obtained by the Agent (that is, the total reward obtained by the state) V π (S) maximum (or minimum), 0≤γ≤1, where γ is the remuneration discount factor. Due to the randomness of the state transition of the environment, under the action of policy π, the state s t value of: where P(s t+1 |s t ,a t ) is ...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com