Human-computer interaction device and method used for target tracking

A human-computer interaction and target tracking technology, applied in the fields of color TV parts, TV system parts, image data processing, etc. It addresses the problems of low precision, bulky size and a high loss rate of tracked targets, and achieves high precision and a low tracking loss rate.


Examples

Embodiment 1

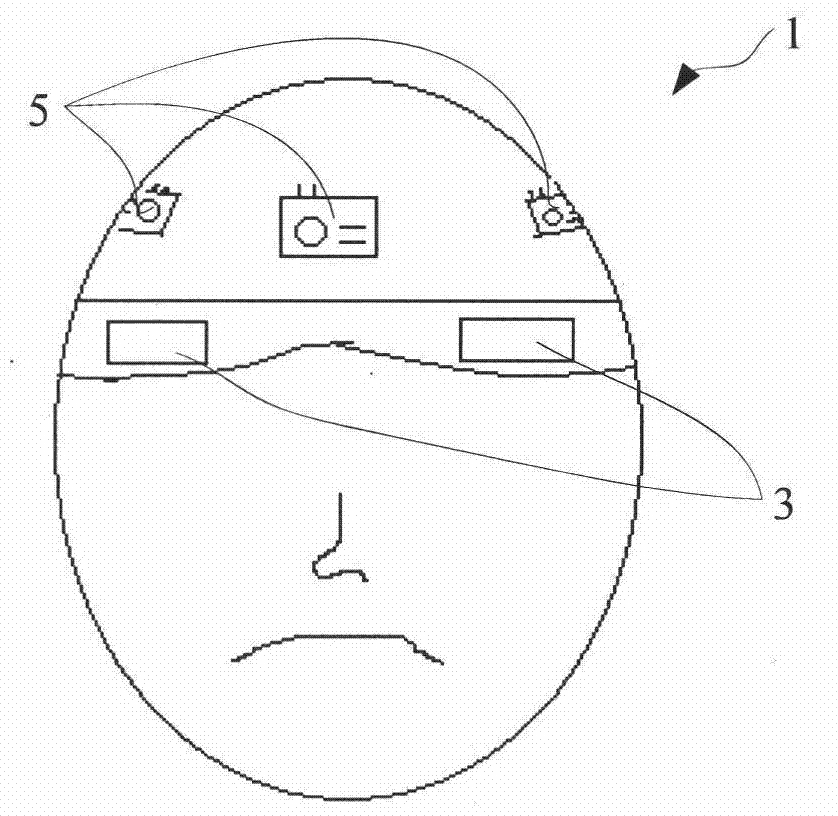

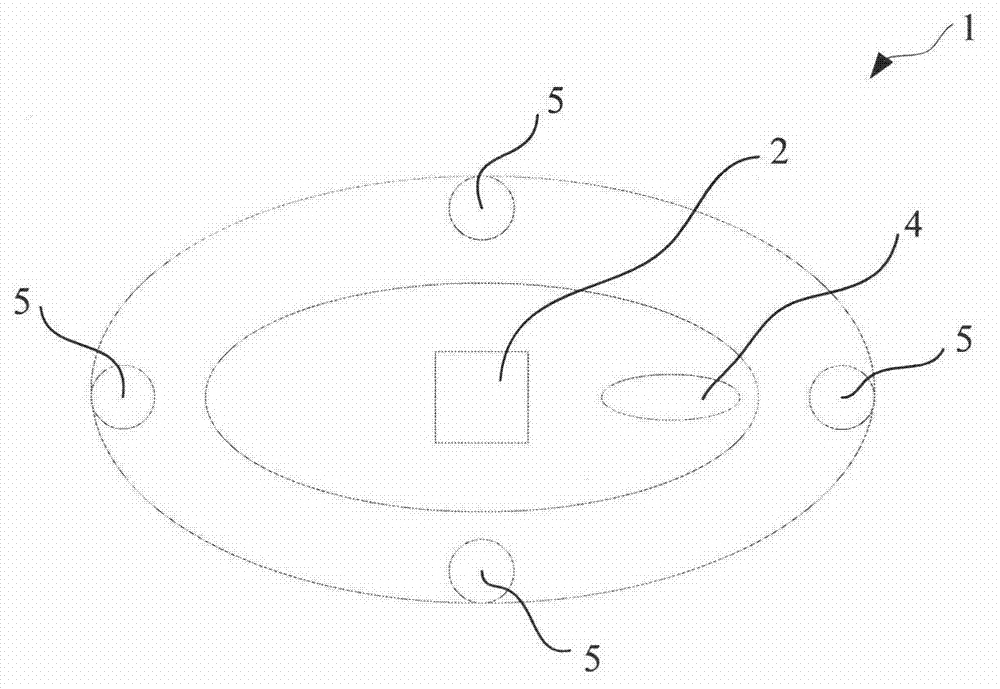

[0047] Referring to Figures 1 and 2, Figure 1 is a schematic front view and Figure 2 is a schematic top view of the human-computer interaction device for target tracking according to Embodiment 1 of the present invention. This embodiment provides a human-computer interaction device for target tracking that includes a helmet device 1, on which a first information processing unit 2, a display device 3, a gaze tracking system 4 and a plurality of cameras 5 are arranged. The cameras 5 are installed around the helmet device 1 and connected to the first information processing unit 2; they capture video of the scene around the helmet device 1 in real time and transmit the captured scene video to the first information processing unit 2. There are at least four cameras 5, installed respectively on the front, back, left and right sides of the helmet device 1, in t...
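For the stitched view to cover a full circle, neighbouring cameras must overlap. The paragraph fixes the camera count (at least four, front/back/left/right) but not the lens field of view, so the following is a minimal sketch assuming evenly spaced cameras on a horizontal ring with identical horizontal field of view (`hfov_deg`, a hypothetical parameter) and ignoring parallax between camera centres.

```python
def adjacent_overlap_deg(num_cameras: int, hfov_deg: float) -> float:
    """Angular overlap between neighbouring cameras on a horizontal ring.

    Assumes (hypothetically) evenly spaced cameras with identical
    horizontal field of view; parallax is ignored. A negative result
    means there is a blind gap between adjacent views."""
    if num_cameras < 1:
        raise ValueError("need at least one camera")
    spacing_deg = 360.0 / num_cameras  # angle between neighbouring optical axes
    return hfov_deg - spacing_deg


# Four cameras 90 degrees apart with a hypothetical 110-degree lens:
print(adjacent_overlap_deg(4, 110.0))  # 20.0 degrees of overlap per seam
```

With four cameras the spacing is 90 degrees, so any lens narrower than 90 degrees leaves gaps; in practice some positive overlap is also needed so the stitching step has shared content to blend.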

Embodiment 2

[0053] This embodiment differs from Embodiment 1 in that the gaze tracking system 4 does not have a second information processing unit 43; instead, the functions performed by the second information processing unit 43 in Embodiment 1 are performed in this embodiment by the first information processing unit 2. In other words, the first information processing unit 2 and the second information processing unit 43 are integrated into a single unit, which reduces the cost of the device. Parts that are the same as or similar to those of Embodiment 1 are not described again here.

[0054] As shown in Figure 4, which is a structural block diagram of the human-computer interaction device according to Embodiment 2 of the present invention, the gaze tracking system 4 includes an infrared light source 41 and a camera 42. The infrared light source 41 is switched on and off alternately. The camera 42 is connected to the first information processing unit 2 and takes video frames of altern...
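The paragraph is cut off before it says how the alternating frames are used. One common technique with a strobed infrared source, shown here only as an assumed illustration, is to subtract an IR-off frame from the following IR-on frame: ambient light appears in both and largely cancels, while features lit by the infrared source (such as a corneal reflection) remain bright. The sketch assumes grayscale `uint8` NumPy frames arriving in on/off order starting with an IR-on frame; the function names and the `threshold` value are hypothetical.

```python
import numpy as np


def pair_frames(frames):
    """Group an alternating stream [on, off, on, off, ...] into (on, off) pairs.

    Assumes (hypothetically) that the stream starts with an IR-on frame;
    a trailing unpaired frame is dropped."""
    return list(zip(frames[0::2], frames[1::2]))


def ir_difference(lit: np.ndarray, unlit: np.ndarray) -> np.ndarray:
    """Subtract an IR-off frame from an IR-on frame (both grayscale uint8).

    Widen to int16 first so the subtraction cannot wrap around, then clip
    negative values (pixels darker when lit) to zero."""
    diff = lit.astype(np.int16) - unlit.astype(np.int16)
    return np.clip(diff, 0, 255).astype(np.uint8)


def bright_centroid(diff: np.ndarray, threshold: int = 50):
    """Return the (row, col) centroid of pixels above `threshold`, or None."""
    rows, cols = np.nonzero(diff > threshold)
    if rows.size == 0:
        return None
    return float(rows.mean()), float(cols.mean())


# Synthetic example: uniform ambient light plus a small IR-lit spot.
ambient = np.full((8, 8), 40, dtype=np.uint8)
lit = ambient.copy()
lit[2:4, 5:7] = 200
on, off = pair_frames([lit, ambient])[0]
print(bright_centroid(ir_difference(on, off)))  # (2.5, 5.5)
```

A real gaze tracker would go further (pupil segmentation, glint/pupil vector, calibration to screen coordinates); this only shows why alternating the light source helps isolate IR-lit features.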

Embodiment 3

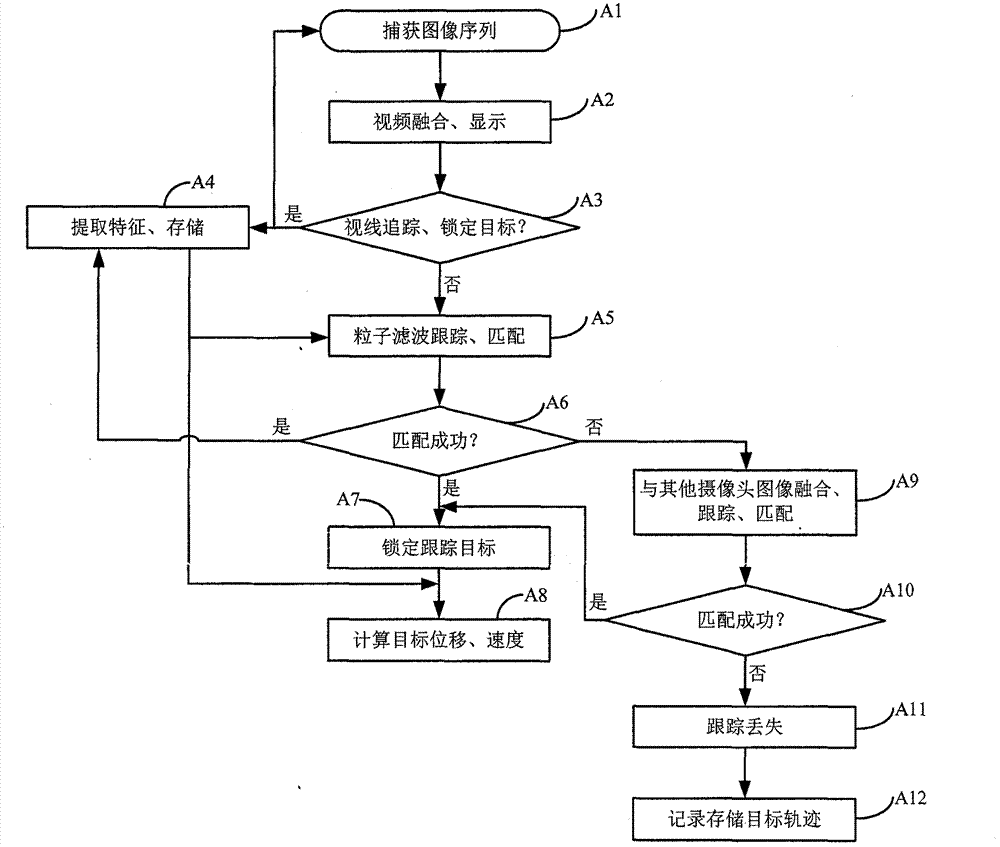

[0057] Figure 3 is a flow chart of the human-computer interaction method for target tracking according to Embodiment 3 of the present invention.

[0058] This method can be implemented on the human-computer interaction device for target tracking in Embodiment 1 or 2. The method comprises the steps of:

[0059] Step S1: Capture video of the scene around the helmet device in real time.

[0060] In this step, video of the scene around the helmet device is captured in real time by multiple cameras, for example four cameras.

[0061] Step S2: Process the scene video.

[0062] In this step, the video image sequences captured by the cameras are fused and stitched to obtain a 360-degree panoramic video of the scene.
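The source does not say how fusion and stitching are carried out. A full stitcher normally matches features, warps each view onto a common cylindrical surface and then blends the seams. The sketch below covers only that last blending stage, under loudly stated assumptions: the frames are already warped and aligned, all have the same shape, and each shares a fixed, known number of columns (`overlap`, hypothetical) with its right-hand neighbour, with the last camera wrapping around to the first. Seams are linearly feather-blended.

```python
import numpy as np


def stitch_ring(frames, overlap: int) -> np.ndarray:
    """Stitch pre-aligned frames from a camera ring into a 360-degree strip.

    Assumes every frame has shape (H, W) or (H, W, C), has already been
    warped onto a common cylindrical projection, and shares exactly
    `overlap` columns with its right-hand neighbour; the last frame wraps
    around to the first. Output width is len(frames) * (W - overlap)."""
    n = len(frames)
    w = frames[0].shape[1]
    if not 0 < overlap <= w // 2:
        raise ValueError("overlap must be in (0, W/2]")
    # Weight of the current frame ramps from near 0 to near 1 across the seam;
    # extra trailing axes let it broadcast over colour channels.
    ramp = (np.arange(overlap) + 0.5) / overlap
    ramp = ramp.reshape((1, overlap) + (1,) * (frames[0].ndim - 2))
    segments = []
    for i in range(n):
        prev = frames[(i - 1) % n].astype(np.float64)
        cur = frames[i].astype(np.float64)
        # Feather-blend the previous frame's right edge into this frame's left edge.
        seam = (1.0 - ramp) * prev[:, w - overlap:] + ramp * cur[:, :overlap]
        segments.append(seam)
        # Columns seen only by this camera.
        segments.append(cur[:, overlap:w - overlap])
    return np.concatenate(segments, axis=1)


# Four hypothetical 4x10 grayscale frames with 2 overlapping columns per seam.
frames = [np.full((4, 10), 5, dtype=np.uint8) for _ in range(4)]
print(stitch_ring(frames, 2).shape)  # (4, 32)
```

Feathering is used here only because it is the simplest blend that hides exposure differences at seams; multi-band blending or seam-finding would be typical choices in a production stitcher.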

[0063] Step S3: Display the processed scene video on the display screen.

[0064] In this step, the processed 360-degree panoramic video is displayed on the glasses-type micro-display.
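If the whole 360-degree panorama is shown across the width of the micro-display, a horizontal position on the display corresponds to a direction around the helmet, which is what lets a point the user looks at be related to a real-world bearing. The source does not describe the display layout, so the following is a minimal sketch assuming a linear (cylindrical) panorama scaled to the full display width, with azimuth increasing to the right and a hypothetical calibration value `azimuth_at_left_deg` for the left edge.

```python
def display_x_to_azimuth(x_px: float, display_width_px: int,
                         azimuth_at_left_deg: float = 0.0) -> float:
    """Map a horizontal display coordinate to an azimuth in [0, 360).

    Assumes the full 360-degree panorama spans the display width linearly,
    with the left edge at `azimuth_at_left_deg` (hypothetical calibration)
    and azimuth increasing to the right."""
    if display_width_px <= 0:
        raise ValueError("display width must be positive")
    fraction = x_px / display_width_px
    return (azimuth_at_left_deg + 360.0 * fraction) % 360.0


print(display_x_to_azimuth(640, 1280))  # 180.0: display centre is directly behind the left-edge heading
```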

[0065] Step S4: By tracking the user's ...
