Infrared image and visible-light image fusion method based on saliency region segmentation

A region segmentation and image fusion technology in the field of image fusion. It addresses the problem that region segmentation gives indistinct results, and it makes the fusion more targeted, improves quality, and increases information content.

Detailed Description of Embodiments

[0027] The present invention is described in detail below with reference to embodiments and the accompanying drawings.

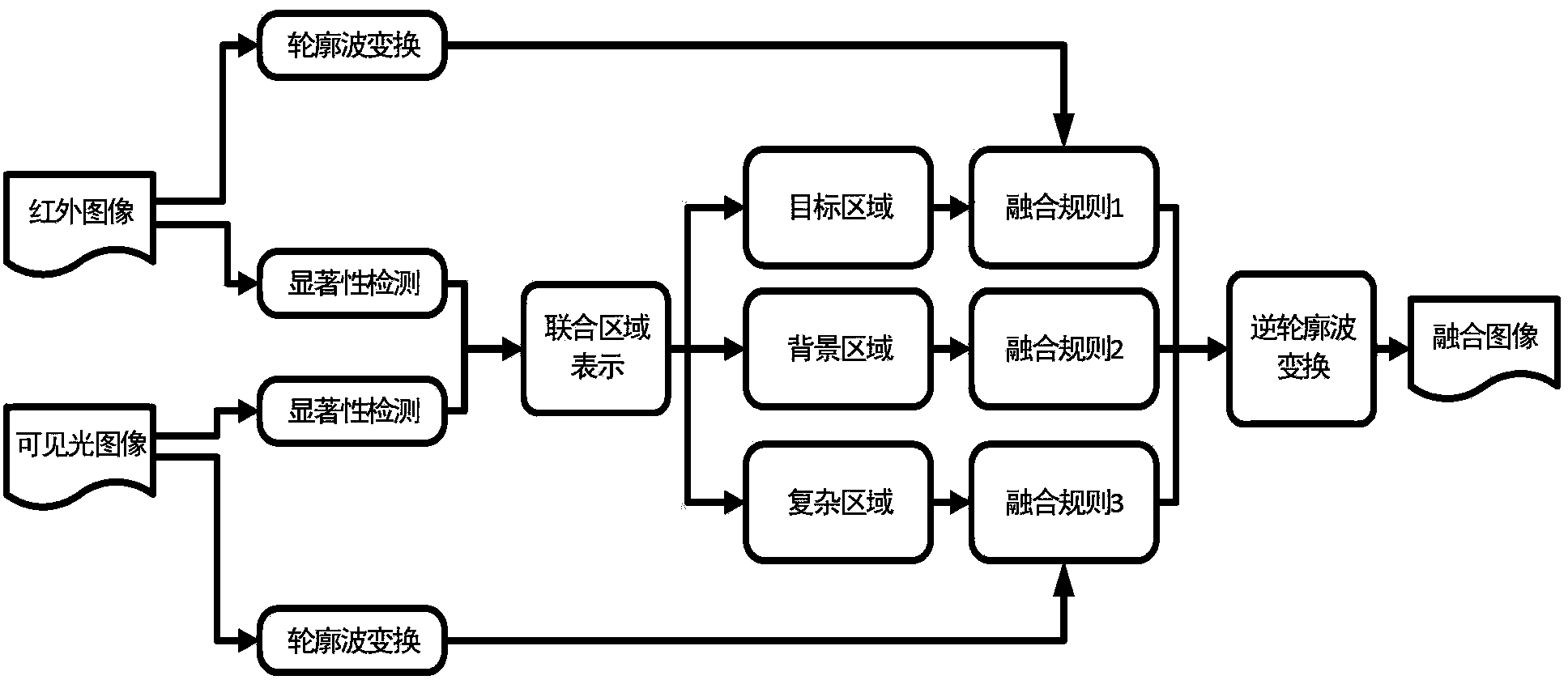

[0028] Figure 1 is a flow chart of the infrared and visible-light image fusion method based on salient region segmentation in this embodiment. The specific implementation steps are as follows:

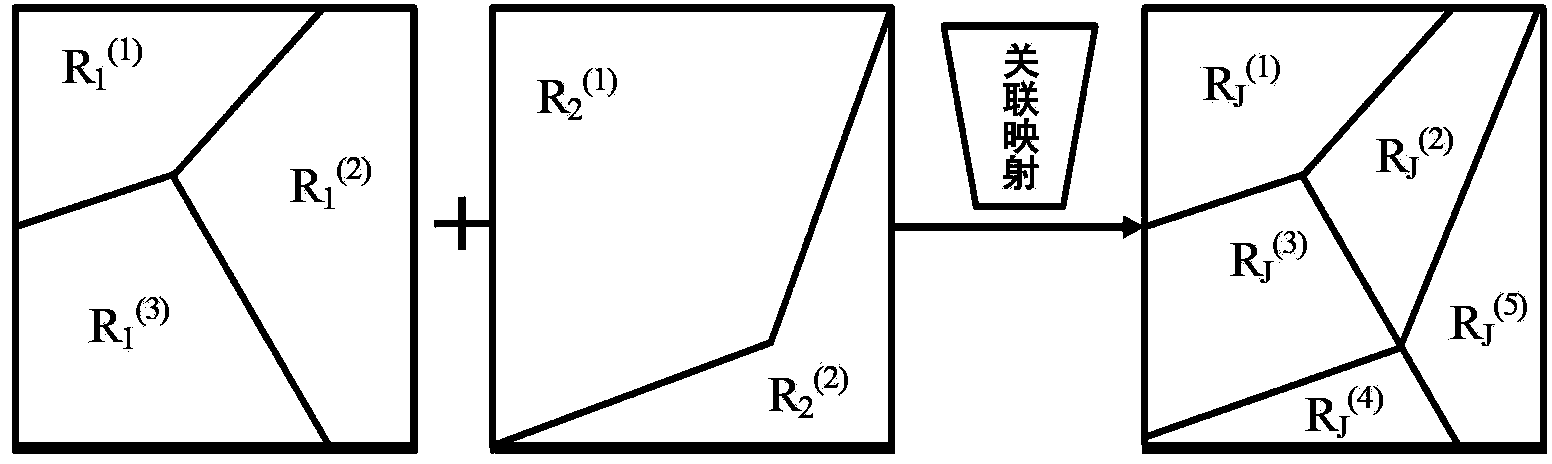

[0029] 1. Segment the infrared and visible-light images into regions.

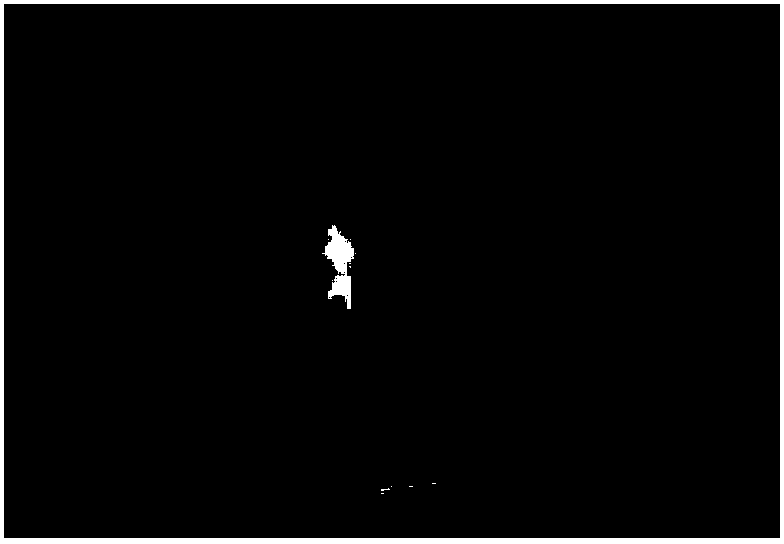

[0030] Here, a saliency-detection-based method is used for region division. A salient region in an image usually differs markedly in color or brightness from its surroundings, that is, it has high contrast with respect to them. Therefore, the salient regions of the image can be determined by calculating the contrast between each region and its surrounding area.
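The center-surround idea in [0030] can be illustrated with a minimal Python sketch. It is not the patent's procedure: the block grid, the `margin`-wide surrounding ring, the use of mean gray levels, and the rule that a block is salient when its contrast exceeds the mean block contrast are all assumptions, and the names `region_contrast` and `salient_blocks` are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of gray levels


def region_contrast(img: Image, top: int, left: int, h: int, w: int, margin: int) -> float:
    """Hypothetical center-surround contrast: |mean(region) - mean(surrounding ring)|.

    The ring is the set of pixels within `margin` of the h x w region,
    clipped at the image border. This is an assumed measure, not the patent's formula.
    """
    rows, cols = len(img), len(img[0])
    inner: List[float] = []
    outer: List[float] = []
    for y in range(max(0, top - margin), min(rows, top + h + margin)):
        for x in range(max(0, left - margin), min(cols, left + w + margin)):
            if top <= y < top + h and left <= x < left + w:
                inner.append(img[y][x])
            else:
                outer.append(img[y][x])
    if not outer:  # no surrounding pixels to compare against
        return 0.0
    return abs(sum(inner) / len(inner) - sum(outer) / len(outer))


def salient_blocks(img: Image, block: int, margin: int) -> List[List[bool]]:
    """Split the image into block x block tiles and flag tiles whose contrast
    exceeds the mean tile contrast (assumed thresholding rule)."""
    rows, cols = len(img), len(img[0])
    scores: List[List[float]] = []
    for top in range(0, rows, block):
        row_scores = []
        for left in range(0, cols, block):
            h = min(block, rows - top)   # last tiles may be smaller
            w = min(block, cols - left)
            row_scores.append(region_contrast(img, top, left, h, w, margin))
        scores.append(row_scores)
    flat = [s for r in scores for s in r]
    threshold = sum(flat) / len(flat)
    return [[s > threshold for s in r] for r in scores]
```

For a 6×6 black image with a bright 2×2 square in the middle, `salient_blocks(img, block=2, margin=1)` flags only the central tile: its whole surround is dark, while the other tiles see only part of the bright square in their rings, so their contrast stays below the mean.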

[0031] The contrast of each region in the image can be obtained by calculating the difference between the gray level of each pixel and the gray levels of the pixels in its surrounding area, as in formula (1):

[0032] c ( i ...
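Formula (1) is truncated in this copy, so its exact form (neighborhood size, distance measure, normalization) is unknown. The sketch below assumes one plausible reading of [0031]: the contrast c(i) of pixel i is the sum of absolute gray-level differences between i and every pixel in a square window around it. The function name `pixel_contrast` and the `radius` parameter are hypothetical.

```python
from typing import List


def pixel_contrast(img: List[List[float]], radius: int = 1) -> List[List[float]]:
    """Assumed form of formula (1): c(i) = sum over j in N(i) of |g(i) - g(j)|,
    where N(i) is a (2*radius+1) x (2*radius+1) window clipped at the border."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            g = img[y][x]
            total = 0.0
            for yy in range(max(0, y - radius), min(rows, y + radius + 1)):
                for xx in range(max(0, x - radius), min(cols, x + radius + 1)):
                    total += abs(g - img[yy][xx])  # centre pixel adds 0
            out[y][x] = total
    return out
```

On a 3×3 black image with a single pixel of gray level 10 at the center, the center receives a contrast of 80 (eight neighbors, each differing by 10), while every other pixel receives 10, so the isolated bright pixel stands out as the most salient point.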
