Human face mapping method and device

A face mapping method and device, applied in the field of image processing, that addresses problems such as users being unable to edit a 3D character avatar model to a large extent, and achieves the effect of improving user satisfaction and adding application functions.

Examples

Embodiment 1

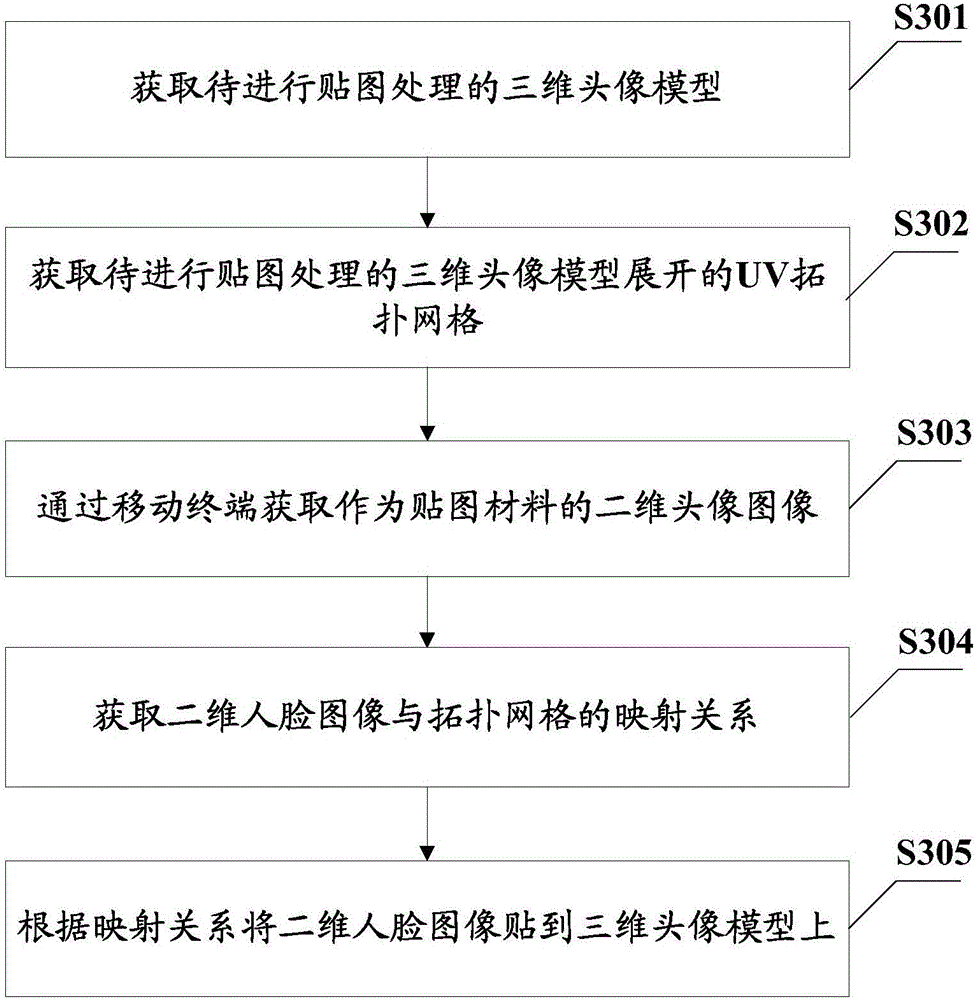

[0035] In order to enable the user to edit the 3D character avatar model in the terminal application to the greatest extent, this embodiment provides a face mapping method, as shown in Figure 3, including:

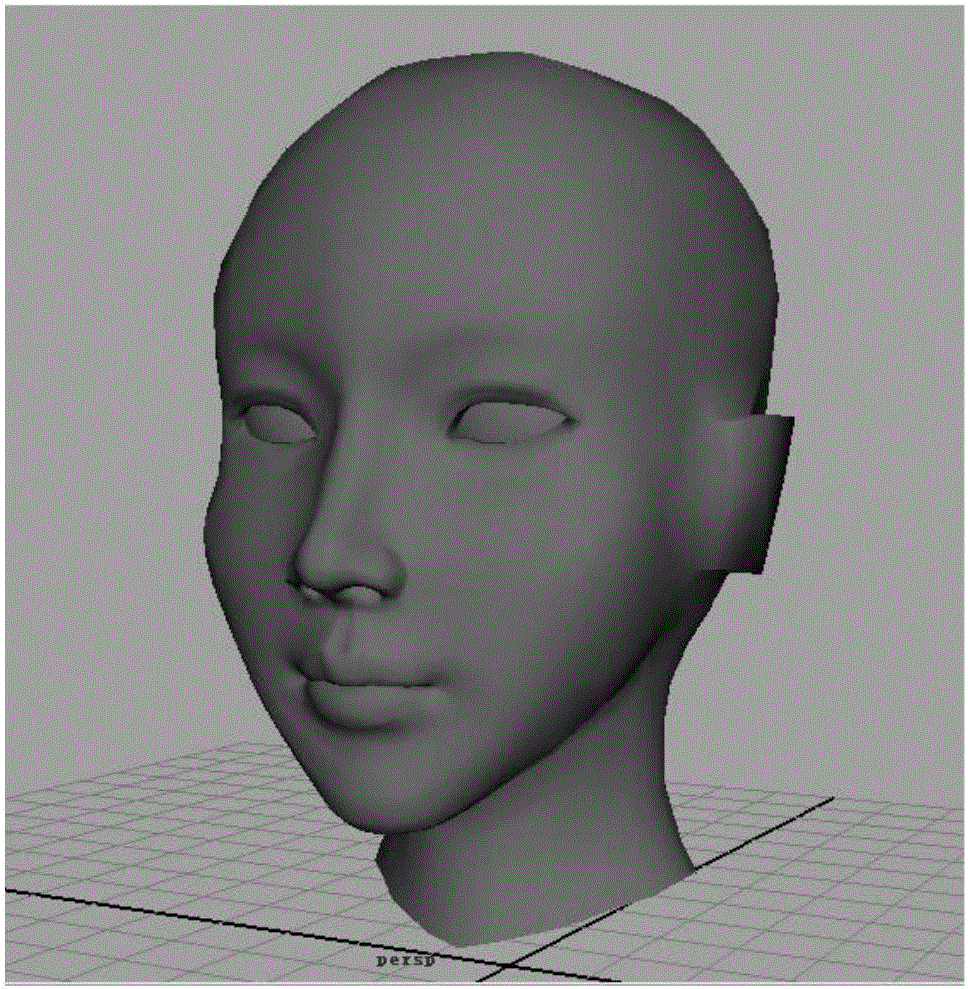

[0036] S301: Acquire a 3D avatar model to be textured.

[0037] It should be noted that acquiring the 3D avatar model to be textured may include: receiving a model selection instruction, and selecting the corresponding 3D avatar model from a preset model library according to that instruction, wherein the model library contains a plurality of different types of 3D avatar models. In general, 3D avatar models differ across geographical regions, age groups and genders, so the models in the library can be classified along those same dimensions. For example, the model library can include 3D avatar models for China, India, and the United States, as well as 3D avatar models of ...
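The selection step in S301 can be pictured as a keyed lookup. The following is a minimal sketch, assuming the model selection instruction carries a region, an age group and a gender; the `ModelKey` type, the `select_model` function, the age-group labels and the file paths are all hypothetical and are not taken from the patent text.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ModelKey:
    """Hypothetical content of a model selection instruction."""
    region: str
    age_group: str
    gender: str


# Hypothetical preset model library: classification key -> model file path.
# The regions come from the example in the text; everything else is assumed.
MODEL_LIBRARY: Dict[ModelKey, str] = {
    ModelKey("China", "adult", "female"): "models/china_adult_female.obj",
    ModelKey("India", "adult", "male"): "models/india_adult_male.obj",
    ModelKey("United States", "child", "female"): "models/us_child_female.obj",
}


def select_model(instruction: ModelKey,
                 library: Dict[ModelKey, str] = MODEL_LIBRARY) -> str:
    """Return the model that matches the instruction, or raise LookupError."""
    try:
        return library[instruction]
    except KeyError:
        raise LookupError(f"no 3D avatar model for {instruction}") from None
```

A call such as `select_model(ModelKey("India", "adult", "male"))` returns the path stored for that key; an unknown combination raises `LookupError` rather than silently falling back to a default model.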

Embodiment 2

[0056] For a better understanding of the present invention, this embodiment provides a more specific face mapping method, as shown in Figure 7, including:

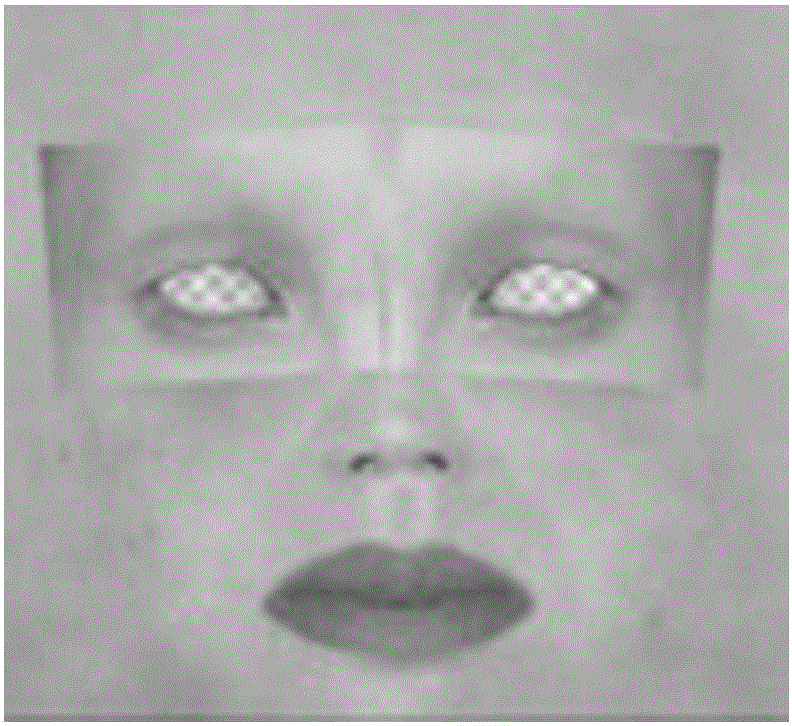

[0057] S701: Select a 3D avatar model to be textured from a preset model library according to a model selection instruction, and unfold the UV topology grid of the selected 3D avatar model.

[0058] The model selection instruction may be issued by the user through the terminal, and the model library includes a plurality of different types of 3D avatar models. Generally, 3D avatar models differ across geographical regions, age groups and genders, so the models in the library can be classified along those same dimensions.
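The text does not say how the UV topology grid in S701 is unfolded. As an illustration only, the sketch below uses a cylindrical projection, a common simple way to flatten a head-like mesh, as an assumed stand-in; the function name `cylindrical_unwrap` and the axis convention (y up, +z facing forward) are hypothetical.

```python
import math
from typing import List, Tuple

Vertex = Tuple[float, float, float]  # (x, y, z), y is up, +z faces forward
UV = Tuple[float, float]             # (u, v), each in [0, 1]


def cylindrical_unwrap(vertices: List[Vertex]) -> List[UV]:
    """Assign each vertex a UV coordinate by cylindrical projection.

    u comes from the angle around the vertical axis (front of the head
    lands at u = 0.5); v comes from the normalized height.
    """
    ys = [y for _, y, _ in vertices]
    y_min, y_max = min(ys), max(ys)
    height = (y_max - y_min) or 1.0  # avoid division by zero for flat input

    uvs: List[UV] = []
    for x, y, z in vertices:
        angle = math.atan2(x, z)             # 0 at +z, range (-pi, pi]
        u = angle / (2 * math.pi) + 0.5      # shift into [0, 1]
        v = (y - y_min) / height
        uvs.append((u, v))
    return uvs
```

With this convention a vertex on the front of the head at the lowest height maps to `(0.5, 0.0)`, so the face region ends up in the middle of the unfolded grid, which is where a frontal face photo would naturally be placed.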

[0059] S702: Obtain a two-dimensional face image as a texture material through an image acquisition module of the mobile terminal.

[0060] The image acquisition module in S702 of this embodiment can collect ...

Embodiment 3

[0072] In order to optimize the 3D character avatar model in the mobile terminal application so that the user can edit it to a large extent, this embodiment provides a face mapping device. As shown in Figure 9, the device is applied to a mobile terminal and includes: a model selection module 91, a grid acquisition module 92, a texture material acquisition module 93, a processing module 94 and an execution module 95. The model selection module 91 is used to obtain the 3D avatar model to be textured; the grid acquisition module 92 is used to obtain the UV topology grid unfolded from the 3D avatar model; the texture material acquisition module 93 is used to obtain the two-dimensional face image; the processing module 94 is used to obtain the mapping relationship between the two-dimensional face image and the UV topology grid; the execution module 95 is used to paste the two-dimensional face image onto the 3D avatar model according to ...
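The division of labor among modules 91 to 95 can be sketched as a small pipeline. This is a rough illustration under stated assumptions, not the device described in the patent: the class `FaceMappingDevice`, its method names, and the list-of-rows image type are hypothetical, and because the text is truncated before it explains how the mapping relationship is computed, the sketch assumes the simplest possible one (the image is stretched over the whole UV square, with image rows counted from the top and v counted from the bottom).

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

UV = Tuple[float, float]      # (u, v) in [0, 1]
Pixel = Tuple[int, int]       # (row, col)
Image = List[List[int]]       # rows of pixel values; stand-in for a real image


@dataclass
class FaceMappingDevice:
    select_model: Callable[[], str]            # module 91: model to be textured
    get_uv_grid: Callable[[str], List[UV]]     # module 92: unfolded UV grid
    get_face_image: Callable[[], Image]        # module 93: 2D face image

    def compute_mapping(self, image: Image, uv_grid: List[UV]) -> List[Pixel]:
        """Module 94 (assumed mapping): UV point -> nearest image pixel."""
        rows, cols = len(image), len(image[0])
        mapping: List[Pixel] = []
        for u, v in uv_grid:
            col = round(u * (cols - 1))
            row = round((1.0 - v) * (rows - 1))  # flip: v = 0 is the bottom row
            mapping.append((row, col))
        return mapping

    def apply_texture(self, image: Image, mapping: List[Pixel]) -> List[int]:
        """Module 95: sample one texture value per UV grid point."""
        return [image[row][col] for row, col in mapping]

    def run(self) -> List[int]:
        model = self.select_model()
        uv_grid = self.get_uv_grid(model)
        image = self.get_face_image()
        return self.apply_texture(image, self.compute_mapping(image, uv_grid))
```

Passing the first three modules in as callables keeps the sketch independent of any particular model format or camera API; a real implementation would more likely align facial landmarks with the UV grid than stretch the whole image.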
