Road drivable area detection method based on fusion of monocular vision and lidar

A technique that fuses lidar and monocular vision, applied in the field of intelligent transportation. It addresses the sparsity of lidar point cloud data, the sensitivity of vision to lighting conditions, and high time consumption, and achieves strong robustness, high algorithm efficiency, and a narrowing of the scope.

Embodiment Construction

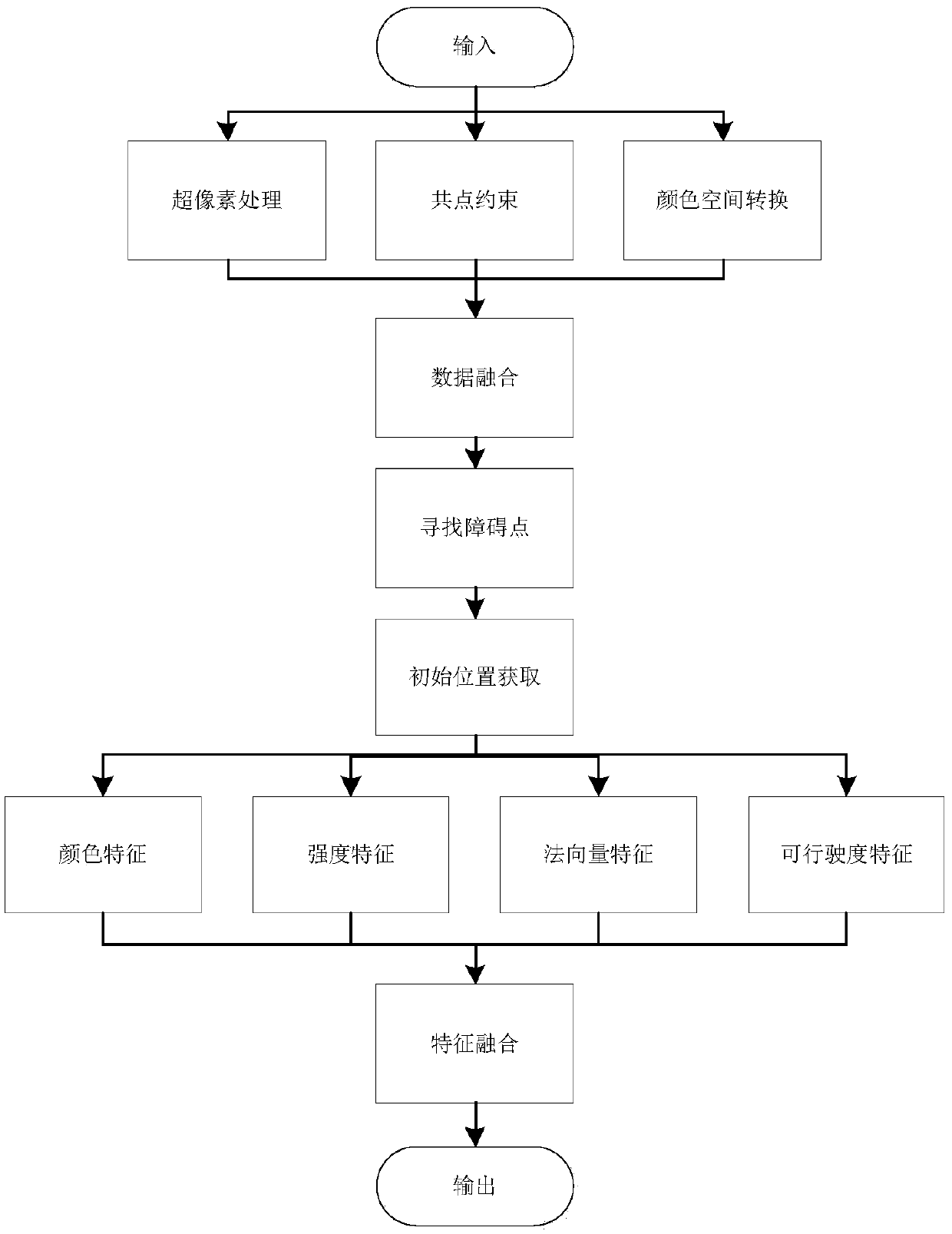

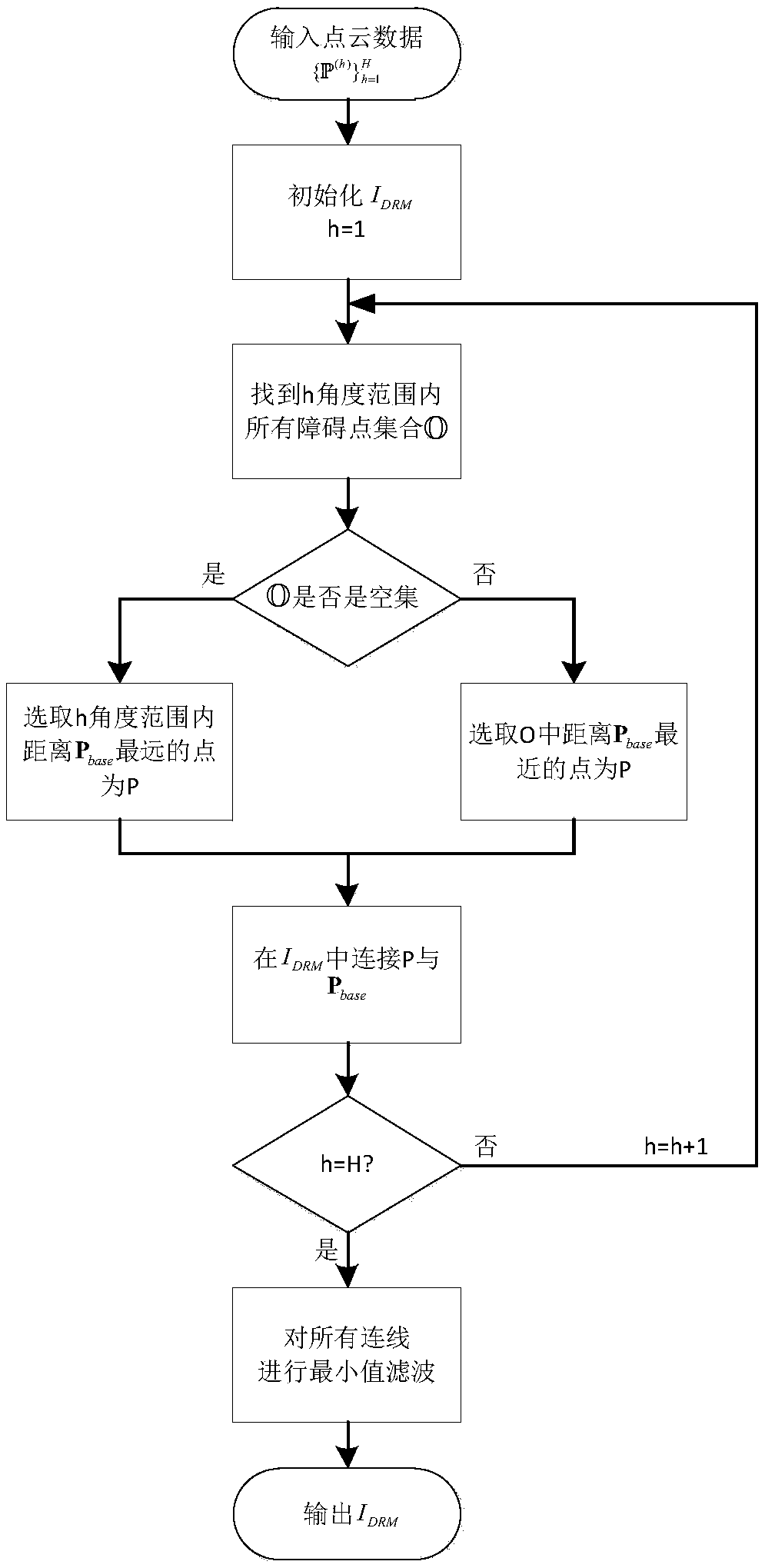

[0042] As shown in Fig. 1, an existing improved linear iterative clustering method combined with edge segmentation is used to perform superpixel segmentation on the image collected by the camera, dividing it into N superpixels. Each superpixel $p_c = (x_c, y_c, z_c, 1)^T$ contains several pixels, where $x_c$, $y_c$, $z_c$ are the averages of the positions of all pixels in the superpixel in the camera coordinate system; likewise, the RGB values of these pixels are unified to the average RGB of all pixels in the superpixel. Then, using existing calibration techniques together with the rotation matrix and the transformation matrix, the conversion matrix is obtained according to formula (1):

[0043]
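The per-superpixel averaging described in [0042] can be illustrated with a minimal, dependency-free sketch. It assumes that a label map from some segmentation step and a per-pixel camera-frame position are already available; the function name `superpixel_means` and the nested-list data layout are hypothetical choices made for illustration, not part of the patent.

```python
from collections import defaultdict


def superpixel_means(labels, rgb, xyz):
    """Average colour and camera-frame position per superpixel.

    labels: H x W nested list of superpixel ids (assumed given).
    rgb:    H x W nested list of (r, g, b) tuples.
    xyz:    H x W nested list of (x, y, z) camera-frame positions (assumed given).
    Returns {label: {"p_c": (x_c, y_c, z_c, 1.0), "rgb": (r, g, b), "count": n}}.
    """
    sums = defaultdict(lambda: [0.0] * 6)  # running sums: x, y, z, r, g, b
    counts = defaultdict(int)
    for row_labels, row_rgb, row_xyz in zip(labels, rgb, xyz):
        for lab, colour, pos in zip(row_labels, row_rgb, row_xyz):
            acc = sums[lab]
            for i in range(3):
                acc[i] += pos[i]
                acc[3 + i] += colour[i]
            counts[lab] += 1

    result = {}
    for lab, acc in sums.items():
        n = counts[lab]
        mean = [value / n for value in acc]
        result[lab] = {
            "p_c": (mean[0], mean[1], mean[2], 1.0),  # homogeneous coordinates
            "rgb": tuple(mean[3:]),
            "count": n,
        }
    return result
```

Each entry of the result corresponds to one superpixel: its homogeneous mean position and the single mean colour that replaces the colours of its member pixels.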

[0044] The rotation matrix and the conversion matrix are used to establish the conversion relationship between the two coordinate systems, as in formula (2):

[0045]
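As a rough illustration of how a rotation and an offset are commonly combined into one conversion between coordinate systems, the sketch below builds a 4x4 homogeneous matrix and applies it to a homogeneous point. This is the standard textbook construction and is only assumed to resemble formulas (1) and (2); the names `make_transform` and `apply_transform`, and the use of a 3-vector offset `t`, are hypothetical.

```python
def make_transform(R, t):
    """Stack a 3x3 rotation R and a 3-vector t into a 4x4 homogeneous matrix.

    Assumption: the conversion has the usual form [[R, t], [0, 0, 0, 1]].
    """
    rows = [list(R[i]) + [t[i]] for i in range(3)]
    rows.append([0.0, 0.0, 0.0, 1.0])
    return rows


def apply_transform(T, p):
    """Multiply a 4x4 matrix T by a homogeneous point p = (x, y, z, 1)."""
    return tuple(sum(T[i][j] * p[j] for j in range(4)) for i in range(4))
```

For example, with a 90 degree rotation about the z axis and an offset of (1, 2, 3), `apply_transform(make_transform(R, t), (1, 0, 0, 1))` rotates the point first and then shifts it.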

[0046] Each point $p_l = (x_l, y_l, z_l, 1)^T$ obtained by the lidar is projected onto the...
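The text above is cut off before it names the projection target. Purely as an assumption, the sketch below shows one common choice: projecting points that have already been converted to the camera coordinate system onto the image plane with a pinhole model. The intrinsics `fx`, `fy`, `cx`, `cy` and both function names are hypothetical and do not come from the patent.

```python
def project_to_pixel(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x, y, z, 1) to pixel (u, v).

    Returns None for points at or behind the camera plane (z <= 0).
    """
    x, y, z = p_cam[0], p_cam[1], p_cam[2]
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def points_in_image(points_cam, fx, fy, cx, cy, width, height):
    """Keep the points whose projection lands inside a width x height image."""
    kept = []
    for p in points_cam:
        uv = project_to_pixel(p, fx, fy, cx, cy)
        if uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height:
            kept.append((p, uv))
    return kept
```

Once a lidar point has a pixel coordinate, it could be associated with whichever superpixel covers that pixel; whether the patent does exactly this cannot be confirmed from the truncated text.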
