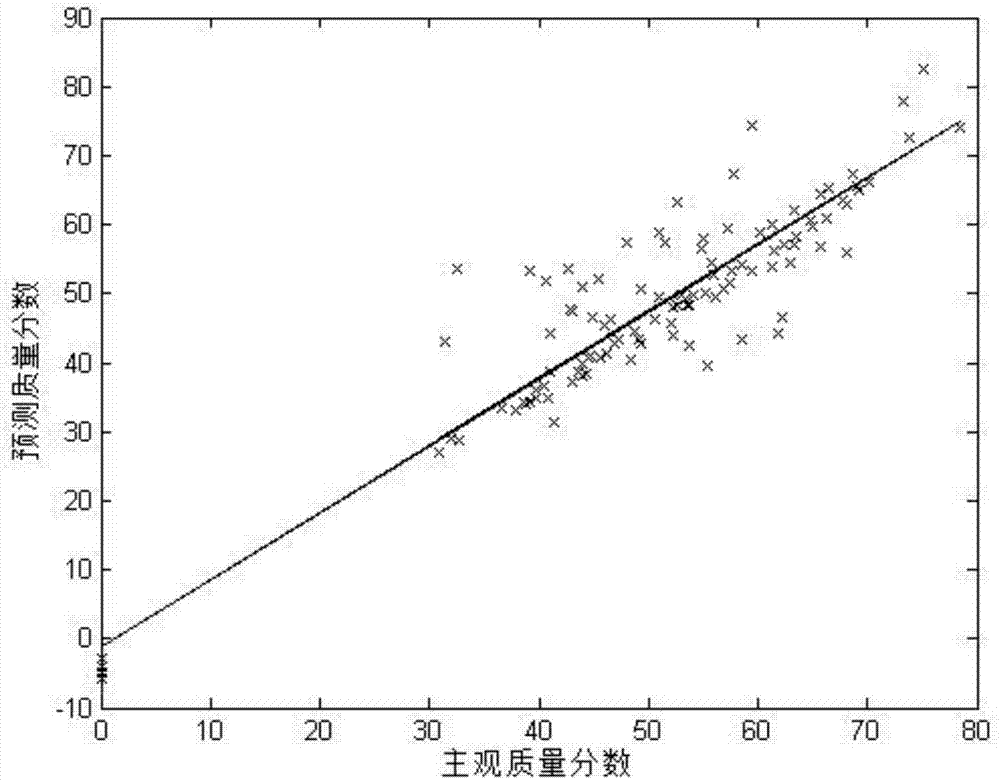

Video quality evaluation method based on visual saliency area and time-space characteristics

A video quality evaluation technique based on visually salient regions, applied in the fields of television, electrical components, image communication, etc. It addresses three problems of existing methods: complex computation, evaluation results that conform poorly to subjective evaluation, and features that cannot represent all the information in the video. The stated effect is more accurate results.


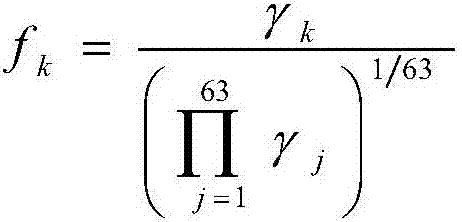


Embodiment Construction

[0052] The present invention will be described in further detail below in conjunction with the accompanying drawings.

[0053] Referring to Figure 1, the specific steps of the present invention are as follows.

[0054] Step 1, extract the video.

[0055] Randomly select one video from the 160 videos in the LIVE video quality assessment database.

[0056] Step 2, randomly select an image frame from the selected video.
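Steps 1 and 2 amount to two uniform random draws. The following is a minimal sketch, assuming the videos are addressed by index and the frame count of each video is already known; `pick_video_and_frame`, `frame_counts`, and the 250-frame figure are hypothetical and do not come from the patent.

```python
import random


def pick_video_and_frame(num_videos, frame_counts, rng=None):
    """Pick a random video index, then a random frame index within that video.

    frame_counts[i] is the number of frames in video i (assumed to be known).
    """
    rng = rng or random.Random()
    video_idx = rng.randrange(num_videos)               # Step 1: choose a video
    frame_idx = rng.randrange(frame_counts[video_idx])  # Step 2: choose a frame
    return video_idx, frame_idx


# Illustrative data only: 160 videos, each assumed to contain 250 frames.
frame_counts = [250] * 160
video_idx, frame_idx = pick_video_and_frame(160, frame_counts, random.Random(0))
```

Passing a seeded `random.Random` makes the selection reproducible, which helps when comparing evaluation runs.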

[0057] Step 3, extract the visually salient regions of the image.

[0058] Find the maximum gray value and the minimum gray value in the image-plane coordinate system of the selected frame.
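As a small illustration of this step, the sketch below scans a grayscale frame for its extreme gray values. It assumes the frame is stored as rows of integer gray levels in the range 0 to 255; the function name `gray_range` and the sample frame are hypothetical.

```python
def gray_range(frame):
    """Return (min_gray, max_gray) for a frame given as rows of integer gray levels."""
    if not frame or not frame[0]:
        raise ValueError("frame must contain at least one pixel")
    pixels = [p for row in frame for p in row]  # flatten rows into one pixel list
    return min(pixels), max(pixels)


# Illustrative 3x3 frame, not taken from the LIVE database.
frame = [
    [12, 200, 34],
    [90, 60, 255],
    [0, 17, 128],
]
min_gray, max_gray = gray_range(frame)
```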

[0059] Determine an optimal threshold using the maximum between-class variance method (Otsu's method).

[0060] The specific steps of the maximum between-class variance method are as follows:

[0061] In the first step, set the initial threshold of the gray value to 60.

[0062] In the second step, the area surrounded by all the gray values in the selected frame imag...
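The patent's own sub-steps are cut off above, so they are not reproduced here. For orientation only, the sketch below shows the textbook form of the maximum between-class variance method: an exhaustive search over all candidate thresholds, keeping the one that maximizes the between-class variance. This may differ from the procedure in the patent, which starts from an initial threshold of 60. The function name `otsu_threshold`, the 256-level assumption, and the sample frame are hypothetical.

```python
def otsu_threshold(frame, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Class 0 holds pixels with gray <= t, class 1 holds pixels with gray > t.
    frame is a list of rows of integer gray levels in [0, levels).
    """
    # Build the gray-level histogram.
    hist = [0] * levels
    for row in frame:
        for p in row:
            hist[p] += 1

    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_score = 0, -1.0
    count0 = 0  # number of pixels in class 0
    sum0 = 0    # sum of gray values in class 0
    for t in range(levels):
        count0 += hist[t]
        sum0 += t * hist[t]
        if count0 == 0:
            continue  # class 0 is still empty
        count1 = total - count0
        if count1 == 0:
            break     # class 1 is empty from here on
        mean0 = sum0 / count0
        mean1 = (sum_all - sum0) / count1
        # Proportional to the between-class variance (the 1/total^2 factor is
        # constant and does not change which t wins).
        score = count0 * count1 * (mean0 - mean1) ** 2
        if score > best_score:
            best_score, best_t = score, t
    return best_t


# Illustrative bimodal frame: a dark group near 10 and a bright group near 200.
frame = [
    [10, 10, 12, 11],
    [200, 205, 210, 198],
]
threshold = otsu_threshold(frame)
```

For a clearly bimodal frame like this one, the returned threshold falls between the dark and bright groups, so comparing each pixel against it separates the two.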
