Matching method of optical image and radar image based on multi-channel convolutional neural network

The method applies convolutional neural network technology to optical images and is classified under biological neural network models, neural architectures, instruments, and related fields. It addresses the problem that optical and radar images cannot be matched accurately, and its stated effects are fuller utilization, a good feature space, and stable matching results.

Embodiment

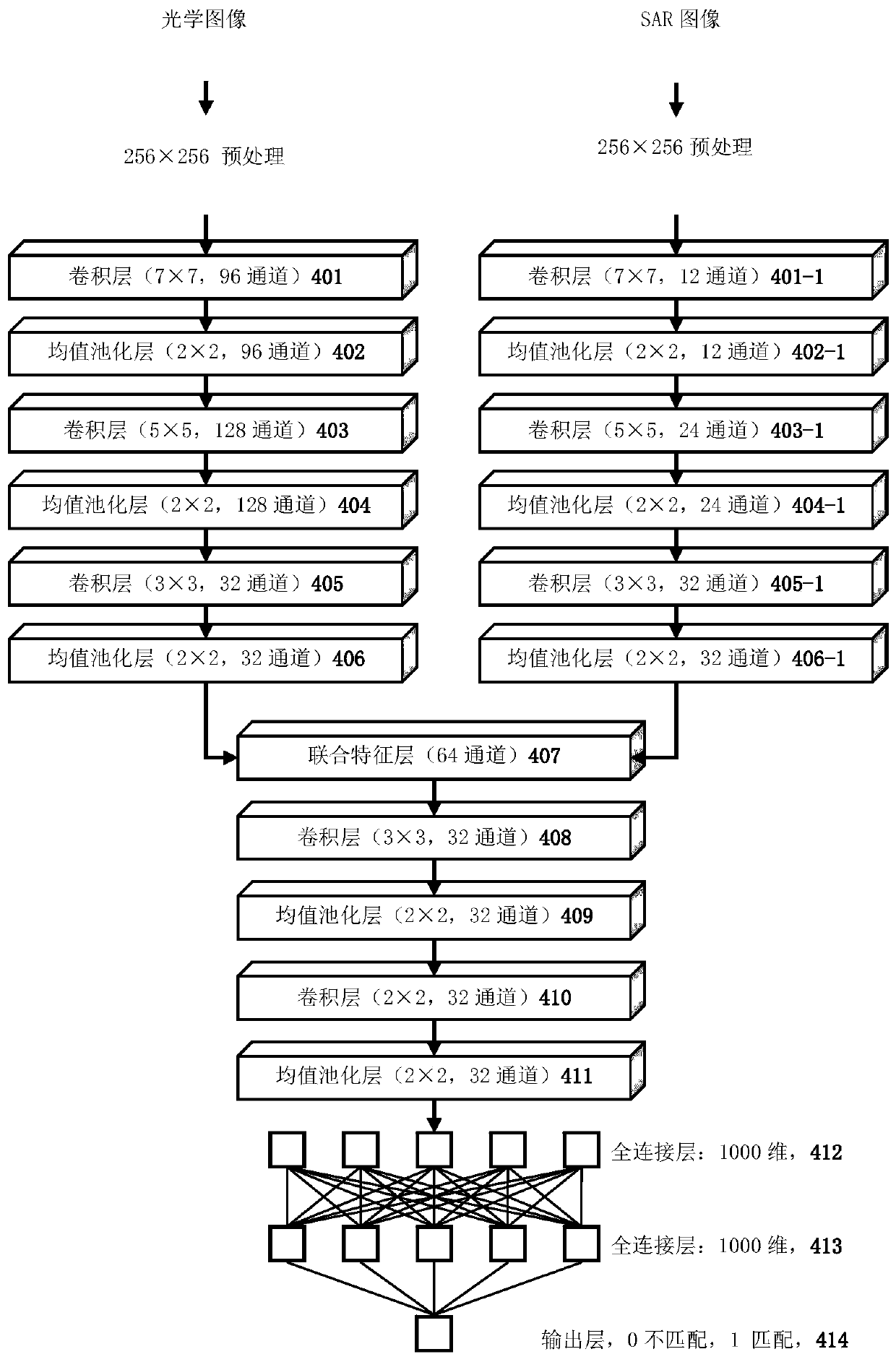

[0050] As shown in Figure 1, the steps are described in detail below, taking the matching of two 1000×1000 images as an example:

[0051] In the preprocessing step, both the optical image and the radar image are compressed to 256×256; steps 401 and 401-1 are then performed.
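The preprocessing step can be illustrated with a minimal sketch. The text does not say how the images are compressed, so nearest-neighbour resampling is assumed here purely for illustration; the function name `resize_nearest` and the synthetic input images are hypothetical.

```python
def resize_nearest(image, out_h, out_w):
    """Resample a 2-D list of pixel values to out_h x out_w.

    Nearest-neighbour sampling is an assumption; the source only says
    the images are "compressed" to 256x256.
    """
    in_h, in_w = len(image), len(image[0])
    # Map each output coordinate back to the source pixel that covers it.
    rows = [min(in_h - 1, (r * in_h) // out_h) for r in range(out_h)]
    cols = [min(in_w - 1, (c * in_w) // out_w) for c in range(out_w)]
    return [[image[r][c] for c in cols] for r in rows]


# Hypothetical 1000x1000 inputs filled with synthetic pixel values.
optical = [[(r + c) % 256 for c in range(1000)] for r in range(1000)]
radar = [[(r * c) % 256 for c in range(1000)] for r in range(1000)]

optical_256 = resize_nearest(optical, 256, 256)
radar_256 = resize_nearest(radar, 256, 256)
print(len(optical_256), len(optical_256[0]))  # prints: 256 256
```

An averaging or interpolating resampler would serve equally well here; the only property taken from the text is the 256×256 output size for both images.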

[0052] In step 401, 96 7×7 convolutions are applied to the optical image, and the convolution results are fed to ReLU neurons. In step 401-1, 12 7×7 convolutions are applied to the SAR image, and the convolution results are fed to ReLU neurons. Steps 402 and 402-1 are then performed.
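A convolution followed by ReLU, as in steps 401 and 401-1, can be sketched as follows. Stride 1 and no padding are assumptions, since the text does not state them, and the kernel weights are random placeholders rather than trained values. The helper names `conv2d_valid`, `relu`, and `conv_relu_layer` are hypothetical. The demo uses a 16×16 input and 3 kernels so that it runs quickly; the text uses 256×256 inputs with 96 kernels (optical) and 12 kernels (SAR).

```python
import random


def conv2d_valid(image, kernel):
    """Slide one k x k kernel over a 2-D image (assumed stride 1, no padding).

    As in most CNN frameworks, the kernel is not flipped (cross-correlation).
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Clamp negative responses to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def conv_relu_layer(image, kernels):
    """Produce one ReLU-activated feature map per kernel."""
    return [relu(conv2d_valid(image, kernel)) for kernel in kernels]


# Toy demo with placeholder weights (not trained values).
rng = random.Random(0)
image = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(16)]
kernels = [
    [[rng.uniform(-0.1, 0.1) for _ in range(7)] for _ in range(7)]
    for _ in range(3)
]
maps = conv_relu_layer(image, kernels)
print(len(maps), len(maps[0]), len(maps[0][0]))  # prints: 3 10 10
```

Under the same assumptions, a 256×256 input would yield 250×250 feature maps, one per kernel.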

[0053] In step 402, the neuron outputs obtained in step 401 undergo 2×2 mean downsampling. In step 402-1, the neuron outputs obtained in step 401-1 undergo 2×2 mean downsampling. Steps 403 and 403-1 are then performed.
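The 2×2 mean downsampling of steps 402 and 402-1 can be sketched as below. Non-overlapping blocks (stride 2) are assumed, and a trailing odd row or column is simply dropped; neither detail is stated in the text. The function name `mean_pool_2x2` is hypothetical.

```python
def mean_pool_2x2(feature_map):
    """Average each non-overlapping 2x2 block (assumed stride 2).

    A trailing odd row or column is dropped; this is an assumption.
    """
    out_h = len(feature_map) // 2
    out_w = len(feature_map[0]) // 2
    return [
        [
            (
                feature_map[2 * r][2 * c]
                + feature_map[2 * r][2 * c + 1]
                + feature_map[2 * r + 1][2 * c]
                + feature_map[2 * r + 1][2 * c + 1]
            )
            / 4.0
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


fmap = [
    [1.0, 3.0, 0.0, 2.0],
    [5.0, 7.0, 4.0, 6.0],
    [0.0, 0.0, 8.0, 8.0],
    [2.0, 2.0, 8.0, 8.0],
]
pooled = mean_pool_2x2(fmap)
print(pooled)  # prints: [[4.0, 3.0], [1.0, 8.0]]
```

Each feature map is pooled independently, so the number of maps is unchanged while each spatial dimension is halved.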

[0054] In step 403, 128 5×5 convolutions are applied to the optical image, and the convolution results are fed to ReLU neurons; in step 4...
