Improved artificial neural network for language modelling and prediction

An artificial neural network using a hidden-layer technique, applied in the field of language modelling and prediction.

Embodiment Construction

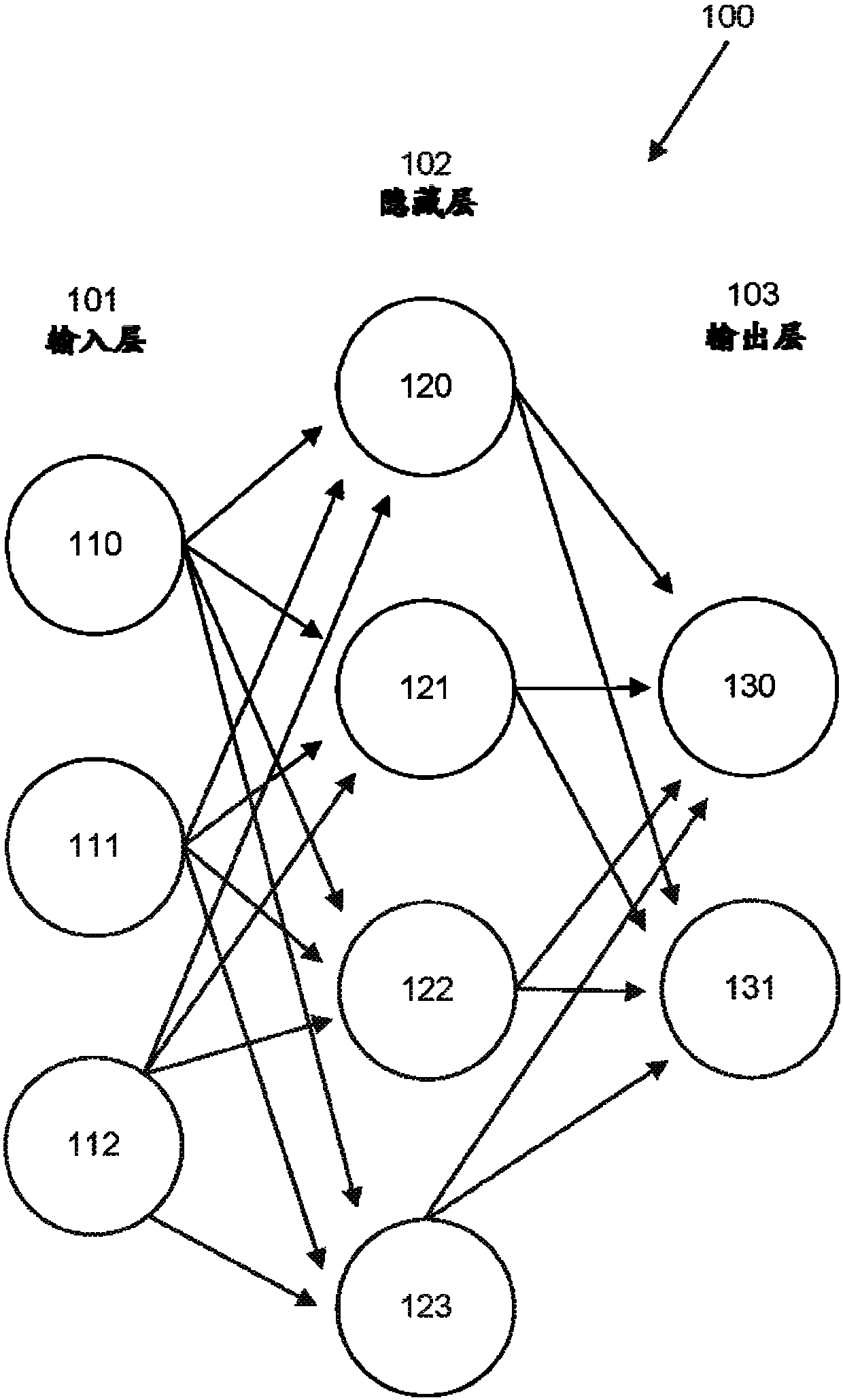

[0038] Figure 1 depicts a simple ANN 100 according to the prior art. In essence, an artificial neural network such as ANN 100 is a chain of mathematical functions organized in directionally dependent layers, such as input layer 101, hidden layer 102 and output layer 103, each layer comprising a plurality of units or nodes 110-131. The ANN 100 is called a "feed-forward neural network" because the output of each layer 101-103 is used as the input to the next layer (or, in the case of the output layer 103, as the output of the ANN 100), and there are no backward steps or loops. It should be understood that the number of units 110-131 depicted in Figure 1 is exemplary, and a typical ANN includes many more units in each layer 101-103.
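
The feed-forward structure described in [0038] can be illustrated with a minimal sketch in plain Python. It assumes one hidden layer, a sigmoid activation on the hidden units and a softmax on the output units; the activation functions, the uniform weight initialisation and the layer sizes (3, 4 and 3 units) are illustrative assumptions, not details taken from this document. Helper names such as `dense`, `random_layer` and `forward` are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, biases)


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Weighted sum per unit; weights[j][i] connects input i to unit j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-z)) for z in v]


def softmax(v: Vector) -> Vector:
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in v]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Hypothetical initialisation: uniform weights in [-1, 1], zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x: Vector, layers: List[Layer]) -> Vector:
    """Feed-forward pass: each layer's output is the next layer's input.

    Nothing is fed back to an earlier layer, so there are no loops.
    """
    h = x
    for i, (weights, biases) in enumerate(layers):
        z = dense(h, weights, biases)
        # Assumed activations: sigmoid for hidden layers, softmax for the
        # output layer so the outputs can be read as a distribution.
        h = softmax(z) if i == len(layers) - 1 else sigmoid(z)
    return h


rng = random.Random(0)
# Hypothetical sizes: 3 input units, 4 hidden units, 3 output units.
layers = [random_layer(3, 4, rng), random_layer(4, 3, rng)]
out = forward([1.0, 0.0, 0.0], layers)
print(out)
```

The loop in `forward` visits the layers strictly in order, which mirrors the "no backward step or loop" property: data only ever flows from input layer to hidden layer to output layer.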

[0039] In operation of the ANN 100, an input is provided at the input layer 101. This typically involves mapping the real-world input into a discrete form suitable for the input layer 101 (i.e., a form that can be input to each of the units 110-112 of the input layer 101)...
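
Paragraph [0039] is truncated here, so the exact mapping used is not stated. For language modelling, one common way to map a word into a discrete form for an input layer is one-hot encoding over a vocabulary. The sketch below assumes that scheme and uses a hypothetical three-word vocabulary, so that each word activates exactly one of three input units (matching the three input units 110-112). The function names `build_vocab` and `one_hot` are illustrative only.

```python
from typing import Dict, List


def build_vocab(words: List[str]) -> Dict[str, int]:
    """Assign each distinct word an index, in order of first appearance."""
    vocab: Dict[str, int] = {}
    for w in words:
        if w not in vocab:
            vocab[w] = len(vocab)
    return vocab


def one_hot(word: str, vocab: Dict[str, int]) -> List[float]:
    """Map a word to a vector with a single 1.0 at that word's index."""
    if word not in vocab:
        raise KeyError(f"out-of-vocabulary word: {word!r}")
    vec = [0.0] * len(vocab)
    vec[vocab[word]] = 1.0
    return vec


vocab = build_vocab(["the", "cat", "sat", "the"])
print(vocab)                  # {'the': 0, 'cat': 1, 'sat': 2}
print(one_hot("cat", vocab))  # [0.0, 1.0, 0.0]
```

A real language model would typically use a much larger vocabulary plus a reserved token for unknown words; raising `KeyError` here simply keeps the sketch explicit about out-of-vocabulary input.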
