Video highlight detection with pairwise deep ranking

A video highlight technique, applied to video highlight detection with pairwise deep ranking, that addresses the problem of redundant, unstructured, and lengthy video.

Embodiment Construction

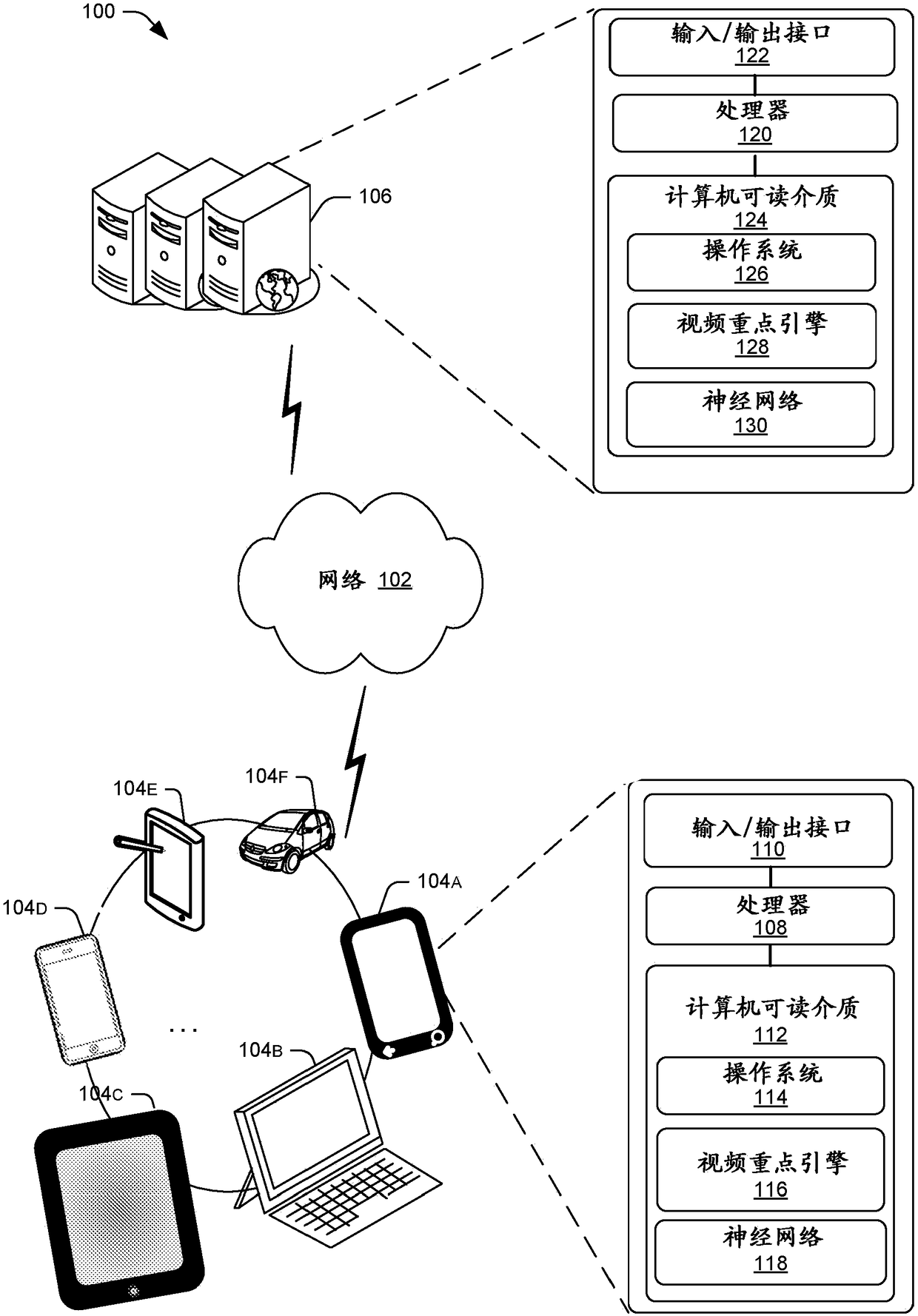

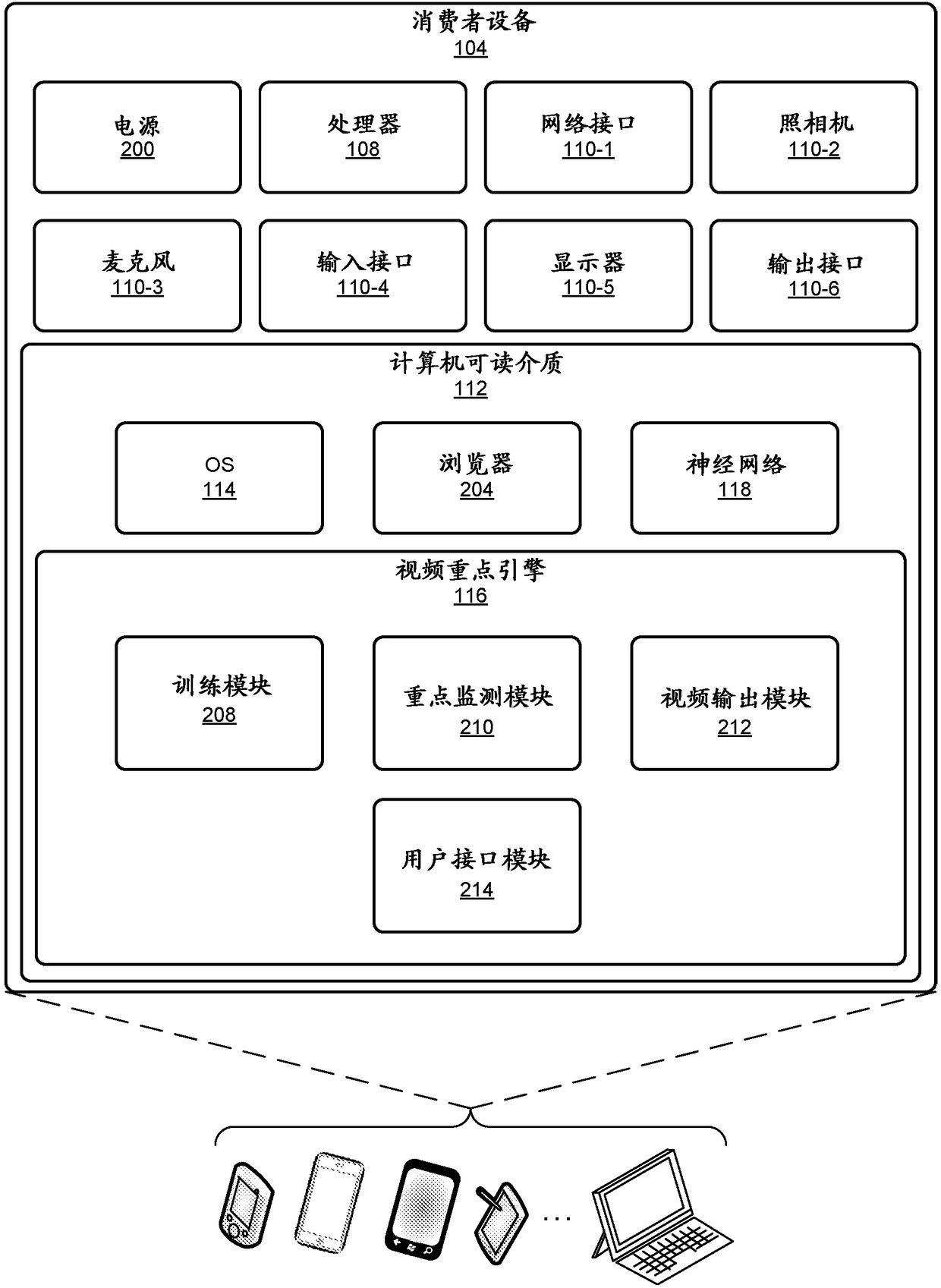

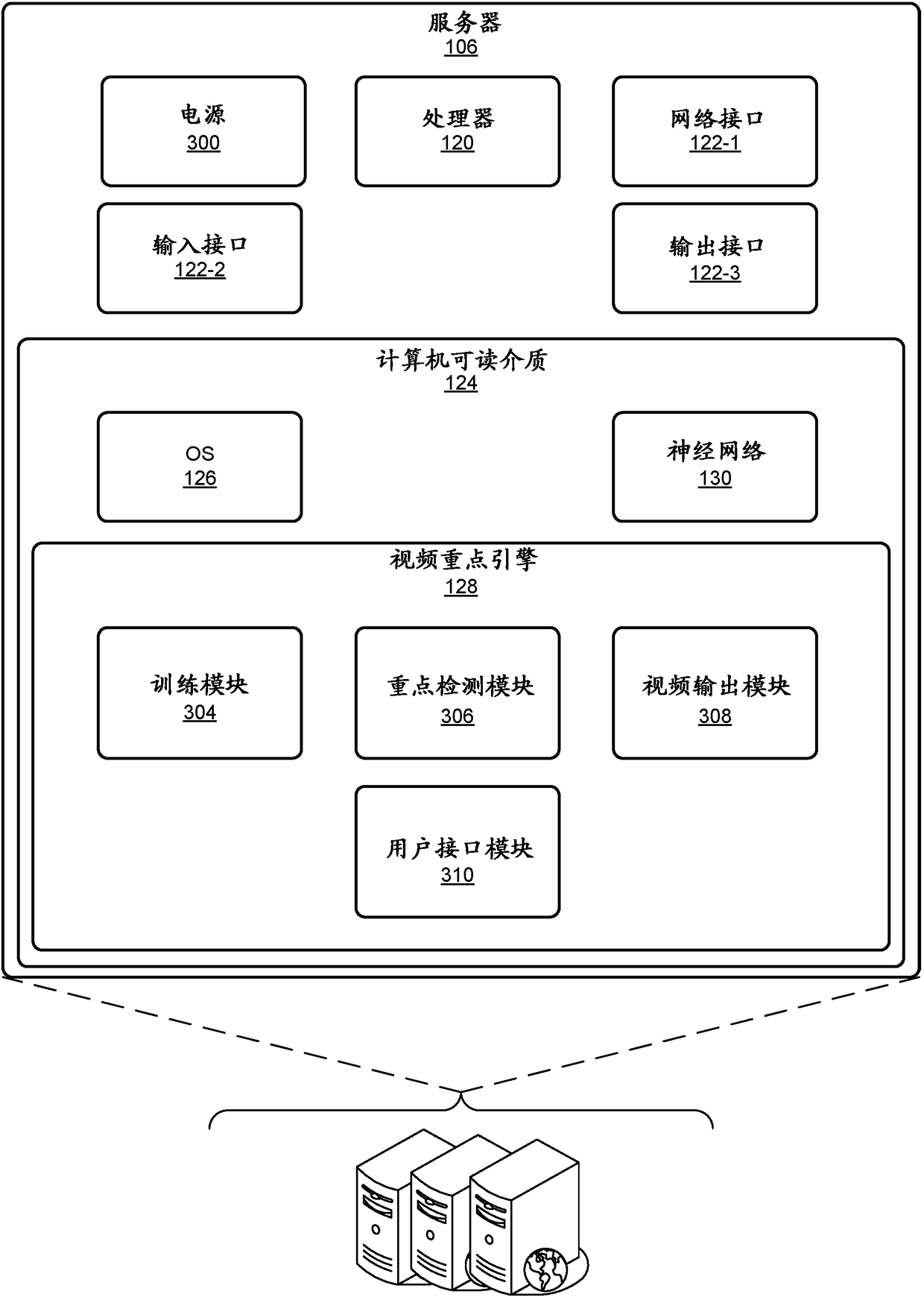

[0017] Concepts and techniques are described herein for providing a video highlight detection system for generating highlight content output to a user for accessing large video streams.

[0018] Overview

[0019] Current systems that provide highlights of video content cannot effectively identify spatial moments in a video stream. The advent of wearable devices such as camcorders and smart glasses has made it possible to document daily life as first-person video. Browsing through such long, unstructured videos is time-consuming and tedious.

[0020] In some examples, the techniques described herein identify moments of major or special interest (e.g., highlights) in a video (e.g., a first-person video) for use in generating a summary of the video.

[0021] In one example, the system uses a pairwise deep ranking model that employs deep learning techniques to learn the relationship between highlight and non-highlight video segments. The result of deep learning can b...
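
The pairwise ranking idea can be illustrated with a minimal sketch. It is not the model described in this application: it assumes a linear scorer in place of a deep network, a hinge loss with a fixed margin, and made-up two-dimensional segment features. The names `score`, `pairwise_hinge_loss`, and `train` are hypothetical. The sketch only shows the core constraint, that a highlight segment should score higher than a non-highlight segment from the same video.

```python
import random

def score(weights, features):
    """Linear stand-in for a learned scoring network (assumption)."""
    return sum(w * x for w, x in zip(weights, features))

def pairwise_hinge_loss(s_highlight, s_other, margin=1.0):
    """Zero once the highlight outscores the other segment by at least `margin`."""
    return max(0.0, margin - s_highlight + s_other)

def train(pairs, dim, lr=0.1, epochs=50, margin=1.0, seed=0):
    """Subgradient descent on the pairwise hinge loss over (highlight, other) pairs."""
    rng = random.Random(seed)
    weights = [0.0] * dim
    pairs = list(pairs)
    for _ in range(epochs):
        rng.shuffle(pairs)
        for hi, lo in pairs:
            loss = pairwise_hinge_loss(score(weights, hi), score(weights, lo), margin)
            if loss > 0:
                # Push the highlight score up and the other segment's score down.
                weights = [w + lr * (h - l) for w, h, l in zip(weights, hi, lo)]
    return weights

# Toy (highlight, non-highlight) feature pairs; the values are illustrative only.
pairs = [
    ([0.9, 0.1], [0.2, 0.8]),
    ([0.8, 0.3], [0.1, 0.7]),
    ([0.7, 0.2], [0.3, 0.9]),
]
weights = train(pairs, dim=2)
ranked_correctly = all(score(weights, hi) > score(weights, lo) for hi, lo in pairs)
```

After training, each segment of a new video could be scored with `score`, and the highest-scoring segments kept as candidate highlights for a summary.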
