Structured sparse representation and low-dimension embedding combined dictionary learning method

A sparse representation and low-dimensional embedding technology, applied in character and pattern recognition, instruments, computer parts, and similar fields, which solves the problems of weak discriminative ability and poor class discrimination of the representation coefficients.

Embodiment Construction

[0054] The present invention will be described in detail below in combination with specific embodiments.

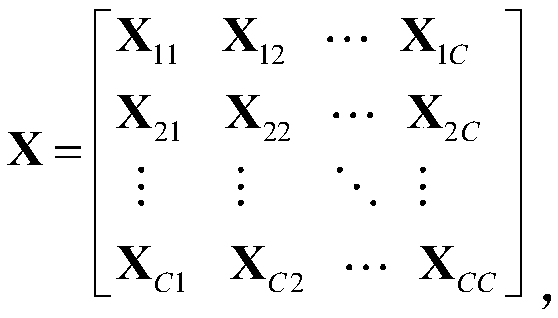

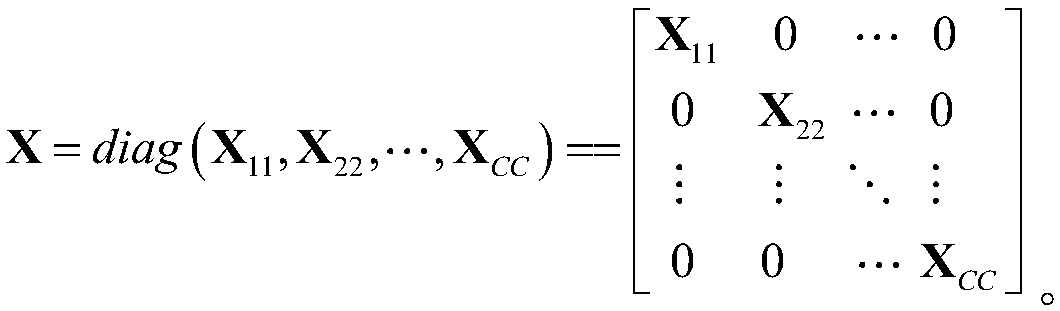

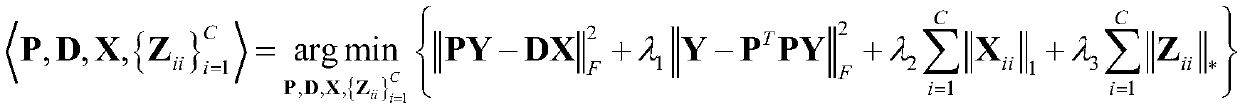

[0055] The present invention is a dictionary learning method that combines structured sparse representation and low-dimensional embedding. Dictionary construction and learning of the dimensionality-reduction projection matrix are carried out jointly, in an alternating manner. In the low-dimensional projection space, the sparse representation coefficient matrix is forced to have a block-diagonal structure, which enhances the inter-class incoherence of the dictionary, while the low-rank property of the representation coefficients on each class sub-dictionary is exploited to maintain the intra-class correlation of the dictionary. Dictionary construction and projection learning promote each other and fully preserve the sparse structure of the data, so that the resulting coding coefficients are more class-discriminative. Specifically, the method proceeds as follows:

[0056] Step 1. Read in the feature data se...
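The two structural properties named in [0055] (a block-diagonal coefficient matrix and low-rank coefficients on each class sub-dictionary) can be illustrated with a minimal NumPy sketch. This is not the patented procedure, whose steps are not fully shown here; it only measures the two quantities on a given coefficient matrix. The function names, the variable `X`, and the label arrays are hypothetical, and the nuclear norm is assumed here as the usual convex surrogate for rank.

```python
import numpy as np

def block_diagonal_mask(atom_labels, sample_labels):
    """mask[k, i] is True when dictionary atom k and sample i share a class."""
    atom_labels = np.asarray(atom_labels)
    sample_labels = np.asarray(sample_labels)
    return atom_labels[:, None] == sample_labels[None, :]

def structure_penalties(X, atom_labels, sample_labels):
    """Return (off_block_energy, nuclear_norm_sum) for coefficient matrix X.

    X has one row per dictionary atom and one column per sample.
    off_block_energy: squared coefficients that pair an atom with a sample
        of a different class (zero for a perfectly block-diagonal X).
    nuclear_norm_sum: sum of nuclear norms of the per-class diagonal blocks
        (assumed surrogate for the low-rank property).
    """
    atom_labels = np.asarray(atom_labels)
    sample_labels = np.asarray(sample_labels)
    mask = block_diagonal_mask(atom_labels, sample_labels)
    off_block_energy = float(np.sum(X[~mask] ** 2))

    nuclear_norm_sum = 0.0
    for c in np.unique(sample_labels):
        block = X[np.ix_(atom_labels == c, sample_labels == c)]
        if block.size == 0:  # class without atoms: nothing to measure
            continue
        nuclear_norm_sum += float(np.linalg.norm(block, ord="nuc"))
    return off_block_energy, nuclear_norm_sum

# Toy example: 2 classes, 2 atoms per class, 3 samples per class.
atom_labels = [0, 0, 1, 1]
sample_labels = [0, 0, 0, 1, 1, 1]
X = np.zeros((4, 6))
X[:2, :3] = 1.0   # class-0 samples use only class-0 atoms (rank-1 block)
X[2:, 3:] = 1.0   # class-1 samples use only class-1 atoms (rank-1 block)
off_energy, nuc_sum = structure_penalties(X, atom_labels, sample_labels)

# A coefficient leaking across classes increases the off-block energy.
X_leaky = X.copy()
X_leaky[0, 3] = 0.5
off_energy_leaky, _ = structure_penalties(X_leaky, atom_labels, sample_labels)
```

In an alternating scheme of the kind described, quantities like these would typically appear as penalty terms while the dictionary, coefficients, and projection matrix are updated in turn; how the patent weights or optimizes them is not specified in the text shown here.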
