A Speech Emotion Recognition Method Based on Multi-scale Deep Convolutional Recurrent Neural Network

The technology combines speech emotion recognition with a recurrent neural network and is applied in speech analysis, instruments, and related fields. It can solve problems such as ignoring discrimination, and it achieves the effect of alleviating the lack of samples.


Embodiment Construction

[0045] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments.

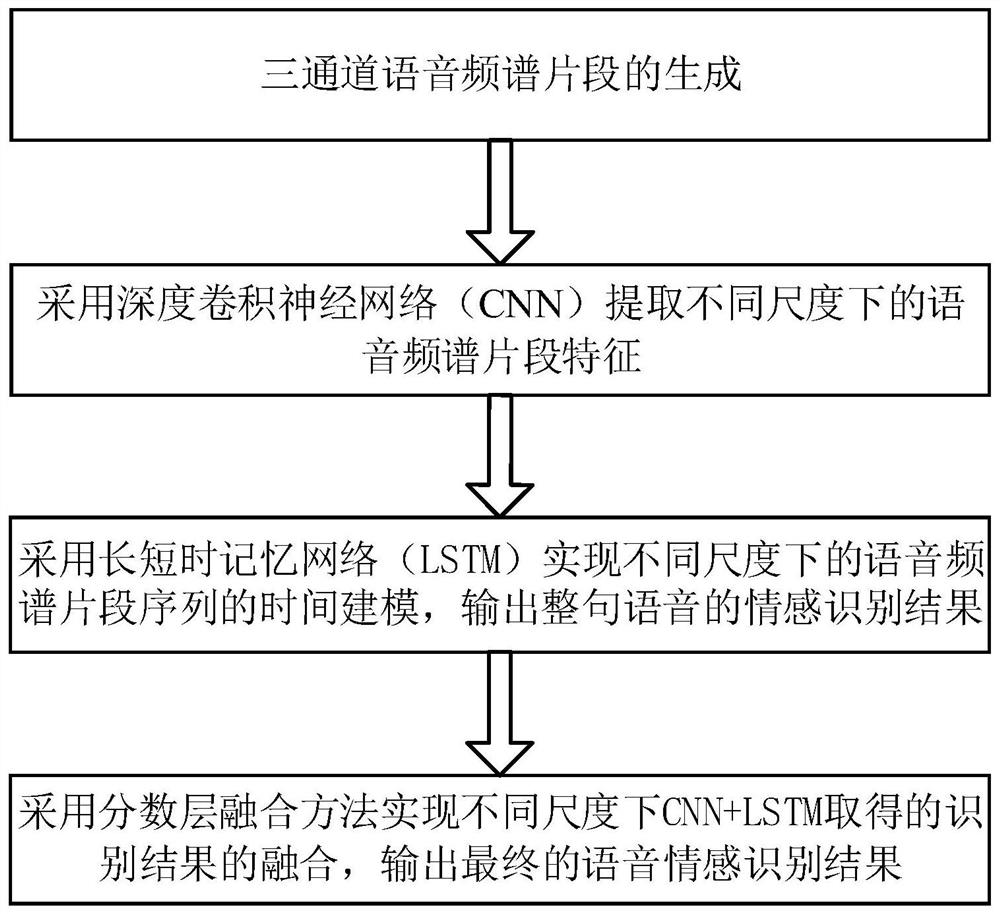

[0046] Figure 1 is a flowchart of the present invention, which mainly comprises:

[0047] Step 1: Generation of three-channel speech spectrum segments;

[0048] Step 2: Use a deep convolutional neural network (CNN) to extract features from speech spectrum segments at different scales;

[0049] Step 3: Use a long short-term memory network (LSTM) to perform temporal modeling of the speech spectrum segment sequences at different scales, and output the emotion recognition result for the entire utterance;

[0050] Step 4: Use a score-level fusion method to fuse the recognition results obtained by CNN+LSTM at different scales, and output the final speech emotion recognition result.

[0051] I. The implementation of each step of the flowchart of the present invention is described in detail as follows in conjunction wit...
