Method for fusing bilingual predefined translation pairs into a neural machine translation model

A method for fusing predefined bilingual translation pairs into a neural machine translation model, applied in the field of neural machine translation. It addresses the problems that existing approaches cannot guarantee that predefined phrases are translated as specified, reduce translation speed, or require complex modifications, and it increases the likelihood that the predefined phrases are translated successfully.

Embodiment Construction

[0026] The present invention is further described below with reference to the accompanying drawings and specific embodiments, so that those skilled in the art can better understand and implement it. The examples given are not intended to limit the present invention.

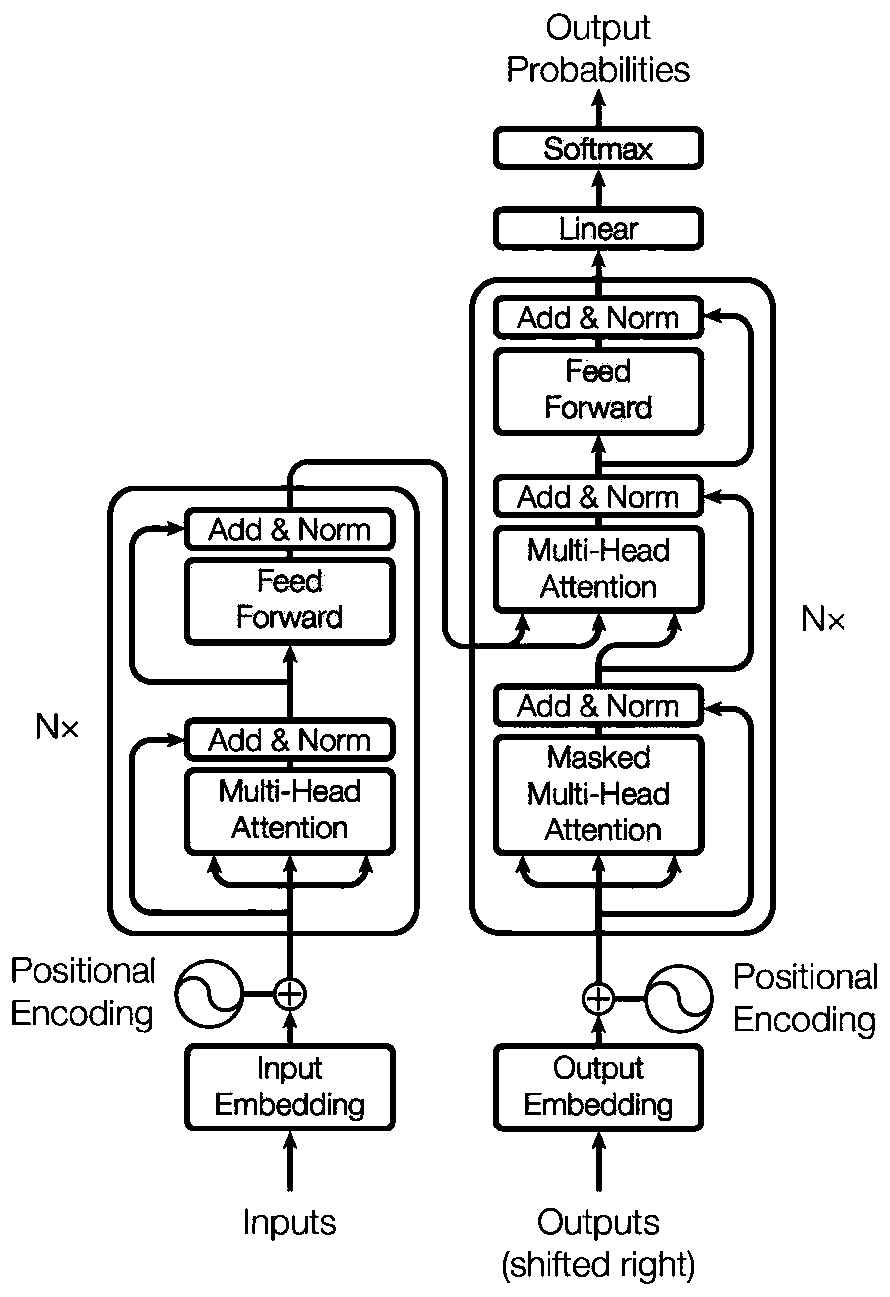

[0027] Background: attention-based NMT model

[0028] Neural machine translation systems generally use an end-to-end model consisting of an encoder and a decoder. During translation, the encoder converts the input sentence into a sentence representation, which is passed to the decoder. The decoder takes the encoder's output and, combined with other mechanisms (such as the attention mechanism), produces the translation one word at a time: each output word is fed back into the decoder as input for generating the next word. This continues until the translation of the sentence is complete.
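The word-by-word decoding loop described in [0028] can be sketched as follows. This is a minimal illustration, not the patent's implementation: `greedy_decode`, `toy_encode`, `toy_decode_step`, and the `EOS` marker are hypothetical names, and the toy functions are trivial stand-ins for a trained encoder and attention-based decoder.

```python
from typing import Callable, List, Sequence

EOS = "</s>"  # hypothetical end-of-sentence marker (an assumption)


def greedy_decode(
    encode: Callable[[Sequence[str]], List[str]],
    decode_step: Callable[[List[str], List[str]], str],
    source: Sequence[str],
    max_len: int = 50,
) -> List[str]:
    """Encode the source once, then emit one word per step.

    The words produced so far are passed back into the decoder at
    every step, so each new word is conditioned on the previous ones.
    """
    memory = encode(source)      # sentence representation from the encoder
    output: List[str] = []
    for _ in range(max_len):     # hard cap in case EOS is never produced
        word = decode_step(memory, output)
        if word == EOS:          # the translation of this sentence ends
            break
        output.append(word)      # fed back to the decoder on the next step
    return output


# Toy stand-ins (assumptions): a real system would use neural networks.
def toy_encode(source: Sequence[str]) -> List[str]:
    return [tok.upper() for tok in source]


def toy_decode_step(memory: List[str], prefix: List[str]) -> str:
    # Crude substitute for attention: look at the source position that
    # matches the number of words already generated.
    pos = len(prefix)
    return memory[pos] if pos < len(memory) else EOS


translation = greedy_decode(toy_encode, toy_decode_step, ["hello", "world"])
print(translation)  # ['HELLO', 'WORLD']
```

In a real attention-based model, `decode_step` would compute attention weights over all encoder states rather than reading a single position, and would typically return a probability distribution over the target vocabulary from which the next word is chosen.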

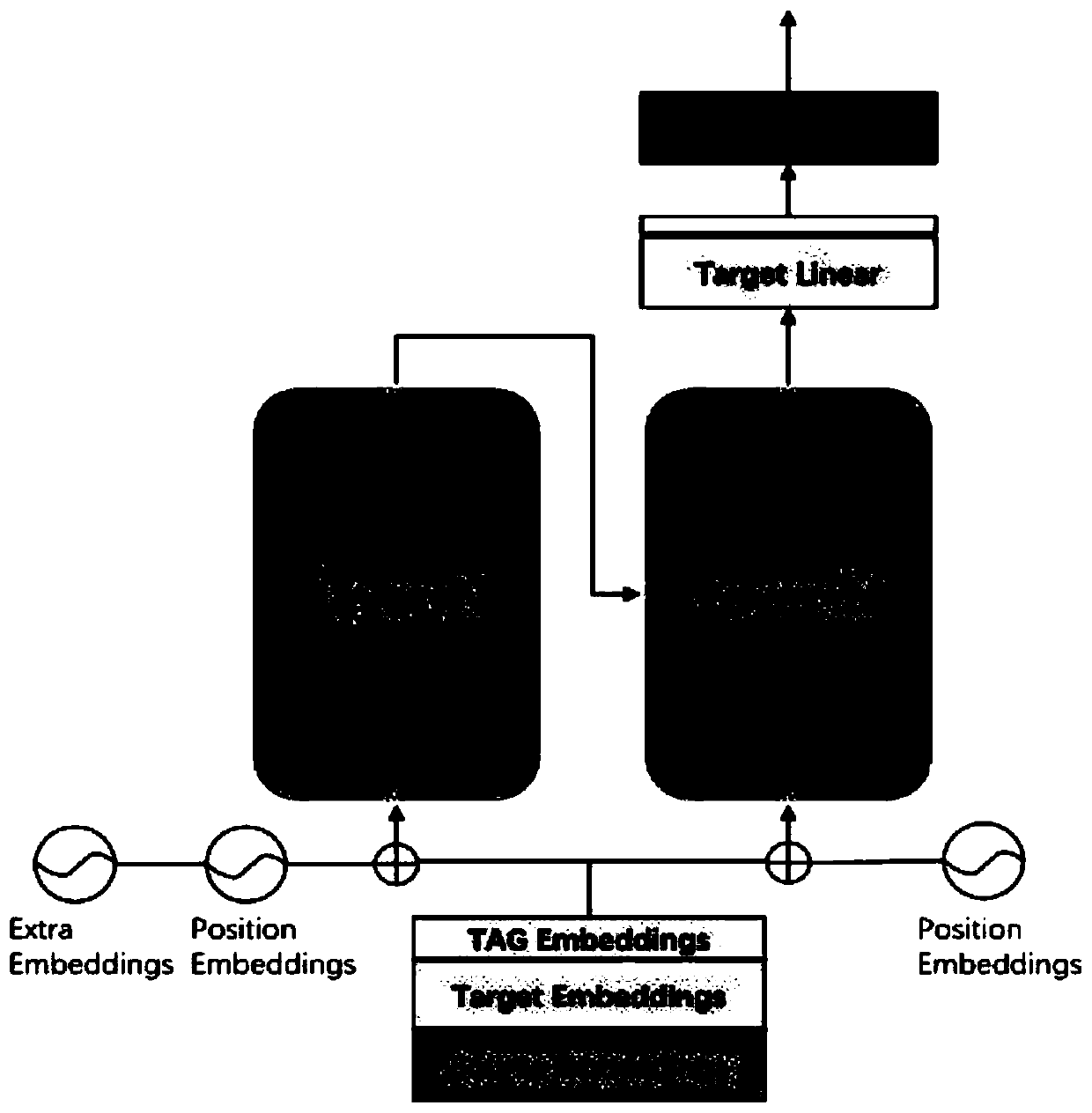

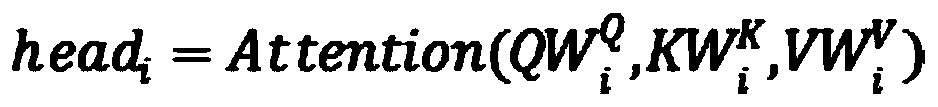

[0029] T...
