Method for obtaining dynamic texture description model and video abnormal behavior retrieval method

A dynamic texture description model technology, applied in character and pattern recognition, instruments, computer components, and related fields. It addresses two problems: video appearance information is not taken into account, and temporal feature information of the video cannot be obtained. The stated effects are fast computation, simple implementation, and efficient description.

Embodiment Construction

[0038] The method for obtaining a dynamic texture description model and the video abnormal behavior retrieval method of the present invention are described in more detail below with reference to the schematic diagrams, which show a preferred embodiment of the present invention. It should be understood that those skilled in the art can modify the invention described here while still achieving its advantageous effects. The following description should therefore be understood as general guidance for those skilled in the art, not as a limitation of the present invention.

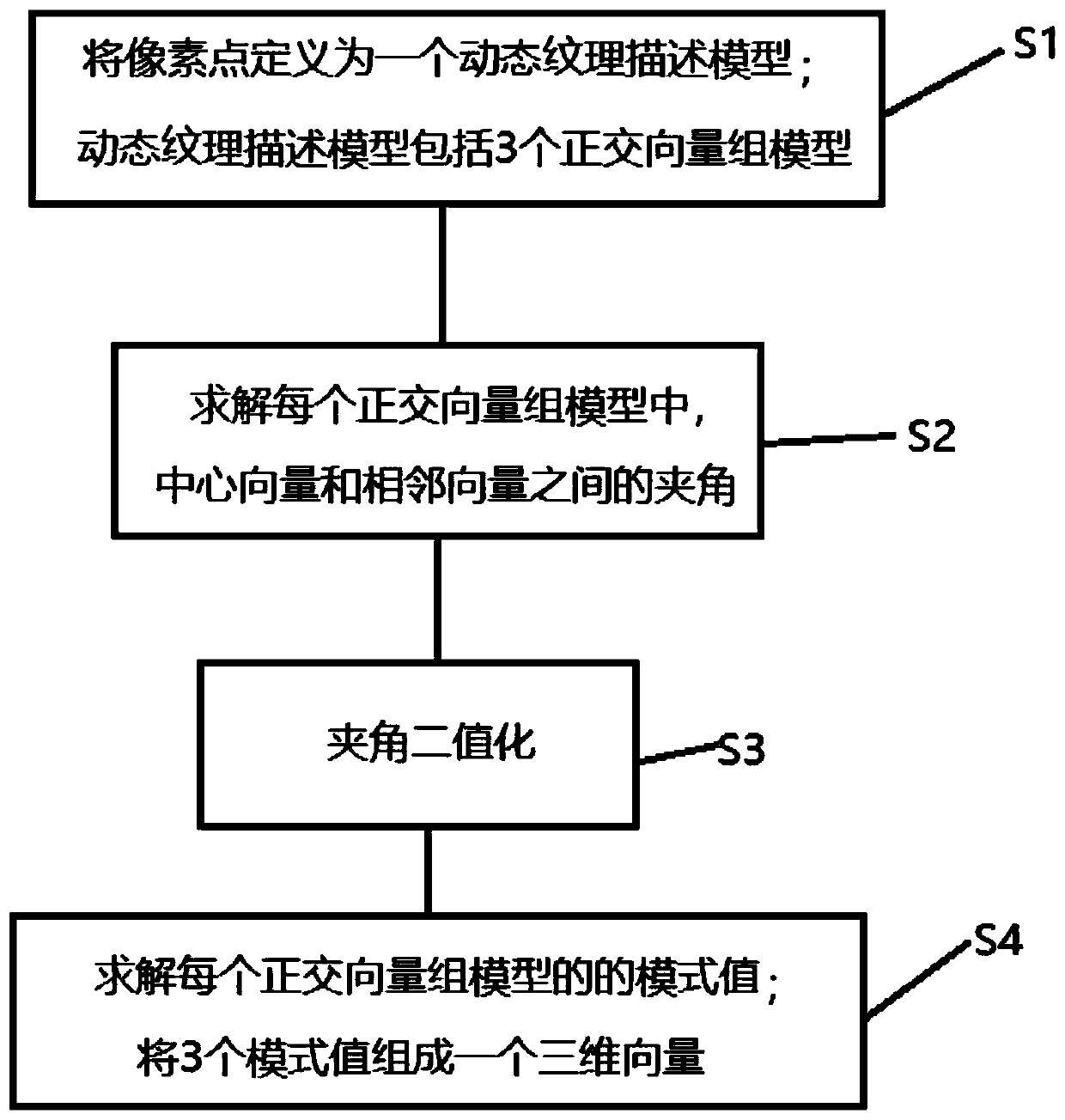

[0039] As shown in Figure 1, this embodiment proposes a method for obtaining a dynamic texture description model that includes the following steps S1-S4:

[0040] Step S1: For a pixel in a given video, define a dynamic texture description model for that pixel; the dynamic texture description model includes an orthogonal vector ...
