Multi-sample facial expression recognition method based on low-rank tensor decomposition

A tensor decomposition and expression recognition technology applied in the field of facial expression recognition. It addresses two problems: expression features are easily affected by differences between individuals, and the nonlinear characteristics of expressions are difficult to preserve. The effect is an improved facial expression recognition rate and improved representational ability of the expression features.

Embodiment Construction

[0016] In order to make the purpose, technical solution and advantages of the present invention clearer, the present invention is described in further detail below with reference to the embodiments and the accompanying drawings.

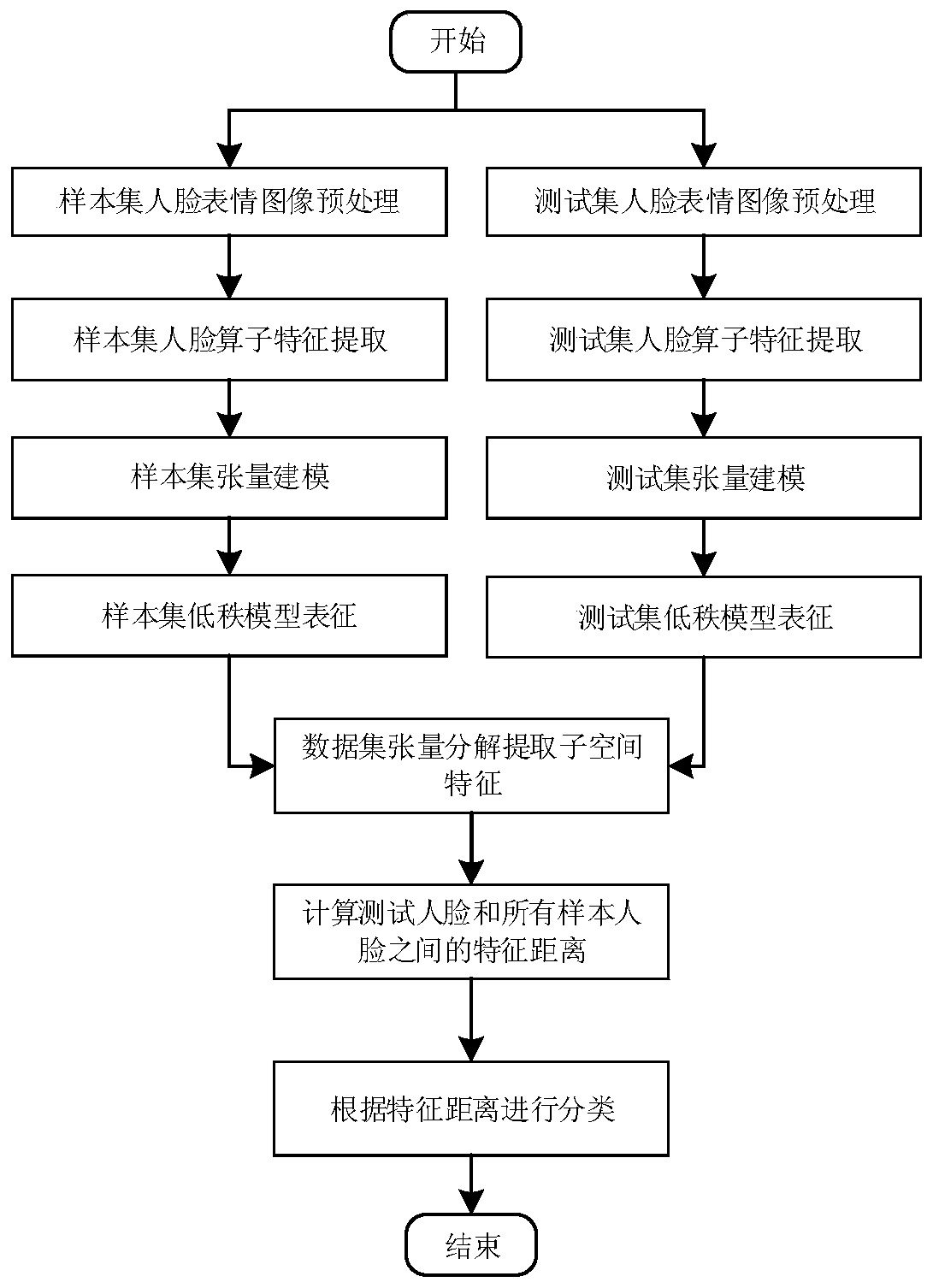

[0017] The overall framework of the multi-sample facial expression recognition method based on low-rank tensor decomposition proposed by the present invention is shown in Figure 1.

[0018] The present invention provides a facial expression recognition method comprising the following steps:

[0019] Perform steps S1 to S5 on the sample set and test set respectively:

[0020] S1: Image preprocessing: use a face detection algorithm to crop the face region from the image;
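The cropping part of step S1 can be sketched as follows. This is a minimal illustration, not the patented implementation: the text does not say which face detection algorithm is used, so the sketch assumes some detector has already returned candidate boxes as `(x, y, width, height)` tuples. The names `largest_box` and `crop_face`, and the choice of keeping the largest detection, are hypothetical.

```python
from typing import List, Sequence, Tuple

Image = List[List[int]]          # grayscale image as rows of pixel intensities
Box = Tuple[int, int, int, int]  # (x, y, width, height), assumed detector output


def largest_box(boxes: Sequence[Box]) -> Box:
    """Pick the detection with the largest area (an assumed heuristic)."""
    return max(boxes, key=lambda b: b[2] * b[3])


def crop_face(img: Image, box: Box) -> Image:
    """Crop the face region, clipping the box to the image borders."""
    x, y, w, h = box
    rows, cols = len(img), len(img[0])
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + w, cols), min(y + h, rows)
    return [row[x0:x1] for row in img[y0:y1]]
```

Clipping to the image borders keeps the crop valid when a detector reports a box that extends past the edge of the frame.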

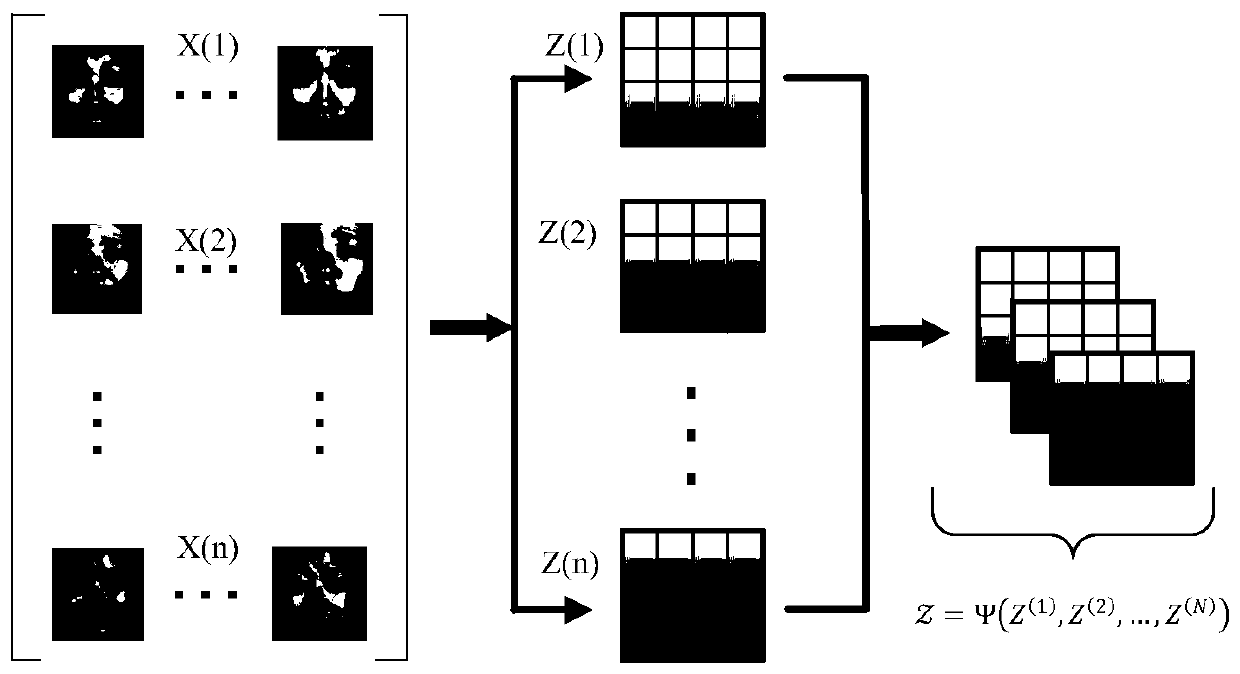

[0021] S2: Feature extraction: extract features from the facial expression images using feature operators of multiple modes;
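To make step S2 concrete, the sketch below applies two feature operators to one cropped face image. The text does not name the operators, so both are assumptions chosen for illustration: a basic 3x3 local binary pattern (LBP) histogram and a coarse grid of mean intensities. The function names and the dictionary layout are hypothetical.

```python
from typing import Dict, List

Image = List[List[int]]  # grayscale image as rows of pixel intensities

# Clockwise neighbour offsets (row, col), starting at the top-left pixel.
_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: Image, r: int, c: int) -> int:
    """8-bit LBP code of the interior pixel at (r, c)."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(_OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img: Image) -> List[float]:
    """Normalised 256-bin histogram of LBP codes over interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else [0.0] * 256


def grid_means(img: Image, cells: int = 2) -> List[float]:
    """Mean intensity per grid cell; assumes the image is at least cells x cells."""
    rows, cols = len(img), len(img[0])
    feats = []
    for i in range(cells):
        for j in range(cells):
            r0, r1 = i * rows // cells, (i + 1) * rows // cells
            c0, c1 = j * cols // cells, (j + 1) * cols // cells
            block = [img[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            feats.append(sum(block) / len(block))
    return feats


def extract_features(img: Image) -> Dict[str, List[float]]:
    """Apply every operator to the same image, one feature vector per operator."""
    return {"lbp": lbp_histogram(img), "grid": grid_means(img)}
```

Keeping one feature vector per operator, rather than concatenating them, preserves the per-operator structure that a later tensor model could index along its own axis.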

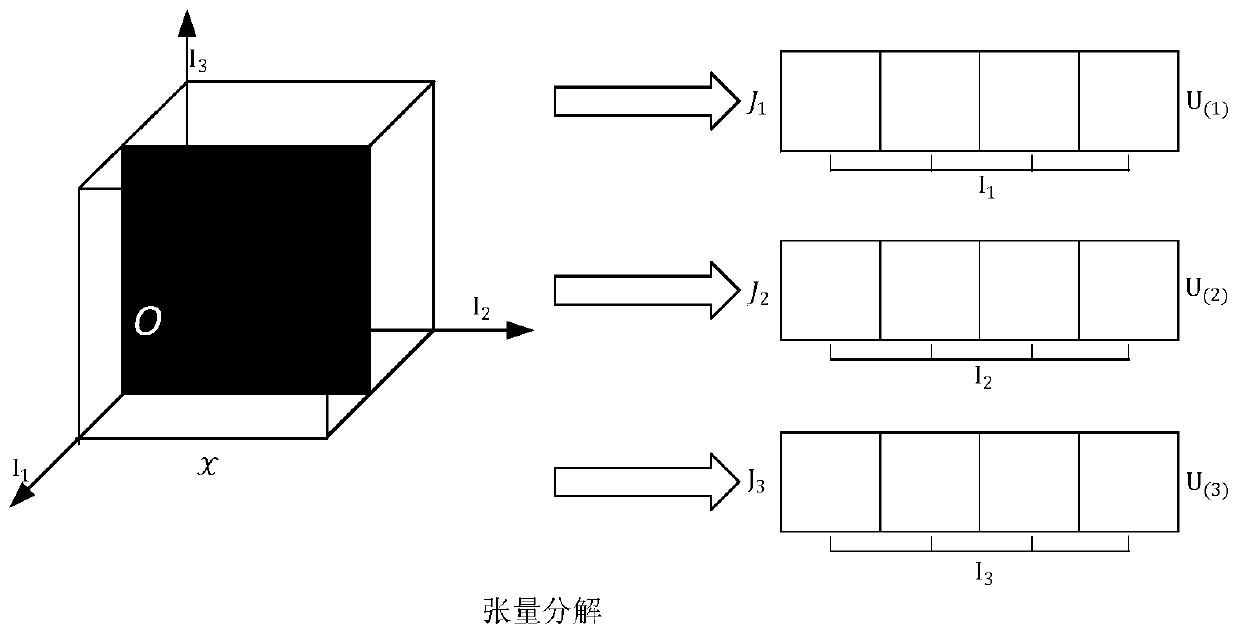

[0022] S3: Tensor modeling: according to the operator features extracted from the face region, construct a tensor model based on...
