Model training method, apparatus, electronic device, and storage medium

A model training technique, applied in the fields of computing and model training, that addresses problems such as high cost and poor timeliness


Examples

Embodiment 1

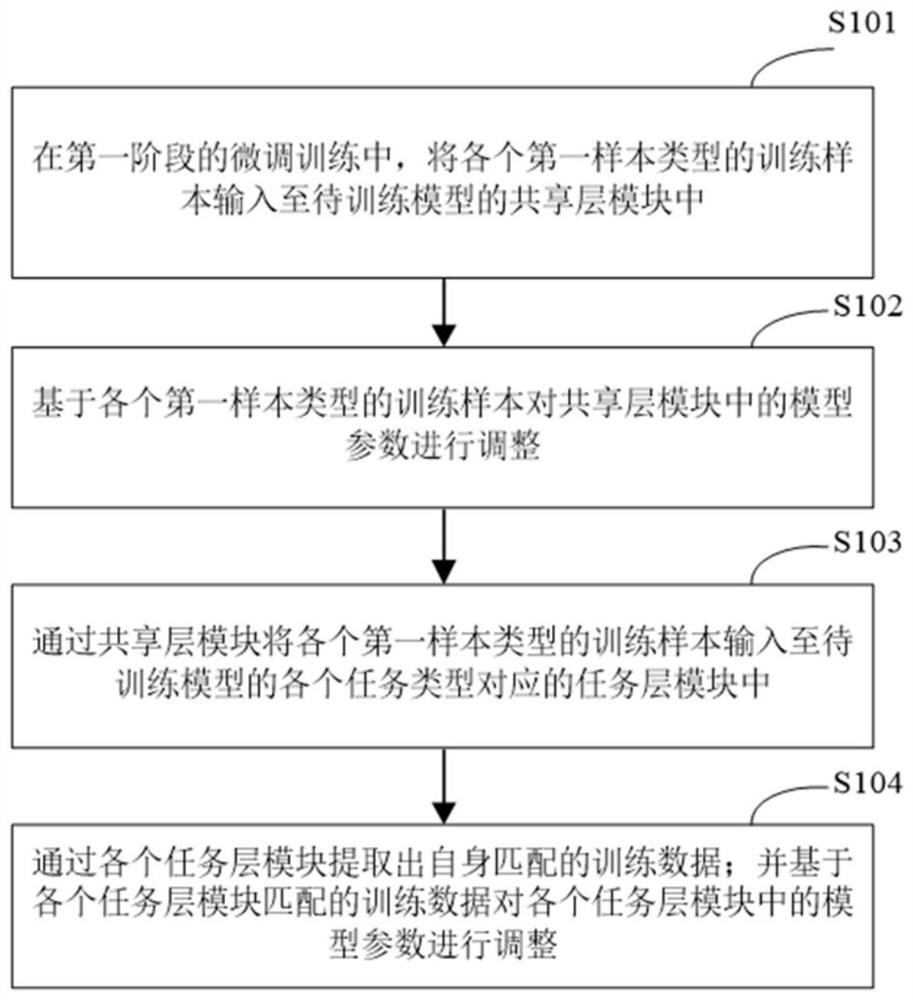

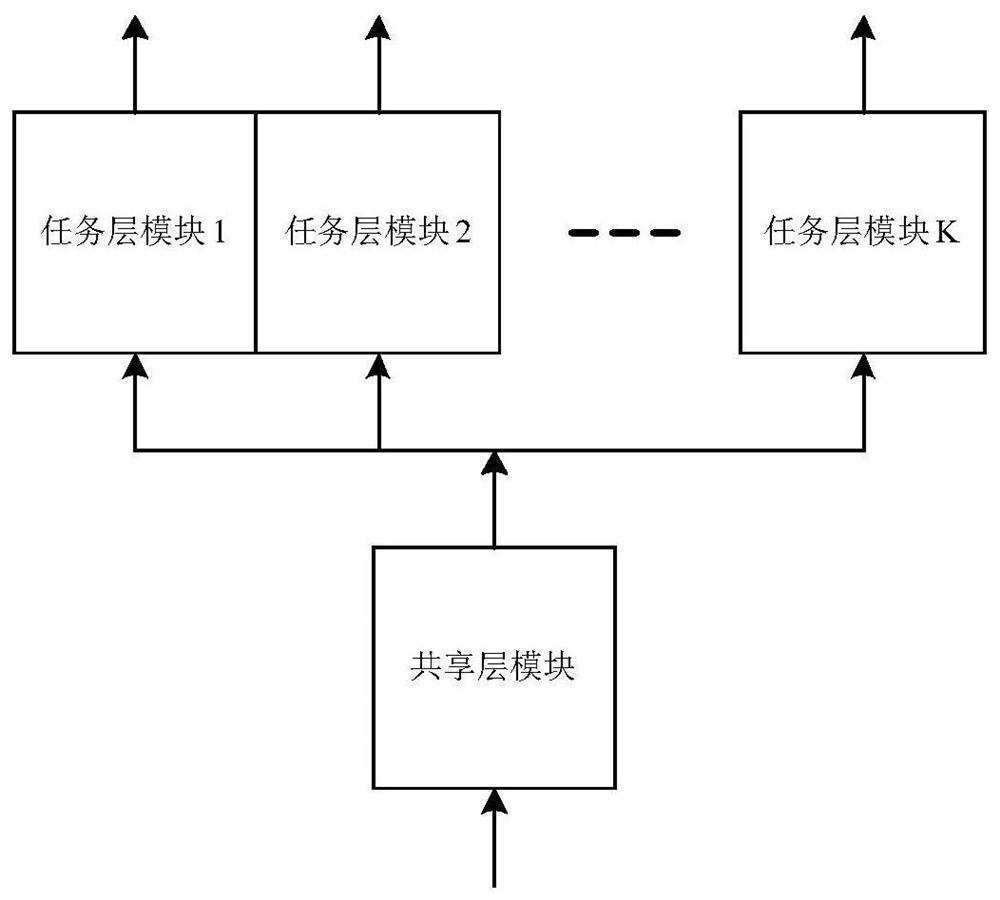

[0046] Figure 1 is a schematic flowchart of the model training method provided in the first embodiment of the present application. The method can be executed by a model training apparatus or an electronic device, either of which can be implemented in software and/or hardware and can be integrated into any smart device with a network communication function. As shown in Figure 1, the model training method may include the following steps:

[0047] S101. In the first stage of fine-tuning training, input training samples of each first sample type into the shared layer module of the model to be trained.

[0048] In this embodiment of the present application, the training process of the speech synthesis front-end model based on a pre-trained language model may include only first-stage fine-tuning training, or may include both first-stage and second-stage fine-tuning training; it can also include more stages of f...
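The arrangement named in S101, a shared layer module that feeds task-specific layers, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the class names `SharedLayer`, `TaskLayer`, and `MultiTaskModel`, the task names `task_a` and `task_b`, and the toy arithmetic that stands in for a real pre-trained language model are not taken from the application.

```python
from dataclasses import dataclass
from typing import Dict, List

Vector = List[float]


@dataclass
class SharedLayer:
    """Hypothetical stand-in for the shared layer module."""
    weights: Vector

    def forward(self, features: Vector) -> Vector:
        # Element-wise scaling is a toy substitute for a real encoder.
        return [w * x for w, x in zip(self.weights, features)]


@dataclass
class TaskLayer:
    """Hypothetical stand-in for one task layer module."""
    weights: Vector

    def forward(self, hidden: Vector) -> float:
        # A dot product plays the role of a task-specific output head.
        return sum(w * h for w, h in zip(self.weights, hidden))


@dataclass
class MultiTaskModel:
    """Routes every sample through the shared layer, then one task layer."""
    shared: SharedLayer
    task_layers: Dict[str, TaskLayer]

    def forward(self, task_type: str, features: Vector) -> float:
        hidden = self.shared.forward(features)
        return self.task_layers[task_type].forward(hidden)
```

Because every task reads the same hidden representation, any adjustment to the shared layer's parameters affects all task outputs at once, which is the property that makes a shared module worth training on several sample types.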

Embodiment 2

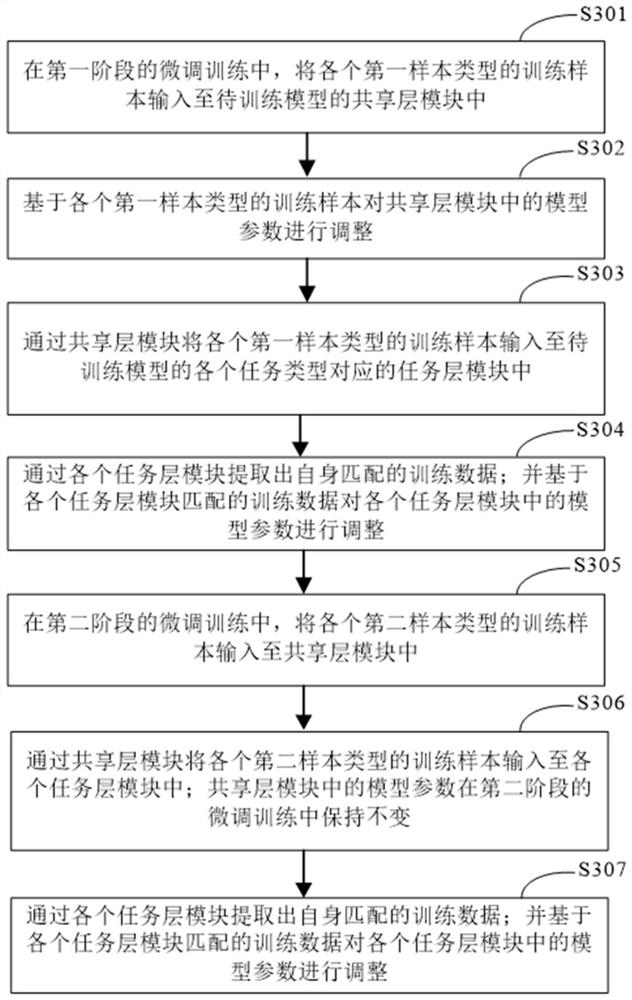

[0059] Figure 3 is a schematic flowchart of a training method for a speech synthesis front-end model based on a pre-trained language model provided in the second embodiment of the present application. As shown in Figure 3, the training method of the speech synthesis front-end model based on the pre-trained language model may include the following steps:

[0060] S301: In the first stage of fine-tuning training, input training samples of each first sample type into the shared layer module of the model to be trained.

[0061] In this embodiment of the present application, the training process of the speech synthesis front-end model based on a pre-trained language model may include only first-stage fine-tuning training, or may include both first-stage and second-stage fine-tuning training; it can also include more stages of fine-tuning training. In the first stage of fine-tuning training, the electronic device can input each training sample o...
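A staged fine-tuning schedule of this kind might be organized as in the sketch below. It is an illustration under stated assumptions, not the procedure of the application: the single scalar parameter `w`, the squared-error loss, the plain gradient-descent update, and the names `sgd_step` and `run_stages` are all hypothetical, and the source text is truncated before it says which samples later stages use.

```python
from typing import Dict, List, Tuple

Sample = Tuple[float, float]          # (input x, target y)
Stage = Dict[str, List[Sample]]       # sample type -> training samples


def sgd_step(w: float, sample: Sample, lr: float) -> float:
    """One gradient-descent step on the squared error (w * x - y) ** 2."""
    x, y = sample
    grad = 2.0 * (w * x - y) * x
    return w - lr * grad


def run_stages(w: float, stages: List[Stage],
               lr: float = 0.1, epochs: int = 50) -> float:
    """Run the stages in order; each stage starts from the previous result."""
    for stage in stages:
        for _ in range(epochs):
            # Within a stage, samples of every sample type update the
            # same (shared) parameter.
            for samples in stage.values():
                for sample in samples:
                    w = sgd_step(w, sample, lr)
    return w
```

Passing a one-element `stages` list corresponds to training with only a first stage; adding more elements models the multi-stage variants, with the parameter carried over from one stage to the next.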

Embodiment 3

[0078] Figure 6 is a schematic structural diagram of a training device for a speech synthesis front-end model based on a pre-trained language model provided in the third embodiment of the present application. As shown in Figure 6, the device 600 includes: a first input module 601, a first training module 602, a second input module 603, and a second training module 604; wherein:

[0079] The first input module 601 is used to input training samples of each first sample type into the shared layer module of the model to be trained in the first stage of fine-tuning training;

[0080] The first training module 602 is configured to adjust the model parameters in the shared layer module based on the training samples of each first sample type;

[0081] The second input module 603 is configured to input the training samples of each first sample type into the task layer module corresponding to each task type of the model to be trained through the shared layer module;

[0082] The second...
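The module layout of device 600 could be wired together as in the sketch below. It assumes that the modules run in the order of their reference numerals, which the text does not state explicitly, and the class name `TrainingDevice`, the method name `run_first_stage`, and the callable signatures are hypothetical. Because the description of the second training module 604 is cut off in the source, it is left as an optional hook with no assumed behavior.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Samples = Dict[str, List[float]]      # sample type -> training samples


@dataclass
class TrainingDevice:
    """Hypothetical wiring of modules 601 to 604 of device 600."""
    first_input: Callable[[Samples], Samples]       # 601: feed the shared layer
    first_training: Callable[[Samples], None]       # 602: adjust shared-layer parameters
    second_input: Callable[[Samples], Samples]      # 603: route on to the task layers
    # 604: the source is truncated here, so no behavior is assumed.
    second_training: Optional[Callable[[Samples], None]] = None

    def run_first_stage(self, samples: Samples) -> Samples:
        shared_in = self.first_input(samples)
        self.first_training(shared_in)
        task_in = self.second_input(shared_in)
        if self.second_training is not None:
            self.second_training(task_in)
        return task_in
```

Keeping each module as an injected callable mirrors the statement that the device can be implemented in software and/or hardware: the wiring stays the same whichever implementation sits behind each module.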
