Audio data generation method, device, system, and controller

An audio data generation and controller technology, applied in fields such as television system components and pulse-modulation television signal transmission, which addresses the problems of an increased number of calculations and low efficiency.

Embodiment Construction

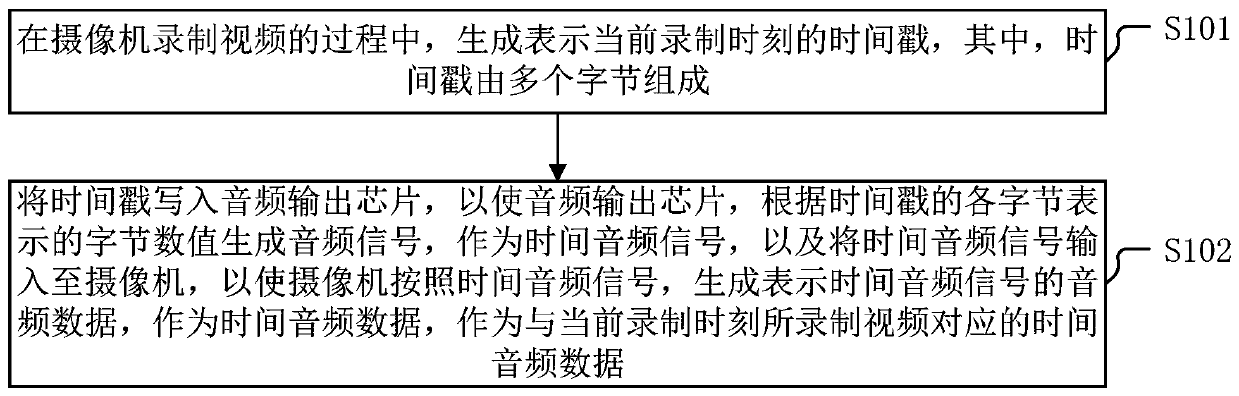

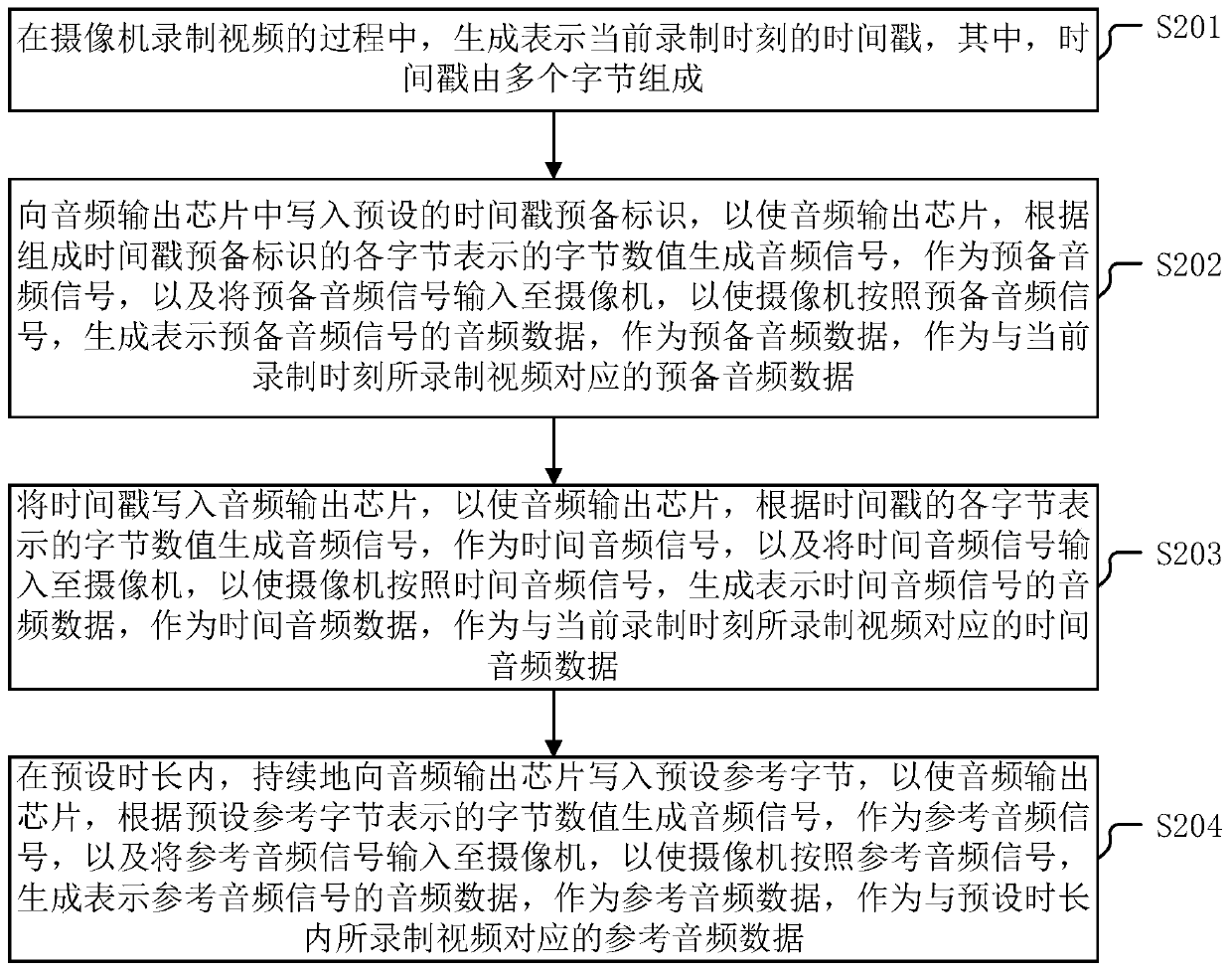

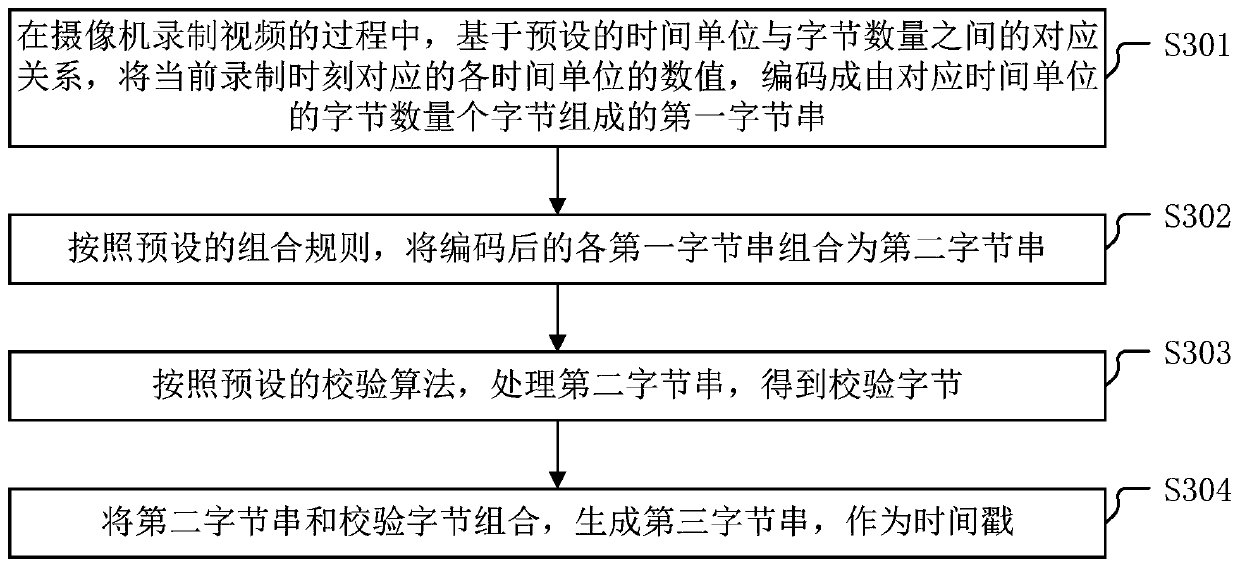

[0090] To improve the efficiency of synchronizing multiple videos, embodiments of the present invention provide an audio data generation method, device, system, and controller. The embodiments of the present invention are described below with reference to the accompanying drawings. Where no conflict arises, the embodiments in the present application and the features in those embodiments can be combined with each other.

[0091] An embodiment of the present invention provides a method for generating audio data, which can be applied to a controller in a camera auxiliary device, wherein the camera auxiliary device further includes an audio output chip.

[0092] For ease of understanding, the audio output chip in the embodiment of the present invention is briefly introduced first:

[0093] In the embodiment of the present invention, the audio output chip is a chip capable of outputting a PCM (Pulse Code Modulation, Pulse Code...
