Text data representation learning using random document embedding

A machine learning technique, applicable to machine learning, neural learning methods, and electrical digital data processing, that addresses problems such as feature embedding and the high computational cost of Word Mover's Distance (WMD) calculations.

Embodiment Construction

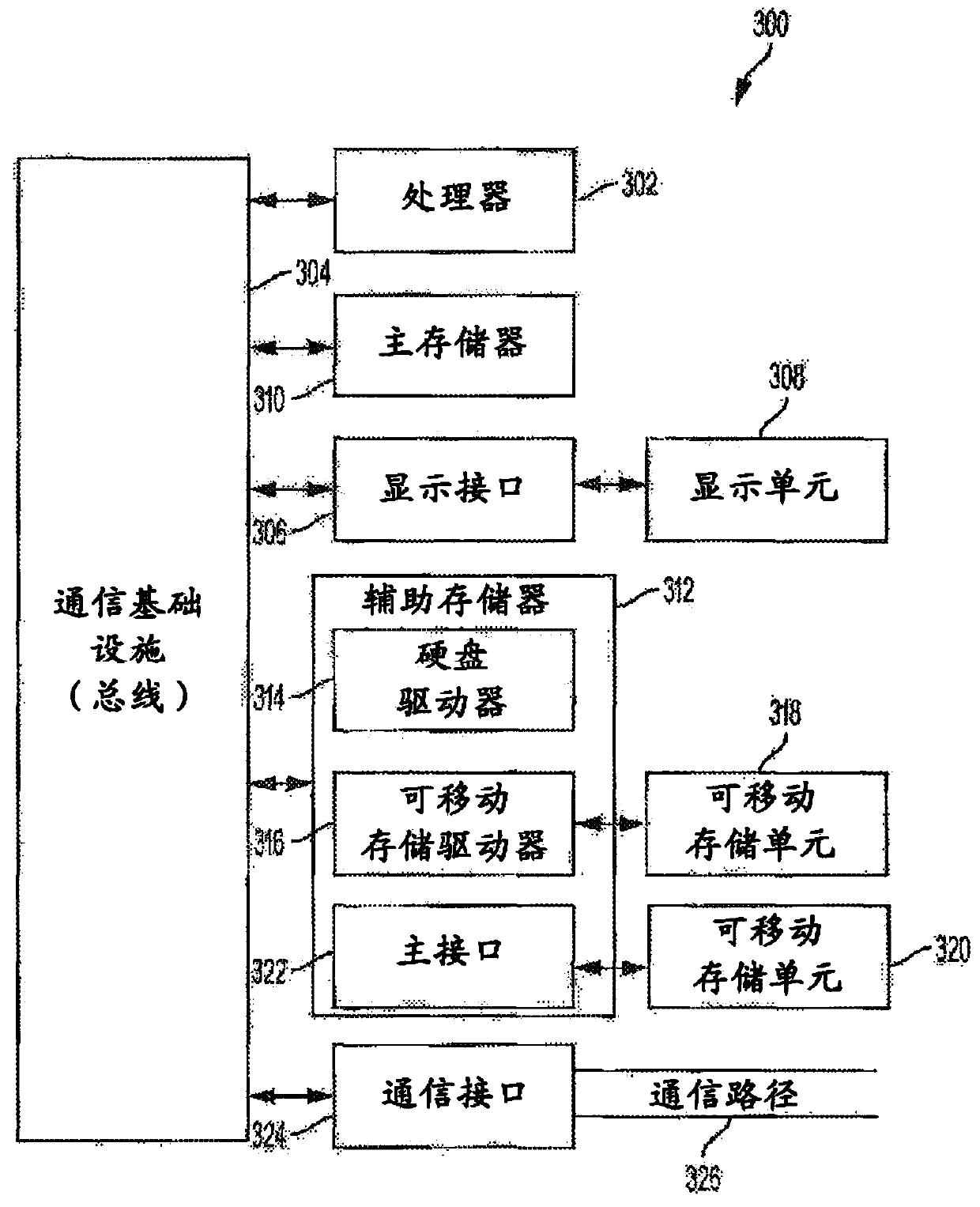

[0027] Various embodiments of the invention are described herein with reference to the associated drawings. Alternative embodiments of the invention may be devised without departing from the scope of the invention. In the following description and drawings, various connections and positional relationships (e.g., above, below, adjacent, etc.) are set forth between elements. Unless stated otherwise, such connections and/or positional relationships may be direct or indirect, and the invention is not intended to be limited in this respect. Accordingly, a coupling of entities may refer to a direct or indirect coupling, and a positional relationship between entities may be a direct or indirect positional relationship. Furthermore, the various tasks and process steps described herein may be incorporated into a more comprehensive procedure or process having additional steps or functionality not described in detail herein.

[0028] The following definitions and abbreviations are used t...
