Environment semantic perception method based on visual information

A visual-information and semantic technology, applied in image data processing, instrumentation, computing, etc. It addresses problems such as the difficulty of distinguishing between two similar-looking terrains, and it improves high-level semantic understanding while providing reliable information input.

Inactive Publication Date: 2020-04-28

HARBIN INST OF TECH



AI Technical Summary

Problems solved by technology

[0002] A mobile platform can perceive its environment effectively through simultaneous localization and mapping (SLAM) technology. SLAM provides not only the obstacle information in the environment but also the relative relationship between the platform and the environment, which is a key step toward platform autonomy. However, with the continuous development of platforms and detection payloads, more mission scenarios and requirements have been proposed, and recognizing only the appearance and geometric features of targets can no longer solve practical problems.

When patrolling an extraterrestrial celestial body, traditional recognition methods applied to two pieces of terrain with a similar appearance can produce a three-dimensional reconstruction of the environment, but they can hardly distinguish one terrain from the other. For earlier exploration missions, it was sufficient to identify whether there were obstacles ahead and whether the platform could pass. However, as exploration time and scale increase, this level of cognition is far from enough. A semantic understanding of the environment is needed: the system must not only detect whether a target is present but also analyze what it is, which will be the core of making the patroller intelligent.

This problem is equally important in research on unmanned driving. While driving, a vehicle encounters random targets such as other vehicles, pedestrians, and roadblocks. Judging what an obstacle actually is highlights the importance of semantic understanding: once the system is given the result that one object is a person and the other is a haystack, it is easy to make a correct decision.



Specific embodiment

[0098] (1) Verify parameter settings


Abstract

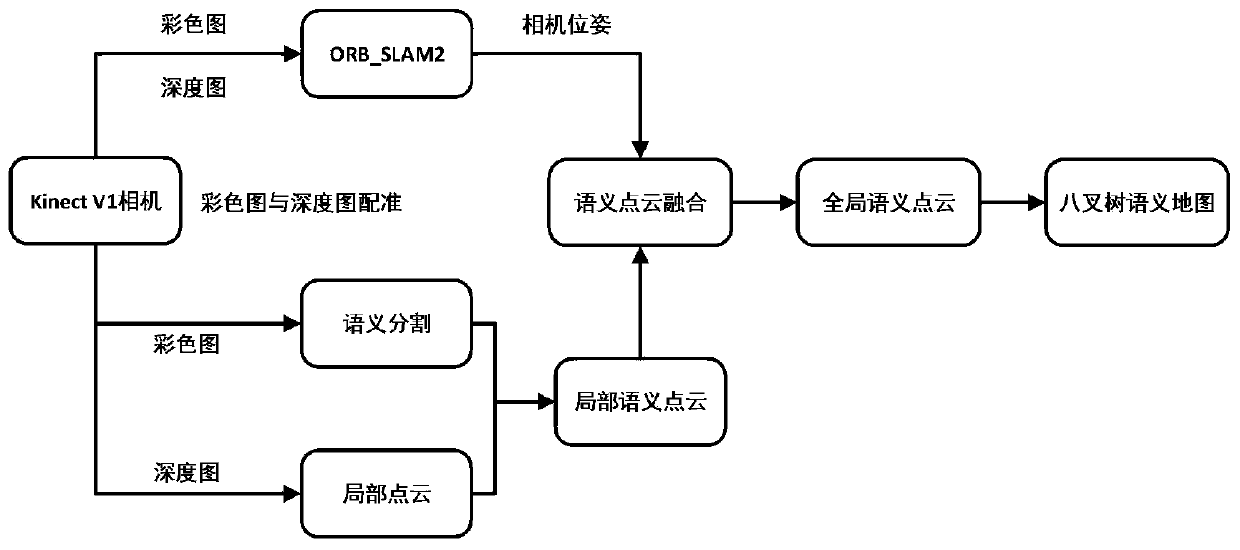

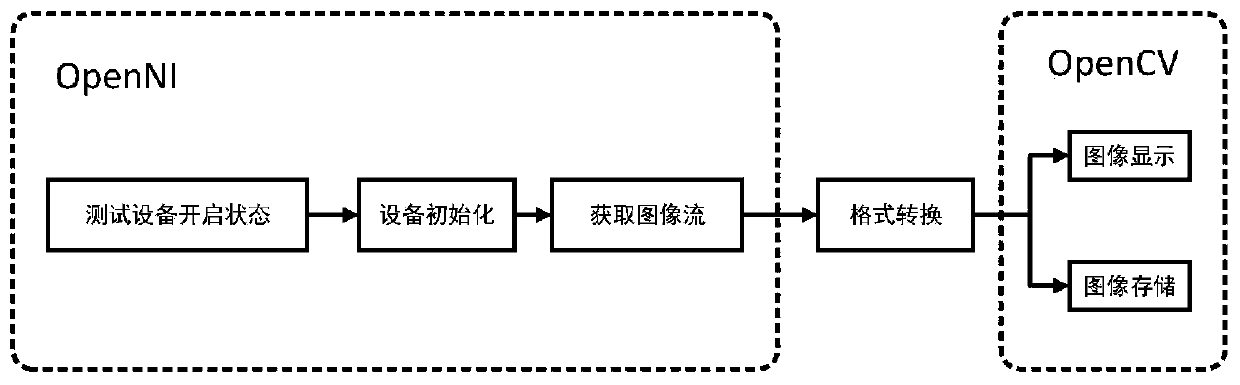

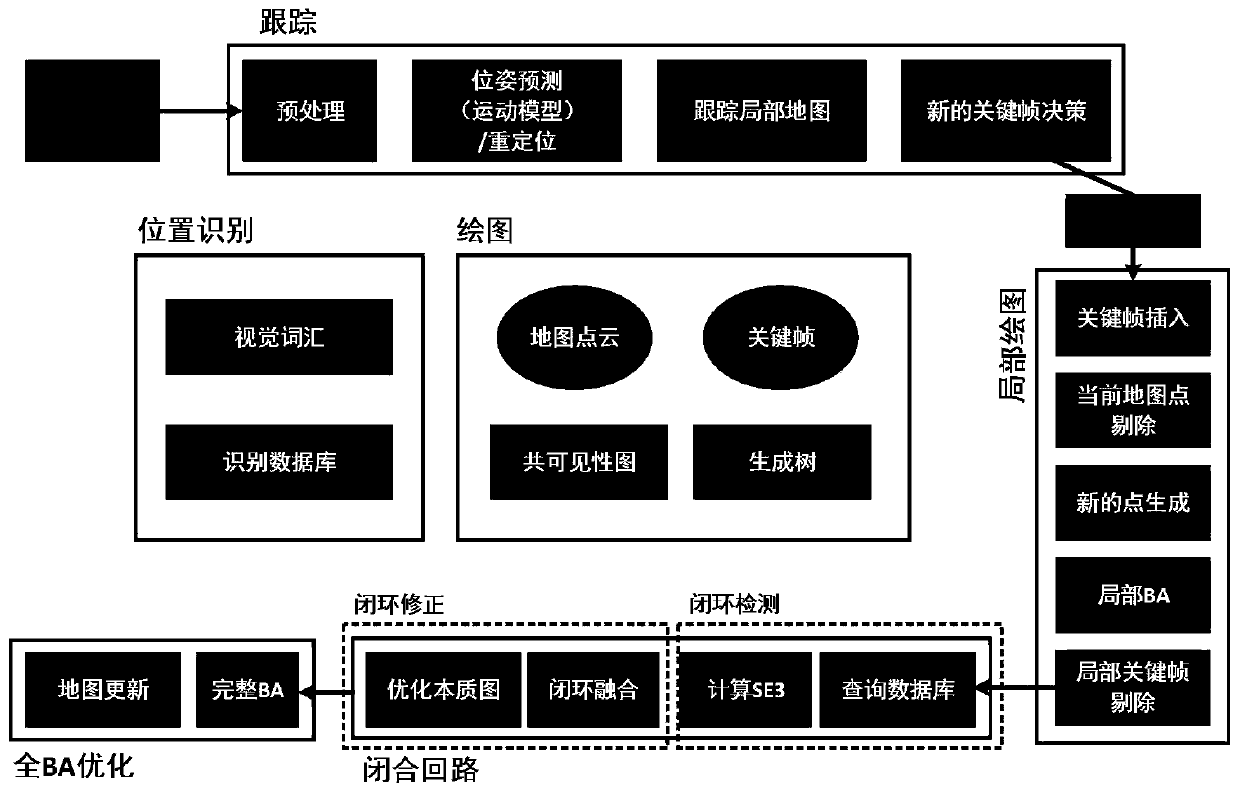

The invention provides an environment semantic perception method based on visual information, which comprises the following steps: acquiring environmental image information with a Kinect V1.0 camera to obtain a registered color image and depth image; based on the registered color image and depth image, solving the three-dimensional pose of the camera from the ORB feature points extracted from each frame through an ORB_SLAM2 process to obtain camera pose information; performing semantic segmentation on each image frame to generate semantic color information; synchronously generating a point cloud from the input depth map and the intrinsic matrix of the camera; registering the semantic color information into the point cloud to obtain a local semantic point cloud result; fusing the camera pose information with the local semantic point cloud result to obtain new global semantic point cloud information; and representing the fused global semantic point cloud information with an octree map to obtain a final three-dimensional octree semantic map. The invention provides a deeper, human-like understanding of the environment for extraterrestrial celestial body patrollers.
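The point cloud steps in the abstract can be illustrated with a minimal NumPy sketch. It is not the patented implementation: the function names are hypothetical, depth is assumed to be in metres with 0 marking invalid pixels, the pose is assumed to be a 4x4 camera-to-world matrix, and a plain dictionary of voxels stands in for the octree map (the newest observation simply overwrites the stored color).

```python
import numpy as np

def depth_to_points(depth_m, K):
    """Back-project a depth map (metres) into camera-frame 3D points
    using the pinhole model and the 3x3 intrinsic matrix K."""
    h, w = depth_m.shape
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth_m > 0                      # assumption: 0 means "no depth"
    z = depth_m[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1), valid

def local_semantic_cloud(depth_m, semantic_rgb, K):
    """Attach the per-pixel semantic color to each back-projected point."""
    points, valid = depth_to_points(depth_m, K)
    return points, semantic_rgb[valid]

def to_world(points_cam, T_wc):
    """Move camera-frame points into the world frame with a 4x4 pose."""
    return points_cam @ T_wc[:3, :3].T + T_wc[:3, 3]

def fuse_voxels(voxel_colors, points_world, colors, voxel_size=0.05):
    """Quantize points to voxel indices and store the semantic color.
    A dict is used here as a simple stand-in for octree leaf nodes."""
    keys = np.floor(points_world / voxel_size).astype(np.int64)
    for key, color in zip(map(tuple, keys.tolist()), colors.tolist()):
        voxel_colors[key] = tuple(color)     # last observation wins
    return voxel_colors

# Tiny synthetic frame: 2x2 depth image with one invalid pixel.
K = np.array([[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]])
depth = np.array([[2.0, 0.0], [2.0, 2.0]])
semantic = np.zeros((2, 2, 3), dtype=np.uint8)
semantic[1, 1] = [255, 0, 0]                 # one pixel labelled "red" class
T_wc = np.eye(4)
T_wc[0, 3] = 1.0                             # camera shifted 1 m along x

pts_cam, cols = local_semantic_cloud(depth, semantic, K)
pts_world = to_world(pts_cam, T_wc)
global_map = fuse_voxels({}, pts_world, cols, voxel_size=1.0)
```

In a full system the pose would come from the SLAM front end and the colors from the segmentation network for each frame, and `fuse_voxels` would be called repeatedly on the same map so that observations from successive frames accumulate in a common world frame.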

Description

Technical field

[0001] The invention relates to an environment semantic perception method based on visual information, and belongs to the field of artificial intelligence information technology.

Background technique

[0002] A mobile platform can perceive its environment effectively through simultaneous localization and mapping (SLAM) technology. SLAM provides not only the obstacle information in the environment but also the relative relationship between the platform and the environment, which is a key step toward platform autonomy. However, with the continuous development of platforms and detection payloads, more mission scenarios and requirements have been proposed, and recognizing only the appearance and geometric features of targets can no longer solve practical problems. In the process of patrolling extraterrestrial celestial bodies, if a piece of terrain with a similar appearance can only rely on traditional recognition methods, the three-di...


Application Information

Patent Type & Authority: Applications (China)

IPC(8): G06T7/11, G06T7/50, G06T7/90

CPC: G06T2207/10024, G06T2207/10028, G06T7/11, G06T7/50, G06T7/90

Inventors: 白成超, 郭继峰, 郑红星, 刘天航

Owner: HARBIN INST OF TECH
