Auxiliary semantic recognition system based on gesture recognition

A semantic recognition and gesture recognition technology, applied in the field of human-computer interaction, that addresses problems such as insufficient time for thinking and narration errors.

Examples

Embodiment 1

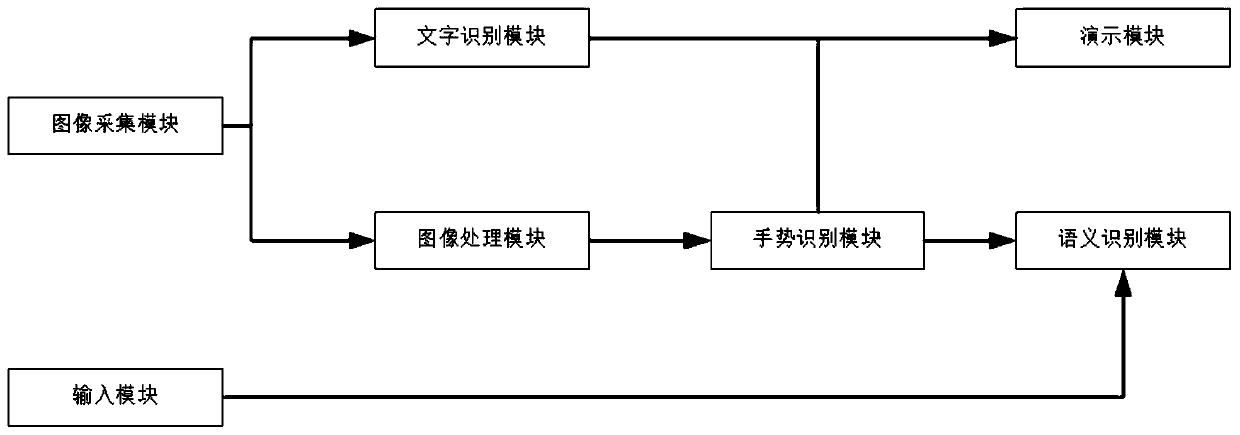

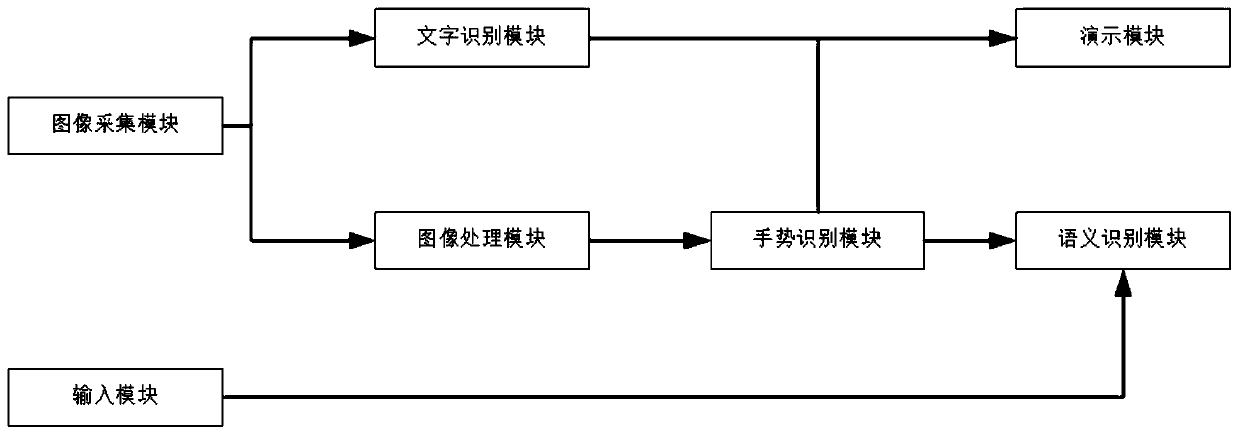

[0037] As shown in Figure 1, the auxiliary semantic recognition system based on gesture recognition of this embodiment includes an input module, an image acquisition module, an image processing module, a text recognition module, a gesture recognition module, a semantic recognition module, and a demonstration module.

[0038] The input module is used for collecting voice information and converting the voice information into the first text.

[0039] The image acquisition module collects an image of the disability certificate. The text recognition module recognizes the text in that image and extracts personal data from the recognized text. In this embodiment, the personal data includes name, gender, age, and disability type; the disability types include hearing, speech, physical, intellectual, multiple, etc.
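The extraction step in [0039] can be illustrated with a minimal sketch. It assumes the text recognition module has already produced plain text, and that the certificate text contains hypothetical "Label: value" lines; the labels, the `PersonalData` class, and the `extract_personal_data` function are illustrative names, not part of the source.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersonalData:
    # The four fields named in this embodiment.
    name: Optional[str]
    gender: Optional[str]
    age: Optional[int]
    disability_type: Optional[str]


def extract_personal_data(recognized_text: str) -> PersonalData:
    """Extract personal data from already-recognized certificate text.

    Assumption: each field appears on its own line as "Label: value".
    A real certificate layout may differ.
    """
    def field(label: str) -> Optional[str]:
        # Match "Label: value" on a single line, ignoring case.
        pattern = rf"^{re.escape(label)}[ \t]*:[ \t]*(.+)$"
        match = re.search(pattern, recognized_text, re.IGNORECASE | re.MULTILINE)
        return match.group(1).strip() if match else None

    age_text = field("Age")
    disability = field("Disability type")
    return PersonalData(
        name=field("Name"),
        gender=field("Gender"),
        # Keep the age only when it is a plain non-negative integer.
        age=int(age_text) if age_text and age_text.isdigit() else None,
        disability_type=disability.lower() if disability else None,
    )
```

A field that cannot be found is returned as `None` rather than guessed, so a later module can decide how to handle an incomplete certificate.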

[0040] The demonstration module is used to play a gesture demonstration video before the image a...

Embodiment 2

[0054] This embodiment differs from Embodiment 1 in that, after the demonstration module plays a reminder that the gesture is too fast, the gesture recognition module also continues to judge whether the motion amplitude is lower than the second threshold. If it is below the second threshold,

[0055] the gesture recognition module also sends an amplitude guide instruction to the demonstration module, and the demonstration module plays the amplitude guide file according to that instruction. In this embodiment, the amplitude guide file is amplitude guide music or an amplitude guide video. Specifically, if the user is hearing-impaired, the amplitude guide video is played; if the user is not hearing-impaired, the amplitude guide music is played. The volume of the amplitude guide music is inversely proportional to the amplitude of motion, and the brightness of the amplitude guide video is inversely proportional to the amplitude of m...
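The selection rule and the inverse proportionality described in this embodiment can be sketched as follows. This is only an illustration under stated assumptions: the constant `k`, the clamping of the result to at most 1.0, and the function names are hypothetical and do not come from the source.

```python
from typing import Optional


def select_amplitude_guide(hearing_impaired: bool) -> str:
    """Hearing-impaired users get the guide video; other users get the guide music."""
    return "video" if hearing_impaired else "music"


def guide_level(amplitude: float, second_threshold: float, k: float = 0.1) -> Optional[float]:
    """Return the music volume (or video brightness) as a value in (0, 1].

    Returns None when the amplitude is not below the second threshold,
    meaning no amplitude guide is needed.
    Assumptions: level = k / amplitude, clamped to 1.0; k is a made-up constant.
    """
    if amplitude >= second_threshold:
        return None
    if amplitude <= 0:
        # No measurable motion: use the strongest cue.
        return 1.0
    return min(1.0, k / amplitude)
```

With these assumed values, a smaller motion produces a louder or brighter cue, and the cue weakens as the user's motion grows toward the second threshold.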

Embodiment 3

[0058] This embodiment differs from Embodiment 2 in that the amplitude guide music and the speed guide music are the same music; the only difference is that the volume changes when it is used as the amplitude guide music. Likewise, the amplitude guide video and the speed guide video are the same video; the only difference is that the brightness changes when it is used as the amplitude guide video. When the user's movement speed exceeds the first threshold and the movement amplitude is simultaneously below the second threshold, there is no need to play two different pieces of music or two different videos, so no conflict arises.
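One way to picture the shared guide file is a single playback decision that covers both conditions. The sketch below is an assumption-laden illustration: `GuidePlayback` and `plan_guide` are hypothetical names, and choosing video for hearing-impaired users for the speed guide as well is an assumption carried over from the amplitude guide rule.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GuidePlayback:
    media: str             # "music" or "video"; one shared file for both guides
    vary_intensity: bool   # True when the file also acts as the amplitude guide


def plan_guide(
    speed: float,
    amplitude: float,
    first_threshold: float,
    second_threshold: float,
    hearing_impaired: bool,
) -> Optional[GuidePlayback]:
    """Decide what to play; returns None when no guidance is needed."""
    too_fast = speed > first_threshold
    too_small = amplitude < second_threshold
    if not (too_fast or too_small):
        return None
    media = "video" if hearing_impaired else "music"
    # Volume or brightness changes only when the amplitude is too small,
    # so both conditions can be signalled by one file without conflict.
    return GuidePlayback(media=media, vary_intensity=too_small)
```

Because only one file is ever scheduled, the case where both thresholds are violated at once reduces to the same file played with varying volume or brightness.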

