Deep learning memory management method and system based on tensor access

A memory management technique for deep learning, applied in the field of deep learning memory management methods and systems. It addresses problems such as insufficient memory and achieves effective memory management.


AI Technical Summary

Problems solved by technology

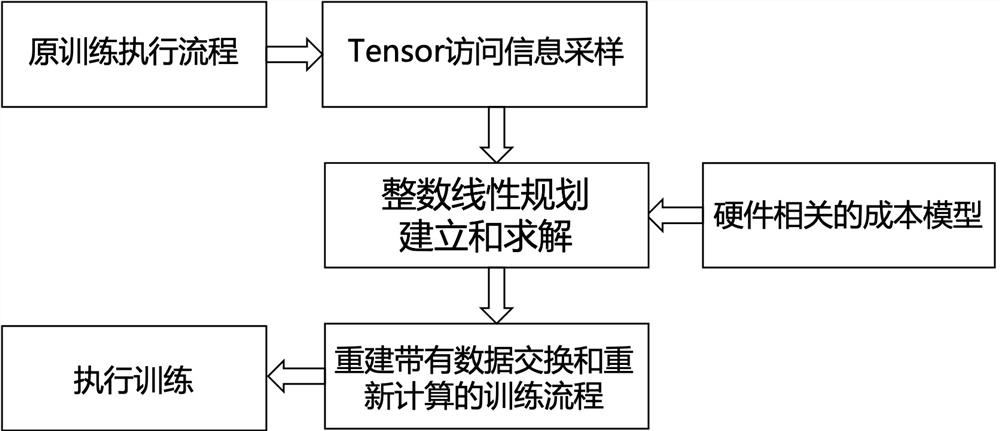

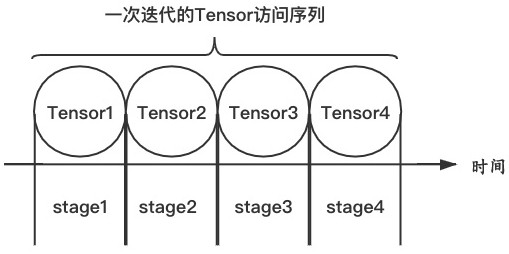


Embodiment

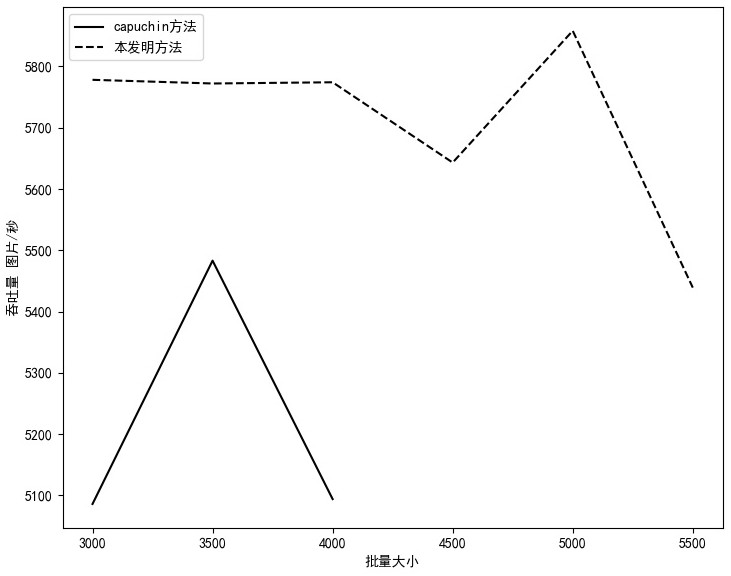

[0084] The test machine is configured with one Tesla V100 GPU with 32 GB of video memory. The CPU is an Intel® Xeon® Gold 6126 CPU @ 2.60GHz, the operating system is Ubuntu 18.04.3 LTS, the CUDA Toolkit version is 9.0, and the PyTorch version is 1.5. The existing Capuchin deep learning memory management method and the method of the present invention are compared on this machine.

[0085] Both optimization methods are used to train the VGG16 network, and the results are shown in image 3. In terms of training speed, the method of the present invention is faster than Capuchin at every batch size tested. In terms of memory footprint optimization, the maximum batch size supported by the method of the present invention is 5500, while the maximum batch size supported by Capuchin is only 4000.
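To put the batch size figures from paragraph [0085] in relative terms, the short calculation below may help. It is only an illustrative sketch: the two constants are the values reported above, while the helper name `relative_gain` is a hypothetical name introduced here and is not part of the patent.

```python
# Maximum batch sizes reported in paragraph [0085] for VGG16 training.
MAX_BATCH_PROPOSED = 5500  # method of the present invention
MAX_BATCH_CAPUCHIN = 4000  # Capuchin baseline


def relative_gain(new: int, baseline: int) -> float:
    """Return the fractional increase of `new` over `baseline`.

    Hypothetical helper used only for this illustration.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new - baseline) / baseline


gain = relative_gain(MAX_BATCH_PROPOSED, MAX_BATCH_CAPUCHIN)
print(f"Maximum batch size gain over Capuchin: {gain:.1%}")
```

Under these reported values, the supported maximum batch size is 37.5% larger than the Capuchin baseline on the same hardware.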
