Human body action recognition method based on space-time attention mechanism

A human action recognition technique based on attention, applied in the field of computer vision. It addresses the problem that existing methods do not consider the relationship between local regions of interest and the global features of a human action, and it strengthens the feature representation and improves recognition efficiency.
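The summary does not say how local regions of interest are related to global features. Purely as an illustration of one common pattern (soft spatial attention pooling), the sketch below scores each spatial location of a feature map against a query vector, pools the locations with softmax weights to obtain an attended local feature, and concatenates it with a global average-pooled feature. The function names, the dot-product scoring and the query vector are all assumptions for illustration, not the patented design.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a 1-D list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_local_global(features: Sequence[Sequence[float]], query: Sequence[float]) -> Vector:
    """Hypothetical fusion of an attended local feature with a global feature.

    features: one C-dimensional vector per spatial location (H*W vectors).
    query:    a C-dimensional scoring vector (hypothetical; would be learned).
    Returns [attended_local ; global_average], a vector of length 2*C.
    """
    channels = len(query)
    # Score each location by its dot product with the query vector.
    scores = [sum(f[c] * query[c] for c in range(channels)) for f in features]
    weights = softmax(scores)
    # Attention-weighted sum emphasises the locations of interest.
    local = [sum(w * f[c] for w, f in zip(weights, features)) for c in range(channels)]
    # Global average pooling keeps the context of the whole frame.
    n = len(features)
    global_avg = [sum(f[c] for f in features) / n for c in range(channels)]
    return local + global_avg
```

For example, with two locations `[[1.0, 0.0], [0.0, 1.0]]` and a query `[10.0, 0.0]`, almost all attention weight falls on the first location, so the local half of the output is close to `[1.0, 0.0]` while the global half stays `[0.5, 0.5]`. Concatenation keeps both views available to a downstream classifier rather than forcing one to replace the other.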

Embodiment Construction

[0057] To make the technical solution and detailed principles of the present invention clearer and more specific, the present invention is further described below with reference to the accompanying drawings and examples.

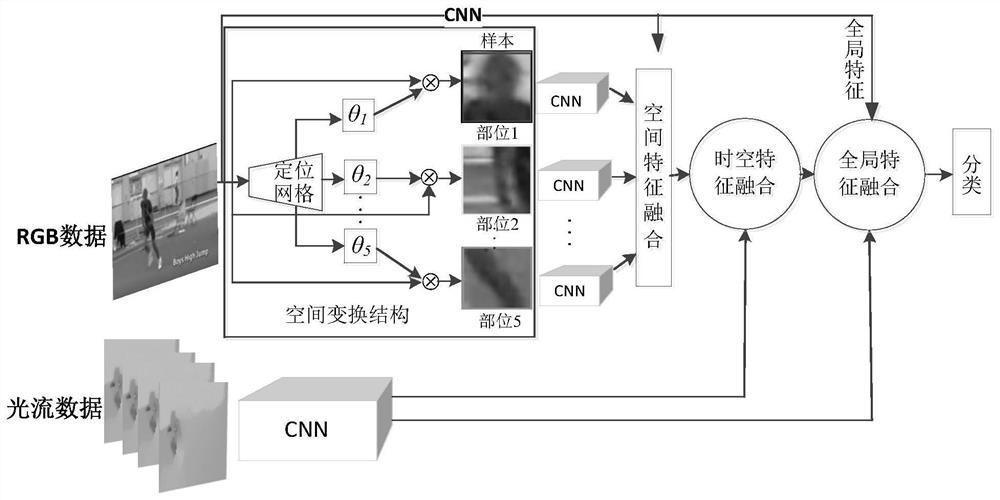

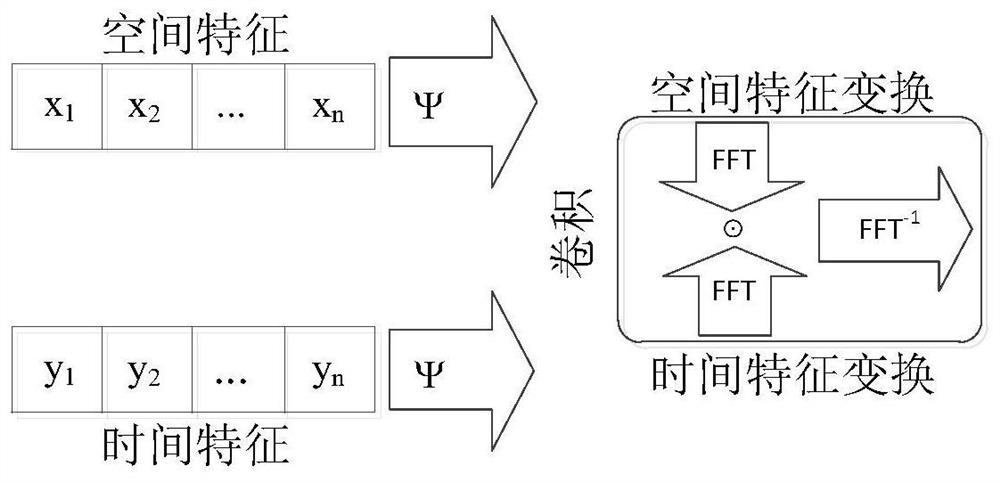

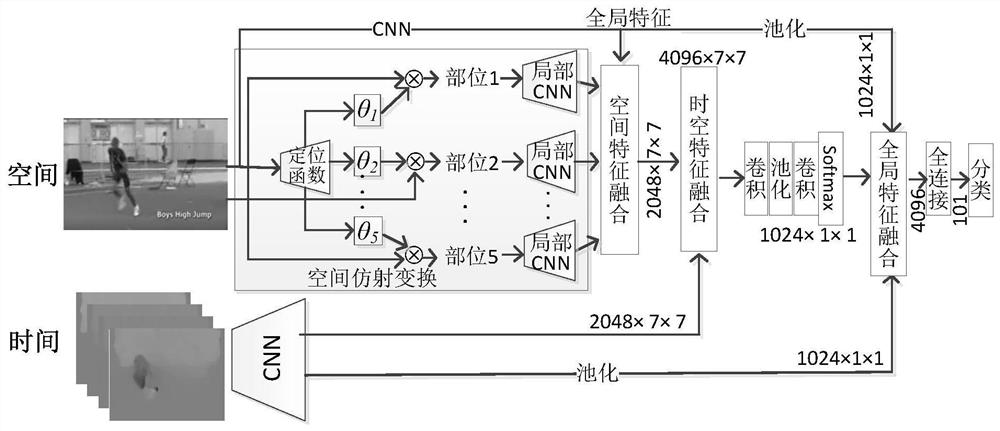

[0058] This embodiment discloses a human action recognition method based on a spatio-temporal attention mechanism. The overall flow is shown in Figure 1, and the detailed network structure is shown schematically in Figure 3. The specific steps are as follows:

[0059] 1. Divide each human action video in the dataset into 5 clips of 20 frames each, and uniformly resize all video frames to 224×224 pixels. Randomly select a single frame from each clip as the input of the spatial network. Apply the TVL1 optical flow method to the video frames to obtain optical flow maps in the horizontal and vertical directions, and store them as JPEG images as the input of the tem...
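The bookkeeping in step 1 can be sketched as follows, under stated assumptions. The text fixes 5 clips of 20 frames but not where the clips sit in a longer video, so this sketch spreads the clip start positions evenly (an assumption); for a 100-frame video the clips are simply back-to-back. Storing flow as JPEG requires 8-bit values; clipping each flow component to a bound of ±20 and scaling linearly to [0, 255] is a common convention, assumed here rather than taken from the source. The function names are hypothetical, and computing TV-L1 flow and resizing to 224×224 are left to existing libraries (OpenCV's contrib modules provide a TV-L1 implementation).

```python
import random
from typing import List, Sequence

NUM_CLIPS = 5   # clips per video (step 1)
CLIP_LEN = 20   # frames per clip (step 1)


def split_into_clips(num_frames: int, num_clips: int = NUM_CLIPS,
                     clip_len: int = CLIP_LEN) -> List[List[int]]:
    """Return frame indices of num_clips disjoint clips, each clip_len frames long.

    Assumption: clip start positions are spread evenly over the video.
    """
    if num_frames < num_clips * clip_len:
        raise ValueError(f"need at least {num_clips * clip_len} frames, got {num_frames}")
    span = num_frames - clip_len  # largest valid start index
    if num_clips == 1:
        starts = [0]
    else:
        starts = [i * span // (num_clips - 1) for i in range(num_clips)]
    return [list(range(s, s + clip_len)) for s in starts]


def sample_rgb_frames(clips: Sequence[Sequence[int]], rng: random.Random) -> List[int]:
    """Pick one random frame index per clip as the spatial-network input."""
    return [rng.choice(list(clip)) for clip in clips]


def flow_to_uint8(flow: Sequence[Sequence[float]], bound: float = 20.0) -> List[List[int]]:
    """Map one flow component (horizontal or vertical) to 8-bit values for JPEG storage.

    Assumption: values are clipped to [-bound, bound] and scaled linearly to [0, 255].
    """
    scale = 255.0 / (2.0 * bound)
    return [[int(round((max(-bound, min(bound, v)) + bound) * scale)) for v in row]
            for row in flow]
```

For instance, `split_into_clips(100)` yields the clips 0–19, 20–39, …, 80–99, and `flow_to_uint8([[-50.0, 0.0, 50.0]])` saturates the out-of-range values at the ends of the 8-bit range while zero motion maps to the mid-grey value 128. Passing a seeded `random.Random` to `sample_rgb_frames` keeps the frame choice reproducible.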
