Eulerian video color amplification method based on deep learning

A color amplification technique based on deep learning, applicable to color television, color television components, and color signal processing circuits. It addresses problems such as interference in the color amplification result, a spatial decomposition process that relies on manual design, and noise.



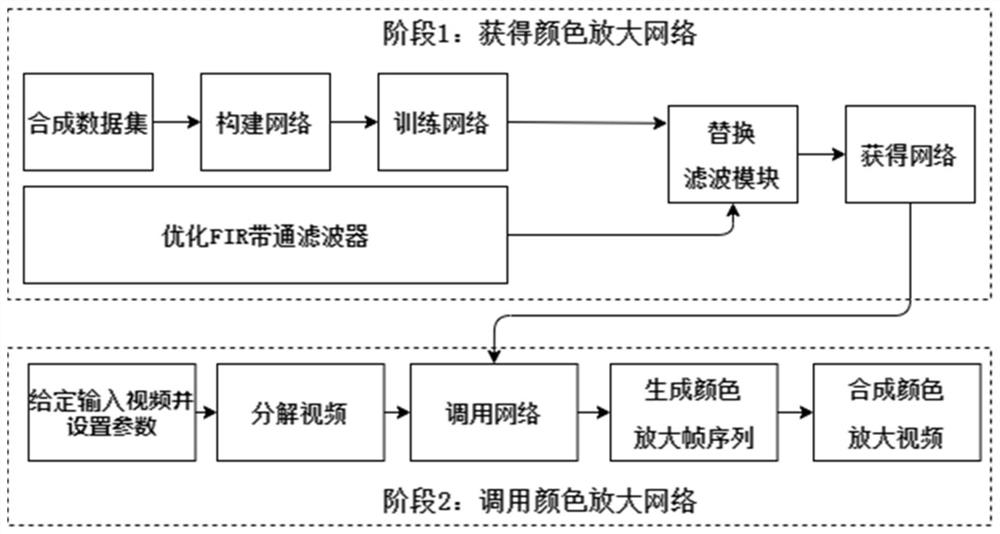

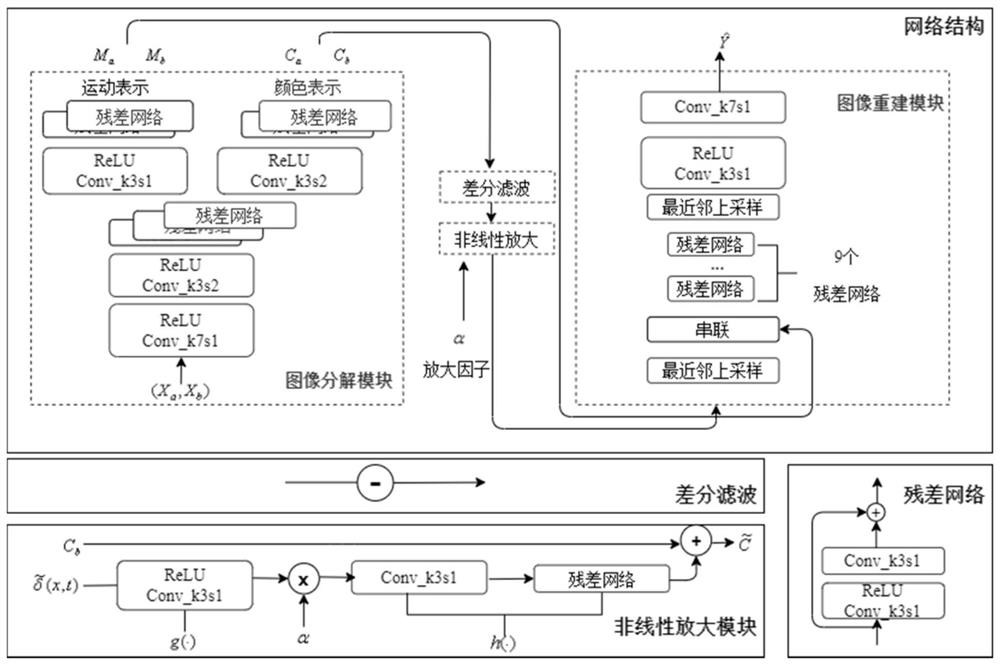

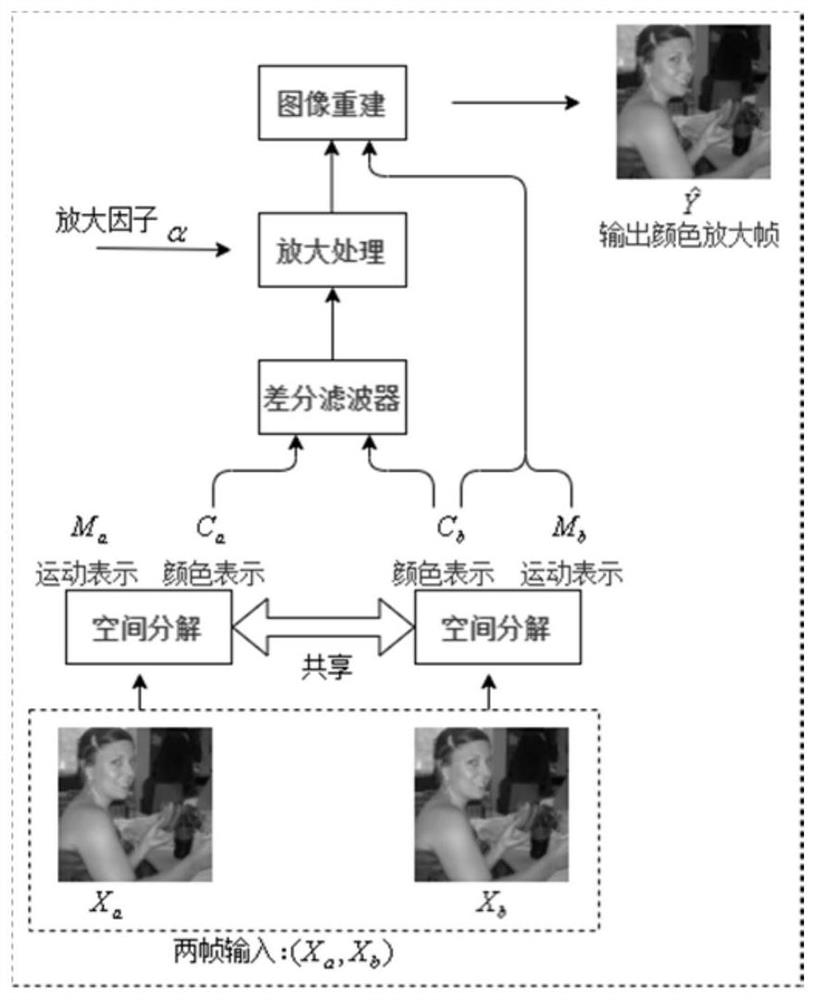


Examples

Embodiment 1

[0083] Embodiment 1. The inventor tested the effectiveness of the Eulerian video color amplification method based on deep learning in a static scene. As shown in Figure 5, the Face video, in which the head remains still, is magnified. The magnified result shows that the present invention amplifies the color change on the face caused by the blood pulse, which was originally invisible. Comparing the result of the method of the present invention with that of the linear Eulerian video color magnification method, the time-slice diagram shows that the present invention produces a more obvious magnification effect, less noise, and a clearer picture.
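The comparison above relies on a time-slice diagram. As a minimal sketch of how such a diagram is commonly built (the patent text does not give its exact procedure), one fixed pixel column is taken from every frame and the columns are stacked along the time axis. The names `Frame`, `time_slice`, and the tiny synthetic clip are hypothetical and serve only as illustration.

```python
from typing import List

Frame = List[List[float]]  # one grayscale frame: rows of pixel intensities


def time_slice(frames: List[Frame], column: int) -> List[List[float]]:
    """Stack one fixed pixel column from every frame side by side.

    The result has shape (height, num_frames): each output column is the
    chosen image column at one time step, so temporal color changes show
    up as horizontal variation in the slice image.
    """
    if not frames:
        raise ValueError("need at least one frame")
    height = len(frames[0])
    return [[frame[row][column] for frame in frames] for row in range(height)]


# Synthetic clip (assumption): 3 frames of 2x2 pixels, left column pulses.
frames = [
    [[0.50, 0.50], [0.50, 0.50]],
    [[0.52, 0.50], [0.53, 0.50]],
    [[0.50, 0.50], [0.50, 0.50]],
]
slice_img = time_slice(frames, column=0)
```

In a slice built this way, a stronger and cleaner periodic pattern along the horizontal axis corresponds to the "more obvious magnification effect" and "less noise" described for Figure 5.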

Embodiment 2

[0084] Embodiment 2. As shown in Figure 6, a dynamic scene is magnified: in the Bulb video, a hand-held light bulb moves upward. Comparing the method of the present invention with the linear Eulerian video color magnification method, the method of the present invention clearly has a stronger amplification effect. Figure 7 shows that the FIR bandpass filter optimized in step (1.4) prevents artifacts from being generated in dynamic scenes.
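To illustrate the role a temporal FIR bandpass filter plays in color magnification, the following sketch applies a generic Hamming-windowed-sinc filter to one pixel's intensity over time and adds the amplified band back to the signal, in the style of the linear baseline. This is not the optimized filter of step (1.4), whose design is not given in this excerpt. The function names, the 0.8 to 2.0 Hz passband, the 30 fps frame rate, the tap count, and the gain `alpha` are all assumptions chosen for illustration.

```python
import math
from typing import List


def fir_bandpass(num_taps: int, low_hz: float, high_hz: float, fs: float) -> List[float]:
    """Hamming-windowed-sinc band-pass taps (difference of two ideal low-passes)."""
    if num_taps % 2 == 0:
        raise ValueError("num_taps must be odd for a symmetric filter")
    mid = (num_taps - 1) // 2
    fl, fh = low_hz / fs, high_hz / fs  # cutoffs in cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - mid
        if k == 0:
            ideal = 2.0 * (fh - fl)
        else:
            ideal = (math.sin(2 * math.pi * fh * k)
                     - math.sin(2 * math.pi * fl * k)) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    return taps


def filter_same(signal: List[float], taps: List[float]) -> List[float]:
    """Centered convolution with zero padding; output length equals input length."""
    mid = (len(taps) - 1) // 2
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for n, h in enumerate(taps):
            i = t + mid - n
            if 0 <= i < len(signal):
                acc += h * signal[i]
        out.append(acc)
    return out


def magnify(signal: List[float], taps: List[float], alpha: float) -> List[float]:
    """Linear magnification: add the band-passed variation back, scaled by alpha."""
    band = filter_same(signal, taps)
    return [x + alpha * b for x, b in zip(signal, band)]


# Assumed test signal: a faint 1.2 Hz pulse on a constant skin tone, 10 s at 30 fps.
fs = 30.0
pulse = [0.5 + 0.01 * math.sin(2 * math.pi * 1.2 * t / fs) for t in range(300)]
taps = fir_bandpass(num_taps=151, low_hz=0.8, high_hz=2.0, fs=fs)
amplified = magnify(pulse, taps, alpha=20.0)
```

Because the taps are symmetric and the convolution is centered, the filtered band stays time-aligned with the input, so the amplified variation adds in phase with the original pulse. Samples within half a filter length of either end are affected by zero padding and are best ignored when inspecting the result.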
