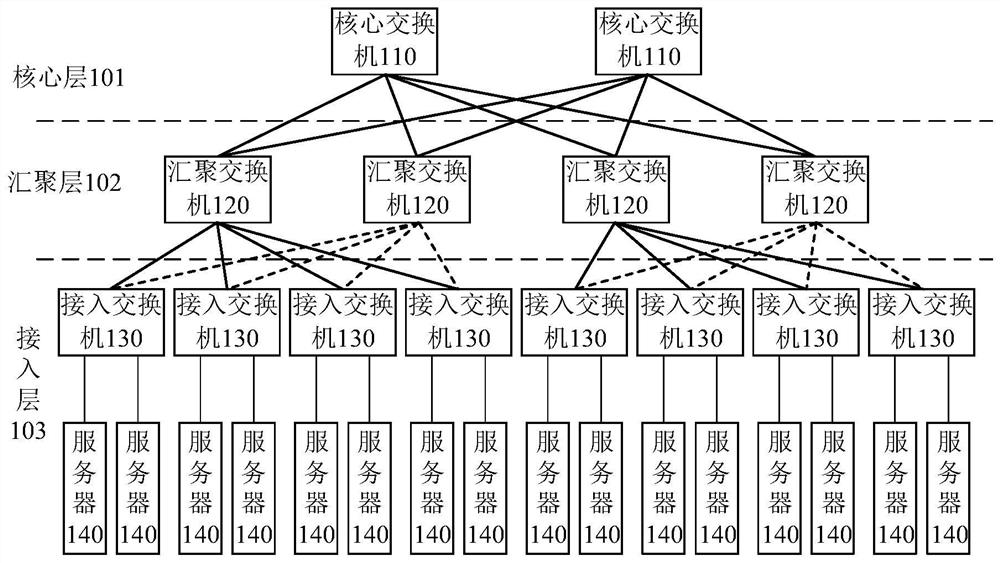

Deep learning model tuning method and computing device

A deep learning model tuning method and computing device, applied in the field of neural networks, that address problems such as loss of data accuracy and aim to ensure the optimality and accuracy of precision data.

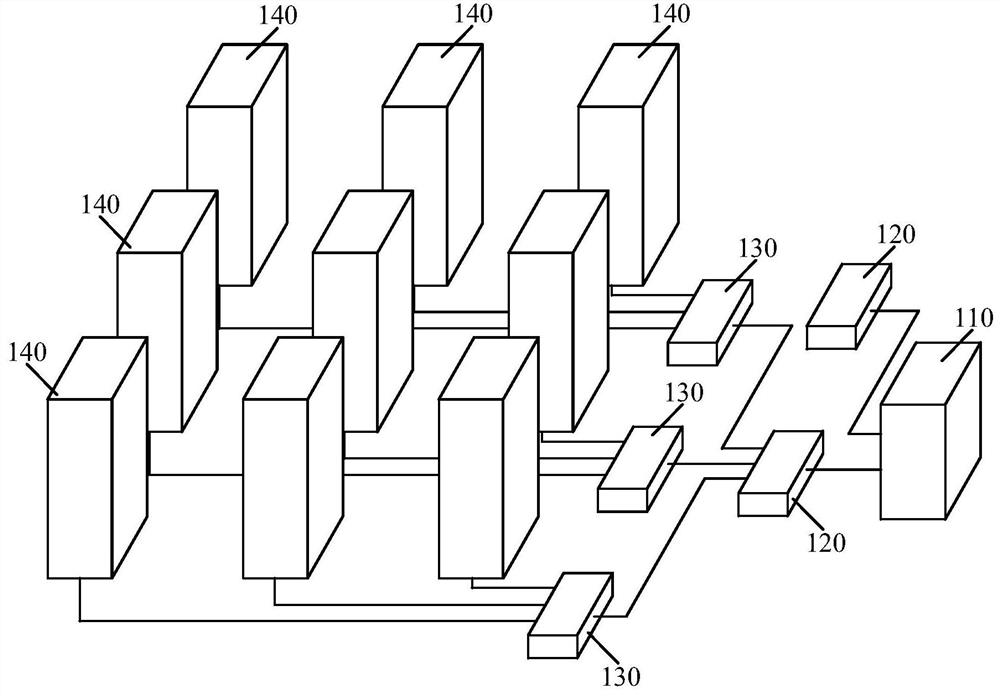

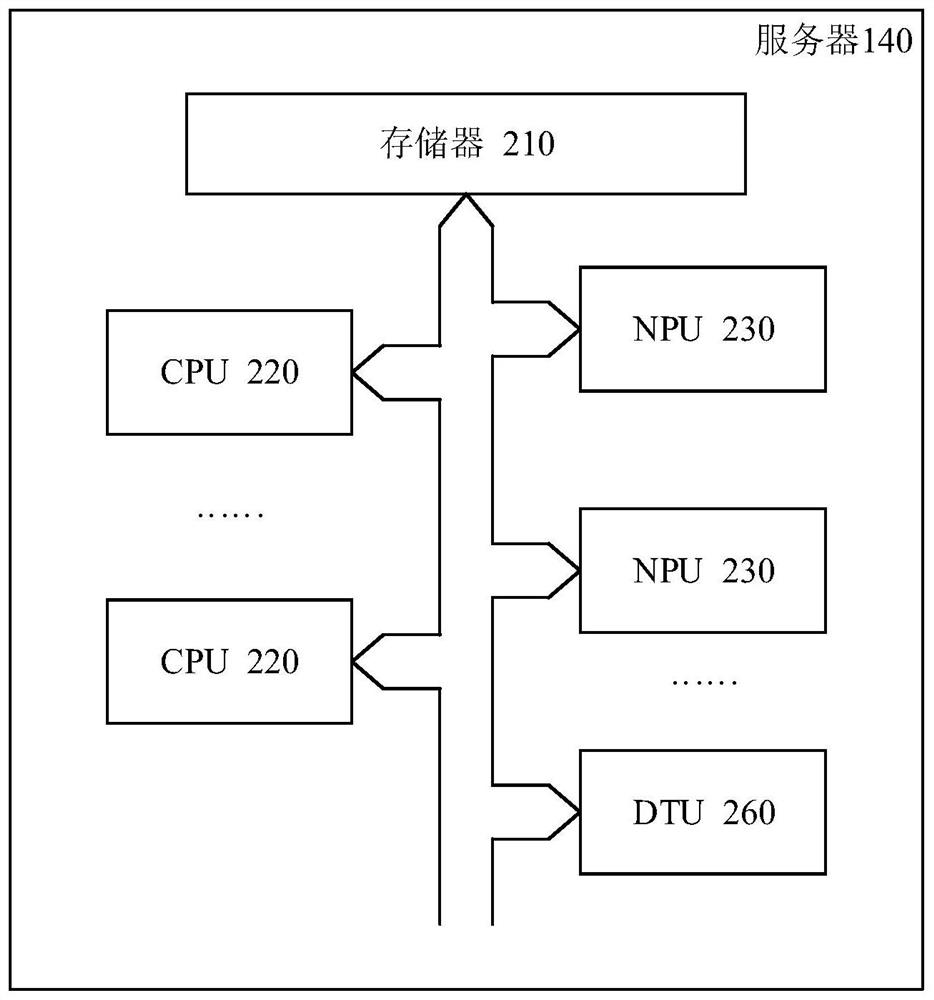

Embodiment Construction

[0052] The present disclosure is described below based on examples, but it is not limited to these examples. The following detailed description sets forth some specific details; those skilled in the art can fully understand the present disclosure without them. To avoid obscuring the essence of the present disclosure, well-known methods, procedures, and processes are not described in detail. Additionally, the drawings are not necessarily drawn to scale.

[0053] The following terms are used in this document.

[0054] Acceleration unit: also known as a neural network acceleration unit. General-purpose processors are inefficient in some special-purpose fields (for example, processing images or performing the various operations of neural networks); the acceleration unit is a processing unit designed to improve efficiency in these special-purpose fields for the data processi...
