Device for producing a shape model

A shape model generation device technology, applied in the field of visual recognition, that addresses the problems of reduced production efficiency and the considerable time consumed.


Embodiment Construction

[0020] Hereinafter, embodiments of the present invention will be described in detail with reference to the drawings. In the drawings, common reference signs are attached to the same or similar components.

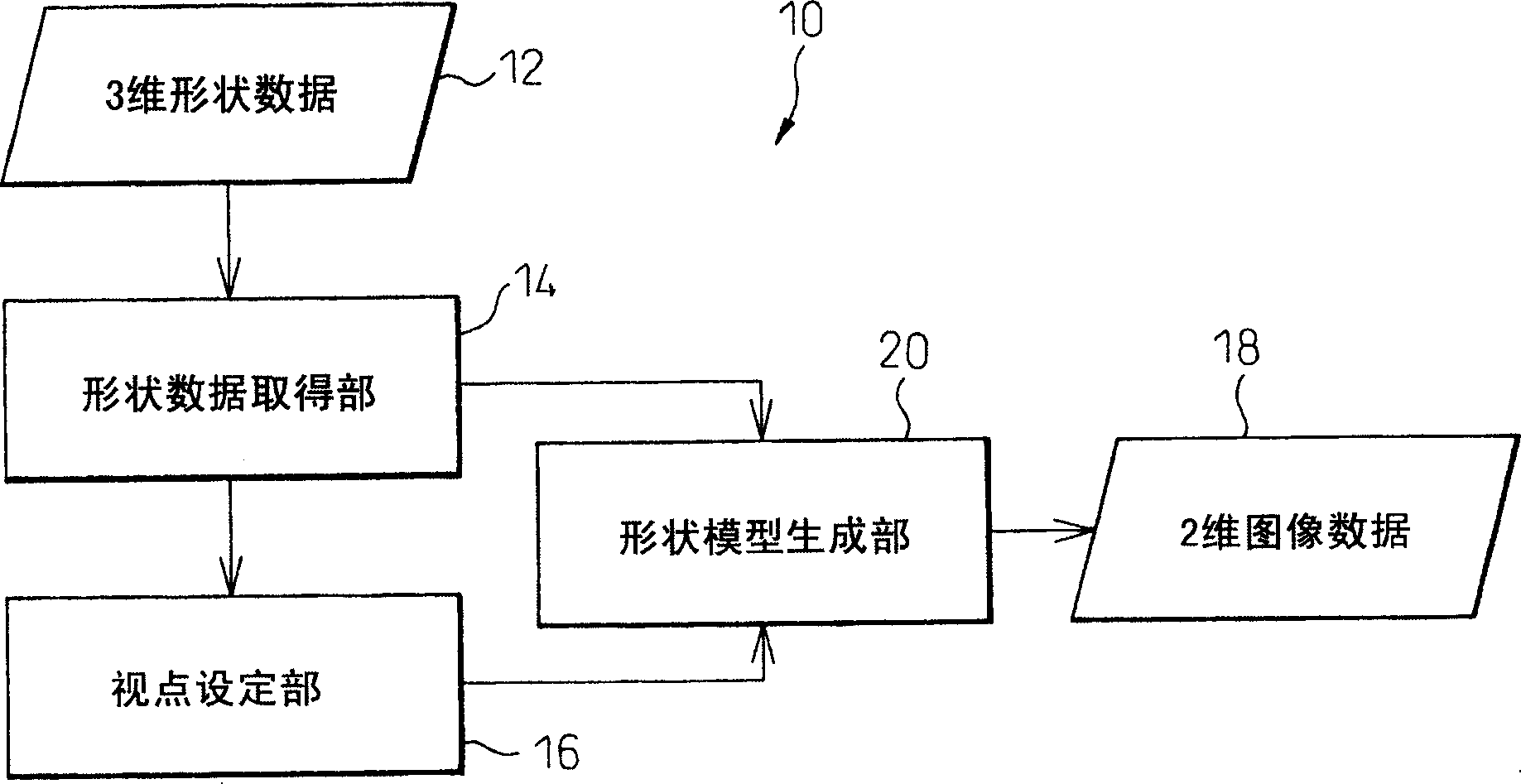

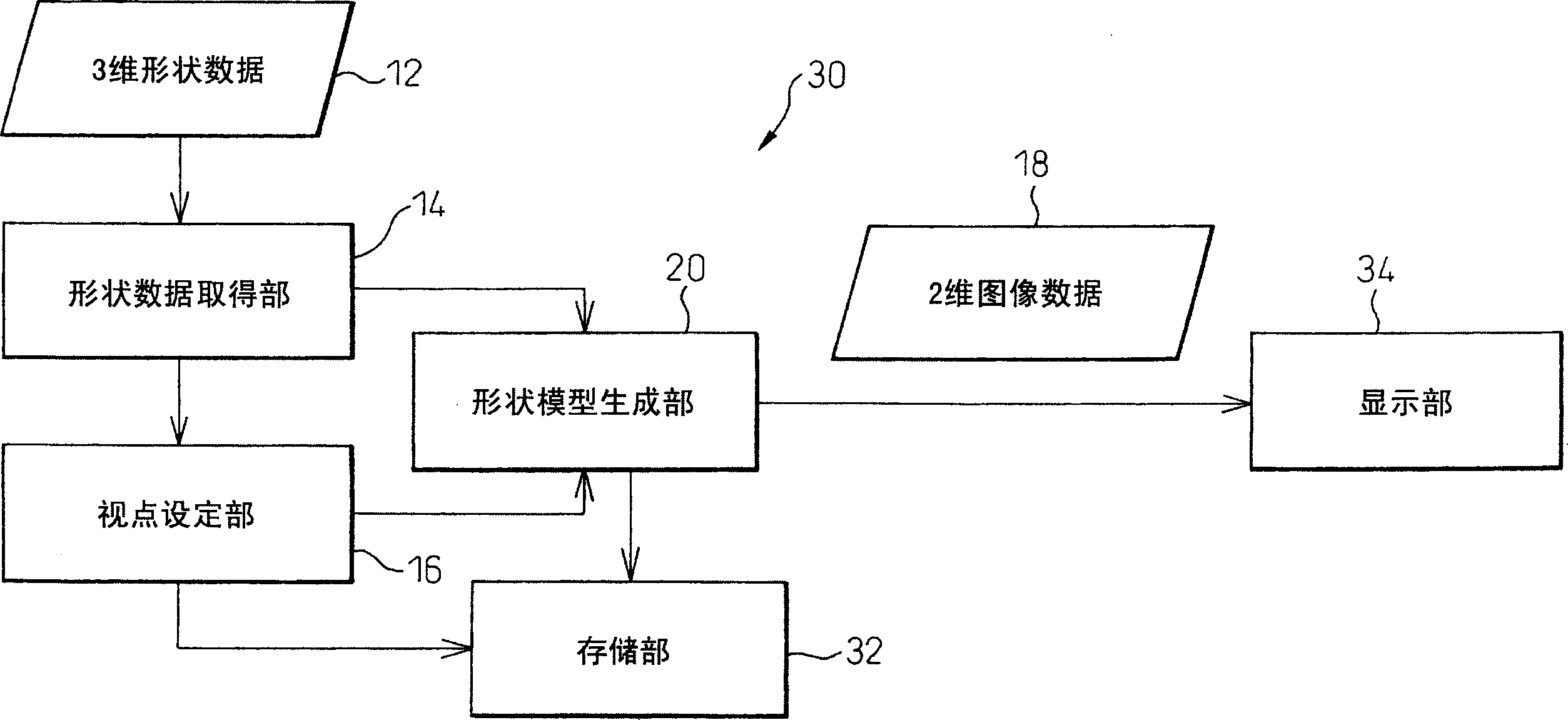

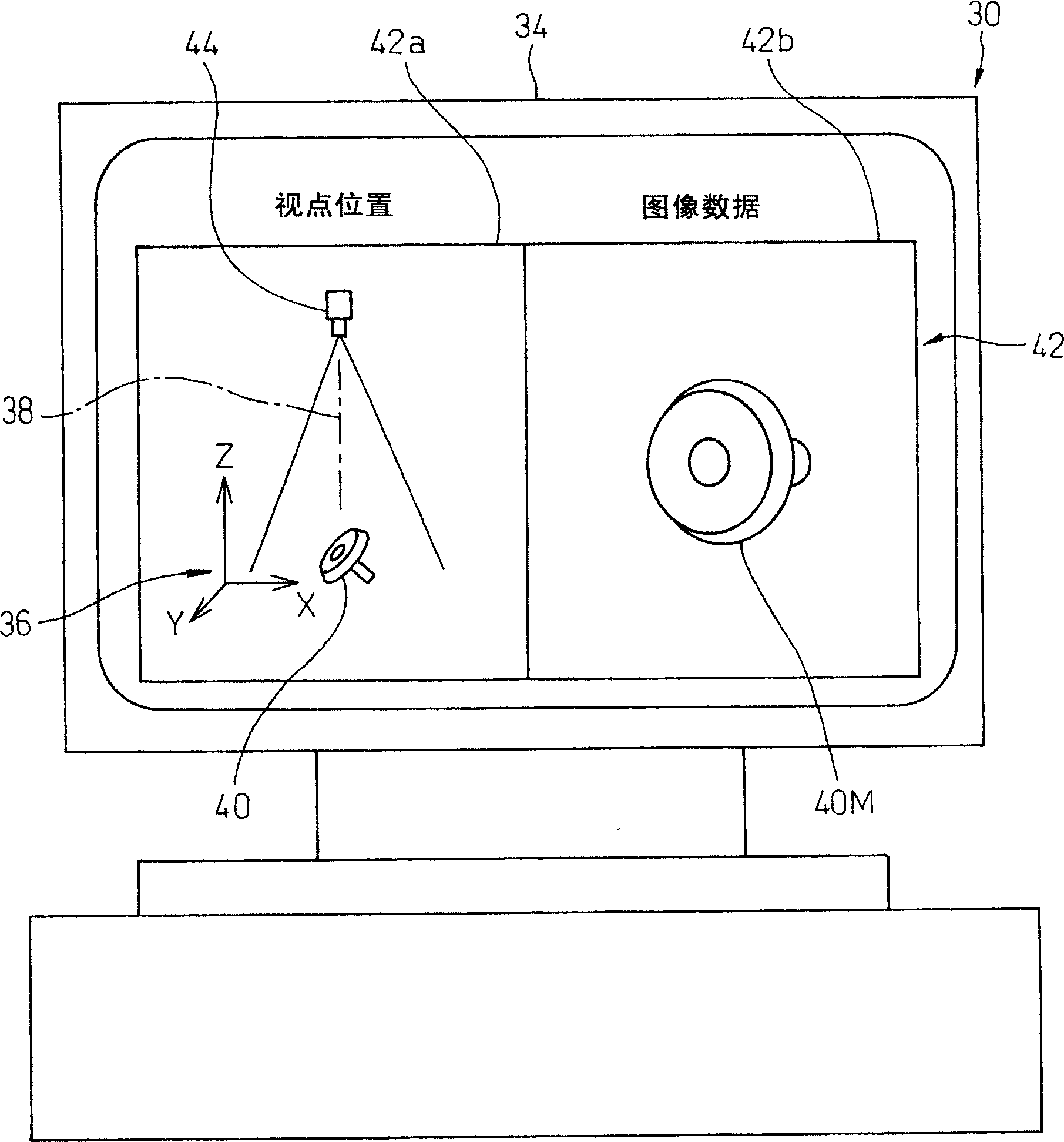

[0021] Referring to the accompanying drawings, FIG. 1 is a block diagram showing the basic configuration of the shape model generation device 10 according to the present invention. The shape model generation device 10 includes: a shape data acquisition unit 14 that acquires 3D shape data 12 of an object to be worked on (not shown); a determination unit 16 that sets, in the coordinate system to which the 3D shape data 12 belong, a plurality of hypothetical viewpoints (not shown) from which an object placed at an arbitrary position in that coordinate system can be observed from mutually different directions; and a shape model generation unit 20. When the object is observed in the above-mentioned coordinate system from the plurality of hypothetical viewpoints set by the determination unit 16, a plurality ...
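The excerpt does not say how the hypothetical viewpoints are arranged, and the description of what the shape model generation unit 20 produces is truncated. Purely as an illustration of "observing an object from mutually different directions", the sketch below assumes the viewpoints are spread evenly over a sphere around the object (a Fibonacci-sphere layout) and that each view is a simple pinhole projection of the 3D shape data. The functions `fibonacci_viewpoints` and `project_points`, the `focal` parameter, and the sphere layout are all hypothetical and are not taken from the patent.

```python
import math
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a: Vec3) -> Vec3:
    n = math.sqrt(_dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def fibonacci_viewpoints(n: int, radius: float,
                         center: Vec3 = (0.0, 0.0, 0.0)) -> List[Vec3]:
    """Hypothetical: place n viewpoints roughly evenly on a sphere around center."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    views = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n      # evenly spaced heights in (-1, 1)
        ring = math.sqrt(1.0 - z * z)      # radius of the horizontal circle at height z
        theta = golden_angle * i           # advance each point by the golden angle
        views.append((center[0] + radius * ring * math.cos(theta),
                      center[1] + radius * ring * math.sin(theta),
                      center[2] + radius * z))
    return views


def project_points(points: List[Vec3], eye: Vec3, target: Vec3,
                   focal: float = 1.0) -> List[Vec2]:
    """Hypothetical pinhole projection of 3D points seen from eye, looking at target."""
    forward = _normalize(_sub(target, eye))
    # Choose a reference "up" that is not parallel to the viewing direction.
    up_ref = (0.0, 0.0, 1.0) if abs(forward[2]) < 0.999 else (0.0, 1.0, 0.0)
    right = _normalize(_cross(forward, up_ref))
    up = _cross(right, forward)            # unit length: right and forward are orthonormal
    image = []
    for p in points:
        d = _sub(p, eye)
        depth = _dot(d, forward)           # distance along the viewing direction
        if depth <= 0.0:
            raise ValueError("point lies behind the viewpoint")
        image.append((focal * _dot(d, right) / depth, focal * _dot(d, up) / depth))
    return image


if __name__ == "__main__":
    # Usage: a unit cube at the origin, observed from 8 viewpoints at distance 5.
    cube = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)]
    models: Dict[int, List[Vec2]] = {
        i: project_points(cube, eye, (0.0, 0.0, 0.0))
        for i, eye in enumerate(fibonacci_viewpoints(8, 5.0))
    }
    print(len(models), "2D views generated")
```

The Fibonacci layout is used here only because it spreads points fairly uniformly without any tuning; a real device might instead restrict the viewpoints to the directions from which a camera can actually see the object.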
