Method and system for planning motion of a virtual human

A motion planning and virtual human technology, applied in 3D image processing, image data processing, instruments, and related fields. It addresses problems in the optimization process such as low efficiency, failure to converge, and the inability to use model training.

Embodiment Construction

[0052] The present invention will be described in further detail below in conjunction with the accompanying drawings.

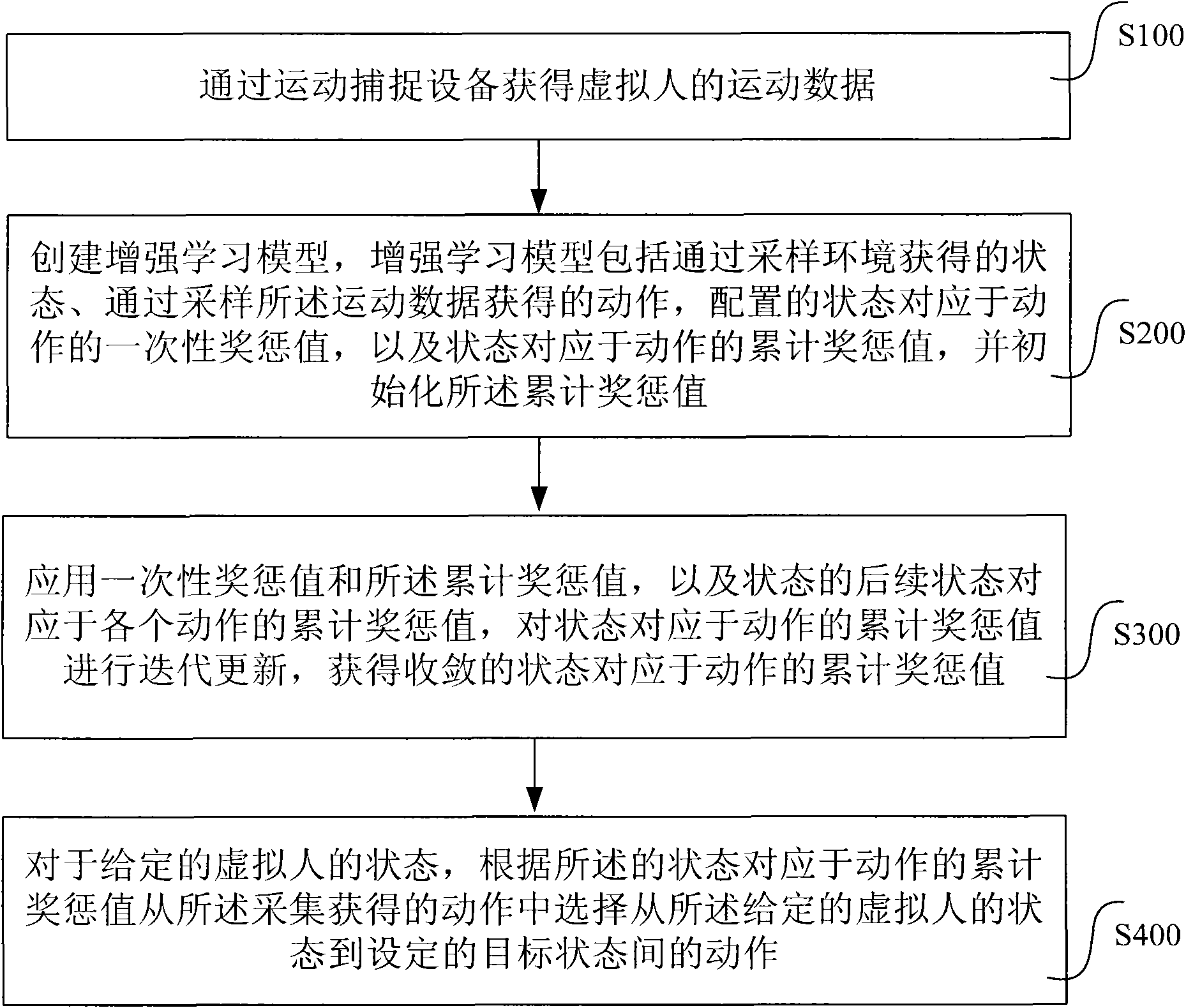

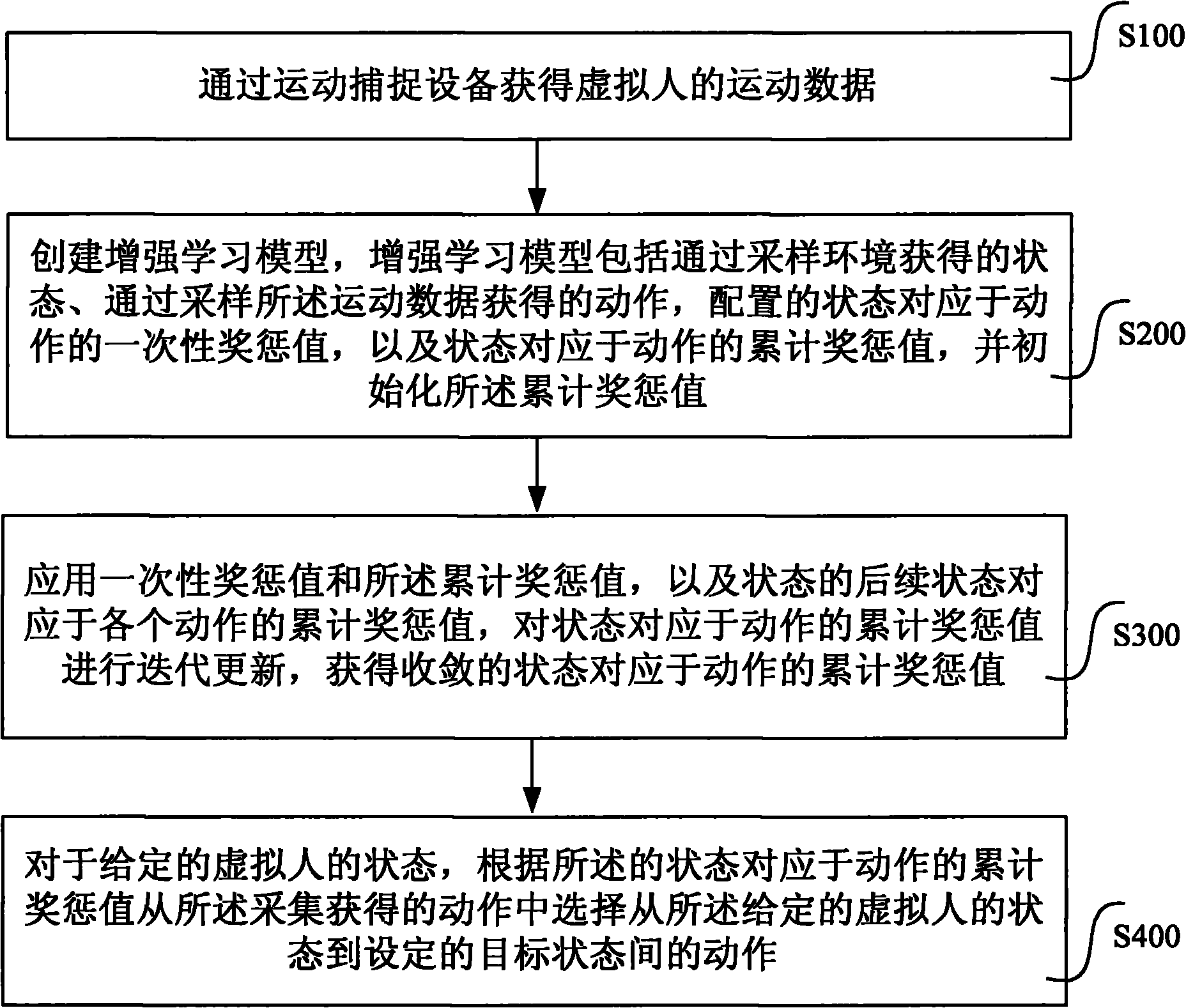

[0053] The process flow of the virtual human motion planning method of the present invention is shown in Figure 1.

[0054] In step S100, motion data for the virtual human are obtained with a motion capture device.

[0055] Character motion data samples are collected with any of the optical or electromagnetic motion capture devices currently on the market, such as the VICON 8 capture system produced by VICON. The motion capture technology used in this step is existing technology; for the relevant equipment and techniques, see http://www.vicon.com/.

[0056] The collected motion data sequence is recorded as $\{Motion_i\}_{i=1}^{|A|}$, where $|A|$ is the number of motion segments in the sequence. Each motion segment consists of a set of poses, denoted as …; here $Pose_j^i$ denotes the pose of frame $j$ in the $i$-th motion segm...
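The indexing scheme in [0056] can be made concrete with a small container type. The following is a minimal sketch, assuming a Python representation; the class names `Pose`, `MotionSegment` and `MotionDatabase`, and the choice of a root position plus per-joint Euler rotations as the pose parameterization, are hypothetical, since the available text does not say how a pose is encoded. It only mirrors the notation: the database holds $|A|$ segments, and `pose(i, j)` returns $Pose_j^i$ using the 1-based indices of the text.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Pose:
    """Hypothetical pose encoding (an assumption, not from the patent):
    a root position plus one Euler-angle triple per joint."""
    root_position: Vec3
    joint_rotations: List[Vec3]


@dataclass
class MotionSegment:
    """Motion_i: an ordered list of poses; poses[j - 1] plays the role of Pose_j^i."""
    poses: List[Pose] = field(default_factory=list)

    def num_frames(self) -> int:
        return len(self.poses)


@dataclass
class MotionDatabase:
    """{Motion_i}_{i=1}^{|A|}: the collected motion data sequence."""
    segments: List[MotionSegment] = field(default_factory=list)

    def size(self) -> int:
        """Return |A|, the number of motion segments."""
        return len(self.segments)

    def pose(self, i: int, j: int) -> Pose:
        """Return Pose_j^i with 1-based i (segment) and j (frame)."""
        # Reject 0 or negative indices explicitly; Python's negative
        # indexing would otherwise silently wrap around.
        if i < 1 or j < 1:
            raise IndexError("segment and frame indices are 1-based")
        return self.segments[i - 1].poses[j - 1]


def make_segment(n_frames: int, n_joints: int = 2) -> MotionSegment:
    """Build a synthetic segment whose root moves one unit along z per frame."""
    return MotionSegment([
        Pose((0.0, 0.0, float(f)), [(0.0, 0.0, 0.0)] * n_joints)
        for f in range(n_frames)
    ])


# Toy database with two synthetic segments of 3 and 2 frames.
db = MotionDatabase([make_segment(3), make_segment(2)])
print(db.size())                    # 2
print(db.pose(1, 3).root_position)  # (0.0, 0.0, 2.0)
```

Keeping segments as separate lists, rather than concatenating all frames, preserves the segment boundaries that the $i$ index refers to; a real system would replace `make_segment` with data loaded from the capture device.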
