Classifier integration method based on floating classification threshold

A classifier integration (ensemble) method based on a floating classification threshold, applied in the fields of instruments, special data processing applications, and electrical digital data processing. It addresses the unstable classification of points near the classification boundary and yields a better classification boundary.

Embodiment 1

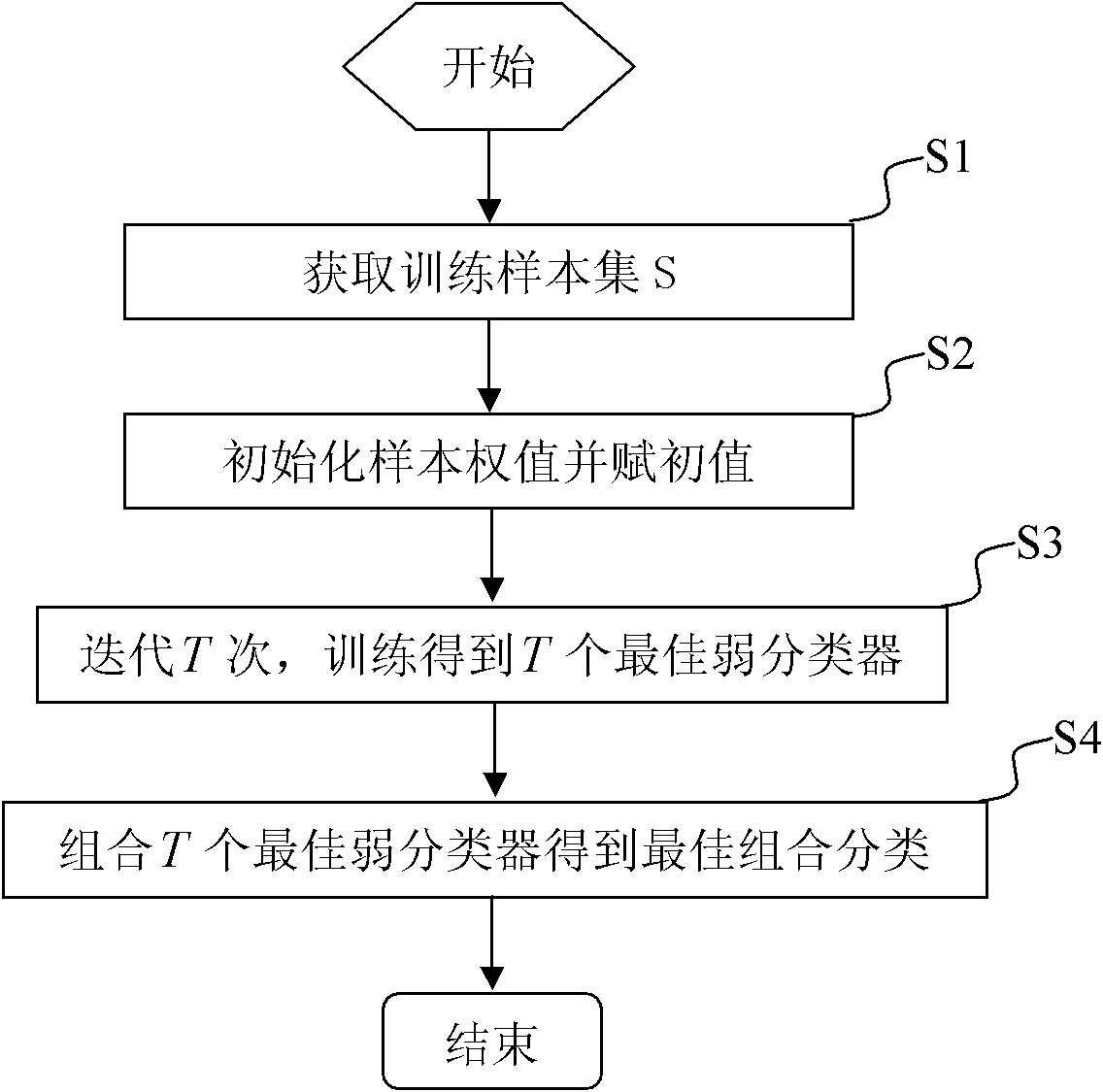

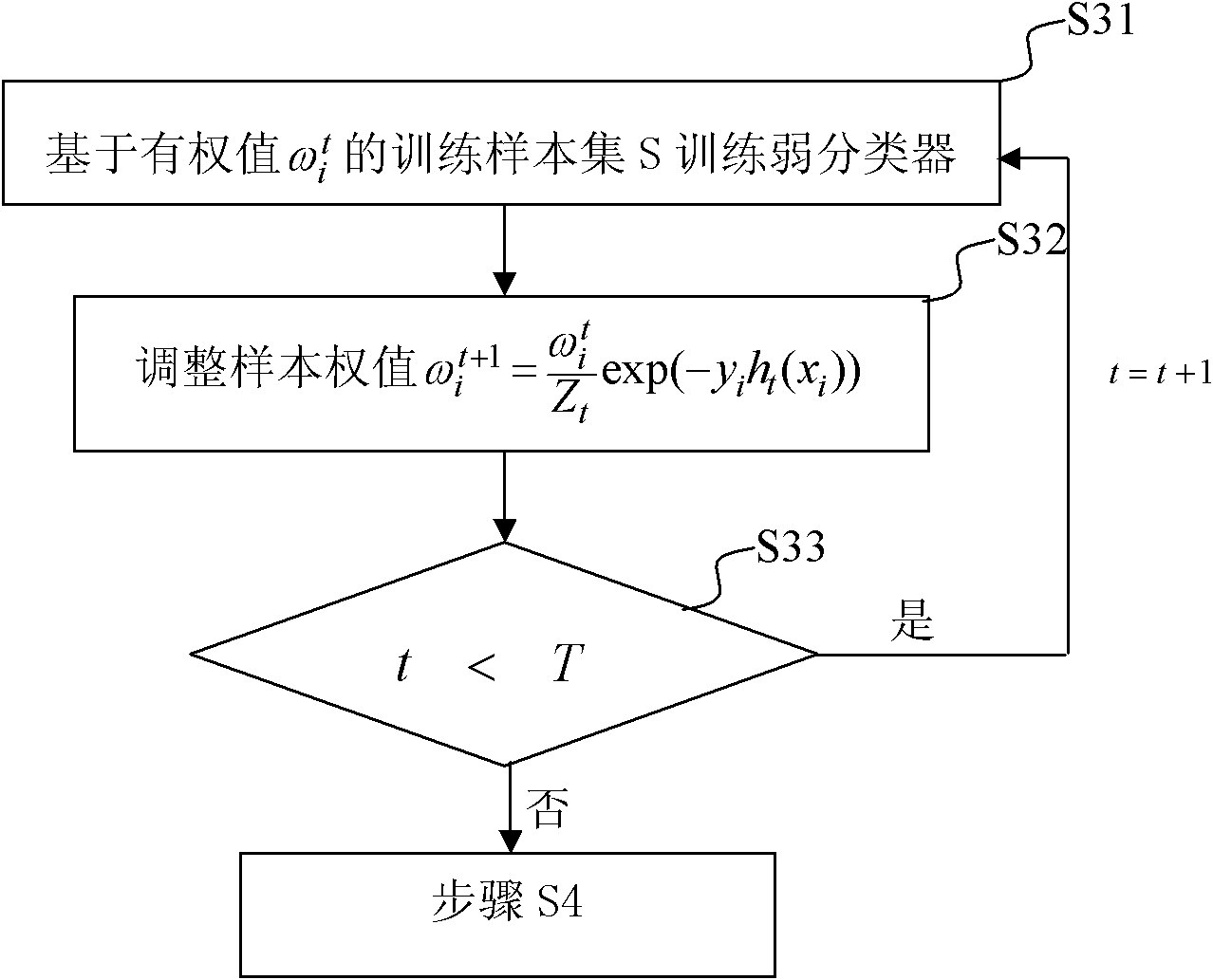

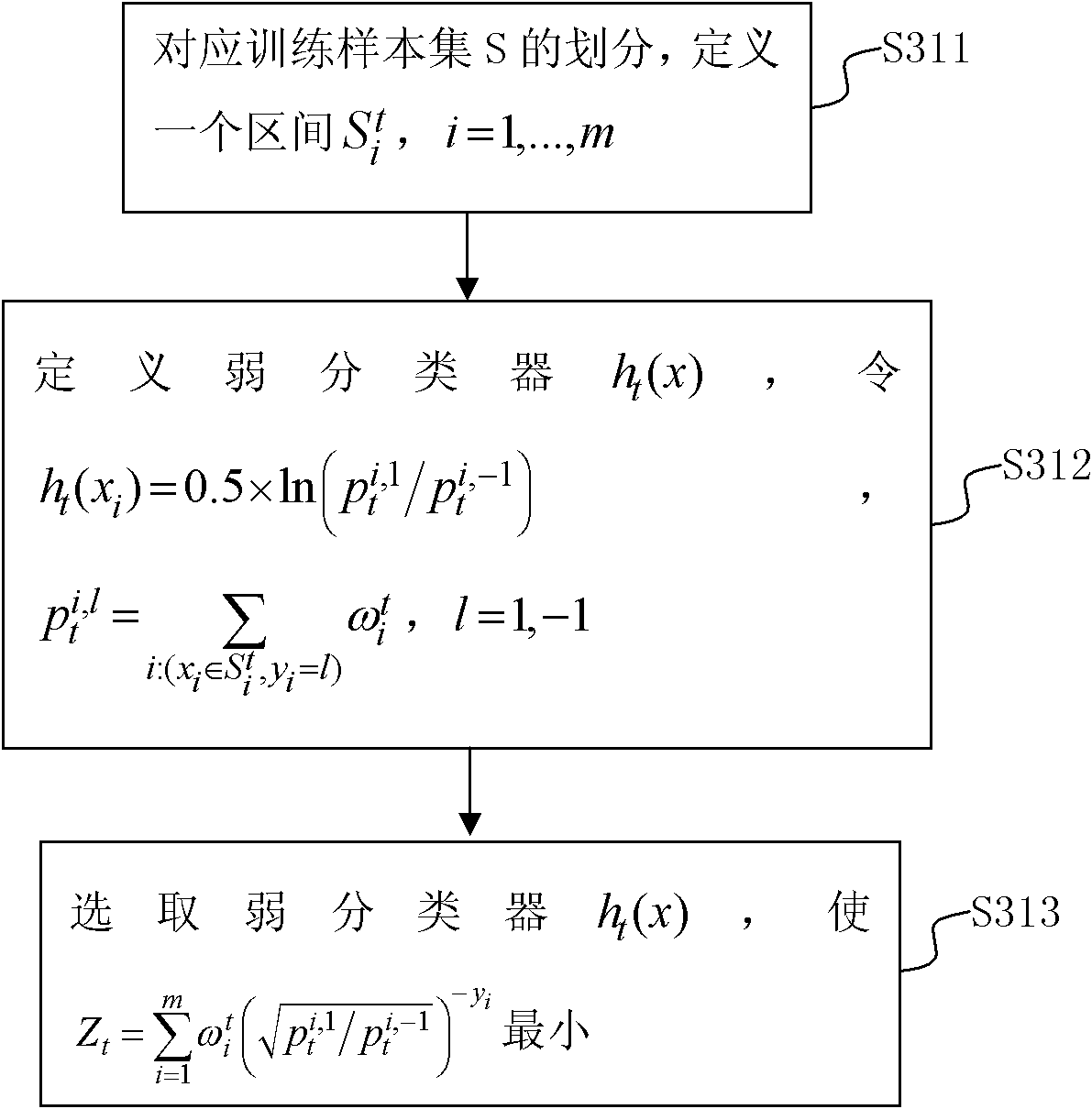

[0040] The specific process steps of the floating classification threshold-based classifier integration method of the present invention for the binary classification problem are described in detail below with reference to Figure 1, Figure 2, and Figure 3.

[0041] When using the existing continuous AdaBoost algorithm, let the training sample set be $S=\{(x_1,y_1),(x_2,y_2),\ldots,(x_m,y_m)\}$, $y_i\in\{-1,+1\}$, $i=1,\ldots,m$, where $x_i$ represents the specific value of the $i$-th sample and $y_i$ represents the category of the $i$-th sample. $(x_i,y_i)\in S$ is simply written as $x_i\in S$. Perform an $n_t$-segment division of the sample space $S$ [formula not reproduced in the source], subject to a condition for $i\neq j$ whose formula is also missing (presumably that distinct segments do not overlap). The weak classifier $h_t(x)$ actually corresponds to an $n_t$-segment division of the sample space: when the target falls in a given segment [symbol not reproduced in the source], $h_t(x)$ outputs a value [formula not reproduced in the source] determined by the probabilities of occurrence of class $1$ and class $-1$ samples in that segment [symbols not reproduced in the source]. Obviously, the output value of the weak classifier is the same fo...
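The segment-based weak classifier described in [0041] can be sketched as follows. The patent's own output formula did not survive in the text above, so this sketch assumes the standard real-valued AdaBoost rule of Schapire and Singer, where each segment outputs half the log-ratio of the weighted mass of class +1 to class -1. The 1-D feature, the `edges` cut points, the `eps` smoothing term, and all function names are hypothetical choices for illustration, and the floating threshold of the invention is not modeled here.

```python
import bisect
import math


def segment_index(x, edges):
    """Map a scalar x to the index of the segment that contains it."""
    return bisect.bisect_right(edges, x)


def fit_segment_outputs(xs, ys, weights, edges, eps=1e-9):
    """Compute one confidence value per segment from weighted class mass."""
    n_segments = len(edges) + 1
    w_pos = [0.0] * n_segments  # weighted mass of class +1 in each segment
    w_neg = [0.0] * n_segments  # weighted mass of class -1 in each segment
    for x, y, w in zip(xs, ys, weights):
        j = segment_index(x, edges)
        if y == 1:
            w_pos[j] += w
        else:
            w_neg[j] += w
    # Assumed formula (standard real AdaBoost, not taken from the patent text):
    # half the log-ratio of the two masses, smoothed by eps to avoid log(0).
    return [0.5 * math.log((p + eps) / (q + eps)) for p, q in zip(w_pos, w_neg)]


def weak_classify(x, edges, outputs):
    """Every sample in the same segment receives the same output value."""
    return outputs[segment_index(x, edges)]


def combined_classify(x, weak_models):
    """Return the sign of the confidence accumulated over all weak models."""
    total = sum(weak_classify(x, edges, outputs) for edges, outputs in weak_models)
    return 1 if total >= 0 else -1


# Toy data: two segments split at 0.5, uniform sample weights.
xs = [0.1, 0.2, 0.8, 0.9]
ys = [-1, -1, 1, 1]
weights = [0.25] * 4
edges = [0.5]
outputs = fit_segment_outputs(xs, ys, weights, edges)
```

With this toy data, `weak_classify(0.15, edges, outputs)` and `weak_classify(0.3, edges, outputs)` return the same negative value because both points fall in the first segment, which is the property the paragraph points out.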

Embodiment 2

[0057] The specific process steps of the floating classification threshold-based classifier integration method of the present invention for multi-classification problems are described in detail below with reference to Figure 1, Figure 4, and Figure 5.

[0058] In the binary classification problem, 1 and -1 are used to represent the two class labels. The output value of the weak classifier $h_t(x)$ is therefore directly the difference between the confidences of the two labels [formula not reproduced in the source], and the combined classifier outputs a class based on the sign of the cumulative confidence difference. In multi-classification problems, each weak classifier can only output the confidence of the corresponding category label; the combined classifier accumulates the confidences of the same label and finally outputs the label with the largest cumulative confidence. Let $h_t(x,l)$ denote the confidence that $h_t(x)$ outputs for label $l$ ($l=1,\ldots,K$); the combined classifier in...
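The multi-class combination rule in [0058], accumulating the confidence of each label across weak classifiers and returning the label with the largest total, can be illustrated with a minimal sketch. The representation of each weak classifier as a callable `h(x, l)`, the function name `combine_multiclass`, and the tie-breaking toward the lowest label are assumptions made for illustration; the source does not specify them.

```python
def combine_multiclass(weak_classifiers, x, num_labels):
    """Return the label in 1..num_labels with the largest accumulated confidence.

    Each element of weak_classifiers is a hypothetical callable h(x, l) that
    returns the confidence that weak classifier assigns to label l for input x.
    """
    totals = {label: 0.0 for label in range(1, num_labels + 1)}
    for h in weak_classifiers:
        for label in totals:
            totals[label] += h(x, label)
    # max() keeps the first maximal key, so ties resolve to the lowest label
    # (an assumption of this sketch, not something stated in the source).
    return max(totals, key=totals.get)


# Toy weak classifiers with fixed per-label confidences (illustrative only).
h1 = lambda x, l: {1: 0.2, 2: 0.5, 3: 0.1}[l]
h2 = lambda x, l: {1: 0.6, 2: 0.1, 3: 0.1}[l]
predicted = combine_multiclass([h1, h2], x=None, num_labels=3)
```

Here label 1 accumulates 0.8, label 2 accumulates 0.6, and label 3 accumulates 0.2, so `predicted` is 1 even though `h1` alone would favor label 2.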

[0059] F...

