A Region-Based 3D Video Mapping Method

A region-based (subregional) mapping method, applied in the field of 3D video synthesis, that addresses the large data volume and heavy computation of the multi-view method.


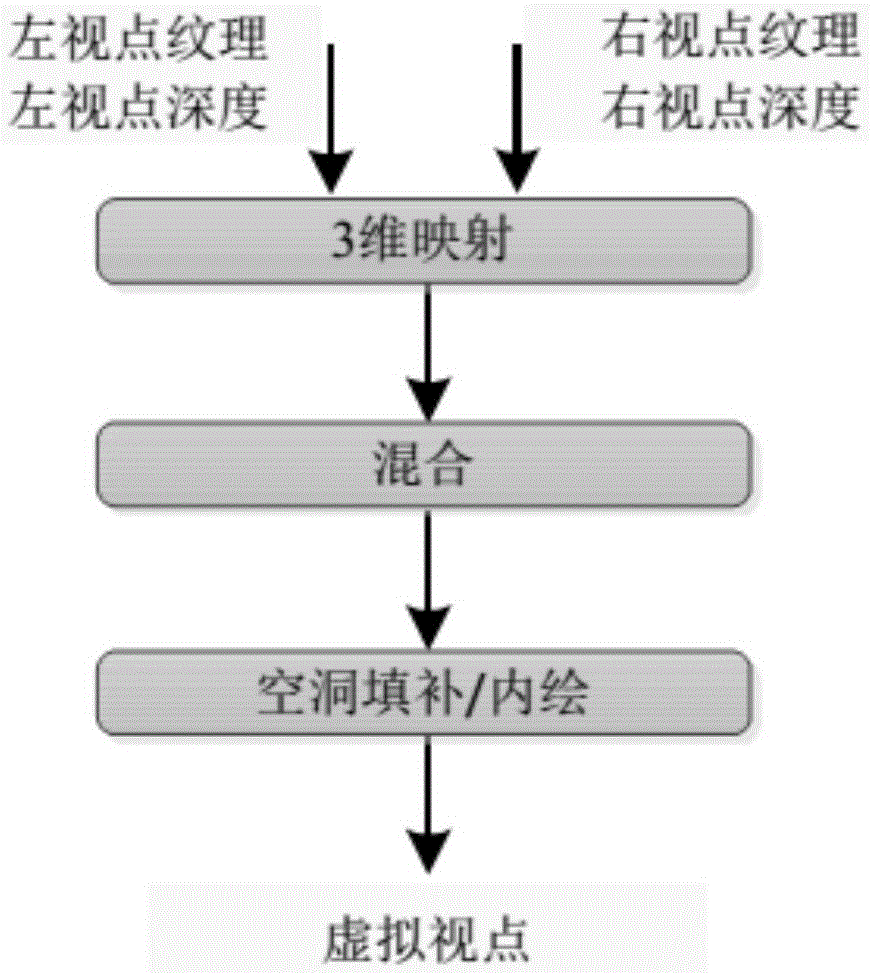


Embodiment Construction

[0088] Preliminary experiments have been carried out on the subregional 3D video mapping scheme proposed by the present invention. The standard Philips Mobile test sequence is used as input; the first 100 frames are tested, at a resolution of 720×540. Three different modes are used: whole-pixel, half-pixel, and quarter-pixel. The simulation runs on a Dell workstation with an Intel(R) Xeon(R) quad-core CPU at 2.8 GHz and 4.00 GB of DDR3 memory. The software platform is Visual Studio 2008, and the program is written in C++.
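The three modes can be pictured as three grid spacings for mapped pixel positions. The following is a minimal sketch, not the patent's implementation: it assumes the modes control the precision to which a mapped horizontal coordinate is stored, and the names `PixelPrecision`, `snapToGrid`, and `upsampledWidth` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical enum: number of grid positions per pixel for each test mode.
enum class PixelPrecision : int { Whole = 1, Half = 2, Quarter = 4 };

// Snap a mapped horizontal coordinate to the nearest position on the grid
// implied by the chosen mode (spacing of 1, 1/2, or 1/4 pixel).
// Assumption: this is how the modes affect the mapping; the source does not say.
inline double snapToGrid(double x, PixelPrecision precision) {
    const int steps = static_cast<int>(precision);
    assert(steps == 1 || steps == 2 || steps == 4);
    return std::round(x * steps) / steps;
}

// Number of grid positions in one row of an image with the given width.
inline int upsampledWidth(int width, PixelPrecision precision) {
    assert(width > 0);
    return width * static_cast<int>(precision);
}
```

Under this assumption, a 720-pixel row has 720, 1440, or 2880 candidate positions in the three modes, which suggests why finer modes would cost more computation.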

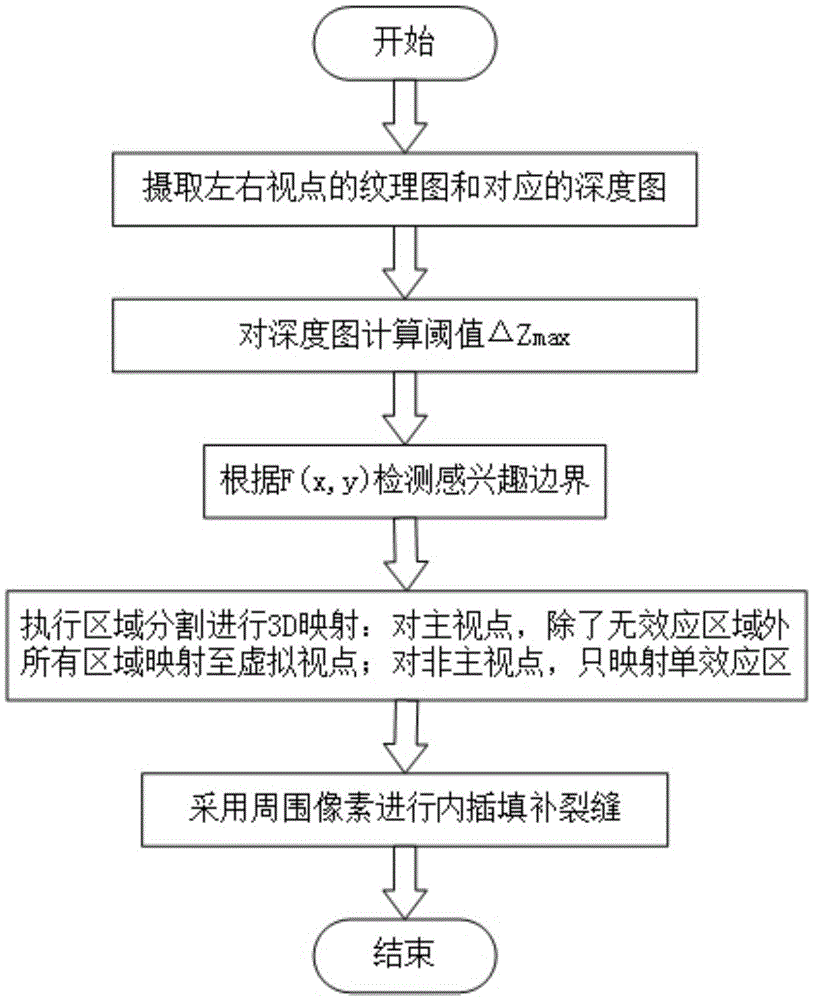

[0089] This example is implemented as follows; the process includes the following steps:

[0090] Step 1: Ingest the texture maps and corresponding depth maps of two adjacent viewpoints of the 3D video, and then calculate the threshold $\Delta Z_{\max} = 20$.

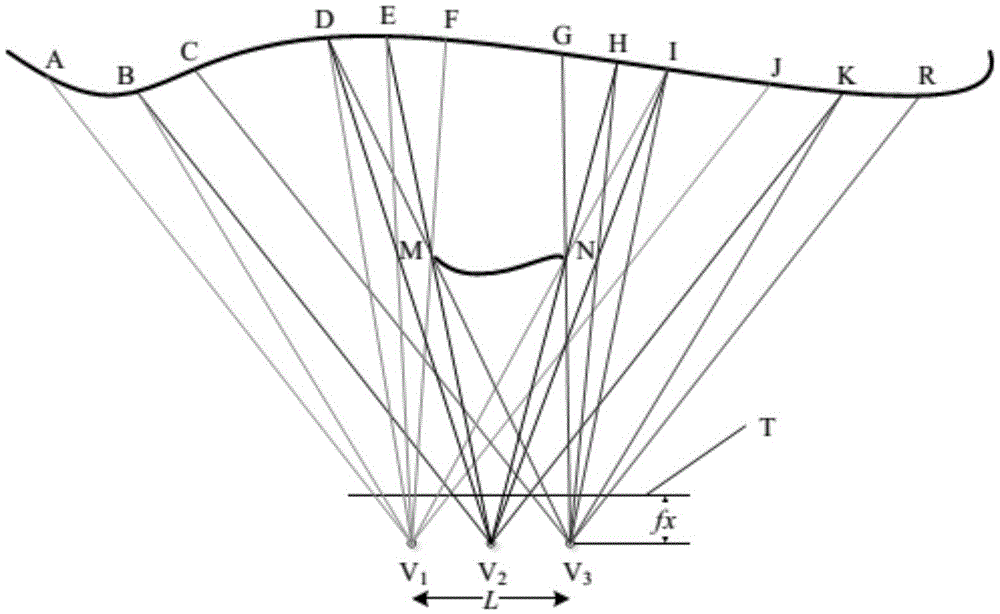

[0091] Step 2: Select viewpoint $V_1$ or viewpoint $V_3$ as the main viewpoint, detect the boundaries in the dep...
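The text of Step 2 is cut off here, so the exact detection rule is not available. As a rough illustration only, the sketch below assumes an 8-bit depth map stored row by row and treats a pixel as a boundary when its depth differs from its right-hand neighbour by more than the Step 1 threshold of 20. The function `detectDepthBoundaries`, the constant `kDeltaZMax`, and the neighbour-difference rule itself are assumptions, not the patent's stated method.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Threshold value quoted in Step 1.
constexpr int kDeltaZMax = 20;

// Returns a mask the same size as the depth map (1 = boundary, 0 = not).
// Assumed rule: two horizontally adjacent pixels are both marked when their
// depth values differ by more than the threshold.
std::vector<std::uint8_t> detectDepthBoundaries(
    const std::vector<std::uint8_t>& depth, int width, int height,
    int threshold = kDeltaZMax) {
    assert(width > 0 && height > 0);
    assert(depth.size() == static_cast<std::size_t>(width) * height);

    std::vector<std::uint8_t> mask(depth.size(), 0);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x + 1 < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            const int diff = std::abs(static_cast<int>(depth[i]) -
                                      static_cast<int>(depth[i + 1]));
            if (diff > threshold) {
                mask[i] = 1;      // pixel on the left side of the depth jump
                mask[i + 1] = 1;  // pixel on the right side of the depth jump
            }
        }
    }
    return mask;
}
```

For a 720×540 frame this would be called as `detectDepthBoundaries(depth, 720, 540)`, with the depth map of whichever viewpoint is chosen as the main one.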
