Indoor scene semantic annotation method based on RGB-D data

A semantic labeling technology for indoor scenes, applied in the field of image semantic labeling based on RGB-D data. It addresses problems such as reliance on a single form of depth information, the difficulty of selecting the granularity of the labeling primitives, and insufficient attention to geometric depth information.

Embodiment Construction

[0053] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings.

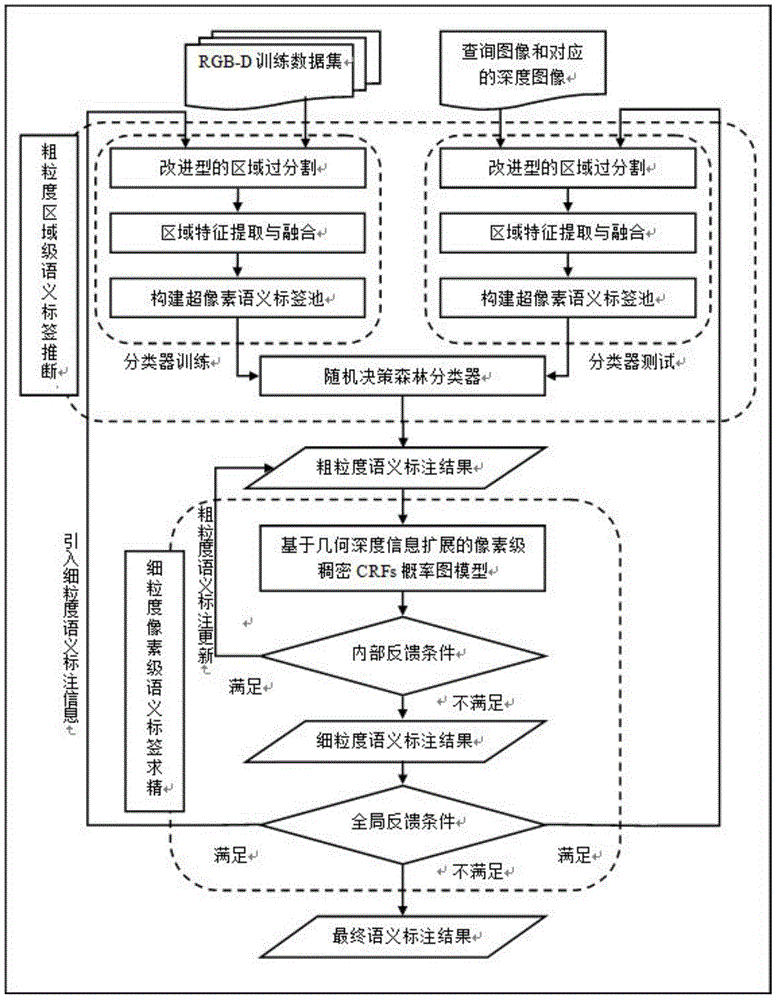

[0054] As shown in Figure 1, the present invention provides an indoor scene semantic annotation method based on RGB-D data. In practice, indoor scene images are annotated using a coarse-to-fine semantic annotation framework with global recursive feedback, based on RGB-D information. The framework consists of coarse-grained region-level semantic label inference and fine-grained pixel-level semantic label refinement, which are updated in alternating iterations, and comprises the following steps:

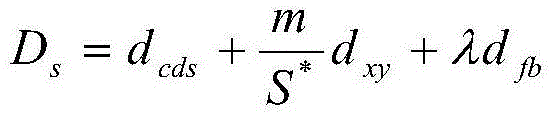

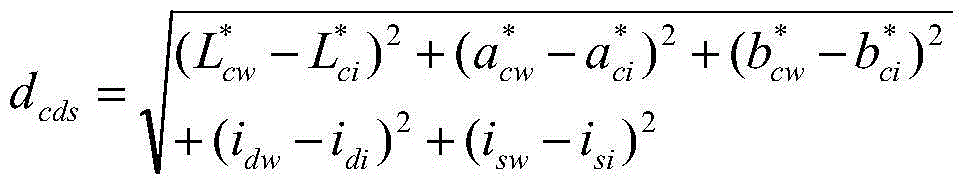
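The alternating update described in [0054] can be pictured as a simple control loop. The following is only a minimal sketch of that control flow, not the patented inference itself: the function names, the majority-vote region step, the copy-back pixel step, and the "stop when labels no longer change" rule are all hypothetical stand-ins chosen for illustration.

```python
from collections import Counter

def alternate_until_stable(pixel_labels, regions, infer_regions, refine_pixels, max_rounds=10):
    """Alternate a coarse region-level step and a fine pixel-level step.

    Stopping when the pixel labels stop changing is an assumption of this sketch.
    """
    for round_idx in range(max_rounds):
        region_labels = infer_regions(pixel_labels, regions)              # coarse step
        new_labels = refine_pixels(pixel_labels, region_labels, regions)  # fine step
        if new_labels == pixel_labels:
            return new_labels, round_idx + 1
        pixel_labels = new_labels
    return pixel_labels, max_rounds

# Hypothetical stand-in: a region takes the most common label of its pixels.
def majority_vote(pixel_labels, regions):
    return [Counter(pixel_labels[i] for i in region).most_common(1)[0][0]
            for region in regions]

# Hypothetical stand-in: each pixel adopts the label of its region.
def copy_region_label(pixel_labels, region_labels, regions):
    refined = list(pixel_labels)
    for region, label in zip(regions, region_labels):
        for i in region:
            refined[i] = label
    return refined

# Six pixels grouped into two regions of three pixels each.
initial = ["wall", "wall", "floor", "floor", "floor", "wall"]
regions = [[0, 1, 2], [3, 4, 5]]
final_labels, rounds = alternate_until_stable(initial, regions, majority_vote, copy_region_label)
```

In this toy run the first round corrects one outlier pixel in each region, and the second round confirms that nothing changes any more.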

[0055] Step 001. Using the simple linear iterative clustering (SLIC) over-segmentation algorithm guided by hierarchical image saliency, over-segment the RGB images in the RGB-D training data set, ob...
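To make the over-segmentation in Step 001 more concrete, here is a minimal sketch of plain SLIC in pure Python. It is a simplification under stated assumptions: it omits the hierarchical saliency guidance that the method applies, measures colour distance directly in RGB rather than CIELAB, and skips the connectivity clean-up of full SLIC. The function name `slic_sketch` and its parameters are hypothetical.

```python
import math

def slic_sketch(image, n_segments=4, compactness=10.0, n_iters=5):
    """Plain SLIC on an H x W grid of (r, g, b) tuples; returns an H x W label grid."""
    h, w = len(image), len(image[0])
    step = max(1, int(math.sqrt(h * w / n_segments)))  # grid interval S
    # Seed cluster centres on a regular grid as [r, g, b, y, x].
    centres = []
    for y in range(step // 2, h, step):
        for x in range(step // 2, w, step):
            r, g, b = image[y][x]
            centres.append([r, g, b, y, x])
    labels = [[-1] * w for _ in range(h)]
    for _ in range(n_iters):
        dist = [[math.inf] * w for _ in range(h)]
        # Assignment: each centre only competes for pixels in a window around it.
        for k, (cr, cg, cb, cy, cx) in enumerate(centres):
            for y in range(max(0, int(cy) - step), min(h, int(cy) + step + 1)):
                for x in range(max(0, int(cx) - step), min(w, int(cx) + step + 1)):
                    r, g, b = image[y][x]
                    d_colour = (r - cr) ** 2 + (g - cg) ** 2 + (b - cb) ** 2
                    d_space = (y - cy) ** 2 + (x - cx) ** 2
                    d = d_colour + (compactness / step) ** 2 * d_space
                    if d < dist[y][x]:
                        dist[y][x] = d
                        labels[y][x] = k
        # Update: move each centre to the mean of the pixels assigned to it.
        sums = [[0.0] * 5 for _ in centres]
        counts = [0] * len(centres)
        for y in range(h):
            for x in range(w):
                k = labels[y][x]
                if k < 0:
                    continue
                r, g, b = image[y][x]
                for i, v in enumerate((r, g, b, y, x)):
                    sums[k][i] += v
                counts[k] += 1
        for k, n in enumerate(counts):
            if n:
                centres[k] = [s / n for s in sums[k]]
    return labels

# Toy 8 x 8 image: left half black, right half white.
image = [[(0, 0, 0)] * 4 + [(255, 255, 255)] * 4 for _ in range(8)]
labels = slic_sketch(image, n_segments=4)
```

On the toy image the superpixels do not straddle the black/white boundary, which is the property that makes superpixels useful as labeling primitives. A production pipeline would more likely rely on an existing implementation such as `skimage.segmentation.slic` from scikit-image.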
