Human body motion identification method in video based on point-of-interest position information

A human action recognition technology based on the locations of interest points, applied in the field of computer vision. It addresses problems such as excessive memory requirements and complex calculations, and achieves high recognition accuracy while reducing computational complexity.



Embodiment

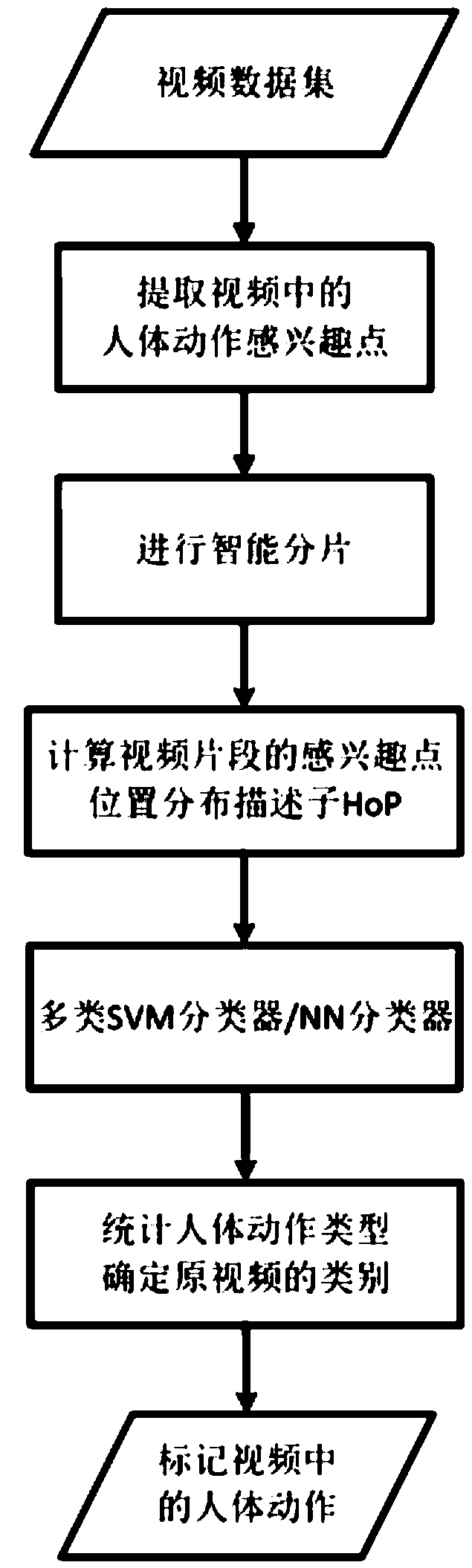

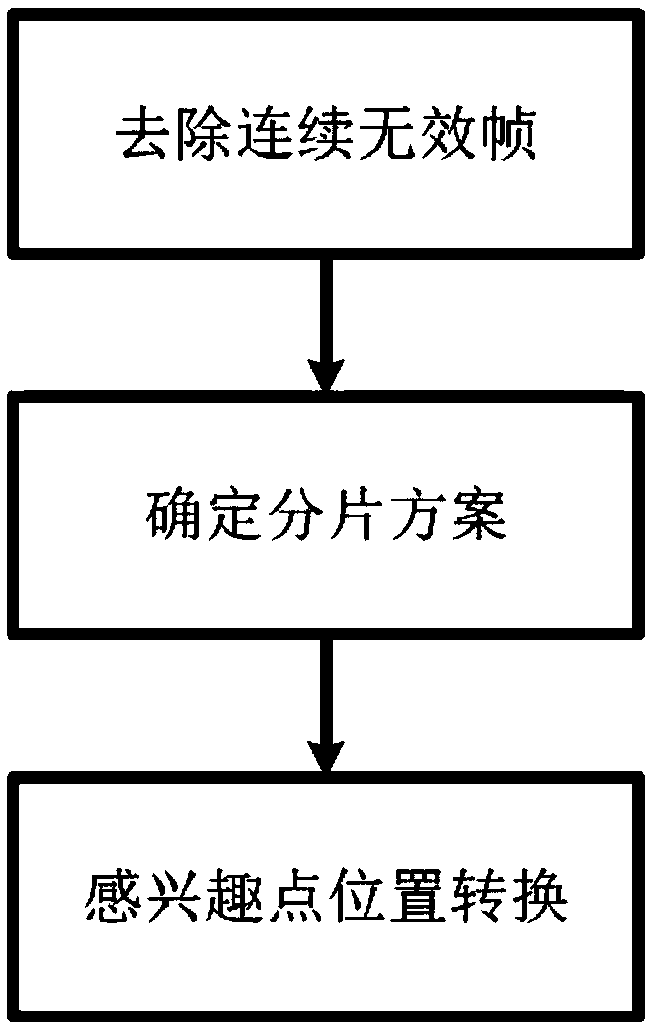

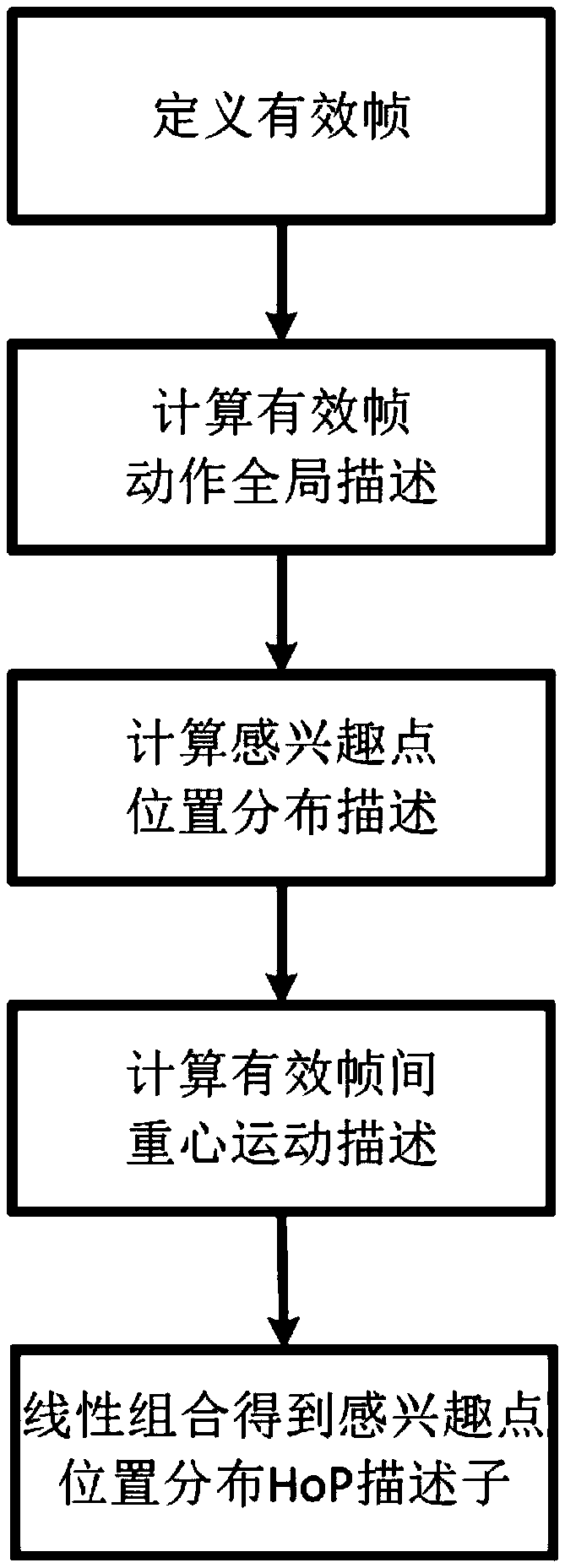

[0072] As shown in Figure 1, the interest points of the human body movements are first extracted from each video sequence in the video data set. The position information of these interest points is then used to segment each sequence intelligently, dividing the video into several video segments. Next, for each video segment, its interest-point position distribution descriptor (HoP) is calculated, and the HoP descriptor is used to represent the human action in that segment. The videos can then be used for training and testing with methods such as support vector machines or nearest-neighbor classifiers. Each test video is segmented intelligently in the same way to obtain the human action category of each of its video segments, and finally the most frequent action category is taken as the human action represented by the test video.
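The pipeline in [0072] can be sketched as follows. This excerpt does not specify the segmentation criterion, the binning of the HoP descriptor, or the classifier's distance, so all three are assumptions here: a new segment starts when the centroid of a frame's interest points drifts more than `max_shift` pixels from the centroid at the start of the current segment; HoP is taken to be a normalized 2-D histogram of point positions over the segment's bounding box; and classification uses a 1-nearest-neighbor rule with Euclidean distance (one of the classifier families the text mentions). Interest points are assumed to be `(x, y, t)` tuples, and every function name below is hypothetical.

```python
import math
from collections import Counter


def segment_by_position(points, max_shift):
    """HYPOTHETICAL segmentation rule (not from the source): group points by
    frame, and start a new segment when a frame's centroid is more than
    max_shift pixels from the centroid of the segment's first frame."""
    frames = {}
    for x, y, t in points:
        frames.setdefault(t, []).append((x, y, t))
    segments, current, anchor = [], [], None
    for t in sorted(frames):
        pts = frames[t]
        cx = sum(p[0] for p in pts) / len(pts)
        cy = sum(p[1] for p in pts) / len(pts)
        if anchor is not None and math.hypot(cx - anchor[0], cy - anchor[1]) > max_shift:
            segments.append(current)  # close the current segment
            current, anchor = [], None
        if anchor is None:
            anchor = (cx, cy)  # reference centroid for the new segment
        current.extend(pts)
    if current:
        segments.append(current)
    return segments


def hop_descriptor(points, bins_x=4, bins_y=4):
    """ASSUMED HoP form: normalized 2-D histogram of interest-point positions,
    with coordinates rescaled to the points' bounding box."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
    hist = [0.0] * (bins_x * bins_y)
    for x, y, _ in points:
        # Map each coordinate to a bin index; a zero-width extent maps to bin 0.
        bx = min(int((x - x0) / (x1 - x0) * bins_x), bins_x - 1) if x1 > x0 else 0
        by = min(int((y - y0) / (y1 - y0) * bins_y), bins_y - 1) if y1 > y0 else 0
        hist[by * bins_x + bx] += 1
    return [h / len(points) for h in hist]


def nearest_neighbor(descriptor, train):
    """train is a list of (descriptor, label); return the label of the
    closest training descriptor under Euclidean distance."""
    return min(train, key=lambda item: math.dist(item[0], descriptor))[1]


def classify_video(points, train, max_shift=20.0):
    """Label each segment with 1-NN, then return the most frequent label."""
    votes = [nearest_neighbor(hop_descriptor(seg), train)
             for seg in segment_by_position(points, max_shift)]
    return Counter(votes).most_common(1)[0][0]
```

A nearest-neighbor rule is used here only because it needs no external library; an SVM could replace `nearest_neighbor` without changing the segment-then-vote structure described in the text.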

[0073] Specifically, the method includes the following steps:

[0074] S1 For each video sequence in the video data set, extract points of inter...
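The interest-point detector used in step S1 is cut off in this excerpt. Purely as a stand-in, and not the patent's detector, the sketch below uses simple frame differencing on grayscale frames (given as nested lists of pixel values) and returns interest points as `(x, y, t)` tuples, an assumed representation. The function name `extract_interest_points` and its `threshold` parameter are hypothetical.

```python
def extract_interest_points(frames, threshold=30):
    """STAND-IN detector (frame differencing), not the method's own detector:
    pixel (x, y) in frame t is reported as an interest point when its
    grayscale value differs from frame t-1 by more than threshold."""
    points = []
    for t in range(1, len(frames)):
        prev, cur = frames[t - 1], frames[t]
        for y, (row_prev, row_cur) in enumerate(zip(prev, cur)):
            for x, (a, b) in enumerate(zip(row_prev, row_cur)):
                if abs(b - a) > threshold:
                    points.append((x, y, t))
    return points


# Usage: a 2x2 video where only the top-right pixel changes between frames.
frames = [[[0, 0], [0, 0]], [[0, 100], [0, 0]]]
print(extract_interest_points(frames))  # [(1, 0, 1)]
```

Practical systems generally use dedicated spatio-temporal interest-point detectors; frame differencing is shown only because it is short and dependency-free.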

