Unsupervised temporal segmentation method for behavioral video

An unsupervised video-sequence technology, applied in image analysis, image enhancement, instruments, etc., that addresses the heavy manpower and material cost and the low timeliness of video monitoring and screening.



Examples

Embodiment 1

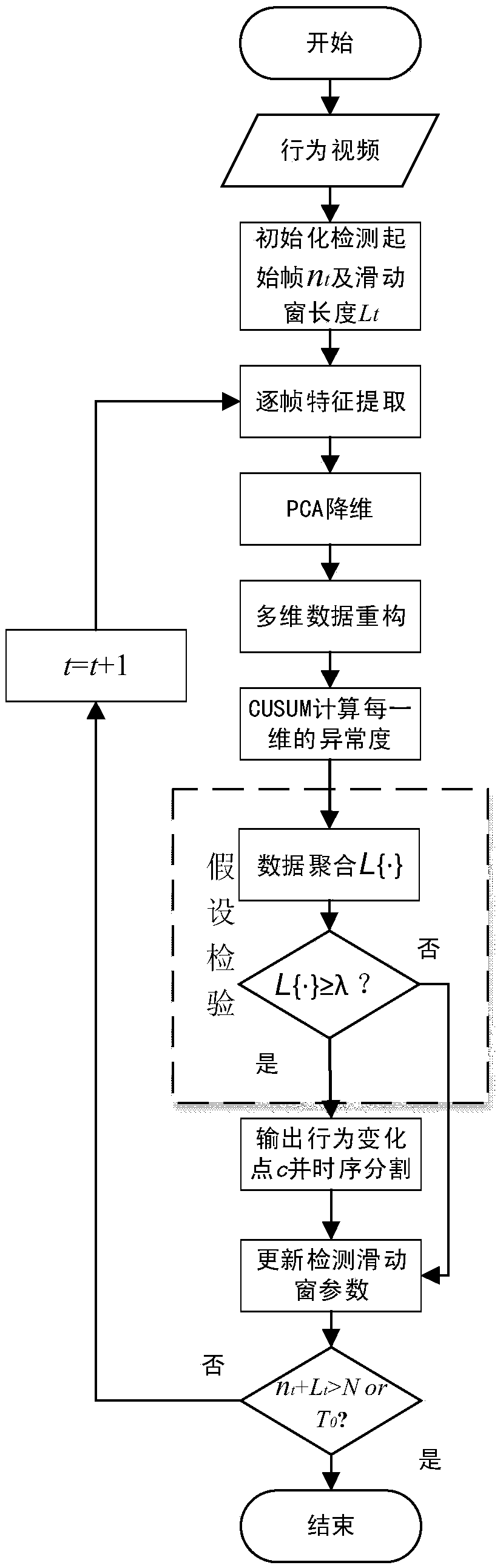

[0052] A method for unsupervised temporal segmentation of behavioral video, based on a sliding-window model of the behavioral video, comprising:

[0053] (1-1) Initialize the start frame of video detection $n_t$ and the corresponding sliding-window frame length $L_t$;

[0054] (1-2) Detect behavior change points within the established video-sequence window;

[0055] (1-3) If a behavior change point $c$ is detected in the video-sequence window, take the time point $c$ as the new detection start frame and re-initialize the sliding-window frame length to continue detecting the subsequent video; otherwise, if no behavior change point is detected in the window, keep the initialized $n_t$ as the detection start frame, i.e. $n_{t+1} = n_t$, while the sliding-window frame length is updated to $L_{t+1} = L_t + \Delta L$, where $\Delta L$ is the increment step of the sliding-window length;

[0056] (1-4) The entire detection process i...
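The window-update rule in steps (1-1) to (1-3) can be sketched as a pure state transition. This is a minimal sketch under stated assumptions: the function name `update_window` and its parameters are hypothetical, and resetting the length to the initial value `L_init` on detection is an assumption here (this embodiment only says the length is re-initialized).

```python
from typing import Optional, Tuple


def update_window(n_t: int, L_t: int, change_point: Optional[int],
                  L_init: int, delta_L: int) -> Tuple[int, int]:
    """Return (n_{t+1}, L_{t+1}) for the incremental sliding window.

    change_point: frame c detected inside the window (n_t, n_t + L_t), or None.
    L_init: window length used on re-initialization (assumed initial length).
    delta_L: increment step of the window length when nothing is detected.
    """
    if change_point is not None:
        if not (n_t < change_point < n_t + L_t):
            raise ValueError("change point must lie inside the current window")
        # Change point found: restart detection at c with the initial length.
        return change_point, L_init
    # No change point: keep the start frame and grow the window by delta_L.
    return n_t, L_t + delta_L


print(update_window(0, 100, None, 100, 10))  # → (0, 110)
print(update_window(0, 110, 73, 100, 10))    # → (73, 100)
```

Keeping the update as a pure function makes the two branches of step (1-3) easy to test in isolation from whatever change-point detector is used in step (1-2).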

Embodiment 2

[0063] The unsupervised temporal segmentation method for behavioral video described in Embodiment 1, differing in that the sliding-window model of the behavioral video is established through the following steps:

[0064] Step (1-1):

[0065] Initialize the start frame of video detection $n_t = n_1$ and the frame length of the corresponding sliding window $L_t = L_1$, where $L_1$ is set to $2L_0$ and $L_0$ is the minimum length of a single behavior type in the video, set to 50 in this application;

[0066] Step (1-2)

[0067] Perform behavior change point detection within the established video sequence sliding window;

[0068] Step (1-3)

[0069] If a behavior change point $c$ is detected in the video-sequence window, the time point $c$ is used as the start frame of subsequent detection and the sliding-window frame length is reset to $L_1$ to continue detecting the subsequent video, i.e. $n_{t+1} = c$, $L_{t+1} = L_1$; if no behavior change point is detected in the video sequence w...
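The following sketch drives the full detection loop with the settings of this embodiment ($L_0 = 50$, $L_1 = 2L_0 = 100$). It is illustrative only: `segment_video`, the callback `detect`, and the increment `delta_L = 10` are hypothetical (the visible text gives no value for $\Delta L$), and stopping once the window reaches the last frame without a change point is an assumption, since the termination rule is truncated. The stub detector simply reports known change points so the control flow can be traced.

```python
from typing import Callable, List, Optional

# Hypothetical detector signature: detect(start, end) returns a change point c
# with start < c < end, or None when the window contains no change point.
Detector = Callable[[int, int], Optional[int]]


def segment_video(num_frames: int, detect: Detector,
                  L0: int = 50, delta_L: int = 10) -> List[int]:
    """Incremental sliding-window segmentation with L_1 = 2 * L_0."""
    L1 = 2 * L0              # initial window length L_1 = 2 * L_0
    n_t, L_t = 0, L1         # start frame n_1 = 0 (0-based, assumed) and length L_1
    change_points: List[int] = []
    while True:
        end = min(n_t + L_t, num_frames)   # clip the window at the video end
        c = detect(n_t, end)
        if c is not None and n_t < c < end:
            change_points.append(c)
            n_t, L_t = c, L1               # n_{t+1} = c, L_{t+1} = L_1
        elif end == num_frames:
            break                          # assumed stop: end reached, no change
        else:
            L_t += delta_L                 # n_{t+1} = n_t, L_{t+1} = L_t + delta_L
    return change_points


# Stub detector for illustration: reports hypothetical ground-truth change points.
TRUE_CHANGE_POINTS = [130, 260]


def stub_detect(start: int, end: int) -> Optional[int]:
    for c in TRUE_CHANGE_POINTS:
        if start < c < end:
            return c
    return None


print(segment_video(400, stub_detect))  # → [130, 260]
```

Each detection restarts the window at $c$ with length $L_1$, so the start frame strictly increases; each miss grows the window by $\Delta L$ until it reaches the end of the video, which guarantees the loop terminates.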

Embodiment 3

[0073] The unsupervised temporal segmentation method for behavioral video described in Embodiment 2, differing in that the detection of behavior change points within the established video-sequence sliding window in step (1-2) comprises the following steps:

[0074] Using the incremental sliding window described above, temporal segmentation of different behaviors is achieved by detecting temporal change points in the video subsequence within each window;
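The per-window detection itself is defined in the steps of this embodiment, whose details are cut off here. As a stand-in only, the sketch below scores every candidate split of a window by the squared distance between the mean feature vectors before and after it. This is a simple mean-shift statistic swapped in for illustration, not the patent's detector; `detect_change_point`, `min_seg` (assumed equal to $L_0$) and `threshold` are hypothetical.

```python
from typing import Optional

import numpy as np


def detect_change_point(features: np.ndarray, start: int, end: int,
                        min_seg: int = 50,
                        threshold: float = 1.0) -> Optional[int]:
    """Stand-in detector: return the split c in (start, end) with the largest
    mean-shift score above threshold, or None.

    features has shape (N, D): one D-dimensional vector y(t) per frame.
    """
    window = features[start:end]
    best_c: Optional[int] = None
    best_score = threshold
    # Each candidate split keeps at least min_seg frames on both sides.
    for s in range(min_seg, len(window) - min_seg + 1):
        left_mean = window[:s].mean(axis=0)
        right_mean = window[s:].mean(axis=0)
        score = float(np.sum((left_mean - right_mean) ** 2))
        if score > best_score:
            best_c, best_score = start + s, score
    return best_c


# Toy 2-D features with an abrupt shift at frame 130.
feats = np.zeros((200, 2))
feats[130:] = 3.0
print(detect_change_point(feats, 0, 200))  # → 130
```

Requiring `min_seg` frames on each side means a window shorter than `2 * min_seg` never reports a change point, which matches the role of $L_1 = 2L_0$ as the smallest window worth testing.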

[0075] Step (2-1)

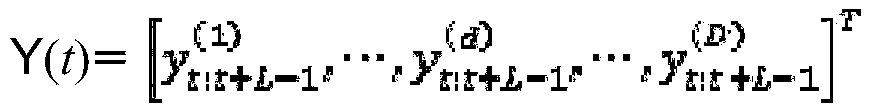

[0076] For a given video sequence $Y$, let $y(t)$ denote the feature vector of the $t$-th frame;

[0077] $Y$ is written as $Y = \{y(t)\}$, $t = 1, 2, \ldots, N$, where $N$ is the number of frames in the video and $D$ is the dimension of $y(t)$. Let $Y(t)$ denote a video subsequence of length $L$ in the behavioral video $Y$, starting at time $t$ and ending at time $t+L-1$, written as:

[0078] $Y(t) := [y(t)^T, y(t+1)^T, \ldots, y(t+L-1)$ ...
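The subsequence $Y(t)$ can be built by stacking consecutive frame features. This sketch assumes the truncated definition stacks the transposed frame vectors into a single column of dimension $LD$, i.e. $Y(t) = [y(t)^T, \ldots, y(t+L-1)^T]^T$; that reading and the helper name `subsequence` are assumptions.

```python
import numpy as np


def subsequence(Y: np.ndarray, t: int, L: int) -> np.ndarray:
    """Stack y(t), ..., y(t+L-1) from Y (shape (N, D)) into one vector of length L*D.

    t is 1-based, matching the text's convention t = 1, 2, ..., N.
    """
    N, D = Y.shape
    if t < 1 or t + L - 1 > N:
        raise ValueError("subsequence must lie within frames 1..N")
    # Row-major flattening puts the D entries of y(t) first, then y(t+1), ...
    return Y[t - 1 : t - 1 + L].reshape(L * D)


# Six frames of 2-D features: y(1) = [0, 1], y(2) = [2, 3], ...
Y = np.arange(12).reshape(6, 2)
print(subsequence(Y, 2, 3).tolist())  # → [2, 3, 4, 5, 6, 7]
```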

