Face tracking for additional modalities in spatial interaction

A spatial interaction and face tracking technology, applied to user/computer interaction input/output, mechanical mode conversion, computer components, and related fields.

Embodiment Construction

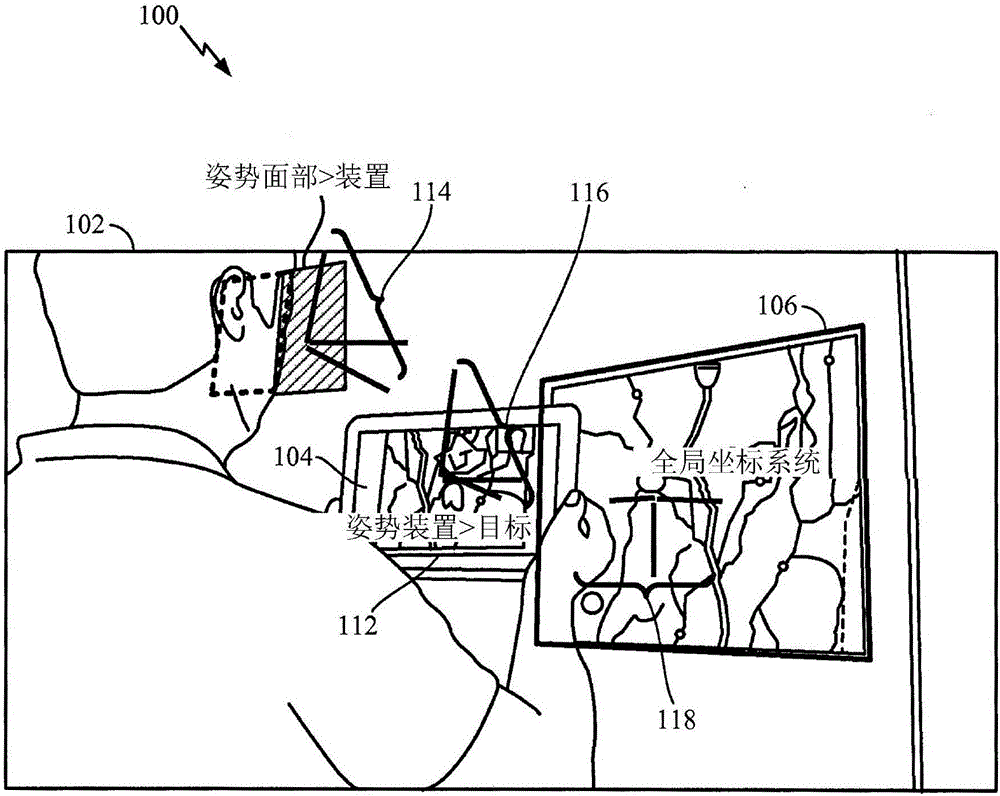

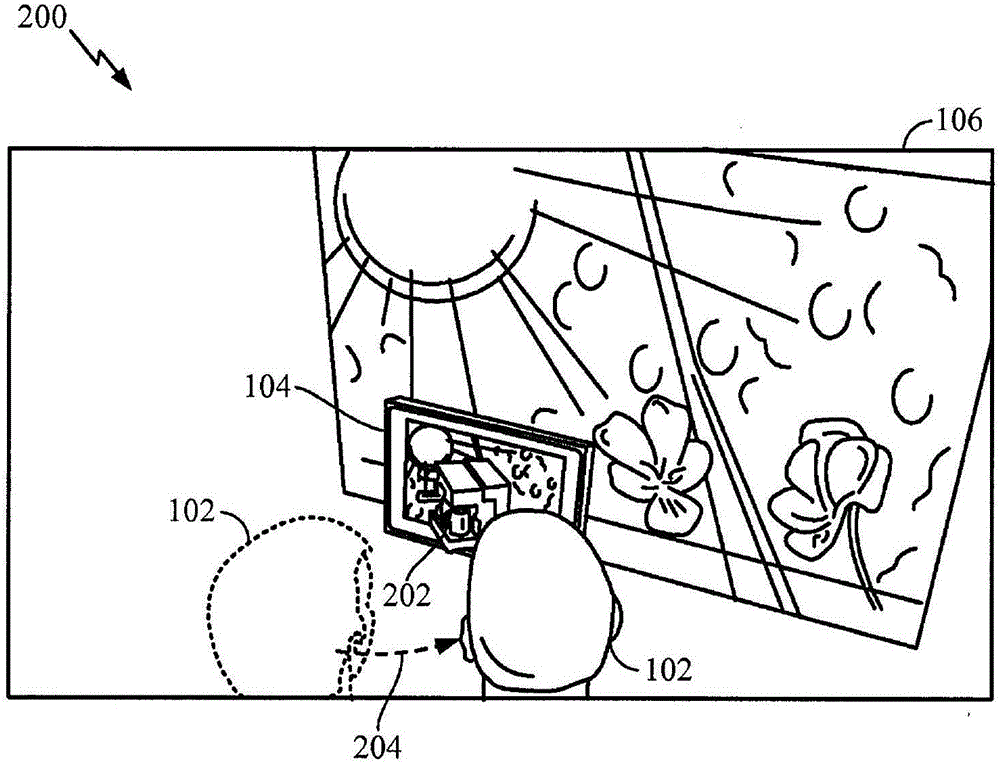

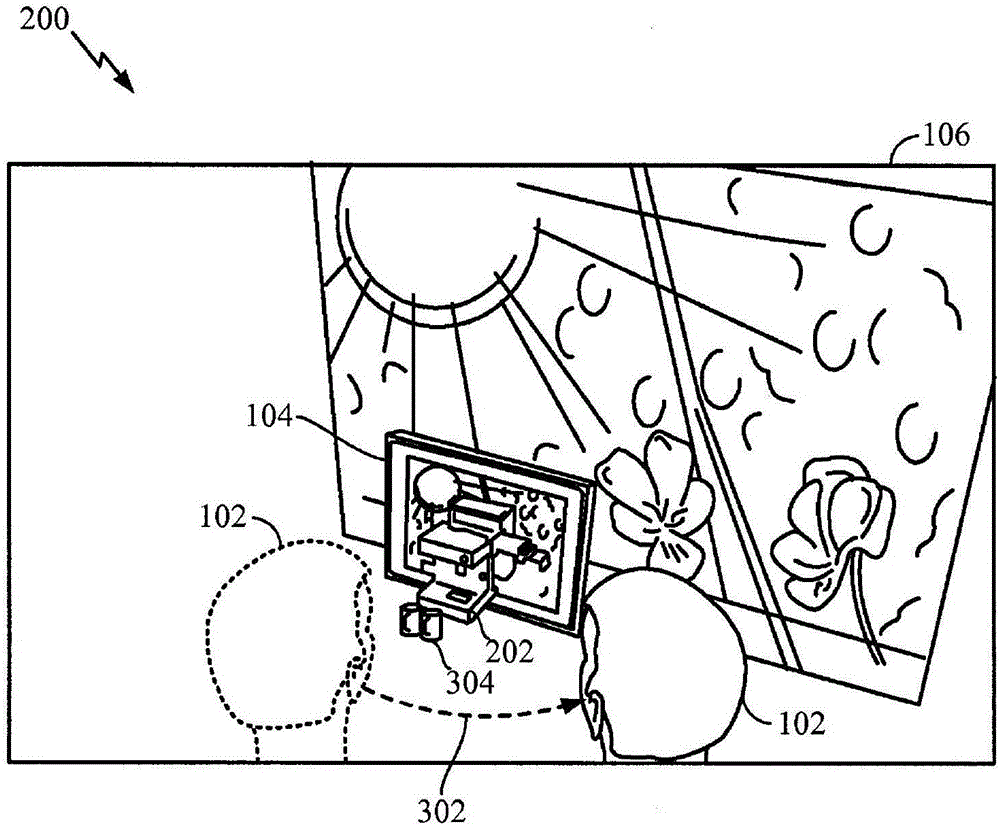

[0019] For example, the techniques described herein include mechanisms for interaction in an augmented reality environment between a screen side of a user device and a camera side of the user device. As used herein, the term "augmented reality" means any environment that combines real-world images with computer-generated data and superimposes graphics, audio, and other sensory input onto the real world.

[0020] Operating a user device in an augmented reality environment can be challenging because, among other operations, the user device should be spatially aligned toward the augmented reality scene. However, because the user holds the user device with both hands, input modalities for the user device are limited: on-screen menus, tabs, and widgets all need to be accessed using the user's hands.

[0021] In one aspect, a camera in a user device receives a constant image stream from a user side (or front) of the user device and an image stream from a target side (or rear) of...
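As a rough illustration of how a user-side image stream could supply a hands-free input modality, the following minimal sketch maps face movement between two front-camera frames to a command for the scene seen through the rear camera. Everything here is an assumption made for illustration and is not taken from the patent text: the `FaceObservation` type, the `face_to_command` function, the normalized image coordinates, the threshold value, and the command names are all hypothetical, and the face tracker that would produce the observations is not shown.

```python
from dataclasses import dataclass


@dataclass
class FaceObservation:
    """Hypothetical face-tracker output for one user-side (front) frame."""
    x: float  # horizontal face center in image coordinates, normalized to [0, 1]
    y: float  # vertical face center in image coordinates, normalized to [0, 1]


def face_to_command(prev: FaceObservation,
                    curr: FaceObservation,
                    threshold: float = 0.1) -> str:
    """Map face movement between two frames to a hypothetical command.

    Returns "none" when the face moved less than `threshold` on both axes,
    otherwise a pan command along the axis with the larger movement.
    """
    dx = curr.x - prev.x
    dy = curr.y - prev.y
    # Ignore small jitter so the scene does not react to every tiny motion.
    if abs(dx) < threshold and abs(dy) < threshold:
        return "none"
    # Pick the dominant axis of movement.
    if abs(dx) >= abs(dy):
        return "pan_right" if dx > 0 else "pan_left"
    return "pan_down" if dy > 0 else "pan_up"


if __name__ == "__main__":
    before = FaceObservation(x=0.5, y=0.5)
    after = FaceObservation(x=0.7, y=0.5)
    print(face_to_command(before, after))
```

In this sketch the user's hands never touch the screen: only the face position derived from the user-side stream changes, which is the kind of additional modality the title refers to. A real system would also have to decide how commands apply to the target-side view, which this example leaves open.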
