Method for an intelligent robot system to achieve real-time face tracking

This invention relates to intelligent robots and real-time tracking technology and is applied in the field of intelligent robots. It addresses the problems of complex computation and the difficulty of tracking a face continuously, and serves to simplify the tracking algorithm, improve the human-computer interaction experience, and reduce system cost.


Examples

Embodiment 1

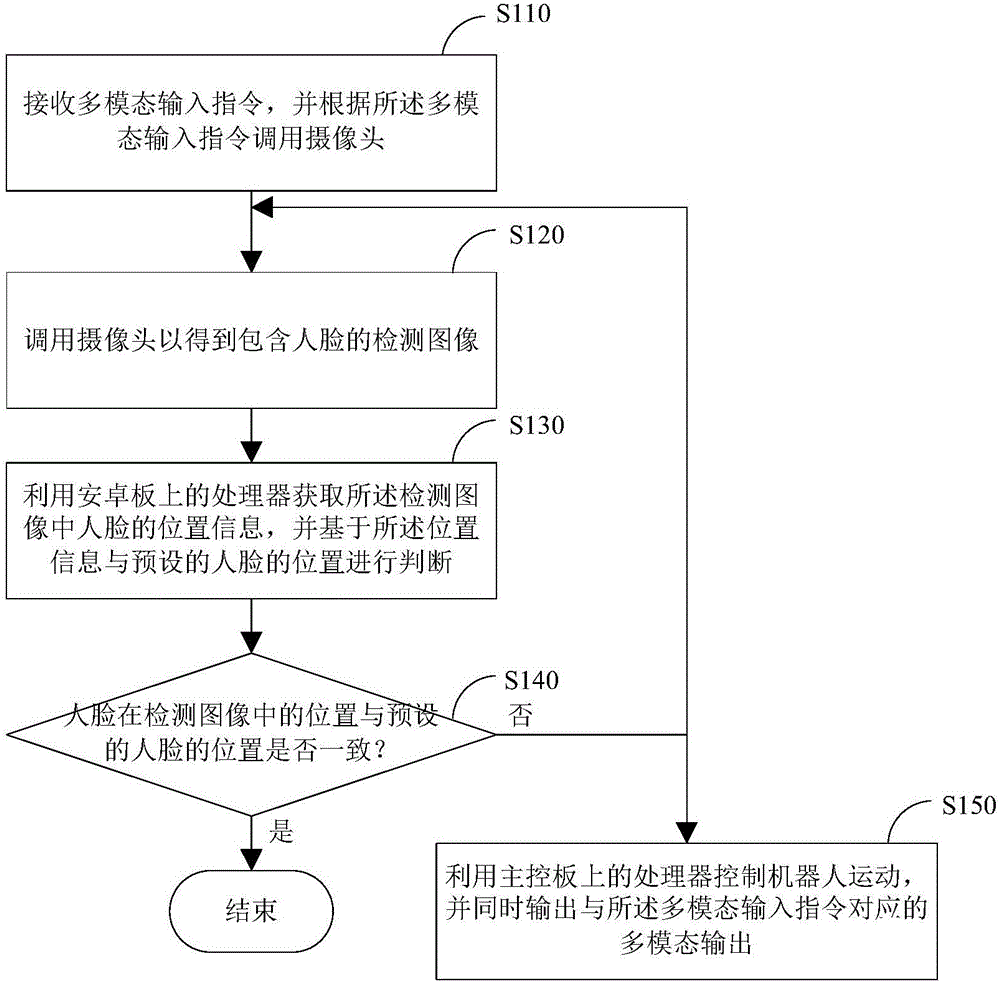

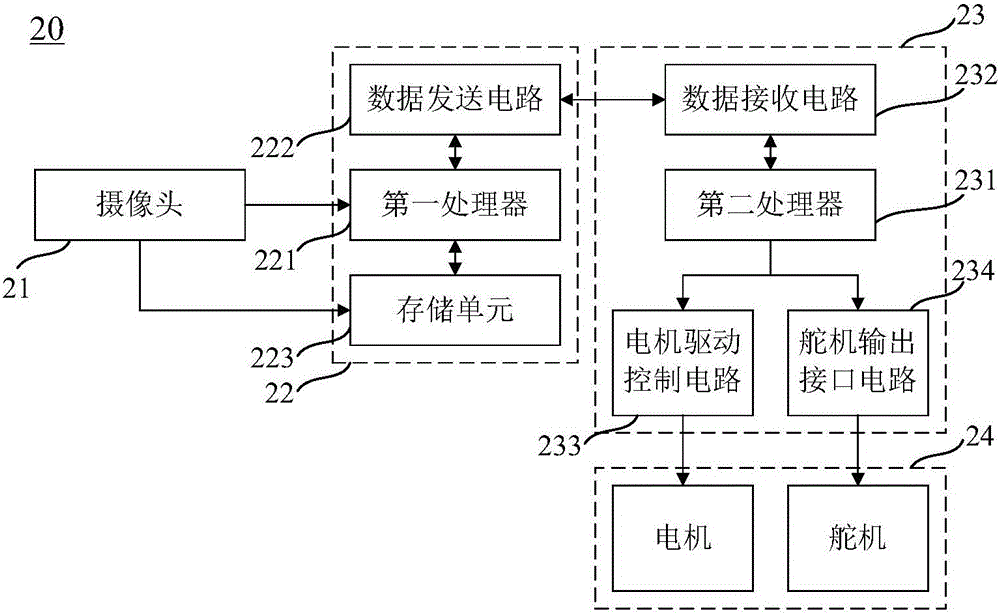

[0026] Figure 1 is a schematic flow diagram of a method for an intelligent robot system to track a human face in real time according to an embodiment of the present invention, and Figure 2 is a schematic structural diagram of an intelligent robot system capable of tracking human faces in real time according to an embodiment of the present invention. As can be seen from Figure 2, the intelligent robot system 20 mainly includes a camera 21, an Android board 22, a main control board 23 and an execution device 24.

[0027] As further shown in Figure 2, the Android board 22 is mainly provided with a first processor 221, together with a data transmission circuit 222 and a storage unit 223 connected to the first processor 221. The main control board 23 is mainly provided with a second processor 231, together with a data receiving circuit 232, a motor drive control circuit 233 and a steering gear (servo) output interface circuit 234 connected to the second processor 231. The camera 21 is co...
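Figure 2 pairs a data transmission circuit 222 on the Android board with a data receiving circuit 232 on the main control board, but the excerpt does not describe the link protocol between them. The following is a minimal sketch, assuming a hypothetical fixed-length frame that carries the tracked face's pixel offset (`dx`, `dy`) from the Android board to the main control board. The header bytes, field layout, checksum, and the names `encode_offset` and `decode_offset` are all illustrative assumptions, not the patent's format.

```python
import struct

# HYPOTHETICAL frame layout (an assumption, not taken from the patent):
#   2-byte header 0xAA 0x55 | int16 dx | int16 dy | 1-byte checksum
HEADER = b"\xAA\x55"
PAYLOAD_FMT = "<hh"  # little-endian signed 16-bit dx, dy


def checksum(payload: bytes) -> int:
    """Simple additive checksum over the payload bytes, modulo 256."""
    return sum(payload) & 0xFF


def encode_offset(dx: int, dy: int) -> bytes:
    """Android-board side: pack the face offset (pixels) into one frame."""
    payload = struct.pack(PAYLOAD_FMT, dx, dy)
    return HEADER + payload + bytes([checksum(payload)])


def decode_offset(frame: bytes) -> tuple[int, int]:
    """Main-control-board side: validate a frame and unpack the offset."""
    size = len(HEADER) + struct.calcsize(PAYLOAD_FMT) + 1
    if len(frame) != size or not frame.startswith(HEADER):
        raise ValueError("malformed frame")
    payload = frame[len(HEADER):-1]
    if checksum(payload) != frame[-1]:
        raise ValueError("checksum mismatch")
    dx, dy = struct.unpack(PAYLOAD_FMT, payload)
    return dx, dy
```

A round trip such as `decode_offset(encode_offset(-37, 12))` recovers `(-37, 12)`. Sending only a small offset rather than image data keeps the link between the two boards lightweight, which is one plausible reading of why detection runs on the Android board while motor and servo control stay on the main control board.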

Embodiment 2

[0042] Figure 4 is a schematic flowchart of a method for an intelligent robot system to track a human face in real time according to another embodiment of the present invention. Referring to Figures 2 and 4 together: first, the camera 21 is called according to the received multi-modal input instruction to obtain a detection image containing a human face, and the preprocessed image data is stored in the storage unit 223 arranged on the Android board 22. This step is the same as the corresponding step in Embodiment 1 and is not repeated here. The first processor 221 then reads the image data from the storage unit 223 and recognizes the face information in the detection image.
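The excerpt stops before explaining how recognized face information is turned into motion of the execution device. The following is a minimal sketch of one common approach, assuming the face is reported as a pixel bounding box and that a servo angle is nudged by simple proportional control toward the image centre. The `Box` type, gain, dead band, and 0 to 180 degree range are hypothetical choices for illustration, not the patent's tracking algorithm.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """HYPOTHETICAL face bounding box in pixels: top-left corner plus size."""
    x: int
    y: int
    w: int
    h: int


def face_offset(box: Box, frame_w: int, frame_h: int) -> tuple[float, float]:
    """Offset of the face centre from the image centre, in pixels.

    Positive dx means the face is right of centre; positive dy means below.
    """
    cx = box.x + box.w / 2
    cy = box.y + box.h / 2
    return cx - frame_w / 2, cy - frame_h / 2


def step_servo(angle: float, error_px: float, gain: float = 0.05,
               dead_band: float = 10.0, lo: float = 0.0,
               hi: float = 180.0) -> float:
    """One proportional-control update of a single servo angle (degrees).

    Errors inside the dead band are ignored so the head does not jitter;
    the result is clamped to the assumed mechanical range [lo, hi].
    """
    if abs(error_px) <= dead_band:
        return angle
    return min(hi, max(lo, angle + gain * error_px))
```

For a 640x480 image and a face box at `Box(400, 200, 80, 80)`, `face_offset` gives `(120.0, 0.0)`, and `step_servo(90.0, 120.0)` moves a horizontal servo to about 96 degrees. Whether a positive error should increase or decrease the angle depends on how the servo is mounted, so the sign of `gain` may need to be flipped.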

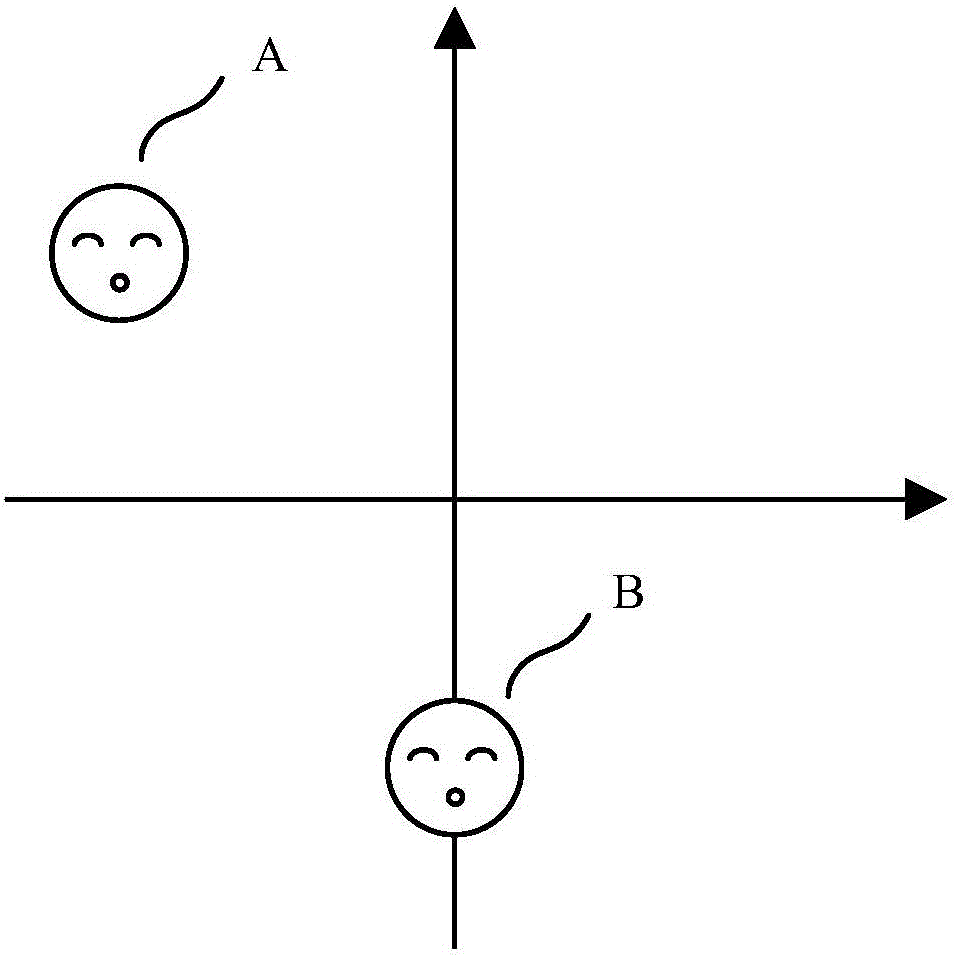

[0043] In practice, the detection image collected by the camera 21 may contain more than one face, so in this embodiment the number of faces contained in the detection image is determined first. If only one person is included in the detection image f...
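The paragraph above branches on the number of faces found, but the text is cut off before stating how a target is chosen when several faces are present. The following is a minimal sketch of that branching, assuming a hypothetical `Face` bounding-box type. The multi-face rule used here (track the largest box as a proxy for the nearest person) is an illustrative assumption, not the rule given in the patent.

```python
from typing import NamedTuple, Optional, Sequence


class Face(NamedTuple):
    """HYPOTHETICAL detected face bounding box in pixels."""
    x: int
    y: int
    w: int
    h: int


def select_target(faces: Sequence[Face]) -> Optional[Face]:
    """Choose which face to track in the current detection image.

    - no face: return None so the caller can hold its pose or search;
    - one face: track it directly;
    - several faces: ASSUMPTION, pick the largest box as a proxy for the
      person nearest the robot (the source text is truncated before it
      states its own selection rule).
    """
    if not faces:
        return None
    if len(faces) == 1:
        return faces[0]
    return max(faces, key=lambda f: f.w * f.h)
```

With three candidate boxes of areas 100, 1200 and 400 square pixels, `select_target` returns the 1200-pixel box. Any other policy, such as preferring the face closest to the image centre, could replace the `key` function without changing the surrounding branch structure.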
