Multidimensional user identity identification method

A user identity identification technology applied in the field of smart homes. It addresses problems such as identification relying on a single dimension, inability to handle complex scenarios, and ineffective control of user permissions.


Embodiment Construction

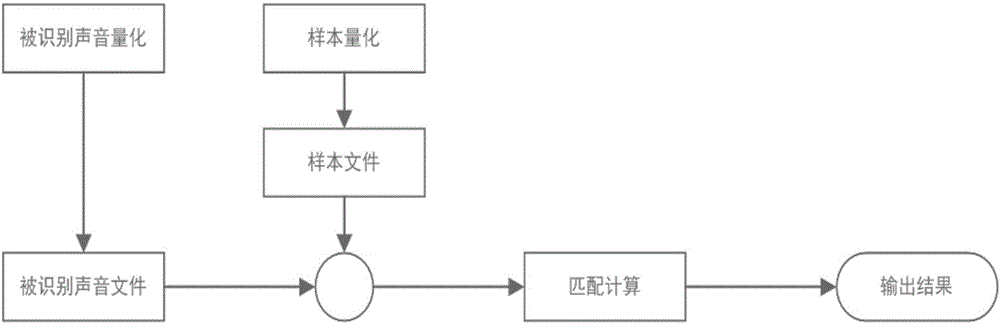

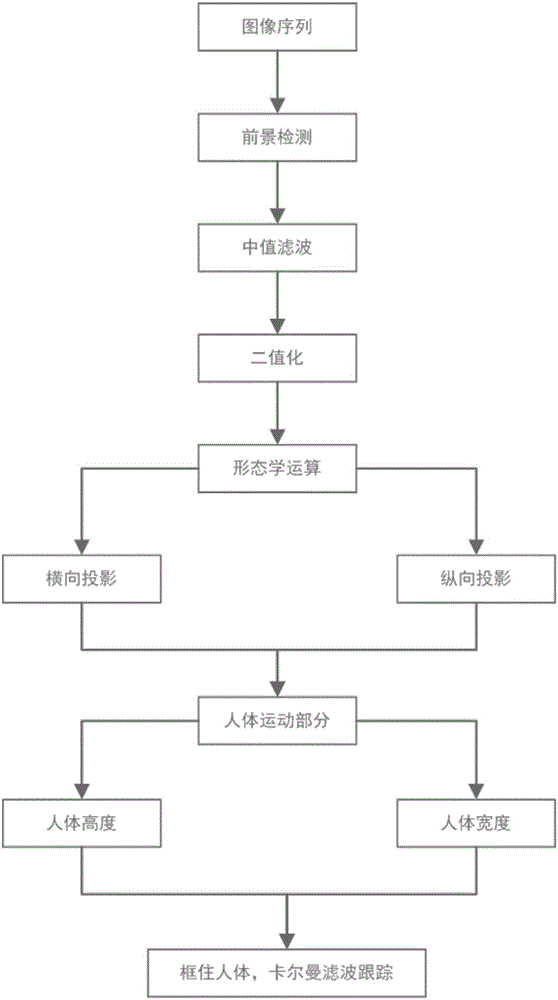

[0028] Each technical implementation process involved in the present invention is described below with reference to the accompanying drawings.

[0029] Face recognition: A user who needs to be granted permission first has a photo of their face taken by the camera, and this photo is stored in the sample library as a comparison sample. When an image of a user whose permission needs to be determined is captured, it is first convolved with multiple Gabor filters of different scales and orientations (each convolution result is called a Gabor feature map) to obtain a multi-resolution transformed image. Each Gabor feature map is then divided into several disjoint local spatial regions. For each region, the brightness variation pattern of the pixels in each local neighborhood is extracted, and a histogram of these variation patterns is computed over that region. The histograms of all regions of all Gabor feature maps are concatenated into a high-dimensional feature hi...
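The feature extraction in [0029] can be sketched roughly as follows. This is a minimal Python/NumPy sketch under stated assumptions, not the patent's implementation. The "brightness variation pattern of local neighborhood pixels" is assumed to be an 8-neighbor local binary pattern (LBP), and each Gabor feature map is assumed to be the magnitude of a complex Gabor response. The filter parameters (`scales`, `n_orient`, `ksize`, `sigma = 0.56 * lambd`) and the 4×4 region grid are illustrative choices. The visible text does not say how a probe feature is compared with the sample library, so the hypothetical `identify` helper uses histogram intersection purely as an example.

```python
import numpy as np


def gabor_kernel(ksize, sigma, theta, lambd, gamma=0.5):
    """Complex Gabor kernel of shape (ksize, ksize); ksize should be odd."""
    half = ksize // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(theta) + y * np.sin(theta)    # rotate coordinates to orientation theta
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r**2 + (gamma * y_r)**2) / (2.0 * sigma**2))
    carrier = np.exp(1j * 2.0 * np.pi * x_r / lambd)
    return envelope * carrier


def convolve_same(image, kernel):
    """Linear 2-D convolution via zero-padded FFT, cropped to the input size."""
    h, w = image.shape
    kh, kw = kernel.shape
    shape = (h + kh - 1, w + kw - 1)
    full = np.fft.ifft2(np.fft.fft2(image, shape) * np.fft.fft2(kernel, shape))
    top, left = kh // 2, kw // 2
    return full[top:top + h, left:left + w]


def lbp(image):
    """Assumed pattern operator: 8-neighbor LBP codes (0..255); border pixels dropped."""
    h, w = image.shape
    center = image[1:-1, 1:-1]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbor = image[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbor >= center).astype(np.int64) << bit
    return codes


def region_histograms(codes, grid=(4, 4)):
    """Split the code map into disjoint regions and concatenate their 256-bin histograms."""
    hists = []
    for row in np.array_split(codes, grid[0], axis=0):
        for block in np.array_split(row, grid[1], axis=1):
            hists.append(np.bincount(block.ravel(), minlength=256))
    return np.concatenate(hists)


def face_feature(image, scales=(4.0, 8.0), n_orient=4, ksize=15, grid=(4, 4)):
    """Gabor bank -> LBP per feature map -> regional histograms -> one long vector.
    All parameter defaults are illustrative assumptions."""
    image = np.asarray(image, dtype=float)
    feats = []
    for lambd in scales:
        for k in range(n_orient):
            kern = gabor_kernel(ksize, sigma=0.56 * lambd, theta=k * np.pi / n_orient, lambd=lambd)
            gabor_map = np.abs(convolve_same(image, kern))    # one Gabor feature map
            feats.append(region_histograms(lbp(gabor_map), grid))
    return np.concatenate(feats)


def identify(probe, library):
    """Hypothetical matcher: return the enrolled name with the largest histogram intersection."""
    f = face_feature(probe)
    scores = {name: np.minimum(f, feat).sum() for name, feat in library.items()}
    return max(scores, key=scores.get)


rng = np.random.default_rng(0)
photo_a, photo_b = rng.random((32, 32)), rng.random((32, 32))
library = {"alice": face_feature(photo_a), "bob": face_feature(photo_b)}   # enrollment
print(identify(photo_a, library))
```

With 2 scales, 4 orientations and a 4×4 grid, the feature has 2 · 4 · 16 · 256 = 32,768 bins, which shows why the concatenated vector is described as high-dimensional. Enrollment here simply stores `face_feature(photo)` per user in a dictionary standing in for the sample library.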
