Complex audio segmentation clustering method based on bottleneck feature

A technology combining audio segmentation, clustering, and bottleneck features, applied in speech analysis, speech recognition, special-purpose data processing, etc. It addresses the strong subjectivity, high cost, and low efficiency of manual labeling.


Embodiment

[0165] Figure 4 is a flowchart of an embodiment of the complex audio segmentation clustering method based on bottleneck features. The method mainly includes the following processes:

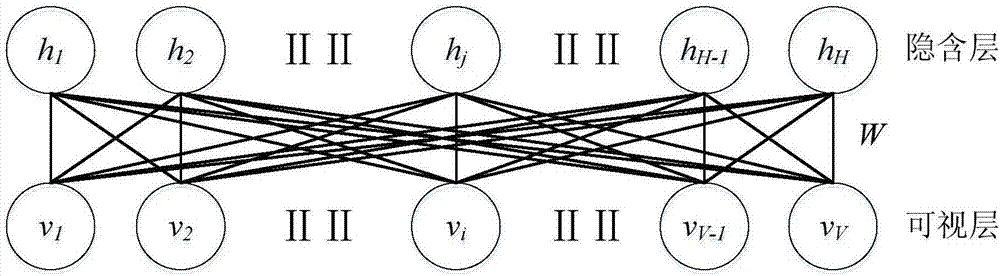

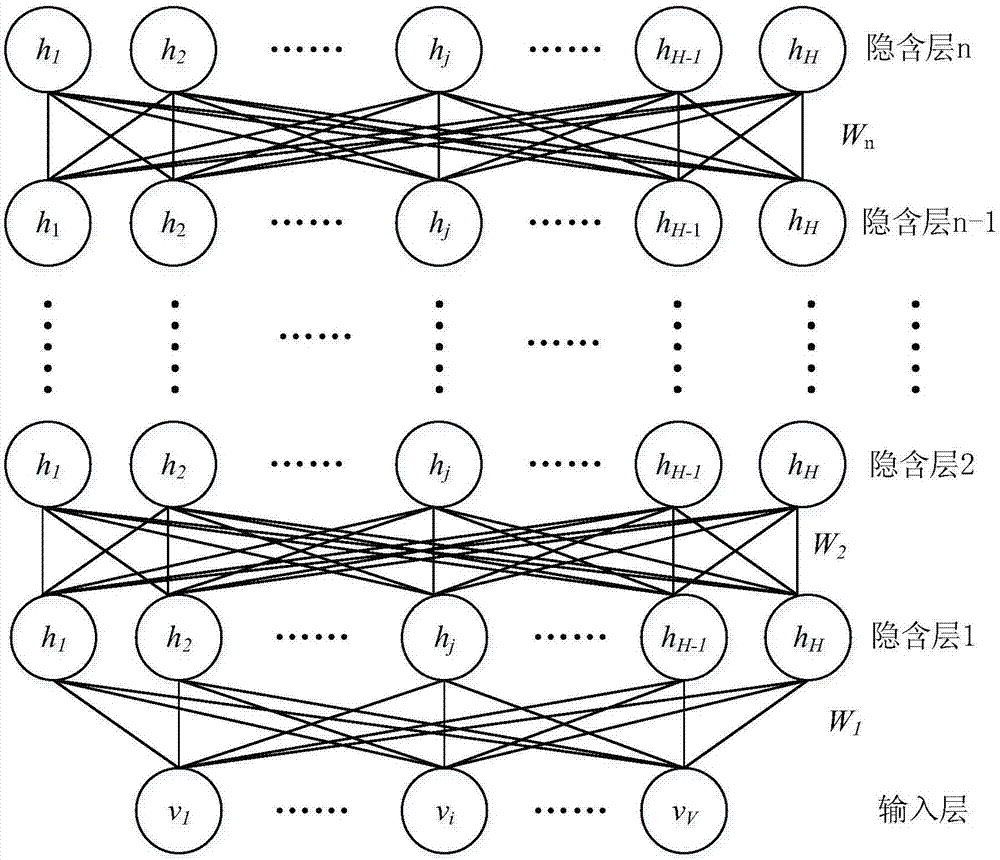

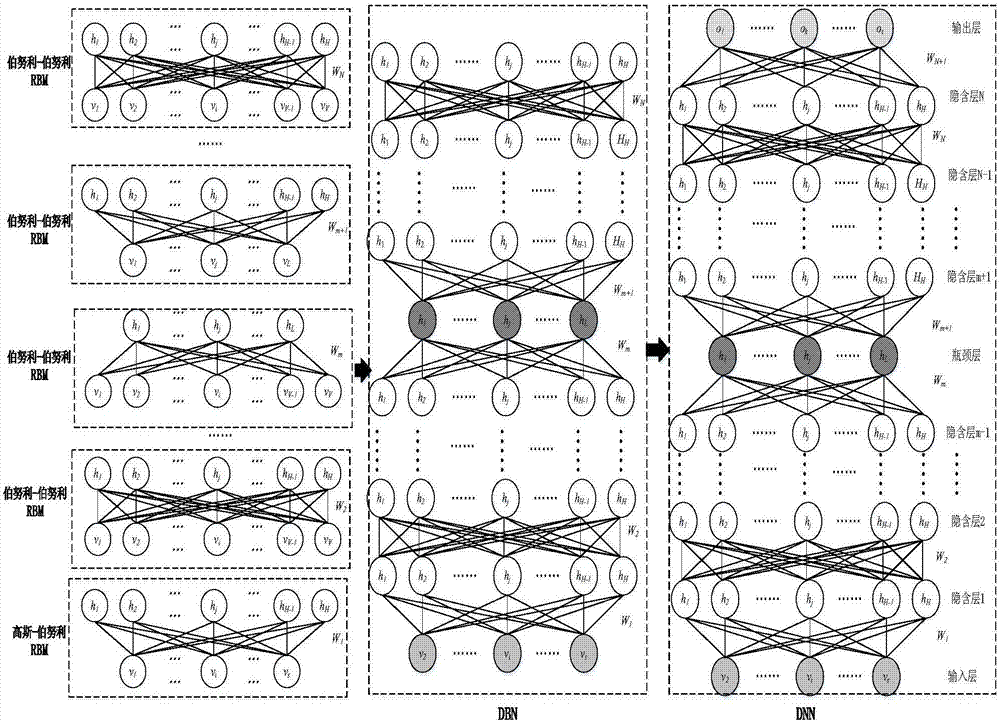

[0166] 1. Construction of a deep neural network with a bottleneck layer: read in the training data, extract MFCC features, and then train a DNN feature extractor with a bottleneck layer in two steps, unsupervised pre-training followed by supervised fine-tuning. The specific steps are:

[0167] S1.1. Read in the training data and extract Mel-frequency cepstral coefficient (MFCC) features. The specific steps are as follows:

[0168] S1.1.1, Pre-emphasis: set the transfer function of the digital filter to $H(z) = 1 - \alpha z^{-1}$, where $\alpha$ is a coefficient satisfying $0.9 \le \alpha \le 1$; the read-in audio stream is pre-emphasized by passing it through this digital filter;
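In the time domain, the filter $H(z) = 1 - \alpha z^{-1}$ corresponds to $y[n] = x[n] - \alpha x[n-1]$. The following is a minimal sketch of that difference equation in plain Python. The function name `pre_emphasize`, the default $\alpha = 0.97$ (a common choice inside the stated range, not a value given in the source), and the convention $y[0] = x[0]$ are illustrative assumptions.

```python
def pre_emphasize(samples, alpha=0.97):
    """Hypothetical helper: apply H(z) = 1 - alpha * z^-1 to a sequence of samples.

    Implements y[0] = x[0] and y[n] = x[n] - alpha * x[n-1] for n >= 1.
    The default alpha=0.97 is an assumed value within the range [0.9, 1].
    """
    if not 0.9 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0.9, 1.0]")
    if not samples:
        return []
    # The first sample has no predecessor, so it passes through unchanged (assumed convention).
    out = [float(samples[0])]
    for n in range(1, len(samples)):
        # Subtract a scaled copy of the previous sample to boost high frequencies.
        out.append(samples[n] - alpha * samples[n - 1])
    return out


# Usage: with alpha = 1.0 the filter reduces to a first difference.
print(pre_emphasize([2, 5, 3], alpha=1.0))
```

Because the filter is FIR with one tap of memory, it can be applied sample by sample to a streamed audio input without buffering more than the previous sample.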

[0169] S1.1.2, Framing: Set the frame length of the audio frame to 25 milliseconds, the frame shift to 10 milliseconds, and the number ...
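With a 25 ms frame length and a 10 ms frame shift, consecutive frames overlap by 15 ms. The sketch below shows how a sample sequence could be split into such frames. The 16 kHz sampling rate (giving 400-sample frames and a 160-sample shift), the function name `frame_signal`, and the choice to drop a trailing partial frame are all assumptions for illustration; the source text is truncated before it states how the number of frames is determined.

```python
def frame_signal(samples, sample_rate=16000, frame_ms=25, shift_ms=10):
    """Hypothetical helper: split samples into overlapping frames.

    Frame length and shift come from the 25 ms / 10 ms settings; the
    16 kHz sample rate is an assumed default. A trailing partial frame
    is discarded (assumption), so the frame count is
    1 + floor((N - frame_len) / shift) when N >= frame_len.
    """
    frame_len = sample_rate * frame_ms // 1000  # 400 samples at 16 kHz
    shift = sample_rate * shift_ms // 1000      # 160 samples at 16 kHz
    if len(samples) < frame_len:
        return []
    num_frames = 1 + (len(samples) - frame_len) // shift
    # Each frame starts `shift` samples after the previous one.
    return [samples[i * shift: i * shift + frame_len] for i in range(num_frames)]


# Usage: one second of audio at the assumed 16 kHz rate.
frames = frame_signal(list(range(16000)))
print(len(frames), len(frames[0]))
```

For one second of audio at 16 kHz this yields 1 + floor((16000 - 400) / 160) = 98 full frames of 400 samples each.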
