Binocular camera occlusion detection method and apparatus

A binocular camera occlusion detection technology, applied in image enhancement, image analysis, image communication, and related fields. It addresses missed detections, false detections, and low detection accuracy, and achieves real-time occlusion detection, effective resistance to interference, and improved detection accuracy.

Examples

Embodiment 1

[0042] In step S102, the dual-view images and the disparity map are scaled to the same target size at the same time, preferably 320*240 in this embodiment. For convenience of image computation, the scaled image may instead have any other size of fewer than 300,000 pixels. Those skilled in the art will appreciate that any other variation obtainable without creative effort falls within the scope of this embodiment.
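The scaling in step S102 can be sketched as follows. This is a minimal illustration, not the patented implementation: the function names `resize_nearest` and `scale_together`, the nearest-neighbour interpolation, and the list-of-lists image layout are all assumptions, since the source only states the target size and the 300,000-pixel limit. Disparity values are copied unchanged here; whether they must be rescaled depends on how the parallax lookup table is built, which the source does not say.

```python
def resize_nearest(image, target_w, target_h):
    """Nearest-neighbour resize of a row-major 2D list (assumed layout)."""
    src_h, src_w = len(image), len(image[0])
    return [
        [image[y * src_h // target_h][x * src_w // target_w]
         for x in range(target_w)]
        for y in range(target_h)
    ]


def scale_together(images, target_w=320, target_h=240, max_pixels=300_000):
    """Resize every input to one shared target size below the pixel budget."""
    if target_w * target_h >= max_pixels:
        raise ValueError("target size must stay below the pixel budget")
    return [resize_nearest(img, target_w, target_h) for img in images]


if __name__ == "__main__":
    # Synthetic 640*480 inputs standing in for the two views and the disparity map.
    left = [[(x + y) % 256 for x in range(640)] for y in range(480)]
    right = [[(x * 2 + y) % 256 for x in range(640)] for y in range(480)]
    disparity = [[x % 64 for x in range(640)] for y in range(480)]
    scaled = scale_together([left, right, disparity])
    print([(len(img[0]), len(img)) for img in scaled])
```

Resizing all three inputs in one call keeps the views and the disparity map pixel-aligned, which the later per-grid calculations rely on.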

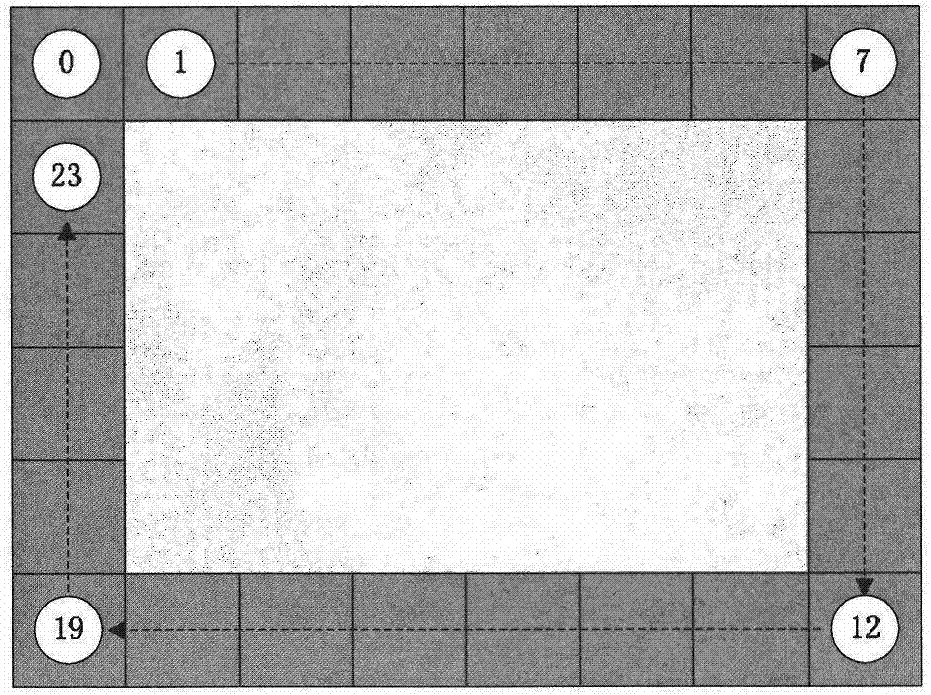

[0043] Further, in this embodiment, the pixel block size of the rectangular grid is preferably defined as 40*40, a total of 24 rectangular grids are marked along the image edge, and the average depth of the edge pixel blocks of the two images is calculated. Specifically, the depth mean is calculated as follows: first, starting from the first point pl in the left view, the right view is scanned to find the corresponding matching point pr; the H matrix is then used to map pl to pl', and pr-pl' is the parallax. Looking up the parallax in the parallax lookup table gives a depth value, and the depth value is filled i...
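The edge-grid layout and the per-block depth mean can be sketched as follows. This is an illustrative sketch under stated assumptions: it assumes a 320*240 image, so that 40*40 blocks form an 8-column by 6-row grid whose outer ring contains exactly 24 blocks, matching the count given above. It also assumes the depth map has already been filled, so the H-matrix mapping and the parallax lookup table are not modeled. The names `edge_blocks` and `block_mean_depth` are hypothetical.

```python
def edge_blocks(width=320, height=240, block=40):
    """Return (row, col) indices of the grid blocks on the image border."""
    cols, rows = width // block, height // block
    return [
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if r in (0, rows - 1) or c in (0, cols - 1)
    ]


def block_mean_depth(depth, r, c, block=40):
    """Average the depth values inside block (r, c) of a row-major depth map."""
    total = 0.0
    for y in range(r * block, (r + 1) * block):
        for x in range(c * block, (c + 1) * block):
            total += depth[y][x]
    return total / (block * block)


if __name__ == "__main__":
    # Synthetic depth map: depth grows with the row index.
    depth = [[float(y) for _ in range(320)] for y in range(240)]
    ring = edge_blocks()
    print(len(ring))
    print(block_mean_depth(depth, *ring[0]))
```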

Embodiment 2

[0060] In another preferred embodiment of the present invention, in steps S103 to S105, the same kind of feature information is calculated first for every rectangular grid pixel block; that is, the gray scale width, sharpness, color change rate, or depth mean of all rectangular grid pixel blocks can be evaluated uniformly first. Rectangular grid pixel blocks that do not meet the specified threshold range are marked as invalid, and no further feature information is calculated for them.

[0061] The calculation order in this embodiment is depth mean, gray scale width, sharpness, and color change rate. However, those skilled in the art know that this order can be changed and that protection is not limited to this order, as long as the same feature information is calculated first, and then the rectangular grid pixel blocks that meet the specified threshold range are sort...
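The feature-by-feature evaluation of this embodiment can be sketched as a cascade: one feature is computed for all remaining blocks, out-of-range blocks are marked invalid, and only the survivors reach the next feature. The sketch below is an assumption-laden illustration. The function name `cascade_filter`, the `(name, feature, low, high)` tuple layout, and all threshold values are hypothetical, and the feature functions simply read precomputed values rather than implementing the measurements themselves.

```python
def cascade_filter(blocks, checks):
    """Evaluate one feature at a time over all still-valid blocks.

    checks: ordered list of (name, feature_fn, low, high).
    Returns (valid_indices, invalid), where invalid maps a block index
    to the name of the first feature that fell outside its range.
    """
    valid = list(range(len(blocks)))
    invalid = {}
    for name, feature, low, high in checks:
        still_valid = []
        for i in valid:
            value = feature(blocks[i])
            if low <= value <= high:
                still_valid.append(i)
            else:
                invalid[i] = name  # no further features are computed for i
        valid = still_valid
    return valid, invalid


if __name__ == "__main__":
    # Hypothetical precomputed features; thresholds are placeholders only.
    blocks = [
        {"depth": 0.1, "gray_width": 120},
        {"depth": 2.0, "gray_width": 5},
        {"depth": 2.0, "gray_width": 120},
    ]
    checks = [
        ("depth_mean", lambda b: b["depth"], 0.5, 10.0),
        ("gray_scale_width", lambda b: b["gray_width"], 30, 255),
    ]
    print(cascade_filter(blocks, checks))
```

Because the order of `checks` is just the order of the list, reordering the features as the paragraph above allows requires no change to the loop.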

Embodiment 3

[0063] This embodiment differs from Embodiment 1 in that the object of the invention is achieved by calculating different feature information. Algorithms for feature information that is the same as in Embodiment 1 are therefore not repeated in this embodiment.

[0064] In step S103 of this embodiment, at least four kinds of feature information need to be calculated, namely gray scale width, sharpness, skin color, and edge. In a preferred embodiment of the present invention, an average brightness value may also be included.

[0065] Specifically, in step S104, it is judged whether the gray scale width corresponding to the gray histogram of each grid is smaller than the first threshold, and the result is recorded in state array A[N]; it is judged whether the sharpness coefficient of each grid is less than the second threshold, and the result is recorded in state array B[N]; it is judged whether the area of the skin color region of each rectangular grid is greater than the fifth thres...
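The first two judgments of step S104 can be sketched as follows. The source does not define how the gray scale width or the sharpness coefficient is measured, so this sketch makes two explicit assumptions: the gray scale width is taken as the span between the lowest and highest gray levels present in a grid, and the sharpness coefficient is taken as the mean absolute horizontal gradient. The function names and threshold values are hypothetical, and the skin color check and any later checks are not covered.

```python
def gray_scale_width(grid):
    """Assumed definition: span of the occupied gray levels in the grid."""
    levels = [p for row in grid for p in row]
    return max(levels) - min(levels)


def sharpness_coefficient(grid):
    """Assumed definition: mean absolute difference of horizontal neighbours."""
    diffs = [
        abs(row[x + 1] - row[x])
        for row in grid
        for x in range(len(row) - 1)
    ]
    return sum(diffs) / len(diffs)


def state_arrays(grids, first_threshold, second_threshold):
    """Record 1 where a grid falls below the threshold, else 0."""
    a = [1 if gray_scale_width(g) < first_threshold else 0 for g in grids]
    b = [1 if sharpness_coefficient(g) < second_threshold else 0 for g in grids]
    return a, b


if __name__ == "__main__":
    flat = [[10] * 4 for _ in range(4)]          # uniform, texture-free grid
    textured = [[0, 255, 0, 255] for _ in range(4)]
    # Placeholder thresholds; the source does not give numeric values.
    print(state_arrays([flat, textured], first_threshold=30, second_threshold=5))
```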
