A multi-scale fully convolutional network, and a method and device for visual guidance

Fully convolutional network and multi-scale technology, applied in the field of visual guidance, addresses the problems of complex hardware, high power consumption, and difficulty of widespread adoption, and achieves a high detection effect at real-time detection speed.


Examples

Specific Embodiment 1

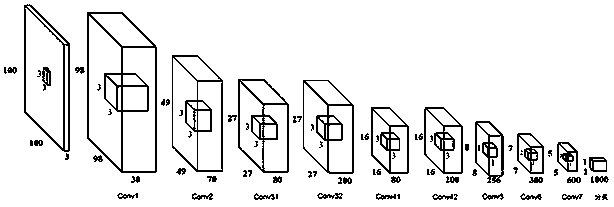

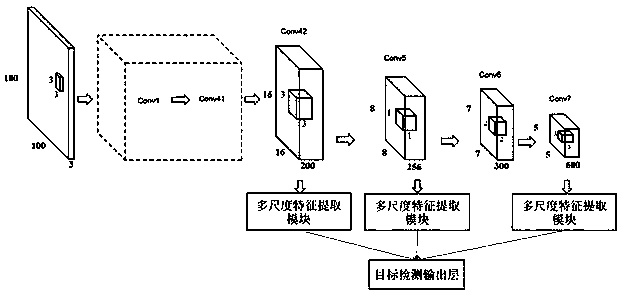

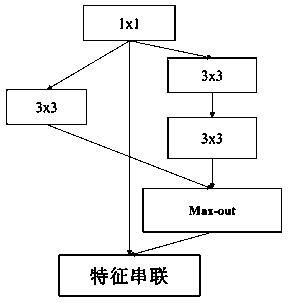

[0048] A multi-scale fully convolutional network includes a classification network and a detection network. The classification network extracts the features of the preset windows; the detection network scores and regresses the preset windows. Using a multi-channel parallel structure, the network performs feature fusion directly on the 1*1 convolution layer and splits the 5*5 convolution into two 3*3 convolution operations.

[0049] In this specific embodiment, the Inception network model has too many parameters to meet real-time requirements on an embedded platform. To reduce the number of model parameters, the design builds on the GoogLeNet Inception structure: feature fusion is performed directly on the 1*1 convolutional layer, and the 5*5 convolution is split into two 3*3 convolution operations, as shown in Figures 2 and 3. The invention reduces model parameters, deletes some redundant layers according to experi...
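The parameter saving from splitting a 5*5 convolution into two 3*3 convolutions can be checked with simple arithmetic. The sketch below is illustrative only: the channel count of 64, the bias-free layers, and the helper names `conv_params` and `stacked_receptive_field` are assumptions, not values or functions from the patent.

```python
from typing import Sequence


def conv_params(kernel: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """Learnable parameters in a kernel x kernel 2-D convolution."""
    weights = kernel * kernel * c_in * c_out
    return weights + (c_out if bias else 0)


def stacked_receptive_field(kernels: Sequence[int]) -> int:
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernels:
        field += k - 1
    return field


channels = 64  # hypothetical channel count, chosen only for illustration

single_5x5 = conv_params(5, channels, channels)      # one 5*5 layer
double_3x3 = 2 * conv_params(3, channels, channels)  # two stacked 3*3 layers

print(single_5x5, double_3x3, double_3x3 / single_5x5)
print(stacked_receptive_field([3, 3]))  # same coverage as a single 5*5 kernel
```

With equal input and output channels, the two 3*3 layers need 18/25 of the weights of the single 5*5 layer while covering the same 5*5 input region.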

Specific Embodiment 2

[0051] On the basis of Specific Embodiment 1, feature fusion is performed directly on the 1*1 convolutional layer as follows: first, a 1*1 convolution kernel adjusts the number of channels of the convolutional feature map; next, convolution kernels of different sizes extract convolutional features at different scales; finally, the features of the different channels are fused.
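The three steps above (1*1 channel adjustment, kernels of different sizes, fusion) can be sketched as channel bookkeeping for a multi-branch block. This is a minimal sketch under stated assumptions: fusion is taken to be channel-wise concatenation as in Inception-style modules, each branch uses stride 1 with "same" padding so spatial size is preserved, and the `Branch` class, the branch layout, and all channel counts are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Branch:
    reduce_channels: int       # channels after the 1*1 convolution
    kernels: Tuple[int, ...]   # kernel sizes applied after the 1*1 step
    out_channels: int          # channels produced by the last kernel


def branch_channels(branch: Branch) -> int:
    """Output channels of one branch; a branch with no extra kernels is 1*1 only."""
    for k in branch.kernels:
        if k % 2 == 0:
            # Assumption: 'same' padding of k // 2 only preserves size for odd k.
            raise ValueError("this sketch assumes odd kernel sizes")
    return branch.out_channels if branch.kernels else branch.reduce_channels


def fused_shape(branches: Sequence[Branch], height: int, width: int):
    """Shape (channels, height, width) after concatenating all branches."""
    return sum(branch_channels(b) for b in branches), height, width


# Hypothetical layout: a 1*1 branch, a 3*3 branch, and a branch with two
# stacked 3*3 kernels standing in for the 5*5 convolution.
branches = [
    Branch(reduce_channels=16, kernels=(), out_channels=16),
    Branch(reduce_channels=16, kernels=(3,), out_channels=32),
    Branch(reduce_channels=8, kernels=(3, 3), out_channels=16),
]
shape = fused_shape(branches, 25, 25)
print(shape)
```

Because every branch keeps the same height and width, the branch outputs can be stacked along the channel axis without resizing.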

Specific Embodiment 3

[0053] On the basis of Specific Embodiment 1 or 2, the classification network crops the input color image to 100*100 pixels and then connects two or more convolution modules. Each convolution module includes a convolution operation, a batch normalization operation, and a ReLU activation function with parameters. The classification network uses filters of size 3*3, 2*2, and 1*1 pixels with a stride of 1, and a max pooling operation is added after each designated convolution module. The pooling region is 2*2 pixels with a stride of 1. The image is classified using the features of the designated convolution module.
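The effect of these filter and pooling settings on feature-map size can be traced with the standard output-size formula. The sketch below assumes no padding and a hypothetical layer order; the patent text gives the kernel sizes and strides but not the exact sequence or count of modules, and the names `out_size` and `trace` are illustrative.

```python
def out_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Side length after a convolution or pooling window slides over the input."""
    if size + 2 * padding < kernel:
        raise ValueError("window is larger than the padded input")
    return (size + 2 * padding - kernel) // stride + 1


def trace(size: int, layers):
    """Return the side length after each (name, kernel, stride) layer in turn."""
    sizes = [size]
    for _name, kernel, stride in layers:
        size = out_size(size, kernel, stride)
        sizes.append(size)
    return sizes


# Hypothetical ordering of the stated 3*3, 2*2, and 1*1 filters (stride 1),
# with a 2*2, stride-1 max pooling after the first two modules.
layers = [
    ("conv 3*3", 3, 1),
    ("max pool 2*2", 2, 1),
    ("conv 2*2", 2, 1),
    ("max pool 2*2", 2, 1),
    ("conv 1*1", 1, 1),
]
sizes = trace(100, layers)
print(sizes)
```

Under these assumptions, a 2*2 pooling window with stride 1 shrinks each side by only one pixel rather than halving it, so the feature maps stay close to the 100*100 input size.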

[0054] In this specific embodiment, the entire classification network is shown in Figure 1. The present invention proposes a small network model that aims to reduce the computational complexity of the model and to satisfy the real-time requirement of the algorithm while ensuring the accurac...

